ETL with a Glue Python Shell Job: Load data from S3 to Redshift

Gaining valuable insights from data is a challenge. After collecting data, the next step is to extract, transform, and load (ETL) the data into an analytics platform like Amazon Redshift. Luckily, there is a platform to build ETL pipelines: AWS Glue.

In short, AWS Glue solves the following problems: a managed-infrastructure to run ETL jobs, a data catalog to organize data stored in data lakes, and crawlers to discover and categorize data.

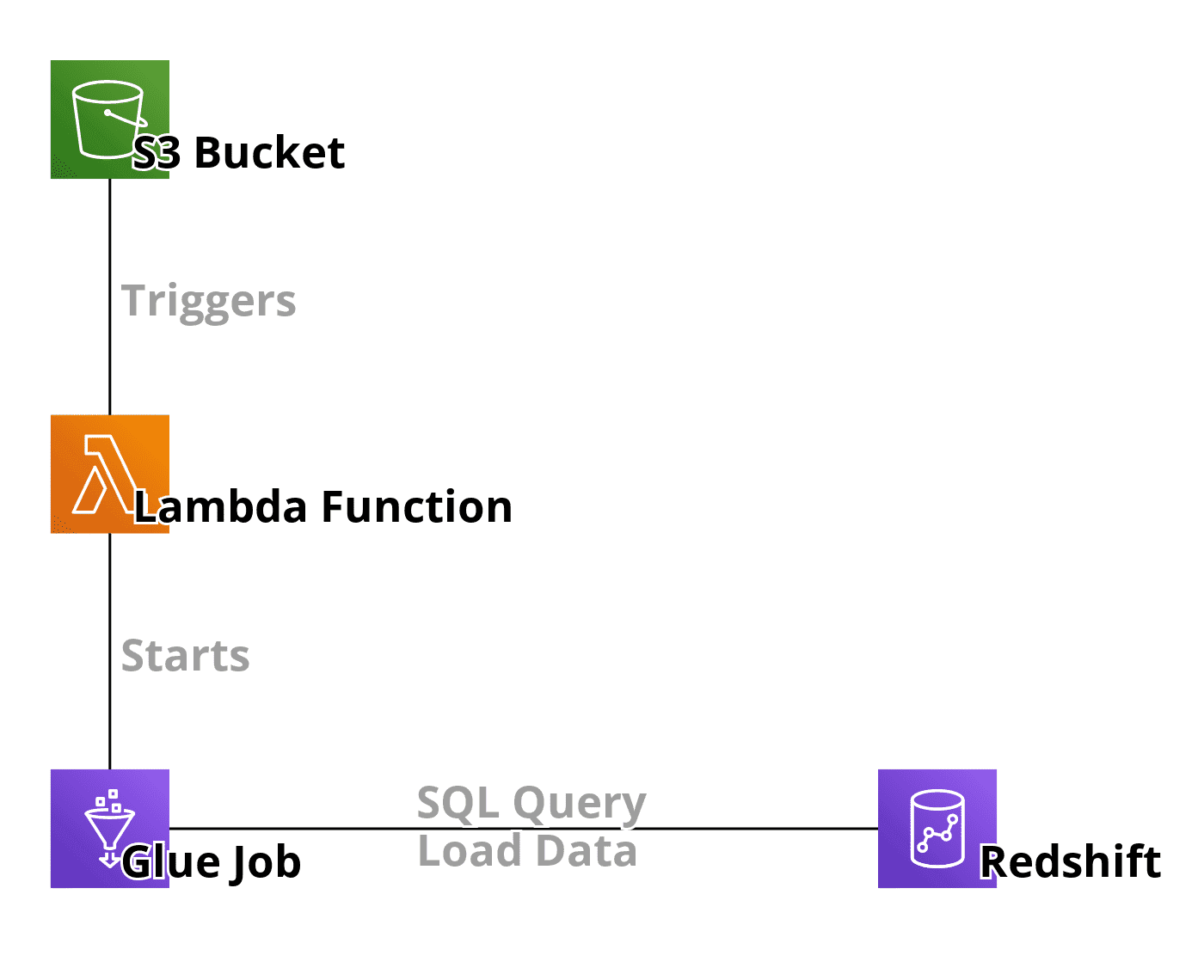

In the following, I would like to present a simple but exemplary ETL pipeline to load data from S3 to Redshift.

- Someone uploads data to S3.

- An S3 event triggers a Lambda function.

- The Lambda function starts a Glue job.

- The Glue job executes an SQL query to load the data from S3 to Redshift.

AWS Glue offers two different job types:

- Apache Spark

- Python Shell

An Apache Spark job allows you to do complex ETL tasks on vast amounts of data. However, the learning curve is quite steep. Luckily, there is an alternative: Python Shell. A Python Shell job is a perfect fit for ETL tasks with low to medium complexity and data volume.

Therefore, I recommend a Glue job of type Python Shell to load data from S3 to Redshift without or with minimal transformation.

All you need to configure a Glue job is a Python script. The code example executes the following steps:

importmodules that are bundled by AWS Glue by default.- Define some configuration parameters (e.g., the Redshift hostname

RS_HOST). - Read the S3 bucket and object from the arguments (see

getResolvedOptions) handed over when starting the job. - Establish a connection to Redshift:

connect(...). - Increase the statement timeout (see

statement_timeout) to one hour. - Execute the

COPYquery to tell Redshift to the object from S3.

from pgdb import connect |

To trigger the ETL pipeline each time someone uploads a new object to an S3 bucket, you need to configure the following resources:

- Create a Lambda function (Node.js) and use the code example from below to start the Glue job

LoadFromS3ToRedshift. - Attach an IAM role to the Lambda function, which grants access to

glue:StartJobRun. - Create a S3 Event Notification that invokes the Lambda function each time someone uploads an object to your S3 bucket.

The following example shows how to start a Glue job and pass the S3 bucket and object as arguments.

; |

There is only one thing left. You might want to set up monitoring for your simple ETL pipeline.

- Create an SNS topic and add your e-mail address as a subscriber.

- Create a CloudWatch Rule with the following event pattern and configure the SNS topic as a target.

{ |

By doing so, you will receive an e-mail whenever your Glue job fails.

Summary

AWS Glue offers tools for solving ETL challenges. A Glue Python Shell job is a perfect fit for ETL tasks with low to medium complexity and data volume. For example, loading data from S3 to Redshift can be accomplished with a Glue Python Shell job immediately after someone uploads data to S3.

And by the way: the whole solution is Serverless! No need to manage any EC2 instances.

Further reading

- Article Own your analytics data: Replacing Google Analytics with Amazon QuickSight

- Article Cleaning up an S3 bucket with the help of Athena

- Article How to avoid S3 data leaks?

- Tag s3

- Tag redshift

- Tag glue