What's the CO₂ footprint of your architecture?

Fighting climate change is one of the biggest challenges of our time. When designing an architecture, there are many important factors to consider: security, reliability, performance, and cost. I'd like to add another factor to that list: the CO₂ footprint.

This is a cross-post from the Cloudcraft blog.

Besides the fact that AWS aims to achieve 100% renewable energy for its data centers, there is also a lot that AWS customers can do to reduce energy consumption in the first place. Read on to learn how you can take part and minimize the CO₂ footprint of your AWS architecture.

Luckily, in the world of cloud computing, reducing the CO₂ footprint also reduces costs in most scenarios, because you pay for the resources you provision. That should make it easy for you to build a business case for a greener architecture.

Shut down unused resources

Shutting down unused resources has a significant impact on both the CO₂ footprint and costs. When building a greenfield application or migrating a legacy application, always consider whether there are times when you could shut down the whole application, for example, when an internal application is only used during business hours within a single time zone.

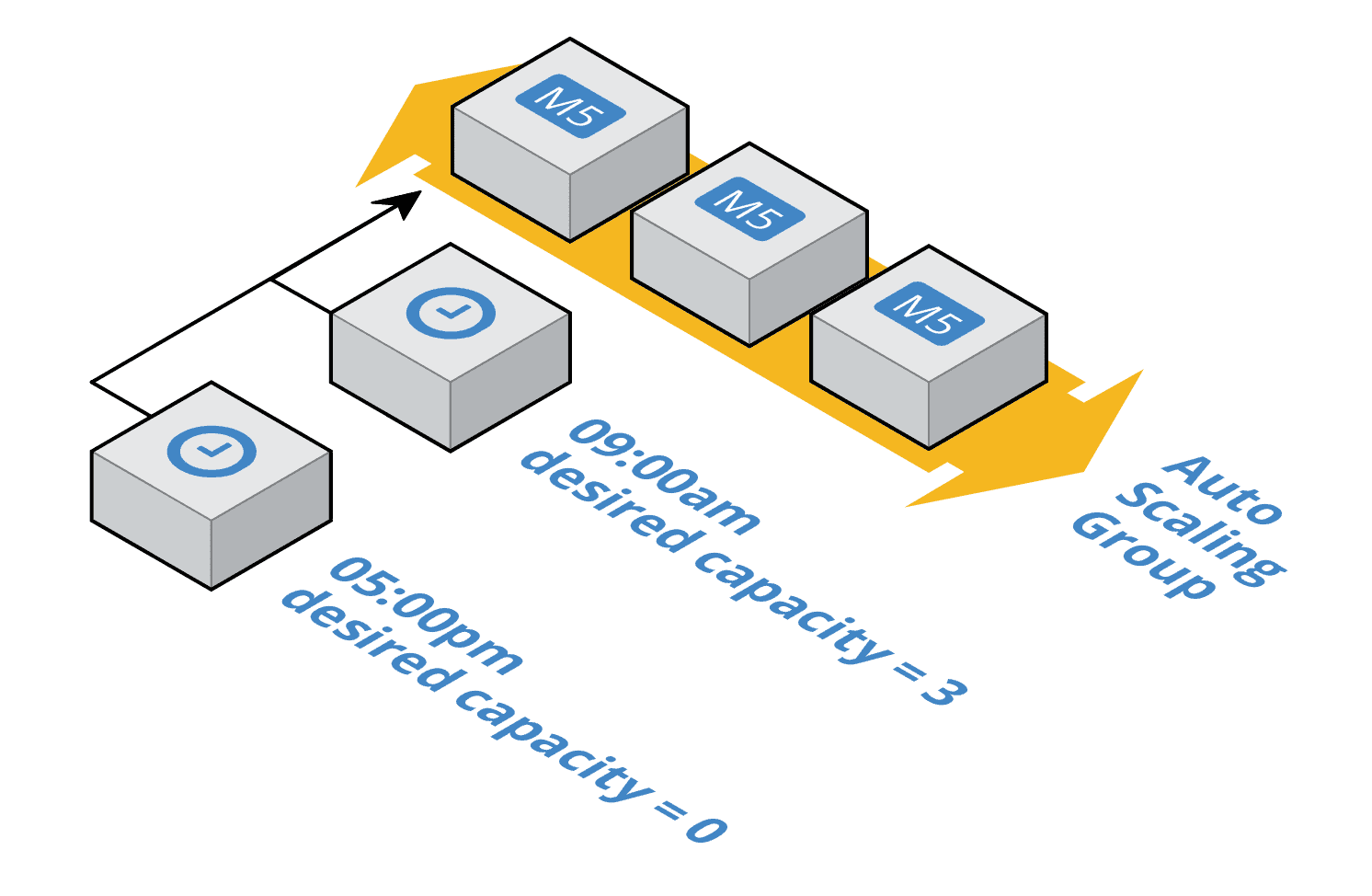

There are two simple approaches to shutting down unused resources automatically. When you use an Auto Scaling Group to orchestrate EC2 instances, you can define scheduled actions that increase and decrease the number of running instances. For example, you could set the desired capacity of the Auto Scaling Group to zero at 5:00 PM and back to three at 9:00 AM on each working day.
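As a minimal sketch of that schedule, the snippet below builds the two scheduled actions as keyword arguments for boto3's `put_scheduled_update_group_action` on the `autoscaling` client. The group name, the `Europe/Berlin` time zone, and the action names are hypothetical placeholders, and the sketch assumes the group's maximum size is at least the daytime capacity. It also does the arithmetic on how many instance-hours the schedule saves.

```python
"""Sketch: business-hours scheduled actions for an Auto Scaling Group.

Each dict holds keyword arguments for boto3's autoscaling client method
put_scheduled_update_group_action. Group name, time zone, and action
names are hypothetical placeholders.
"""


def business_hours_actions(group_name, daytime_capacity=3, time_zone="Europe/Berlin"):
    """Return a scale-in action (5:00 PM) and a scale-out action (9:00 AM), Mon-Fri."""
    scale_in = {
        "AutoScalingGroupName": group_name,
        "ScheduledActionName": f"{group_name}-evening-scale-in",
        "Recurrence": "0 17 * * 1-5",  # cron: minute hour day month weekday
        "MinSize": 0,
        "DesiredCapacity": 0,
        "TimeZone": time_zone,
    }
    scale_out = {
        "AutoScalingGroupName": group_name,
        "ScheduledActionName": f"{group_name}-morning-scale-out",
        "Recurrence": "0 9 * * 1-5",  # weekends stay at zero
        "MinSize": daytime_capacity,
        "DesiredCapacity": daytime_capacity,
        "TimeZone": time_zone,
    }
    return [scale_in, scale_out]


def apply_actions(actions, autoscaling_client):
    """Register the actions, e.g. with boto3.client("autoscaling")."""
    for action in actions:
        autoscaling_client.put_scheduled_update_group_action(**action)


def weekly_instance_hours(capacity, hours_per_day=24, days_per_week=7):
    """Instance-hours consumed per week at a constant capacity."""
    return capacity * hours_per_day * days_per_week


if __name__ == "__main__":
    always_on = weekly_instance_hours(3)           # 3 * 24 * 7 = 504
    office_hours = weekly_instance_hours(3, 8, 5)  # 3 * 8 * 5 = 120
    print(f"Instance-hours saved: {1 - office_hours / always_on:.0%}")  # 76%
```

Running three instances only during office hours uses 120 instead of 504 instance-hours per week. To register the actions, you would call something like `apply_actions(business_hours_actions("my-app-asg"), boto3.client("autoscaling"))`.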

The AWS Instance Scheduler, a solution maintained by AWS and built on Lambda and DynamoDB, is a good alternative for EC2 instances that are not managed by an Auto Scaling Group. It covers RDS database instances as well.
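The core idea behind such a tag-driven scheduler can be sketched as a single decision function: look up the schedule named in a resource's tag and decide whether the resource should be running right now. This is a simplified illustration, not the Instance Scheduler's actual code. The tag key `Schedule`, the `office-hours` schedule, and the in-memory `SCHEDULES` table (standing in for the configuration the real tool keeps in DynamoDB) are assumptions.

```python
from datetime import datetime, time

# Hypothetical schedule table, standing in for configuration stored in DynamoDB.
# Weekdays follow datetime.weekday(): 0 = Monday ... 6 = Sunday.
SCHEDULES = {
    "office-hours": {"weekdays": {0, 1, 2, 3, 4}, "begin": time(9, 0), "end": time(17, 0)},
}


def desired_state(tags, now, schedules=SCHEDULES, tag_key="Schedule"):
    """Return "running", "stopped", or None for resources without a known schedule."""
    schedule_name = tags.get(tag_key)
    if schedule_name not in schedules:
        return None  # leave untagged or unknown resources alone
    period = schedules[schedule_name]
    in_period = (
        now.weekday() in period["weekdays"]
        and period["begin"] <= now.time() < period["end"]
    )
    return "running" if in_period else "stopped"


if __name__ == "__main__":
    tags = {"Name": "intranet-app", "Schedule": "office-hours"}
    print(desired_state(tags, datetime(2021, 3, 1, 10, 0)))  # Monday 10:00 -> running
    print(desired_state(tags, datetime(2021, 3, 6, 10, 0)))  # Saturday 10:00 -> stopped
```

A periodic job (Lambda, in the real tool) would compare this desired state with each instance's actual state and start or stop it accordingly.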

Right size your resources

As a consultant, I often review cloud architectures. About 80% of the infrastructures I see waste resources by oversizing their cloud components. There are two reasons for that. First, we are not used to being able to increase or decrease capacity with the click of a button. Second, no one wants to risk performance degradation by picking a smaller size.

To reduce the CO₂ footprint of your cloud infrastructure, provision your cloud resources carefully. Here are a few tips:

- Start with small instance types for EC2 and RDS when setting up the infrastructure for a greenfield or lift & shift project. Increasing the instance size later is no big deal, whereas downsizing is a hard decision because it risks service degradation.

- Do not overprovision storage capacity when using EBS. You can increase the size of an EBS volume on the fly later, but you cannot shrink it.

- The same applies to provisioned I/O throughput for EBS, EFS, RDS, DynamoDB, and similar services: start with the smallest possible configuration and increase I/O throughput when you need it.

By the way, right-sizing your infrastructure not only reduces the CO₂ footprint but also reduces costs dramatically.

Reserved Capacity vs. Flexible Resources

When choosing the building blocks for your architecture, consider whether a service requires reserved capacity or offers flexible resources. By using services that do not require you to provision a specific capacity, you avoid idle resources.

A comparison of different options:

| Reserved Capacity | Flexible Resources |
|---|---|
| EBS requires you to provision the storage capacity upfront; the same is true for the I/O throughput. | S3 provides virtually unlimited storage and bills for the data actually stored plus per read/write request. |
| EC2 launches an instance with a predefined amount of CPU, memory, and network resources. As long as the instance runs, those resources are occupied, whether the virtual machine is idle or under high load. | Lambda spins up execution environments on demand. When no requests come in, Lambda does not occupy any compute resources. |
| RDS launches virtual machines under the hood. Those virtual machines run 24/7 to provide access to the data stored in the relational database. For spiky workloads, the database must be provisioned for the maximum throughput required, which wastes a lot of resources during periods of low demand. | DynamoDB provides unlimited storage that grows on demand. On top of that, with DynamoDB On-Demand you pay per request, which reduces idle resources to a minimum. |
| ECS/EKS with EC2 requires a fleet of EC2 instances to run your container workload, which typically wastes resources due to fragmentation. | ECS/EKS with Fargate launches containers on infrastructure managed by AWS, which allows AWS to reduce waste by optimizing the underlying infrastructure. |
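A little arithmetic shows why reserved capacity is wasteful for spiky workloads. The sketch below, assuming a hypothetical day with 20 quiet hours at 1 capacity unit and a 4-hour spike at 10 units, compares capacity provisioned for the peak around the clock with capacity that follows demand hour by hour, as pay-per-request services effectively do. The "capacity unit" is abstract and not tied to any specific AWS service.

```python
def idle_share(demand, capacity):
    """Fraction of provisioned capacity-hours that sit idle.

    demand and capacity are per-hour values in the same (abstract) unit.
    """
    provisioned = sum(capacity)
    used = sum(min(d, c) for d, c in zip(demand, capacity))
    return 1 - used / provisioned


if __name__ == "__main__":
    demand = [1] * 20 + [10] * 4            # quiet day with a 4-hour spike
    reserved = [max(demand)] * len(demand)  # provisioned for the peak, 24/7
    on_demand = demand                      # capacity follows requests
    print(f"Reserved capacity idle: {idle_share(demand, reserved):.0%}")  # 75%
    print(f"On-demand idle: {idle_share(demand, on_demand):.0%}")         # 0%
```

Provisioning for the peak means 240 capacity-hours for 60 hours of actual work. With boto3, for example, DynamoDB's on-demand mode corresponds to passing `BillingMode="PAY_PER_REQUEST"` to `create_table`.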

AWS regions with renewable energy

One of the first steps when designing an AWS architecture is choosing the AWS region. At the time of writing, AWS provides more than 20 regions worldwide, such as US East (N. Virginia), Asia Pacific (Tokyo), and Europe (Ireland).

Consider the following criteria when deciding which region fits your scenario best:

- Network latency: the region closest to the majority of your users usually offers the lowest latency.

- Legal requirements, such as data protection laws.

- Cost: pricing for most services differs between regions.

- Service availability: not all AWS services are available in all regions, and new services typically roll out to the regions in a certain order.

- Capacity: some regions are known for high stability and availability of resources (e.g., virtual machines with GPU cores).

To reduce the CO₂ footprint of your architecture, consider one more criterion: the energy behind a region. For the regions listed below, AWS states: "AWS purchases and retires environmental attributes, like Renewable Energy Credits and Guarantees of Origin, to cover the non-renewable energy we use in these regions." (Source: AWS & Sustainability)

- US West (Oregon)

- Europe (Frankfurt)

- Europe (Ireland)

- GovCloud (US-West)

- Canada (Central)

By running your workload in one of these regions, you can dramatically reduce the CO₂ footprint of your architecture.
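The region criteria can be combined in a small ranking helper. This sketch maps the regions listed above to their region codes, keeps only regions within a latency budget, and then prefers the renewable-covered ones. The latency measurements and the 50 ms budget are hypothetical inputs; legal, pricing, and service-availability checks would be additional filters.

```python
# Region codes for the regions listed above.
RENEWABLE_REGIONS = {
    "us-west-2",      # US West (Oregon)
    "eu-central-1",   # Europe (Frankfurt)
    "eu-west-1",      # Europe (Ireland)
    "us-gov-west-1",  # GovCloud (US-West)
    "ca-central-1",   # Canada (Central)
}


def rank_regions(latency_ms, max_latency_ms):
    """Order regions within the latency budget: renewable-covered first, then by latency.

    latency_ms maps region code -> measured latency to your users (hypothetical input).
    """
    eligible = {r: ms for r, ms in latency_ms.items() if ms <= max_latency_ms}
    return sorted(eligible, key=lambda r: (r not in RENEWABLE_REGIONS, eligible[r]))


if __name__ == "__main__":
    measured = {"eu-west-2": 15, "eu-central-1": 25, "eu-west-1": 30, "us-east-1": 90}
    print(rank_regions(measured, max_latency_ms=50))
    # ['eu-central-1', 'eu-west-1', 'eu-west-2']
```

Treating latency as a hard limit and the energy source as a preference is one possible design; you could just as well weight the criteria against each other.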

Summary

Keeping the CO₂ footprint to a minimum is your duty when designing an AWS architecture. Wasting cloud resources increases energy consumption as well as hardware usage. The good news is that you can kill two birds with one stone: reducing your CO₂ footprint also reduces costs.
