Lessons learned: Serverless Chatbot architecture for marbot

marbot forwards alerts from AWS to your DevOps team via Slack. marbot was one of the winners of the AWS Serverless Chatbot Competition in 2016. Today I want to show you how marbot works and what we have learned so far.

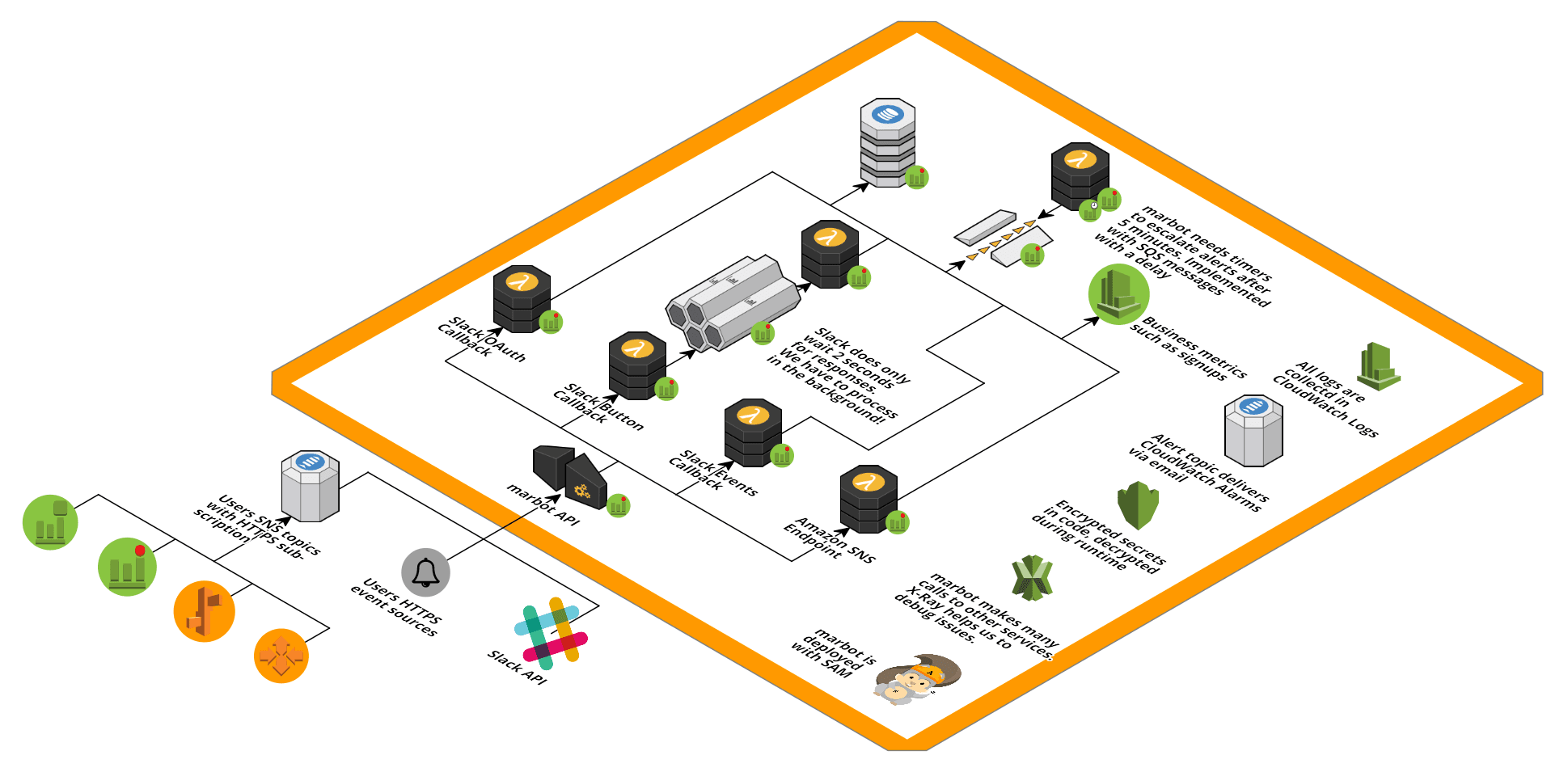

Let’s start with the architecture diagram.

The diagram was created with Cloudcraft.

Architecture

The marbot API is provided by an API Gateway. We get most of our requests from:

- Amazon SNS: Our users use SNS topics with an HTTPS subscription to transport alerts, notifications, and incidents from within their AWS accounts to marbot.

- Non-AWS sources that make HTTPS calls, like New Relic, Uptime Robot, or just `curl` clients.

- Slack: marbot uses the Slack Events API to drive conversations with his users and Slack Buttons to allow users to acknowledge, close, or pass alerts.

The API Gateway forwards HTTP requests to one of our Lambda functions. All of them are implemented in Node.js and store their state in DynamoDB tables.

One special case is the Slack Button API. When you press a button in a Slack message, marbot has 3 seconds to respond to this message. To respond to a button press, marbot may need to make a bunch of calls to the Slack API.

Learnings

Decoupling the process

By looking at our CloudWatch data, we learned that we missed the 3-second timeout very often. To avoid missing it, we now only put a record that contains all relevant data into a Kinesis stream before we respond to the API request. Writing to Kinesis is a quick operation, and we haven’t seen timeouts since we switched to Kinesis streams.
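The "enqueue first, respond immediately" step can be sketched as follows. This is a minimal illustration, not marbot's actual handler: `createButtonHandler`, the injected `putRecord` function, the `teamId` field, and the stream name are all assumptions made so the example runs without AWS credentials. Only the parameter names `StreamName`, `PartitionKey`, and `Data` follow the Kinesis PutRecord API.

```javascript
'use strict';

// Hypothetical factory. `putRecord` stands in for a Kinesis client call and is
// injected so the sketch can run locally with an in-memory fake.
function createButtonHandler(putRecord, streamName) {
  return async function handler(event) {
    // Store everything the worker needs later. No Slack API calls happen here.
    const record = {
      StreamName: streamName,
      PartitionKey: String(event.teamId), // assumed field: one key per Slack team
      Data: JSON.stringify({receivedAt: Date.now(), payload: event.body})
    };
    await putRecord(record);
    // Respond right away so that the slow work cannot cause a timeout.
    return {statusCode: 200, body: ''};
  };
}

// Usage with an in-memory stand-in for the stream:
const buffered = [];
const handler = createButtonHandler(async (record) => { buffered.push(record); }, 'example-stream');
handler({teamId: 'T123', body: '{"action":"ack"}'})
  .then((response) => console.log(response.statusCode, buffered.length));
```

The handler does a single quick write and returns. All calls to the Slack API move to the function that reads the stream.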

We read the Kinesis stream and process the records within a Lambda function as soon as possible. Kinesis comes with its own challenges. If you fail to process a record, the Lambda Kinesis integration will retry this record until it is deleted from the stream. None of the newer records will be processed until the failed record is deleted or you fix the bug!
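To make the blocking behavior concrete, here is a small sketch of a stream consumer. It assumes the standard shape of a Lambda Kinesis event, where each record carries its payload base64-encoded in `kinesis.data`. The names `decodeRecords`, `processBatch`, and `processOne` are hypothetical.

```javascript
'use strict';

// Decode the base64 payloads that Lambda delivers for a Kinesis batch.
function decodeRecords(event) {
  return event.Records.map((record) =>
    JSON.parse(Buffer.from(record.kinesis.data, 'base64').toString('utf8')));
}

// Process a batch in order. `processOne` is a hypothetical async function
// that handles a single decoded record.
async function processBatch(event, processOne) {
  for (const item of decodeRecords(event)) {
    // No try/catch on purpose: a throw fails the whole invocation, so the
    // same batch is delivered again before any newer record is read.
    await processOne(item);
  }
}
```

Because the loop stops at the first error, every record behind a failing one waits. That is the trade-off described above.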

We also thought about using SQS, but:

- there is no native SQS Lambda integration

- we cannot build one on our own that is serverless and responds within a second

So we decided to use Kinesis knowing that an error can stop our whole processing pipeline.

Resilient remote calls

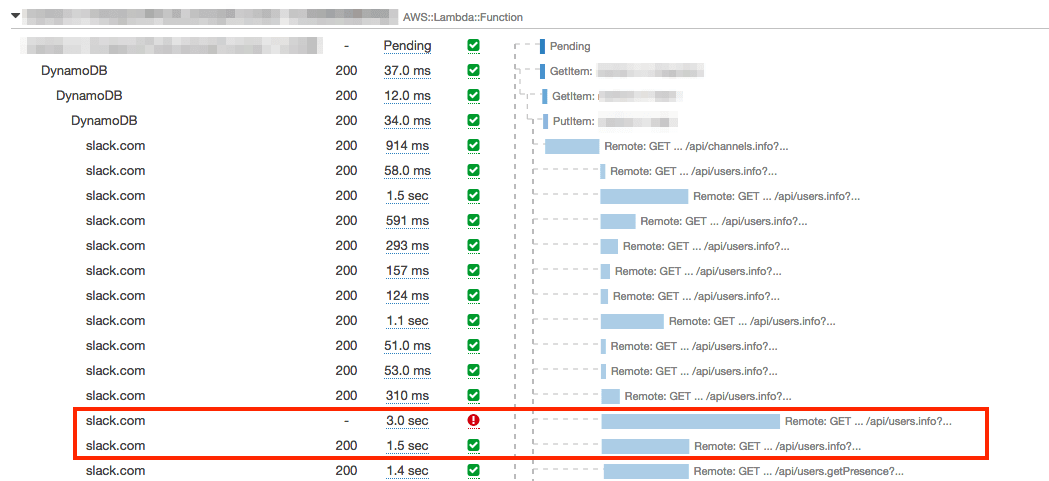

HTTP requests are hard. A lot of things can go wrong. Two things that we learned early when talking to the Slack API:

- Set timeouts: We use 3 seconds at the moment and are thinking about reducing this to 2 seconds.

- Retry on failures like timeouts or 5XX responses.

Our Node.js implementation of Slack API calls relies on the requestretry package:

    const requestretry = require('requestretry');
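The two rules (set a timeout, retry on timeouts and 5XX responses) can also be sketched without any library. The following is an illustration under assumptions, not the requestretry API: `withTimeout`, `callWithRetry`, and the `attempt` callback are hypothetical names, and the defaults are examples only.

```javascript
'use strict';

// Reject if `promise` does not settle within `ms` milliseconds.
// Note: this does not abort the underlying request, it only stops waiting.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((resolve, reject) => {
    timer = setTimeout(() => reject(new Error(`timeout after ${ms} ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Call `attempt` up to `maxAttempts` times. `attempt` is a hypothetical
// function that performs one HTTP call and resolves to {statusCode, body}.
async function callWithRetry(attempt, {maxAttempts = 3, timeoutMs = 3000, delayMs = 100} = {}) {
  let lastError;
  for (let i = 1; i <= maxAttempts; i++) {
    try {
      const response = await withTimeout(attempt(), timeoutMs);
      if (response.statusCode < 500) return response; // success or client error: no retry
      lastError = new Error(`HTTP ${response.statusCode}`);
    } catch (err) {
      lastError = err; // timeout or network error
    }
    if (i < maxAttempts) await new Promise((resolve) => setTimeout(resolve, delayMs));
  }
  throw lastError;
}
```

Retrying only makes sense for calls that are safe to repeat, so a real implementation would also need to consider which Slack API calls are idempotent.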

The screenshot shows an X-Ray trace where the code retried Slack API calls because of the 3-second timeout.

Implementing timers on AWS

For every alert that arrives in marbot, we keep a timer. Five minutes after the alert is received, we check if someone acknowledged the alert. If not, we escalate the alert to another engineer or the whole team. We have decided to use SQS queues for that. If you send a message to an SQS queue, you can set a delay. The message becomes visible in the queue only after the delay. Exactly what we need! The only downside to this solution is that there is no native way to connect Lambda and SQS. But with a few lines of code, you can implement this on your own.
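A minimal sketch of the timer idea follows. The parameter names `QueueUrl`, `DelaySeconds`, and `MessageBody` follow the SQS SendMessage API. The helper names, the message format, and the `acknowledged` field are assumptions for illustration.

```javascript
'use strict';

// Five minutes. SQS accepts a per-message delay of at most 900 seconds.
const ESCALATION_DELAY_SECONDS = 5 * 60;

// Build parameters in the shape expected by the SQS SendMessage API.
function buildTimerMessage(queueUrl, alertId) {
  return {
    QueueUrl: queueUrl,
    DelaySeconds: ESCALATION_DELAY_SECONDS,
    MessageBody: JSON.stringify({type: 'check-acknowledged', alertId})
  };
}

// Decide what to do once the delayed message becomes visible.
// `alert` is a hypothetical record loaded from the alert store.
function nextAction(alert) {
  return alert.acknowledged ? 'none' : 'escalate';
}

// Usage:
const params = buildTimerMessage('https://sqs.example.invalid/queue', 'alert-1');
console.log(params.DelaySeconds, nextAction({acknowledged: false}));
```

Timers longer than 15 minutes would need a different mechanism or a chain of messages, because of the SQS delay limit.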

Keeping secrets secure

We use git to version our source code. To communicate with the Slack API, we need to store a secret that we use to authenticate with Slack. We keep those secrets in a JSON file that is added to git as well. But before we put the file into git, we encrypt the whole file with KMS using the AWS CLI:

    aws kms encrypt --key-id XXX --plaintext fileb://config_plain.json --output text --query CiphertextBlob | base64 --decode > config.json

Make sure to put `config_plain.json` into your `.gitignore` file!

Outside of the Lambda handler code, we use this code snippet to decrypt the configuration:

    const fs = require('fs');

Inside the Lambda handler code, you can access the config like this:

    config

Using this approach, you will only make one API call to KMS (for every Lambda runtime).
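The "one KMS call per Lambda runtime" behavior comes from caching at module scope. The sketch below shows that caching pattern only. `createConfigLoader`, `readCiphertext`, `decrypt`, and the `slackToken` field are hypothetical, and the decryption itself is replaced by a stand-in so the example runs locally. Unlike the approach described above, this variant decrypts lazily on the first invocation.

```javascript
'use strict';

// `readCiphertext` returns the encrypted bytes (for example the contents of
// config.json). `decrypt` is a hypothetical async function that resolves to
// the plaintext bytes (for example a thin wrapper around KMS Decrypt).
function createConfigLoader(readCiphertext, decrypt) {
  let cached; // survives between invocations for as long as the runtime lives
  return function loadConfig() {
    if (cached === undefined) {
      cached = Promise.resolve()
        .then(() => decrypt(readCiphertext()))
        .then((plaintext) => JSON.parse(plaintext.toString('utf8')));
      // Forget a failed attempt so that the next invocation tries again.
      cached.catch(() => { cached = undefined; });
    }
    return cached;
  };
}

// Usage with stand-ins for the file read and the KMS call:
const loadConfig = createConfigLoader(
  () => Buffer.from('{"slackToken":"example"}'),
  async (bytes) => bytes
);

async function handler() {
  const config = await loadConfig();
  return {statusCode: 200, body: typeof config.slackToken};
}
```

Caching the promise instead of the value means that concurrent first calls share a single decrypt request.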

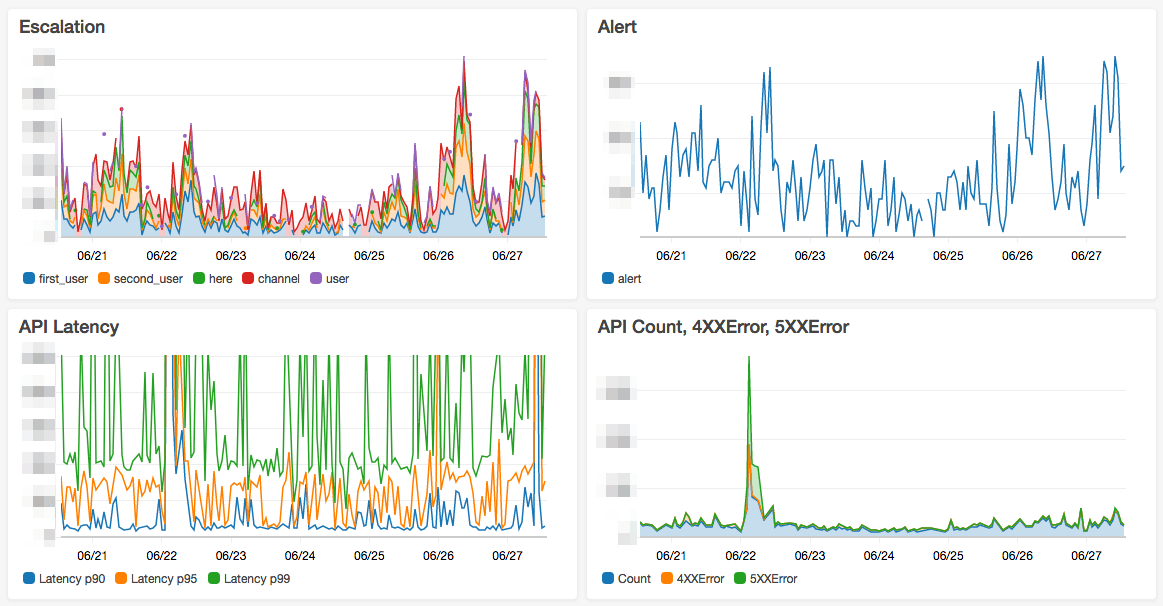

Getting insights

We use custom CloudWatch metrics to get insights into:

- How many Slack teams installed marbot

- Number of alerts and escalations created

We use a CloudWatch Dashboard to display those business metrics together with some technical metrics.
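As an illustration of what publishing such business metrics involves, the sketch below builds a request in the shape of the CloudWatch PutMetricData API (`Namespace`, `MetricData`, `MetricName`, `Value`, `Unit`, `Timestamp`). The function name, the namespace, and the metric names are assumptions and not marbot's real ones.

```javascript
'use strict';

// Turn a map of counters into PutMetricData parameters.
function buildMetricData(namespace, counts, now = new Date()) {
  return {
    Namespace: namespace,
    MetricData: Object.entries(counts).map(([name, value]) => ({
      MetricName: name,
      Value: value,
      Unit: 'Count',
      Timestamp: now
    }))
  };
}

// Usage with hypothetical metric names:
const metricParams = buildMetricData('example/chatbot', {AlertsCreated: 3, EscalationsCreated: 1});
console.log(metricParams.MetricData.map((m) => m.MetricName).join(', '));
```

The resulting object would be passed to a CloudWatch client. The metrics can then be added to a dashboard next to the technical ones.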

Deploying the infrastructure

Our pipeline for deploying marbot works like this:

- Download dependencies (`npm install`)

- Lint code

- Run unit tests (we mock all external HTTP calls with nock)

- `cloudformation package`

- `cloudformation deploy` to an integration stack

- Run integration tests with newman

- `cloudformation deploy` to a prod stack

Jenkins runs the pipeline. Since our code is hosted on BitBucket, we cannot easily use CodePipeline at the moment.