AWS Month in Review: July 2020

The world of AWS changes fast. This review summarizes the most important news from July 2020. The roundup does not include version updates, region expansion news, or minor changes. Instead, we focus on the announcements that matter. But not only that! Our monthly review includes our opinion and evaluation as well.

Performance boost for EFS

In theory, sharing a file system with multiple virtual machines, containers, or function invocations is a great thing. EFS is the go-to service in those scenarios (at least if your workload runs on a UNIX-based OS). However, I have struggled with high latencies when accessing files from EFS from time to time.

Therefore, the following announcement caught my eye: Amazon Elastic File System increases per-client throughput by 100%.

I need to run some performance tests on EFS shortly to find out more about the details.

WAF is growing up

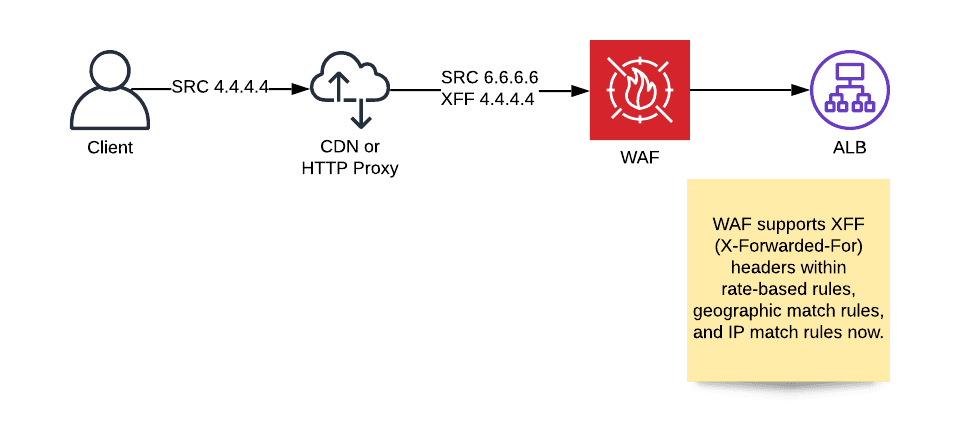

AWS WAF allows you to block or throttle incoming HTTP requests to your CloudFront distributions or Application Load Balancers (ALB). I ran into the problem that AWS WAF was not capable of inspecting the client's IP address when placed behind a third-party CDN or any other HTTP proxy. Unfortunately, there was no way to define a rule taking the original source IP address into account.

Luckily, support for the X-Forwarded-For (XFF) header is now available for AWS WAF.
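To give a rough idea of what this enables, the following sketch builds a rate-based rule statement that counts requests per address taken from the X-Forwarded-For header rather than per connecting proxy. The field names (`RateBasedStatement`, `AggregateKeyType`, `ForwardedIPConfig`, `FallbackBehavior`) follow the WAFv2 rule JSON as I understand it. The helper name and the limit are made up for illustration, so check the API reference before relying on it.

```python
import json


def rate_limit_by_forwarded_ip(limit, header_name="X-Forwarded-For"):
    """Build a WAFv2-style rate-based statement keyed on a forwarded IP header.

    Hypothetical helper: the structure mirrors the WAFv2 rule JSON, but the
    field names should be verified against the current API reference.
    """
    return {
        "RateBasedStatement": {
            "Limit": limit,
            # Aggregate by the address found in the header, not the proxy's IP.
            "AggregateKeyType": "FORWARDED_IP",
            "ForwardedIPConfig": {
                "HeaderName": header_name,
                # Do not apply the rule when the header is missing or invalid.
                "FallbackBehavior": "NO_MATCH",
            },
        }
    }


if __name__ == "__main__":
    print(json.dumps(rate_limit_by_forwarded_ip(1000), indent=2))
```

Keep in mind that the header is only trustworthy if your CDN or proxy sets it. A client talking to the load balancer directly could send any value.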

Containers for the masses?

AWS and Docker announced a partnership: Docker and AWS collaborate to help deploy applications to Amazon ECS on AWS Fargate.

What’s behind the announcement? Docker Compose now supports ECS. However, the feature is a beta release, and the functionality is not that impressive. `docker ecs compose up` generates and executes a CloudFormation template. The CloudFormation template creates a load balancer, ECS cluster, ECS service, security groups, and IAM roles. However, there is very little control over the details (e.g., IAM policy, …). In my opinion, integrating ECS into Docker Compose will only work for simple scenarios. Using CloudFormation, Terraform, or the CDK will give you much more flexibility, which will pay off in the long run for complex situations.

The partnership between Docker and AWS is probably not too strong, as Amazon ECS announces AWS Copilot, a new CLI to deploy and operate containers in AWS as well. Interestingly, AWS Copilot follows the same approach as Docker Compose: you define a manifest describing your container-based service, and the CLI tool generates and deploys CloudFormation templates - including a VPC, ECS cluster, ECS service, and so on - for you. The same problem as above applies: you have very little flexibility. But whenever you have to impress someone with being able to deploy a container within a few minutes: here you go!

EKS panting after K8s

Kubernetes 1.17 was released on December 9th, 2019. It took AWS about seven months to get that version up and running on EKS: Amazon EKS now supports Kubernetes version 1.17. Unfortunately, the Kubernetes community has committed to supporting each version for only about nine months. So the latest version of K8s available on EKS will soon lose support from the K8s community. That is annoying!

But there is good news as well. The Amazon EFS CSI Driver is now generally available. The driver allows you to mount EFS file systems for your containers easily and comes with built-in support for in-transit encryption. The following code snippet shows an excerpt from the `encryption_in_transit` example.

apiVersion: v1
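As a sketch of what such a manifest can look like, the following snippet generates a PersistentVolume that asks the driver to mount the file system with TLS. It is modeled on my recollection of the driver's example, not copied from it. The function name, the volume name, the `efs-sc` storage class, and the file system ID are placeholders, so treat the details as assumptions.

```python
import json


def efs_persistent_volume(file_system_id, name="efs-pv"):
    """Return a PersistentVolume manifest that mounts EFS with TLS enabled.

    Hypothetical helper; names and sizes are placeholders for illustration.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            # Kubernetes requires a capacity, although EFS grows elastically.
            "capacity": {"storage": "5Gi"},
            "volumeMode": "Filesystem",
            "accessModes": ["ReadWriteMany"],
            "persistentVolumeReclaimPolicy": "Retain",
            "storageClassName": "efs-sc",
            # The tls mount option requests encryption in transit.
            "mountOptions": ["tls"],
            "csi": {
                "driver": "efs.csi.aws.com",
                "volumeHandle": file_system_id,
            },
        },
    }


if __name__ == "__main__":
    # Placeholder ID; replace it with the ID of your own file system.
    print(json.dumps(efs_persistent_volume("fs-12345678"), indent=2))
```

kubectl accepts JSON manifests as well as YAML, so the output can be written to a file and applied as is.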

Data Privacy Nightmares

In July, the Court of Justice of the European Union (CJEU) shocked the world of cloud computing and SaaS. The EU-US Privacy Shield is no longer valid for transferring personal data from the EU to the US. In summary, the CJEU said that the US data privacy level is far below the standards applicable within the EU mainly because government agencies have extensive rights to gain access to private data.

AWS responded with a Customer update: AWS and the EU-US Privacy Shield. In short, AWS says that we, as customers, do not have to rely on the EU-US Privacy Shield when transferring personal data from the EU to AWS regions in the US. Instead, so-called Standard Contractual Clauses (SCCs) are part of the AWS Service Terms.

Disclaimer: I’m not a lawyer. Instead, I summarize what I learned about data privacy over the last few weeks.

In its judgment, the CJEU mentioned that SCCs remain valid for the time being. However, the underlying problems with the Privacy Shield are precisely the same as with SCCs. Therefore, data privacy experts expect that courts and public authorities might also stop the transfer of personal data under SCCs. Right now, we are in a very awkward position. It remains to be seen whether a new agreement between the EU and the US will replace the Privacy Shield.

Typically, AWS repeats the following mantra at every opportunity: data never leaves a region. However, the following story made the rounds in July: AWS Customers are Opting in to Sharing AI Data Sets with Amazon Outside their Chosen Regions and Many Didn’t Know. Many customers, including myself, have been caught off guard by this news. However, the Service Terms state that Amazon CodeGuru Profiler, Amazon Comprehend, Amazon Lex, Amazon Polly, Amazon Rekognition, Amazon Textract, Amazon Transcribe, and Amazon Translate might transfer data into another region to improve the underlying artificial intelligence.

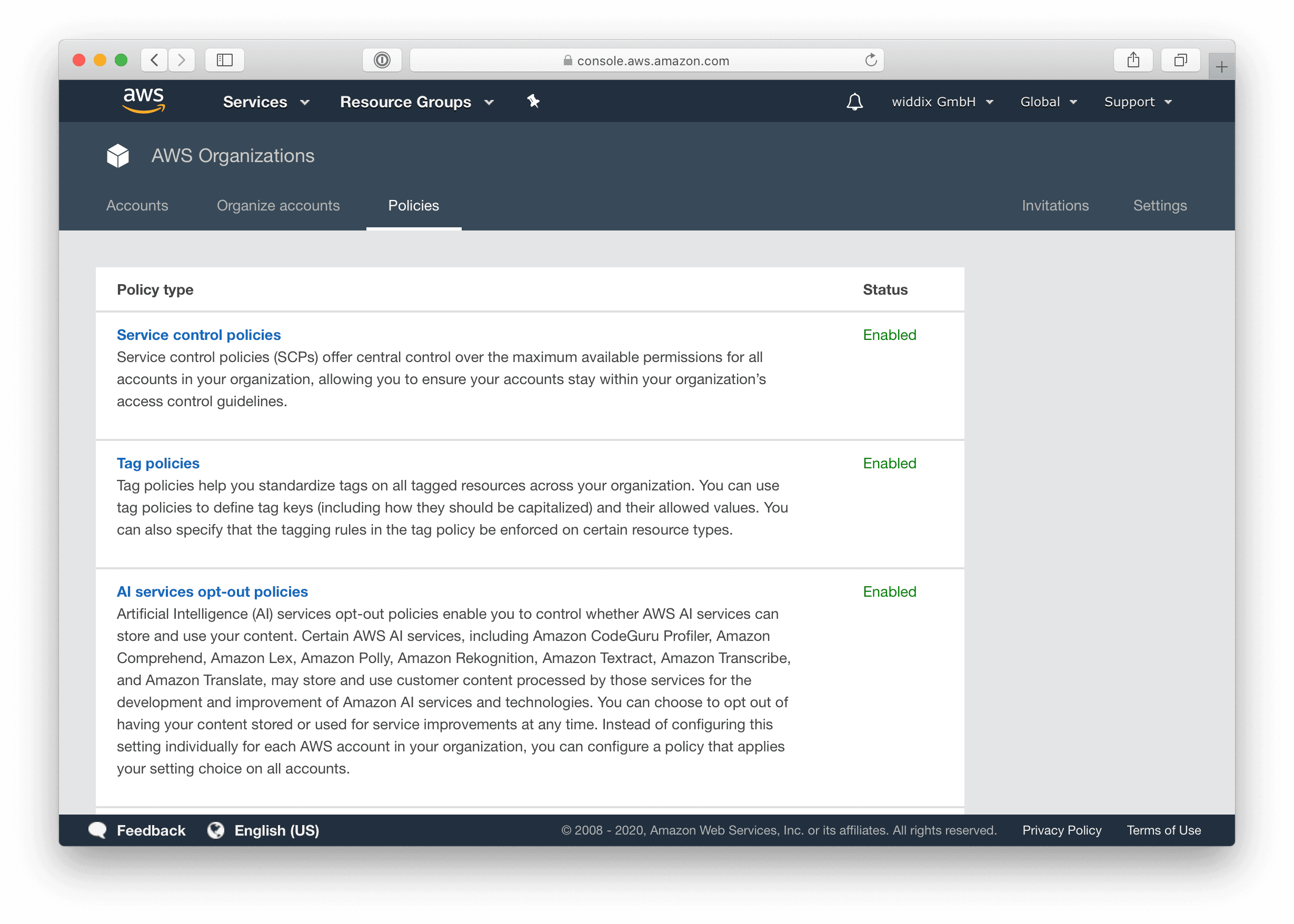

AWS answered with a way to Easily manage your content policies for AI services with AWS Organizations.

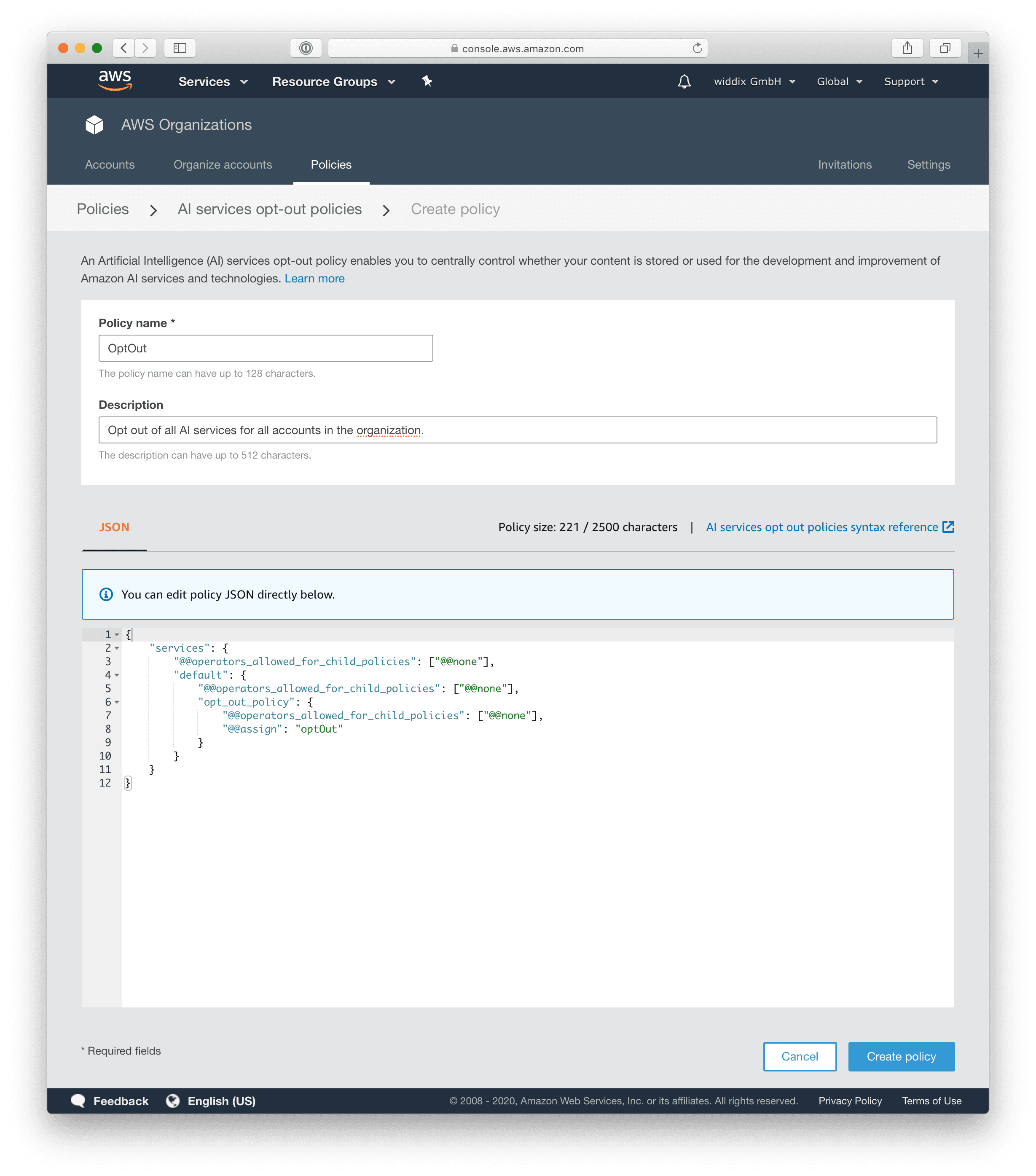

The following steps are needed to opt out of sharing your AI data. First of all, open AWS Organizations and switch to the Policies tab. Click the AI services opt-out policies link.

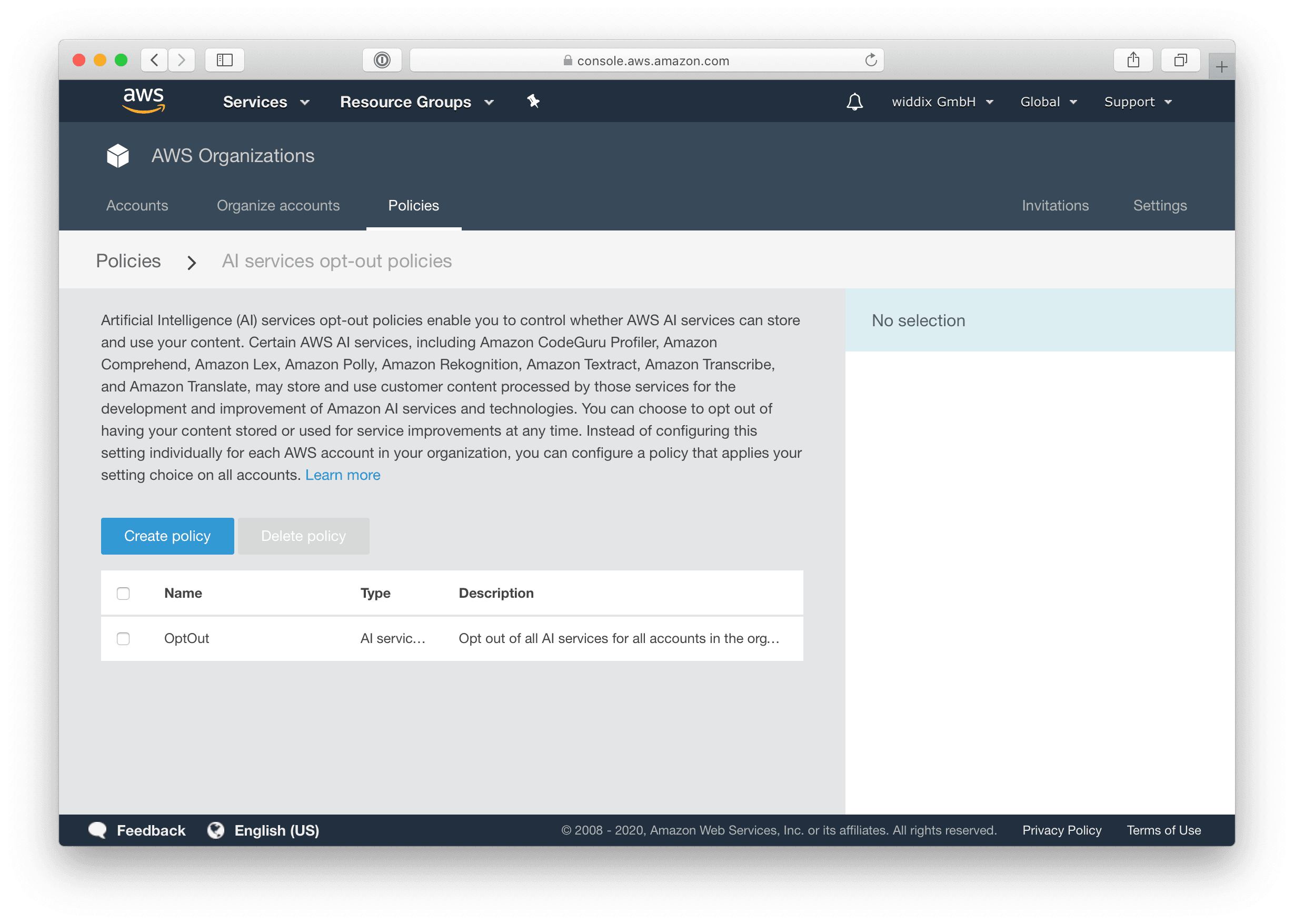

Press the Create policy button.

The following JSON snippet contains a policy defining an opt-out for all AI services of all accounts within the organization.

{

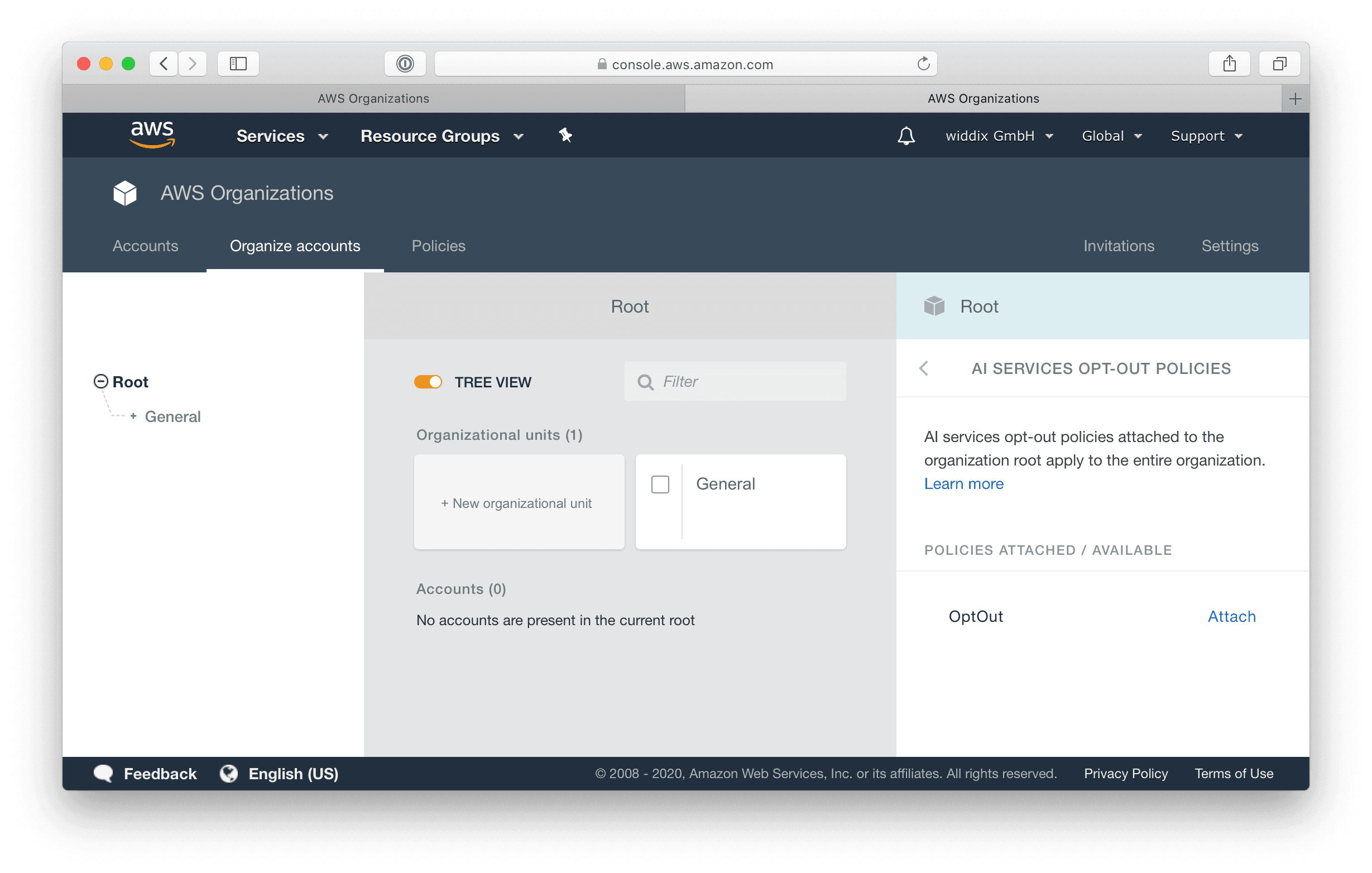

Last but not least, you need to attach the opt-out policy to your organization’s root node.

That’s it. Select an account and use the View effective AI services opt-out policy action to verify the opt-out policy.
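For reference, a policy that opts out of all AI services for every account might look like the output of the sketch below. It follows the AI services opt-out policy syntax as I understand it from the AWS Organizations documentation (`services`, `default`, `opt_out_policy`, `@@assign`). The function name is made up, and the `@@operators_allowed_for_child_policies` entries, which stop child policies from overriding the setting, are my own addition.

```python
import json


def ai_services_opt_out_policy():
    """Build an opt-out policy covering all AI services.

    Hypothetical helper; verify the syntax against the AWS Organizations
    documentation before attaching the policy.
    """
    no_overrides = ["@@none"]  # child policies may not change this level
    return {
        "services": {
            "@@operators_allowed_for_child_policies": no_overrides,
            # "default" applies to every AI service without its own entry.
            "default": {
                "@@operators_allowed_for_child_policies": no_overrides,
                "opt_out_policy": {
                    "@@operators_allowed_for_child_policies": no_overrides,
                    "@@assign": "optOut",
                },
            },
        }
    }


if __name__ == "__main__":
    # Paste the printed JSON into the policy editor in the AWS console.
    print(json.dumps(ai_services_opt_out_policy(), indent=2))
```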

Is CDK eating Infrastructure as Code?

The Cloud Development Kit (CDK) enables you to use the programming language of your choice to generate CloudFormation templates more efficiently. AWS and HashiCorp are Introducing the Cloud Development Kit for Terraform (Preview). The CDK for Terraform is marked experimental and is therefore not ready for production yet. Also, the project only contains low-level constructs (L1) that map one-to-one to Terraform resources. More powerful high-level constructs will probably follow. You should keep an eye on what is going on with the CDK for Terraform project.

Creating deployment pipelines based on CodePipeline, CodeBuild, and CloudFormation can be challenging. You need to configure tens of resources and make sure the different steps in the pipeline are authorized to do their work. Luckily, AWS is Announcing CDK Pipelines Preview, continuous delivery for AWS CDK applications. The high-level construct is still in developer preview, but will simplify creating deployment pipelines a lot in the future.

New features for CodeBuild

The team responsible for CodeBuild shipped a bunch of features in July:

First, AWS CodeBuild now supports accessing Build Environments with AWS Session Manager.

- Use `codebuild-breakpoint` to add a breakpoint to your buildspec.yml.
- Check the Enable session connection option when starting the build.
- Execute `aws codebuild batch-get-builds --ids ...` to get the session target.
- Use `aws ssm start-session --target ...` to connect to the build run.
- Type in `codebuild-resume` to resume the build.
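Finding the session target in the output of batch-get-builds was the fiddly part for me. The following sketch pulls it out of the parsed JSON response, assuming the target is reported under `debugSession.sessionTarget` for each build. The function name and the sample values are made up for illustration.

```python
def session_targets(batch_get_builds_response):
    """Map build IDs to their Session Manager targets.

    Hypothetical helper that expects the parsed JSON output of
    `aws codebuild batch-get-builds`. Builds without an enabled debug
    session, or without a target so far, are skipped.
    """
    targets = {}
    for build in batch_get_builds_response.get("builds", []):
        debug = build.get("debugSession") or {}
        target = debug.get("sessionTarget")
        if debug.get("sessionEnabled") and target:
            targets[build["id"]] = target
    return targets


if __name__ == "__main__":
    # Made-up sample response, reduced to the fields used above.
    sample = {
        "builds": [
            {
                "id": "demo-project:1111",
                "debugSession": {
                    "sessionEnabled": True,
                    "sessionTarget": "example-session-target",
                },
            },
            {"id": "demo-project:2222"},
        ]
    }
    for build_id, target in session_targets(sample).items():
        print(f"{build_id}: aws ssm start-session --target {target}")
```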

It took me more than 30 minutes to connect with a paused build run. The whole process is a little bit fiddly. However, being able to debug a build run might be worth it.

Second, AWS CodeBuild now supports parallel and coordinated executions of a build project. At first glance, I thought that this new feature would allow us to restrict the maximum number of builds per CodeBuild project to 1. However, that is not the case. Instead, the feature enables you to specify multiple build environments within the buildspec.yml. CodeBuild will execute the build in all those environments, either in parallel or in a defined order. As I’m typically using CodePipeline to orchestrate CodeBuild, I don’t have a use case for that, but your mileage may vary.

Last but not least, AWS CodeBuild supports code coverage reporting.

ARM all the things!

AWS continues to ship new instance types based on its ARM processor: Announcing new Amazon EC2 M6gd, C6gd, and R6gd instances powered by AWS Graviton2 processors. It seems that not only Apple but also AWS is pushing to shift from Intel to ARM. It is time for us to take a closer look at the Graviton2. Stay tuned for more insights!

Streaming video made easy

AWS is Introducing Amazon Interactive Video Service (Amazon IVS), which looks interesting. Michael already played around with the video streaming service. We are thinking about using the service to host some webinars and online events in the future.

Feedback welcome!

I hope you enjoyed this month in review. Did I miss anything important? Please let me know what you think about this format and whether you want to read such a summary monthly.