Caching on AWS 101

Oftentimes, the idea of adding a caching layer comes up only when users start complaining about the performance of an application. But adding a cache to your architecture does not solve all problems, especially when you implement that change under pressure to fix performance issues. Therefore, a caching strategy should be part of the process when designing your architecture.

This is a cross-post from the Cloudcraft blog.

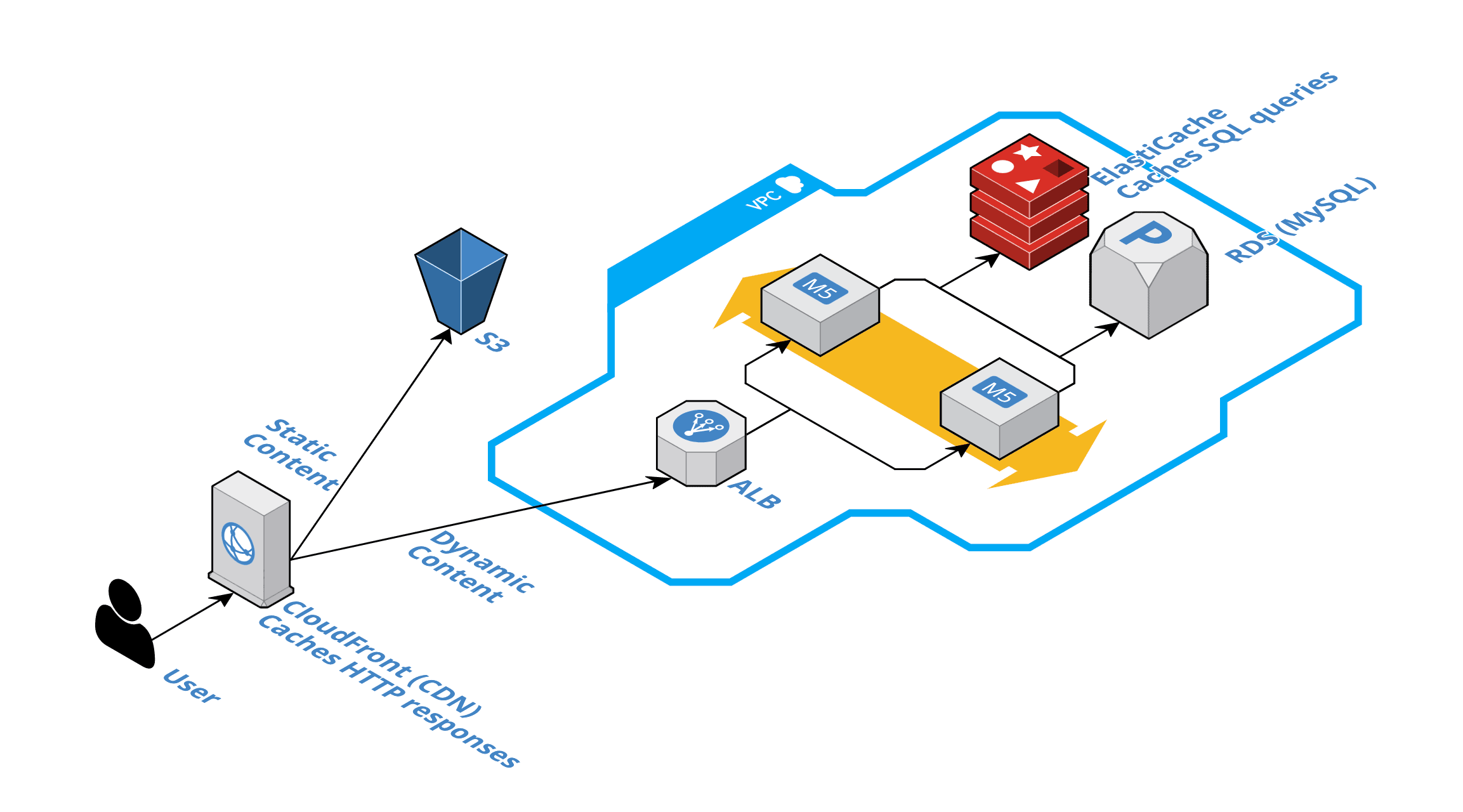

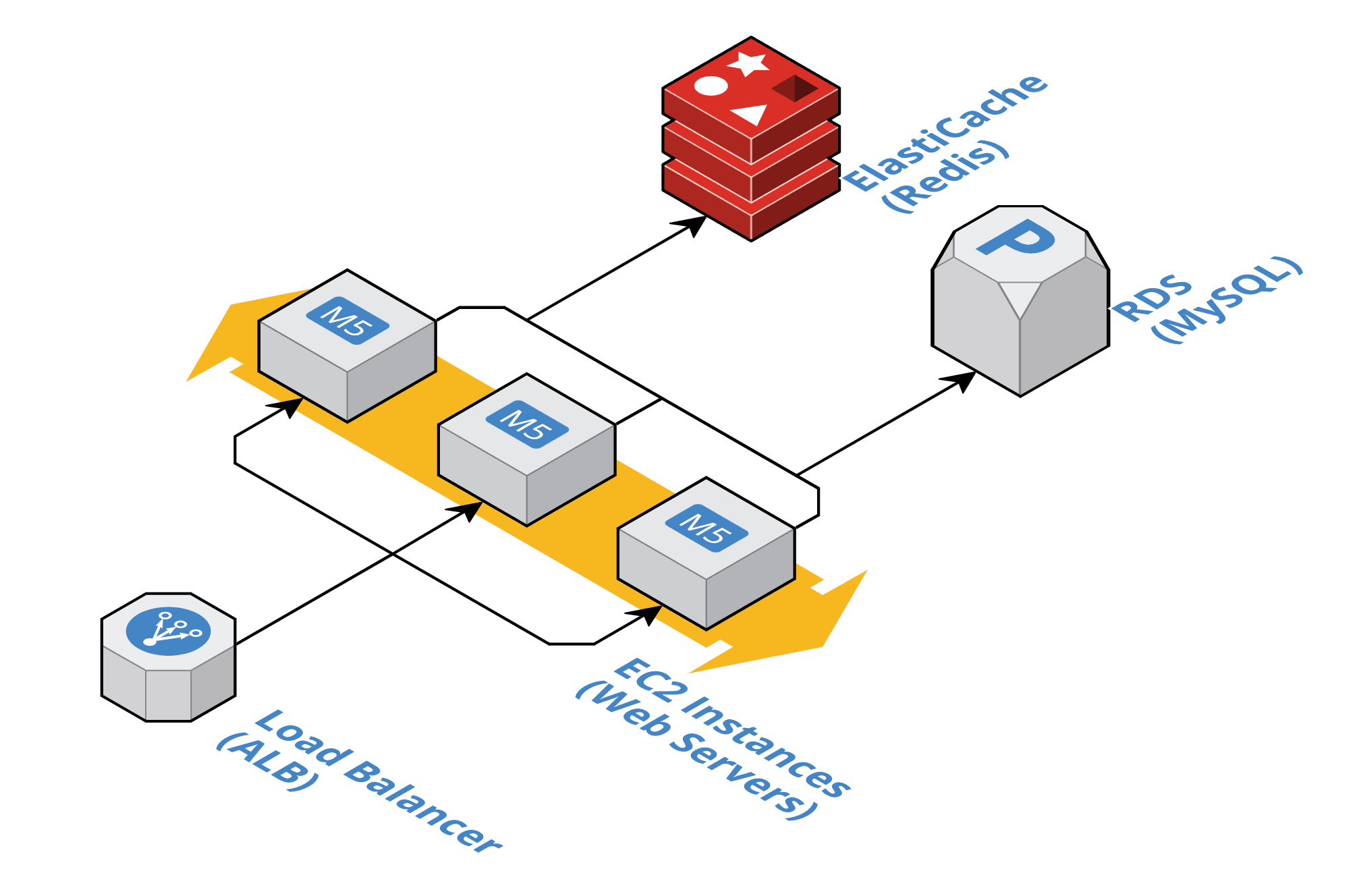

Let’s take a look at the following architecture sketch, created with Cloudcraft.

You will learn how to improve your architecture by adding a cache. After that, I will point out best practices for integrating a cache into your system. Finally, you will learn about caching services provided by AWS.

Why?

In medium to high traffic scenarios, a cache will make a big difference. As a rule of thumb, I would start thinking about caching when expecting more than 100 concurrent users.

What are the benefits of caching?

- Optimize performance by asking the cache for results instead of executing complex SQL queries or waiting for relatively slow storage systems. For example, serve results from memory instead of reading data from disk.

- Flatten traffic spikes by storing pre-calculated results for the most frequent requests. For example, cache the rendered HTML of a very popular product page in an online shop.

- Reduce costs by reducing the load on the resources within your architecture. For example, a less busy database might allow you to downgrade to a smaller database instance.
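To make the performance and cost benefits a little more concrete, here is a rough back-of-the-envelope model. It assumes the application checks the cache first and queries the database only on a miss. The symbols are my own, chosen for illustration: h is the hit ratio (the share of requests the cache can answer), t_c the cache lookup time, t_d the database query time, t_w the time to write a result to the cache, R the incoming request rate, and R_db the request rate that still reaches the database.

```latex
\begin{aligned}
\bar{t}      &= t_c + (1 - h)\,(t_d + t_w) \\
R_{\text{db}} &= (1 - h)\,R
\end{aligned}
```

With a hypothetical hit ratio of h = 0.9 and R = 1000 requests per second, the database handles (1 - 0.9) x 1000 = 100 requests per second instead of 1000, which is what makes a smaller database instance conceivable.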

I hope you are sold on the idea of adding a cache to your architecture. Let’s have a look at some best practices for integrating a cache into your system next.

How?

Deciding how to cache requests comes down to two questions.

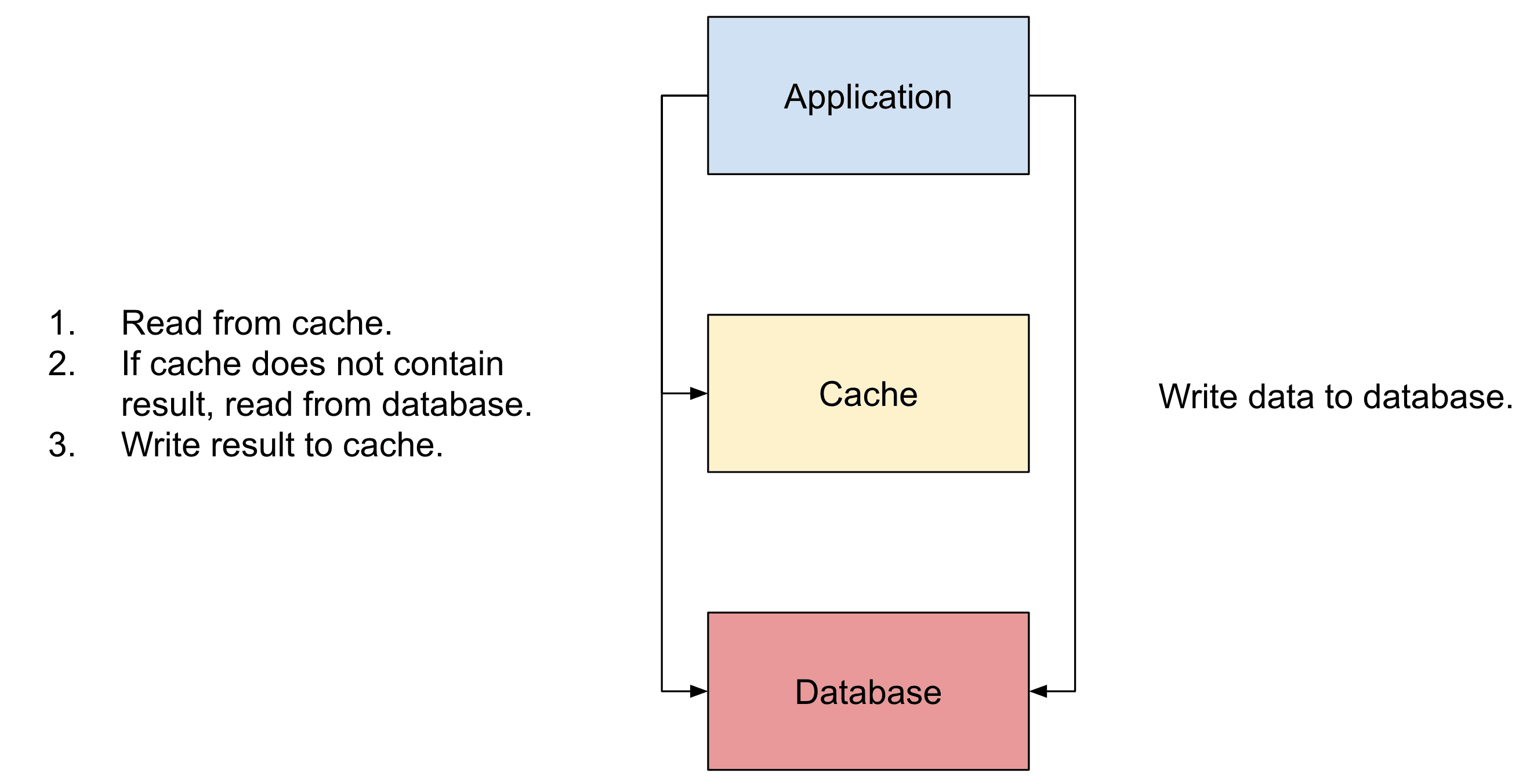

First, how do you populate your cache? The “lazy loading” strategy uses the following approach:

- Ask the cache for a result.

- If the cache cannot answer the request, send the request to the database.

- Write the result from the database to the cache.
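The three lazy loading steps above can be sketched in a few lines of Python. This is a minimal illustration, assuming a plain dict stands in for the cache and a hypothetical `load_from_db` function stands in for the database query; a real setup would talk to Redis or Memcached instead.

```python
from typing import Callable, Dict, Optional


def get_with_lazy_loading(
    cache: Dict[str, str],
    key: str,
    load_from_db: Callable[[str], Optional[str]],
) -> Optional[str]:
    """Lazy loading (cache-aside): try the cache first, query the database on a miss."""
    # 1. Ask the cache for a result.
    if key in cache:
        return cache[key]
    # 2. The cache cannot answer, so send the request to the database.
    value = load_from_db(key)
    # 3. Write the result to the cache so the next request is a cache hit.
    if value is not None:
        cache[key] = value
    return value


# Hypothetical demo: a dict plays the database and we count how often it is queried.
database = {"product:1": "Coffee grinder"}
db_queries = []


def load_from_db(key: str) -> Optional[str]:
    db_queries.append(key)
    return database.get(key)


cache: Dict[str, str] = {}
get_with_lazy_loading(cache, "product:1", load_from_db)  # miss: queries the database
get_with_lazy_loading(cache, "product:1", load_from_db)  # hit: answered by the cache
print(len(db_queries))  # 1
```

One design choice worth noting: this sketch does not cache "not found" results, so repeated requests for a missing key always reach the database. Caching a placeholder for misses is a common variation.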

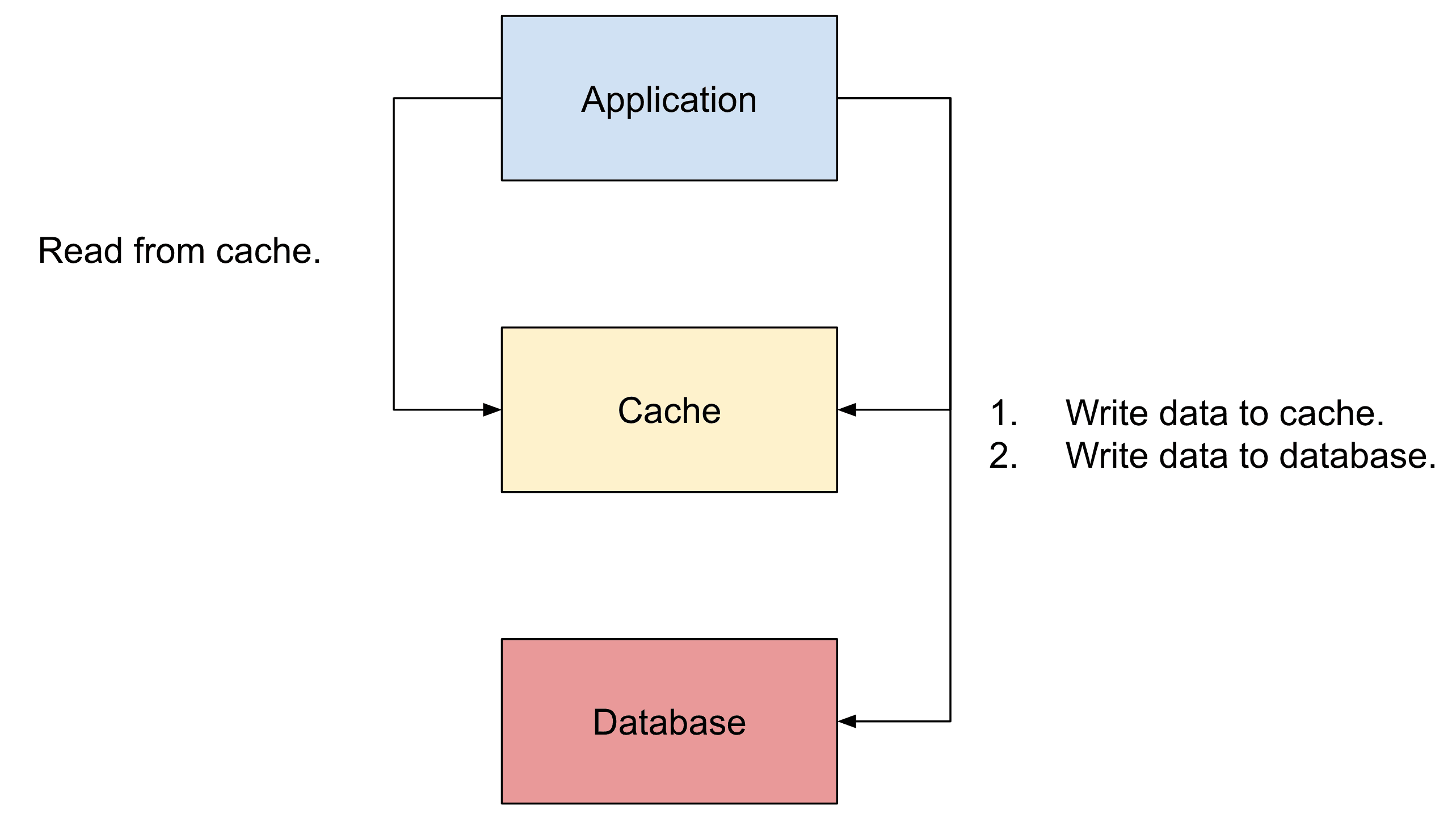

As an alternative, you could use the “write-through” strategy.

- When writing data to the database, update the cache as well.

- For each read request, ask the cache instead of the database.

- Fall back to reading from the database in exceptional cases only.
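A write-through cache can be sketched the same way. In this minimal example, two dicts are assumptions standing in for the database and the cache; the class name `WriteThroughStore` is hypothetical. Every write updates both, reads go to the cache, and the database is only consulted in the exceptional case that the cache lost an entry.

```python
from typing import Dict, Optional


class WriteThroughStore:
    """Write-through sketch: every write updates the database and the cache."""

    def __init__(self) -> None:
        self.database: Dict[str, str] = {}  # stand-in for the real database
        self.cache: Dict[str, str] = {}     # stand-in for the cache
        self.db_reads = 0                   # counts fallback reads from the database

    def write(self, key: str, value: str) -> None:
        # Write to the database first, then put the same value into the cache.
        self.database[key] = value
        self.cache[key] = value

    def read(self, key: str) -> Optional[str]:
        # Normal path: reads are answered by the cache.
        if key in self.cache:
            return self.cache[key]
        # Exceptional path (e.g., a cache node restarted and lost its data):
        # fall back to the database and repopulate the cache.
        self.db_reads += 1
        value = self.database.get(key)
        if value is not None:
            self.cache[key] = value
        return value


store = WriteThroughStore()
store.write("price:42", "19.99")
store.write("price:42", "17.99")  # the update reaches the cache immediately
print(store.read("price:42"))     # 17.99, served from the cache
store.cache.clear()               # simulate losing the cache contents
print(store.read("price:42"))     # 17.99, via the database fallback
print(store.db_reads)             # 1
```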

Second, how do you make sure that when changing data in the database, the cache gets updated as well? Let me answer that question with a quote from Phil Karlton: “There are only two hard things in Computer Science: cache invalidation and naming things.”

Invalidating a cache is a challenge. One approach to that problem is to define a time-to-live (TTL) for all results stored in the cache. By doing so, the cached data expires after a certain amount of time, and there is no need to think through all the scenarios that could require your system to update the cache. However, clients may receive stale data from the cache until the TTL expires.

It might be fine to deliver an outdated profile picture for a few hours, but it might be an issue to display an obsolete price for a product in your online shop or web-based service. Therefore, defining a TTL is typically something to think about from a business perspective.
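The following sketch shows how a TTL works and how different TTLs can encode that business decision. It assumes an in-process cache with an injectable clock so expiry can be demonstrated without sleeping; the TTL values (three hours for profile pictures, one minute for prices) are illustrative, not recommendations.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """In-memory cache whose entries expire a fixed number of seconds after being written."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[str, float]] = {}

    def set(self, key: str, value: str) -> None:
        # Store the value together with the point in time at which it expires.
        self._entries[key] = (value, self._clock() + self.ttl_seconds)

    def get(self, key: str) -> Optional[str]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Expired: drop the entry so the caller reloads fresh data.
            del self._entries[key]
            return None
        # Until it expires, this value may be stale if the database changed meanwhile.
        return value


now = [0.0]


def fake_clock() -> float:
    return now[0]


profile_pictures = TTLCache(ttl_seconds=3 * 3600, clock=fake_clock)  # hours are acceptable
prices = TTLCache(ttl_seconds=60, clock=fake_clock)                  # prices must stay fresh
profile_pictures.set("user:1", "avatar-v1.png")
prices.set("product:7", "9.99")

now[0] = 30.0
print(prices.get("product:7"))  # 9.99 (still within the TTL)
now[0] = 61.0
print(prices.get("product:7"))            # None (expired)
print(profile_pictures.get("user:1"))     # avatar-v1.png (still within the TTL)
```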

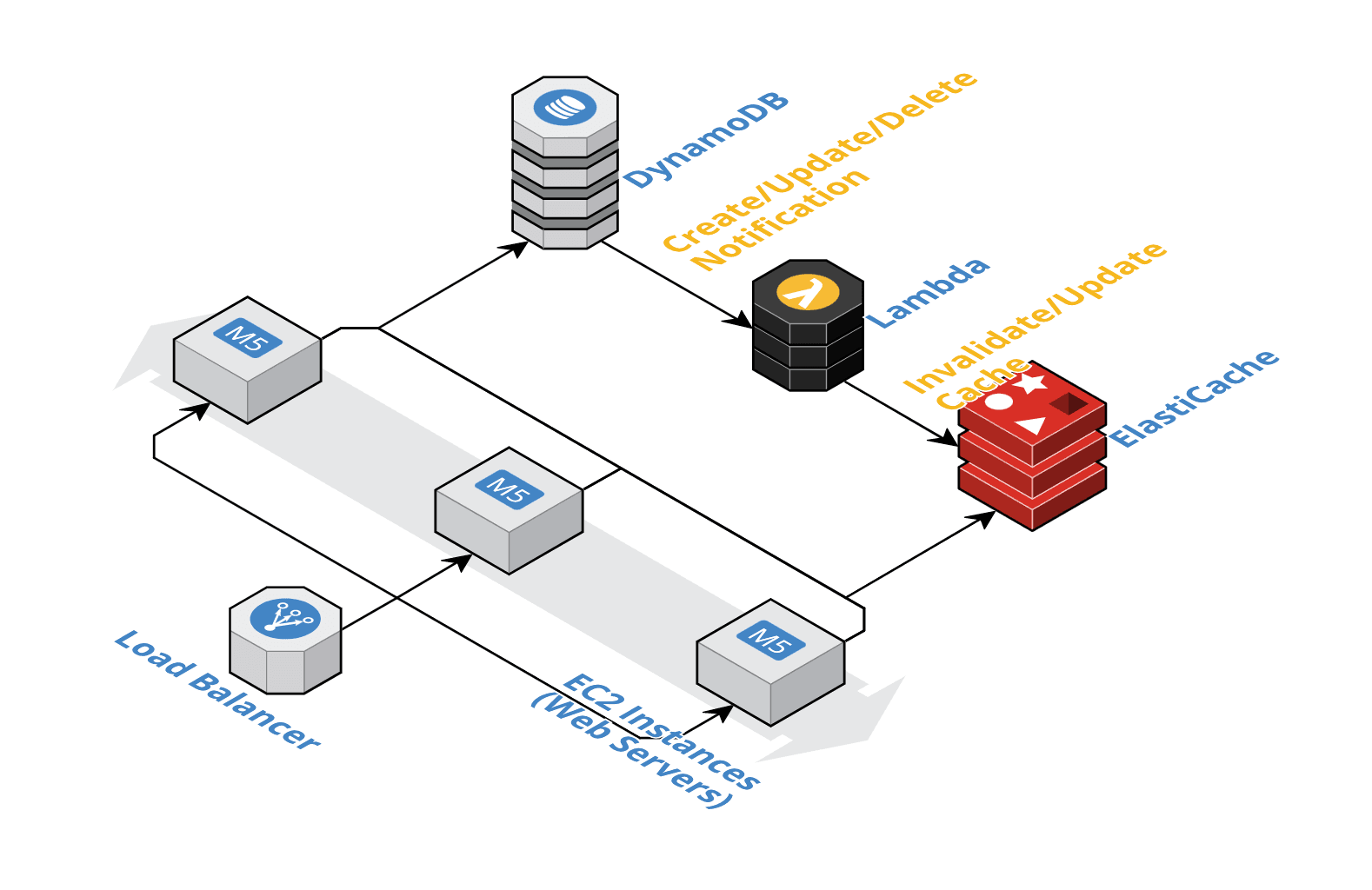

Alternatively, many database systems offer a stream of create, update, and delete events. By subscribing to those change events, you can invalidate the affected cache entries shortly after the data changes.
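As an illustration of event-driven invalidation, here is a sketch of a handler that consumes change records and deletes the matching cache entries. It assumes records shaped like DynamoDB Streams events (an `eventName` plus the item's `Keys`, with each value wrapped in a type descriptor such as `{"S": "42"}`) and a dict standing in for the cache. The cache-key convention (`cache_key_for`, producing keys like `id=42`) is hypothetical; with Redis you would delete the key on the client instead of popping it from a dict.

```python
from typing import Any, Dict, List


def cache_key_for(keys: Dict[str, Dict[str, str]]) -> str:
    """Hypothetical convention: join all key attributes, e.g. "id=42" or "pk=a|sk=1"."""
    parts = []
    for attribute in sorted(keys):
        # Each value carries a type descriptor such as {"S": "42"} or {"N": "1"}.
        (value,) = keys[attribute].values()
        parts.append(f"{attribute}={value}")
    return "|".join(parts)


def invalidate_from_stream(event: Dict[str, Any], cache: Dict[str, Any]) -> List[str]:
    """Delete the cache entry of every item touched by a change event."""
    invalidated = []
    for record in event.get("Records", []):
        # INSERT, MODIFY, and REMOVE all make a cached copy (or cached "not found") outdated.
        key = cache_key_for(record["dynamodb"]["Keys"])
        cache.pop(key, None)  # deleting a key that is not cached is a no-op
        invalidated.append(key)
    return invalidated


cache = {"id=42": "old price", "id=7": "unchanged"}
event = {"Records": [{"eventName": "MODIFY", "dynamodb": {"Keys": {"id": {"S": "42"}}}}]}
invalidated = invalidate_from_stream(event, cache)
print(invalidated)  # ['id=42']
print(cache)        # {'id=7': 'unchanged'}
```

In a Lambda function subscribed to the stream, the handler would simply call `invalidate_from_stream(event, ...)` with your cache client.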

It is also worth noting that the “write-through” strategy updates the cache with every write. As long as all writes go through that path, there is no need to think about cache invalidation. But it requires that all your data fit into the cache!

Next, you will learn about managed caching services offered by AWS.

Caching on AWS

The large portfolio of AWS also includes services for caching.

- Amazon ElastiCache offers in-memory caches based on Redis or Memcached.

- Amazon CloudFront provides a Content Delivery Network (CDN) that caches HTTP responses close to your customers worldwide.

- Amazon DynamoDB Accelerator (DAX) adds caching capabilities to Amazon’s NoSQL database.

ElastiCache is for in-memory caches what RDS is for SQL databases. You can choose between two engines:

- Redis

- Memcached

Both Redis and Memcached are open-source in-memory data stores. Redis comes with some advanced features like complex data structures (e.g., sorted sets), snapshots of the in-memory data on disk, and a built-in message broker (Pub/Sub). In comparison, Memcached focuses on simplicity and provides only the functionality needed for an in-memory cache.

ElastiCache allows you to deploy a cluster of in-memory instances. All you have to do is specify the instance type (which defines the CPU, memory, and networking capabilities) and the number of instances. The managed service spins up and maintains the instances and provides a hostname that your application connects to. It is important to note that ElastiCache runs within your private network (VPC), so your application needs network access to that VPC.
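Here is how the lazy loading strategy could look against an ElastiCache for Redis endpoint. This is a sketch, assuming the redis-py client, whose `get(name)` and `setex(name, time, value)` methods are the only ones used; results are stored as JSON with a TTL. To keep the example runnable without a cluster, a tiny hypothetical `FakeRedis` stands in for the client, and the cache key and query are made up.

```python
import json
from typing import Any, Callable, Dict


def cached_query(
    client: Any,
    cache_key: str,
    run_query: Callable[[], Dict[str, Any]],
    ttl_seconds: int = 300,
) -> Dict[str, Any]:
    """Lazy loading against a Redis-compatible client such as redis-py's redis.Redis."""
    cached = client.get(cache_key)  # bytes on a hit, None on a miss
    if cached is not None:
        return json.loads(cached)   # json.loads accepts both str and bytes
    result = run_query()            # cache miss: run the expensive query
    # SETEX stores the value and its TTL (in seconds) in a single command.
    client.setex(cache_key, ttl_seconds, json.dumps(result))
    return result


class FakeRedis:
    """Hypothetical in-memory stand-in for the two redis-py methods used above (ignores TTL)."""

    def __init__(self) -> None:
        self.store: Dict[str, bytes] = {}

    def get(self, name: str):
        return self.store.get(name)

    def setex(self, name: str, time: int, value: str) -> bool:
        self.store[name] = value.encode()  # redis-py returns bytes by default
        return True


queries = []


def top_products() -> Dict[str, Any]:
    queries.append(1)  # count how often the "database" is queried
    return {"top": ["grinder", "kettle"]}


fake = FakeRedis()
cached_query(fake, "report:top-products", top_products)         # miss: runs the query
print(cached_query(fake, "report:top-products", top_products))  # {'top': ['grinder', 'kettle']}
print(len(queries))                                              # 1
```

Against a real cluster you would pass something like `redis.Redis(host="<your ElastiCache endpoint>", port=6379)` instead of `FakeRedis()`; the endpoint is a placeholder, and the code has to run somewhere with network access to the VPC.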

Many application frameworks, like Spring or Ruby on Rails, support Redis or Memcached for caching database queries, rendered partials, and much more.

By the way, another typical use case for ElastiCache is storing sessions or all kinds of data that you do not need to persist on disk.

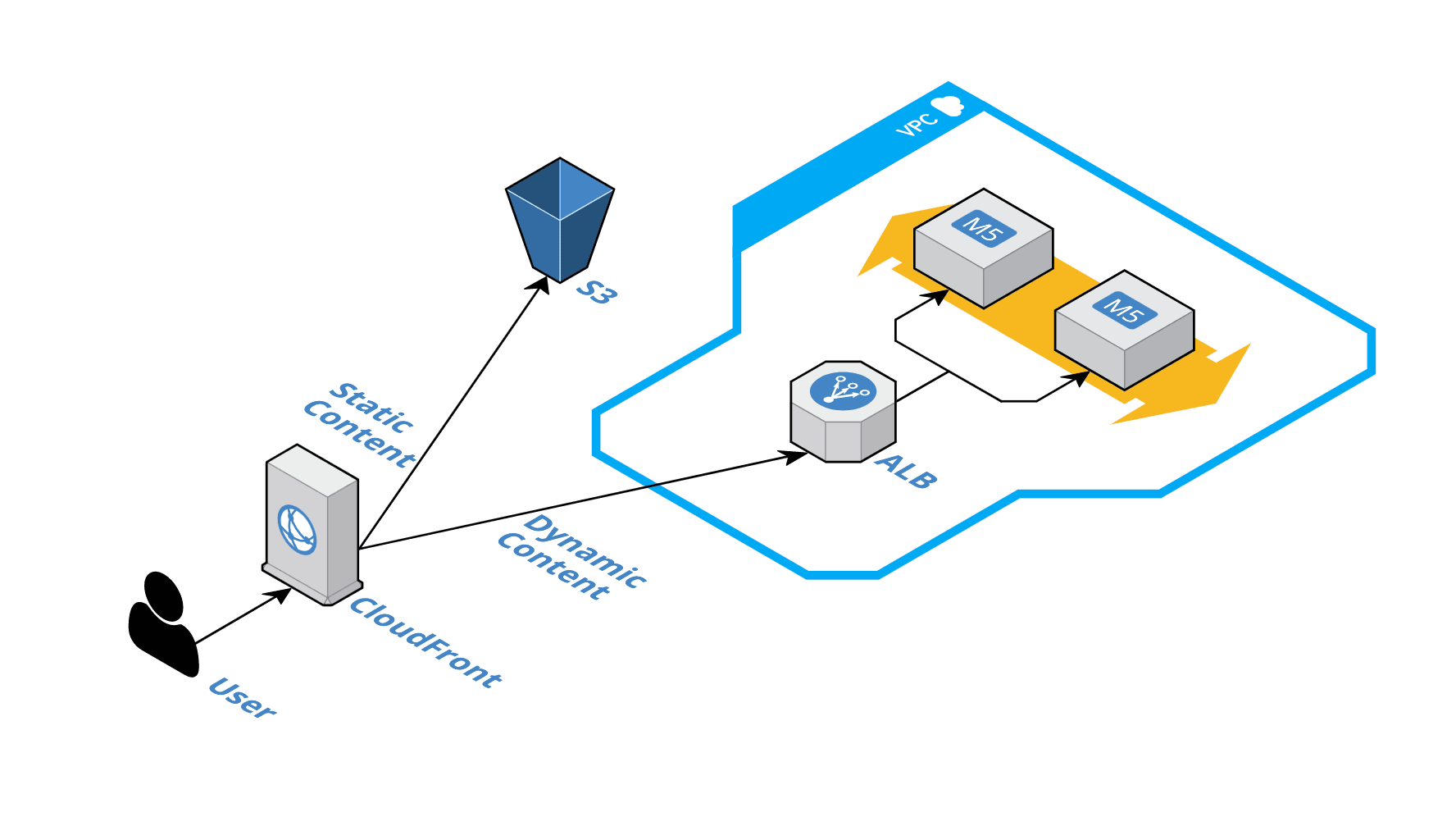

Typically, ElastiCache is used by the application to cache database queries or pre-calculated results. However, the load balancer and web server still need to process the request. Adding CloudFront, a Content Delivery Network (CDN), to the architecture allows you to answer requests even before they hit your load balancer. Doing so reduces latency and costs even further.

AWS operates infrastructure worldwide. Besides the big data centers – called regions – there are more than 200 edge locations all around the world. CloudFront utilizes those edge locations to minimize the distance and, therefore, latency between your users and your cloud infrastructure. You can think of CloudFront as a cache for static web content – like stylesheets, images, and videos – as well as a cache for dynamic content – like a rendered HTML page. CloudFront is priced per request and data transfer. There is no minimum fee.

By default, CloudFront uses the Cache-Control or Expires HTTP header returned by your application to decide how long a response should be cached. Besides that, you can configure the caching behavior for different paths of your application. It is also possible to invalidate cached responses manually, for example, after a deployment.
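The sketch below models, in simplified form, how an origin's Cache-Control header and the minimum, default, and maximum TTL configured in CloudFront could combine into the time a response stays cached: `s-maxage` takes precedence over `max-age`, the value is clamped into the configured range, and the default TTL applies when the origin sends neither. This is my reading of the documented rules, not CloudFront's implementation; Expires, `no-cache`, and similar cases are deliberately left out, and the TTL numbers are example values. The second function builds the `InvalidationBatch` argument for boto3's `create_invalidation` call, for example after a deployment.

```python
import time
from typing import Any, Dict, List, Optional


def effective_ttl(cache_control: Optional[str], min_ttl: int, default_ttl: int, max_ttl: int) -> int:
    """Simplified model of how long (in seconds) CloudFront caches a response."""
    directives: Dict[str, str] = {}
    for part in (cache_control or "").split(","):
        name, _, value = part.strip().partition("=")
        directives[name.lower()] = value
    # s-maxage targets shared caches such as CloudFront and wins over max-age.
    for name in ("s-maxage", "max-age"):
        if directives.get(name, "").isdigit():
            # The origin's value is clamped into [min_ttl, max_ttl].
            return max(min_ttl, min(int(directives[name]), max_ttl))
    # No usable directive from the origin: fall back to the default TTL.
    return default_ttl


def invalidation_batch(paths: List[str]) -> Dict[str, Any]:
    """Build the InvalidationBatch argument for boto3's cloudfront.create_invalidation."""
    return {
        "Paths": {"Quantity": len(paths), "Items": paths},
        # CallerReference must be unique per invalidation request; a timestamp works.
        "CallerReference": str(time.time()),
    }


print(effective_ttl("public, max-age=600", 0, 86400, 31536000))        # 600
print(effective_ttl("max-age=60, s-maxage=3600", 0, 86400, 31536000))  # 3600
print(effective_ttl(None, 0, 86400, 31536000))                         # 86400
print(effective_ttl("max-age=0", 30, 86400, 31536000))                 # 30
```

Usage with boto3 might look like `boto3.client("cloudfront").create_invalidation(DistributionId="<your distribution ID>", InvalidationBatch=invalidation_batch(["/*"]))`, where the distribution ID is a placeholder.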

Finally, I want to introduce another caching solution. DynamoDB Accelerator (DAX) provides a write-through caching service for DynamoDB, the NoSQL database by AWS. Write requests are stored in the in-memory cache as well as in the DynamoDB table. AWS manages a cluster of caching nodes for you.

When using the AWS SDK for Java, JavaScript, .NET, Python, or Go, switching to the DAX client is simple. However, DAX caches eventually consistent reads only; strongly consistent reads are passed through to DynamoDB and are not cached. Also, DAX requires you to provision caching nodes of a certain instance type, so you lose the benefits of DynamoDB’s pay-per-request pricing model and automated scaling.
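Why switching is simple: application code that talks to a table through a `get_item` call in the style of boto3's Table resource does not need to change when the client object does. The sketch below assumes a hypothetical table with the partition key `order_id`; with boto3 the client would be `boto3.resource("dynamodb").Table("orders")` (table name assumed), and the DAX Python client (the `amazondax` package) offers a resource-style client intended as a drop-in replacement, whose exact constructor you should check in its documentation. `FakeDaxTable` is a hypothetical stand-in that models the read behavior described above: eventually consistent reads are served from and populate an item cache, while strongly consistent reads go to the table every time.

```python
from typing import Any, Dict, Optional


def load_order(table: Any, order_id: str, consistent: bool = False) -> Optional[Dict[str, Any]]:
    """Read one item through any client exposing a boto3 Table-style get_item."""
    response = table.get_item(Key={"order_id": order_id}, ConsistentRead=consistent)
    return response.get("Item")  # "Item" is absent when the key does not exist


class FakeDaxTable:
    """Hypothetical model of DAX's read path in front of a dict acting as the table."""

    def __init__(self, items: Dict[str, Dict[str, Any]]) -> None:
        self.items = items       # stands in for the DynamoDB table
        self.item_cache = {}     # stands in for DAX's item cache
        self.table_reads = 0     # counts reads that reach the table

    def get_item(self, Key: Dict[str, str], ConsistentRead: bool = False) -> Dict[str, Any]:
        key = Key["order_id"]
        # Eventually consistent reads can be answered by the cache.
        if not ConsistentRead and key in self.item_cache:
            return {"Item": self.item_cache[key]}
        self.table_reads += 1
        item = self.items.get(key)
        # Only eventually consistent reads populate the cache.
        if item is not None and not ConsistentRead:
            self.item_cache[key] = item
        return {"Item": item} if item is not None else {}


table = FakeDaxTable({"o-1": {"order_id": "o-1", "total": 42}})
load_order(table, "o-1")                   # miss: read from the table, then cached
load_order(table, "o-1")                   # hit: served from the item cache
load_order(table, "o-1", consistent=True)  # strongly consistent: bypasses the cache
print(table.table_reads)                   # 2
```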

Summary

Adding a cache to your architecture can help to optimize performance, flatten traffic spikes, and reduce costs. I highly recommend thinking about caching while designing an architecture, because introducing a caching layer later might be challenging.

AWS provides in-memory data stores with ElastiCache. Both Redis and Memcached are popular open-source projects and available as engines in ElastiCache. CloudFront, Amazon’s Content Delivery Network, can cache static as well as dynamic content close to your users worldwide. DAX adds a write-through cache to DynamoDB.
