Configure your CloudFormation managed infrastructure with Parameter Store and CodePipeline

Getting started with CI/CD to manage your AWS infrastructure is hard:

- You have to familiarize yourself with the available technologies.

- You have to create a Proof of Concept to show your team how it works.

- You have to convince your team to stop using the graphical Management Console.

The last part is usually the hardest. That’s why I was looking for a way to introduce CI/CD while still allowing manual changes using the graphical Management Console.

If you use CloudFormation to create resources for you, you should never change those resources manually. Otherwise, you risk losing the infrastructure during the next CloudFormation stack update.

Manual changes through Parameter Store

AWS Systems Manager (SSM) Parameter Store is a good place to store the configuration of your application. Parameters are organized hierarchically, like files in a folder structure. For example, the parameter /application/stage/instancetype stores the value t2.micro. Parameter Store also comes with a nice UI.

CloudFormation templates can be parameterized. A CloudFormation parameter can look up its value from Parameter Store when you create or update a stack.

But how do you trigger a stack update when the value of the parameter in Parameter Store changes?

Luckily, Parameter Store publishes parameter changes to CloudWatch Events. You can subscribe to those events and trigger a CodePipeline execution that updates the CloudFormation stack. All of this is managed by CloudFormation.

Read on if you want to learn how you can connect the pieces:

- Developing a CloudFormation template that uses Parameter Store to start an EC2 instance

- Using CodePipeline to deploy a CloudFormation stack

- Listening to CloudWatch Events to trigger the pipeline on parameter changes

Simple CloudFormation template using the Parameter Store

First, you need the CloudFormation template that describes the EC2 instance. The instance type should be fetched from the /application/stage/instancetype parameter.

CloudFormation integrates with Parameter Store using parameters as well. Don’t get confused: CloudFormation parameters and Parameter Store parameters are two different things. You use a CloudFormation parameter of type AWS::SSM::Parameter::Value<String> and set its value to the name of the Parameter Store parameter (e.g., /application/stage/instancetype). On each create or update of the stack, CloudFormation then asks Parameter Store for the current value. A second CloudFormation parameter, ParentVPCStack, references the CloudFormation stack that contains the VPC.
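A minimal sketch of what the Parameters section could look like. The two parameter names come from the text; the descriptions and the default value are assumptions, not a copy of the original infrastructure.yaml:

```yaml
Parameters:
  # The value of this CloudFormation parameter is the *name* of a Parameter
  # Store parameter. CloudFormation resolves it to the current value on every
  # stack create or update.
  InstanceType:
    Description: 'Name of the Parameter Store parameter holding the instance type.'
    Type: 'AWS::SSM::Parameter::Value<String>'
    Default: '/application/stage/instancetype'
  # Plain string parameter: the name of the stack that created the VPC.
  ParentVPCStack:
    Description: 'Stack name of the parent VPC stack.'
    Type: String
```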


The CloudFormation parameter can then be used like any other parameter: !Ref InstanceType returns its value.
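A minimal sketch of the Resources and Outputs sections. It assumes the VPC stack exports its VPC ID and a public subnet (with public IPs enabled) as <stack name>-VPC and <stack name>-SubnetAPublic, which, to my knowledge, matches the vpc-2azs.yaml template used later. ImageId is a hypothetical parameter assumed to be declared in the Parameters section, and the web server setup (UserData) is left out:

```yaml
Resources:
  SecurityGroup:
    Type: 'AWS::EC2::SecurityGroup'
    Properties:
      GroupDescription: 'Allow HTTP from anywhere'
      # Assumption: the parent VPC stack exports its VPC ID as <stack>-VPC.
      VpcId: {'Fn::ImportValue': !Sub '${ParentVPCStack}-VPC'}
      SecurityGroupIngress:
      - IpProtocol: tcp
        FromPort: 80
        ToPort: 80
        CidrIp: '0.0.0.0/0'
  Instance:
    Type: 'AWS::EC2::Instance'
    Properties:
      ImageId: !Ref ImageId  # hypothetical parameter, see lead-in
      # Value resolved from Parameter Store on each stack create/update.
      InstanceType: !Ref InstanceType
      SecurityGroupIds:
      - !Ref SecurityGroup
      # Assumption: a public subnet exported as <stack>-SubnetAPublic.
      SubnetId: {'Fn::ImportValue': !Sub '${ParentVPCStack}-SubnetAPublic'}
Outputs:
  # Single output, so Outputs[0] in the setup steps refers to it.
  PublicName:
    Description: 'Public DNS name of the EC2 instance.'
    Value: !GetAtt 'Instance.PublicDnsName'
```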


That’s it: a CloudFormation template that spins up a single EC2 instance whose instance type comes from Parameter Store. The snippets omit some parts to focus on what matters; you can download the full infrastructure.yaml template on GitHub.

Simple CodePipeline to deploy a CloudFormation stack

Now it’s time to take care of the deployment pipeline. You need an S3 bucket (ArtifactsBucket) to store the artifacts that move through the pipeline. I also added a CodeCommit repository (CodeRepository) to the template to store the project’s source code. Finally, CodePipeline and CloudFormation need permissions (PipelineRole) to call the AWS API on your behalf and create the resources described in the CloudFormation templates. Since CloudFormation can create any resource, you will most likely have to grant full permissions to create a stack. In this example, it should be possible to restrict permissions to certain EC2 actions, but you don’t necessarily know which API calls CloudFormation performs for you.
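A minimal sketch of those three resources. The DeletionPolicy on the bucket is an assumption that matches the clean up steps (the bucket is removed manually after the stacks are deleted), and naming the repository after the stack is an assumption that matches the repository name cloudonaut used in the setup steps:

```yaml
Resources:
  ArtifactsBucket:
    # Assumption: keep the bucket when the stack is deleted; it is removed
    # manually during clean up.
    DeletionPolicy: Retain
    Type: 'AWS::S3::Bucket'
  CodeRepository:
    Type: 'AWS::CodeCommit::Repository'
    Properties:
      # Assumption: repository named after the stack (cloudonaut in the setup).
      RepositoryName: !Ref 'AWS::StackName'
  PipelineRole:
    Type: 'AWS::IAM::Role'
    Properties:
      # Both CodePipeline and CloudFormation assume this role.
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
        - Effect: Allow
          Principal:
            Service:
            - 'codepipeline.amazonaws.com'
            - 'cloudformation.amazonaws.com'
          Action: 'sts:AssumeRole'
      # Full permissions, because the exact API calls are hard to predict.
      ManagedPolicyArns:
      - 'arn:aws:iam::aws:policy/AdministratorAccess'
```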


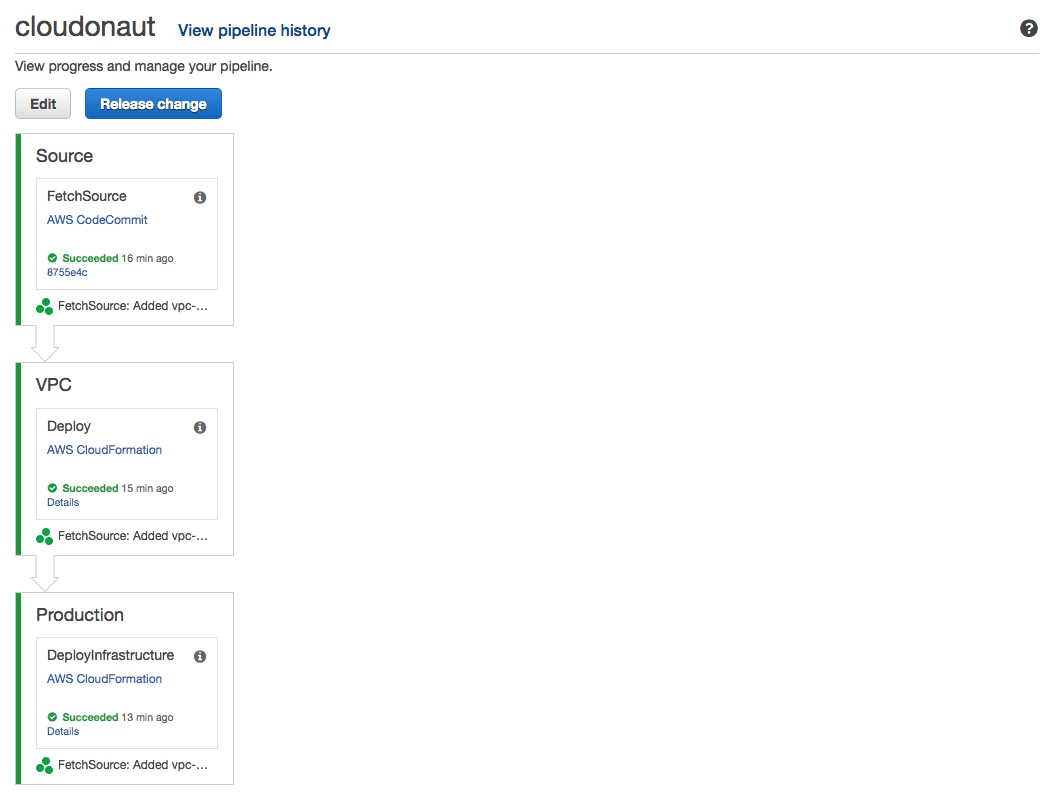

The pipeline itself can now be described. The following figure shows how the pipeline looks like.

In the first stage, the source code is fetched from the CodeCommit repository. After that, a VPC stack is created. The EC2 instance is later launched into the VPC that is created in the second stage. I reused the vpc-2azs.yaml template from our Free Templates for AWS CloudFormation collection to describe the VPC. That’s one of the big advantages of CloudFormation. Once you have a template, you can reuse it as often as you want.
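A sketch of the pipeline with its first two stages. The stage and action names are illustrative, and building the master branch is an assumption. OutputFileName is the CloudFormation action setting that writes the stack outputs to a JSON file in the output artifact:

```yaml
Resources:
  # [...] ArtifactsBucket, CodeRepository, PipelineRole
  Pipeline:
    Type: 'AWS::CodePipeline::Pipeline'
    Properties:
      ArtifactStore:
        Type: S3
        Location: !Ref ArtifactsBucket
      RoleArn: !GetAtt 'PipelineRole.Arn'
      Stages:
      - Name: Source
        Actions:
        - Name: FetchSource
          ActionTypeId:
            Category: Source
            Owner: AWS
            Provider: CodeCommit
            Version: '1'
          Configuration:
            RepositoryName: !GetAtt 'CodeRepository.Name'
            BranchName: master
            # Triggered by CloudWatch Events instead of polling.
            PollForSourceChanges: false
          OutputArtifacts:
          - Name: Source
      - Name: VPC
        Actions:
        - Name: DeployVPC
          ActionTypeId:
            Category: Deploy
            Owner: AWS
            Provider: CloudFormation
            Version: '1'
          Configuration:
            ActionMode: CREATE_UPDATE
            RoleArn: !GetAtt 'PipelineRole.Arn'
            StackName: !Sub '${AWS::StackName}-vpc'
            TemplatePath: 'Source::vpc-2azs.yaml'
            # Stack outputs end up in output.json inside the VPC artifact.
            OutputFileName: 'output.json'
          InputArtifacts:
          - Name: Source
          OutputArtifacts:
          - Name: VPC
```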


There is one interesting concept that I need to explain. In the VPC stage, the VPC stack is deployed. The CloudFormation stack outputs are stored in a file called output.json and this file is part of the VPC artifact. You can use the VPC artifact later to get access to the stack outputs from the VPC stack.

In the third stage, the template containing the EC2 instance is used to create the infrastructure stack. This time, you pass two input artifacts to the CloudFormation deploy action: Source, containing the source code from CodeCommit, and VPC, containing the VPC stack outputs.
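A sketch of the third stage as an additional entry in the pipeline’s Stages list. The stage and action names are hypothetical; TemplateConfiguration points at the infrastructure.json file in the Source artifact:

```yaml
# Third entry in the Stages list of the Pipeline resource.
- Name: Infrastructure
  Actions:
  - Name: DeployInfrastructure
    ActionTypeId:
      Category: Deploy
      Owner: AWS
      Provider: CloudFormation
      Version: '1'
    Configuration:
      ActionMode: CREATE_UPDATE
      RoleArn: !GetAtt 'PipelineRole.Arn'
      StackName: !Sub '${AWS::StackName}-infrastructure'
      TemplatePath: 'Source::infrastructure.yaml'
      # Parameter wiring is kept in infrastructure.json.
      TemplateConfiguration: 'Source::infrastructure.json'
    InputArtifacts:
    - Name: Source  # source code from CodeCommit
    - Name: VPC     # VPC stack outputs (output.json)
```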


The infrastructure.json file wires the value from output.json together with the parameter of the infrastructure.yaml template.
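The exact contents of infrastructure.json are not reproduced here. For comparison, CodePipeline also offers the Fn::GetParam parameter override function, which reads a key from a JSON file inside an input artifact and can be placed directly in the action’s ParameterOverrides setting. A sketch of that inline variant, assuming output.json contains a StackName key (that is, the VPC template exposes its stack name as an output):

```yaml
# Inline alternative: Configuration of the infrastructure deploy action.
Configuration:
  ActionMode: CREATE_UPDATE
  RoleArn: !GetAtt 'PipelineRole.Arn'
  StackName: !Sub '${AWS::StackName}-infrastructure'
  TemplatePath: 'Source::infrastructure.yaml'
  # Fn::GetParam: [input artifact name, JSON file in artifact, key in file]
  ParameterOverrides: '{"ParentVPCStack": {"Fn::GetParam": ["VPC", "output.json", "StackName"]}}'
```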


The pipeline is mostly done. One last thing is missing.

Listening to CloudWatch Events

Last but not least, you define a CloudWatch Events Rule to trigger the pipeline whenever the Parameter Store parameter changes. And as always, you need to give AWS (to be more precise, CloudWatch Events) permissions to execute the pipeline.
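A sketch of the role and the rule, assuming the pipeline should run when the parameter is created or updated; the rule name ParameterStorePipelineTriggerRule is hypothetical. The pipeline ARN is built from the pipeline name, which is what Ref returns for an AWS::CodePipeline::Pipeline resource:

```yaml
Resources:
  PipelineTriggerRole:
    Type: 'AWS::IAM::Role'
    Properties:
      # CloudWatch Events assumes this role to start the pipeline.
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
        - Effect: Allow
          Principal:
            Service: 'events.amazonaws.com'
          Action: 'sts:AssumeRole'
      Policies:
      - PolicyName: 'start-pipeline'
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
          - Effect: Allow
            Action: 'codepipeline:StartPipelineExecution'
            Resource: !Sub 'arn:aws:codepipeline:${AWS::Region}:${AWS::AccountId}:${Pipeline}'
  ParameterStorePipelineTriggerRule:
    Type: 'AWS::Events::Rule'
    Properties:
      # Matches changes to the one parameter the template depends on.
      EventPattern:
        source:
        - 'aws.ssm'
        detail-type:
        - 'Parameter Store Change'
        detail:
          name:
          - '/application/stage/instancetype'
          operation:
          - Create
          - Update
      State: ENABLED
      Targets:
      - Arn: !Sub 'arn:aws:codepipeline:${AWS::Region}:${AWS::AccountId}:${Pipeline}'
        RoleArn: !GetAtt 'PipelineTriggerRole.Arn'
        Id: pipeline
```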


There is one more thing to cover when talking about CloudWatch Events: CodeCommit also publishes an event when the repository changes. An event rule can therefore execute the pipeline whenever the source code in the repository changes.
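A sketch of CodeCommitPipelineTriggerRule, assuming the pipeline builds the master branch and reusing PipelineTriggerRole to start the pipeline. The pattern matches CodeCommit Repository State Change events for that branch:

```yaml
Resources:
  CodeCommitPipelineTriggerRule:
    Type: 'AWS::Events::Rule'
    Properties:
      EventPattern:
        source:
        - 'aws.codecommit'
        detail-type:
        - 'CodeCommit Repository State Change'
        resources:
        - !GetAtt 'CodeRepository.Arn'
        # Fire when the master branch is created or receives new commits.
        detail:
          event:
          - referenceCreated
          - referenceUpdated
          referenceType:
          - branch
          referenceName:
          - master
      State: ENABLED
      Targets:
      - Arn: !Sub 'arn:aws:codepipeline:${AWS::Region}:${AWS::AccountId}:${Pipeline}'
        RoleArn: !GetAtt 'PipelineTriggerRole.Arn'
        Id: pipeline
```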


You can download the full pipeline.yaml template on GitHub.

Setup instructions

Have you installed and configured the AWS CLI?

- Clone the example repository or download the ZIP file:

git clone https://github.com/widdix/parameter-store-cloudformation-codepipeline.git
cd parameter-store-cloudformation-codepipeline/

- Create the parameter in Parameter Store:

aws ssm put-parameter --name '/application/stage/instancetype' --value 't2.micro' --type String

- Create the pipeline stack with CloudFormation:

aws cloudformation create-stack --stack-name cloudonaut --template-body file://pipeline.yaml --capabilities CAPABILITY_IAM

- Wait until the CloudFormation stack is created:

aws cloudformation wait stack-create-complete --stack-name cloudonaut

- Push the files to the CodeCommit repository created by the pipeline stack (I don’t use git push here to skip the git configuration):

COMMIT_ID="$(aws codecommit put-file --repository-name cloudonaut --branch-name master --file-content file://infrastructure.yaml --file-path infrastructure.yaml --query commitId --output text)"
COMMIT_ID="$(aws codecommit put-file --repository-name cloudonaut --branch-name master --parent-commit-id $COMMIT_ID --file-content file://infrastructure.json --file-path infrastructure.json --query commitId --output text)"
COMMIT_ID="$(aws codecommit put-file --repository-name cloudonaut --branch-name master --parent-commit-id $COMMIT_ID --file-content file://vpc-2azs.yaml --file-path vpc-2azs.yaml --query commitId --output text)"

- Wait until the first pipeline run is finished:

open 'https://console.aws.amazon.com/codepipeline/home#/view/cloudonaut'

- Visit the website exposed by the EC2 instance:

open "http://$(aws cloudformation describe-stacks --stack-name cloudonaut-infrastructure --query "Stacks[0].Outputs[0].OutputValue" --output text)"

- Update the parameter value (t2.nano is outside the Free Tier, expect charges of a few cents):

aws ssm put-parameter --name '/application/stage/instancetype' --value 't2.nano' --type String --overwrite

- Wait until the second pipeline run is finished:

open 'https://console.aws.amazon.com/codepipeline/home#/view/cloudonaut'

- Visit the website exposed by the EC2 instance again:

open "http://$(aws cloudformation describe-stacks --stack-name cloudonaut-infrastructure --query "Stacks[0].Outputs[0].OutputValue" --output text)"

Clean up instructions

- Remove the CloudFormation stacks:

aws cloudformation delete-stack --stack-name cloudonaut-infrastructure
aws cloudformation wait stack-delete-complete --stack-name cloudonaut-infrastructure
aws cloudformation delete-stack --stack-name cloudonaut-vpc
aws cloudformation wait stack-delete-complete --stack-name cloudonaut-vpc
aws cloudformation delete-stack --stack-name cloudonaut
aws cloudformation wait stack-delete-complete --stack-name cloudonaut

- Remove the S3 bucket prefixed with cloudonaut-artifactsbucket- including all files:

open "https://s3.console.aws.amazon.com/s3/home"

- Remove the Parameter Store parameter:

aws ssm delete-parameter --name '/application/stage/instancetype'

Summary

I like to combine the ease of Parameter Store with the benefits of CI/CD. Parameter Store becomes a graphical user interface for configuring your infrastructure. This is very handy if you work in a team where not everyone is familiar with the concepts of CI/CD. Introducing Parameter Store to your team simplifies things: you can use a CI/CD approach to deploy infrastructure while the team can still use a graphical user interface to control some parameters without bypassing the CI/CD pipeline. A few examples of parameters that I used in the past:

- The size of an Auto Scaling group, which allows you to manually start or shut down a fleet of EC2 instances through the pipeline

- The storage of your RDS database instance

- The number of nodes in an Elasticsearch cluster

- The instance type of EC2 instances managed by an Auto Scaling group

By the way, Parameter Store keeps a record of all changes to a parameter, which is handy if you need to track changes to your infrastructure.

Unfortunately, CloudFormation parameters cannot read encrypted (SecureString) values from Parameter Store, which would be awesome for managing secrets such as database passwords.
