Protect AWS SDK calls with Bulkheads and Circuit Breakers

If you use one of the AWS SDKs to call the AWS API, you need to prepare for network unreliability. One of the AWS services that requires heavy SDK usage is DynamoDB (a NoSQL database as a service). I will use DynamoDB as an example in this post, but the same applies to other AWS services, like S3 and SQS, that are used via SDKs.

Motivation

Calls to AWS services can be slow for various reasons:

- The network can be slow.

- The AWS service can be slow.

- Your virtual machine can be slow.

Whatever the reason is, your users will experience longer loading times. If things go wrong, the slowness of one service can propagate through your system: every caller that waits holds on to resources (e.g. connections from a pool) until those resources are exhausted further up the call chain. In the end, your whole system can become slow just because one call to the AWS API is slow. This is especially important if you run a microservices architecture.

Literature

In his book Release it!, Michael T. Nygard mentions effective techniques to increase the stability of a system that makes calls to other services:

- Timeouts

- Circuit Breakers

- Bulkheads

You can use these techniques to protect your service while talking to other services that are not well-behaved.

Are AWS services well-behaved?

Yes, they are! I want to highlight that AWS services provide very high uptime and low latency. The protection that we are going to create is not needed most of the time. Nevertheless, if you use DynamoDB you can run into a situation where DynamoDB throttles your requests: it rejects them, and the SDK retries them after a backoff delay. This happens if your reads or writes exceed the provisioned capacity of the table. In this situation SDK calls will be slower than usual.

Timeouts

If your appointment doesn’t show up, you will sooner or later stop waiting and do something else. The problem with computers is that they don’t get bored when they wait. If you tell them to wait forever, they are fine with that. But the user who wants to interact with your application is a human and gets bored pretty soon.

Depending on the SDK you use you can set different timeouts. The Java SDK exposes four timeouts that you can define:

- The connection timeout determines the time to wait when initially establishing a connection before giving up and timing out.

- The socket timeout defines the time to wait for data to be transferred over an established, open connection before the connection times out and is closed.

- The request timeout defines the time to wait for the single request to complete before giving up and timing out.

- The client execution timeout defines the time to allow the client to complete the execution of an API call. This could include multiple requests in case of retries.

Let’s have a look at the defaults:

- connection timeout: 50 seconds

- socket timeout: 50 seconds

- request timeout: disabled

- client execution timeout: disabled

For DynamoDB these timeouts are much too high for simple GetItem, Query or PutItem operations. No user will accept a page that loads for 50 seconds. Also keep in mind that while you wait for something, you occupy resources that can’t be used for other requests.

I propose the following timeouts for DynamoDB:

final ClientConfiguration cc = new ClientConfiguration();

Now you will get a timeout after 2 seconds in total, if the connection cannot be established within 500 milliseconds, or if the connection does not transfer data within 1 second. Depending on your use case, 2 seconds may still be too long.
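
The idea behind an overall timeout can be sketched without the SDK. The following is only a minimal illustration using the Java standard library, not the SDK's own mechanism: `TimeBoxedCall` and `callWithTimeout` are hypothetical names, and the slow task stands in for a slow SDK call. The caller gets control back after the deadline and the worker is interrupted so that it stops occupying a thread.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical helper (not part of the AWS SDK): bounds how long a caller waits.
final class TimeBoxedCall {
    private TimeBoxedCall() {}

    static <T> T callWithTimeout(ExecutorService pool, Callable<T> task, long timeoutMillis)
            throws ExecutionException, InterruptedException, TimeoutException {
        Future<T> future = pool.submit(task);
        try {
            // Wait at most timeoutMillis for the result.
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            // Interrupt the worker so the slow call stops holding a thread.
            future.cancel(true);
            throw e;
        }
    }
}
```

A caller would pass the slow operation as a `Callable` and treat a `TimeoutException` as a signal to show an error or a fallback instead of keeping the user waiting.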

Bulkheads

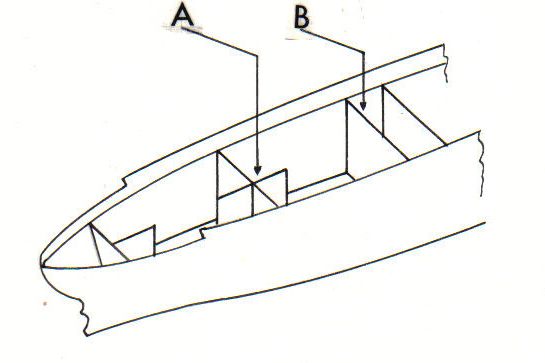

The idea of a bulkhead is that, by separating the parts of the ship/system, you don’t lose the whole ship/system if something goes wrong in one part.

Translated to our DynamoDB AWS SDK, there is one thing that is shared and should be separated more wisely: the connection pool. By default a client can make 50 connections in parallel.

Have a look at the following example:

final ClientConfiguration cc = new ClientConfiguration();

What’s the problem? Let’s go back to the scenario where one of the tables (e.g. table1) runs out of capacity. DynamoDB will throttle requests to table1. If a request takes longer, it also blocks a connection for longer, so the overall throughput is reduced. The problem is that this also affects requests to table2. While all 50 connections are busy waiting for slowed-down answers from table1, your application cannot talk to table2 either. Increasing the maximum connection limit doesn’t help; it just takes a bit longer until you run out of connections. But what about creating an AmazonDynamoDBClient for every table? You get two separate connection pools, which is exactly what we need for a bulkhead.

final ClientConfiguration cc = new ClientConfiguration();

In the above code example it is very explicit which client is used for which requests. This gets a bit more tricky if you use dependency injection. Make sure that you understand which connection pool is used for which requests.
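
To make the isolation effect visible without the SDK, here is a minimal sketch that swaps the per-client connection pool for a counting semaphore per table. `TableBulkheads` and its methods are hypothetical names, and the slot count of 2 in the usage below is an arbitrary assumption. Each table gets its own fixed number of slots, so exhausting the slots of table1 leaves table2 untouched.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Semaphore;

// Hypothetical stand-in for "one connection pool per table".
final class TableBulkheads {
    private final int slotsPerTable;
    private final Map<String, Semaphore> slots = new ConcurrentHashMap<>();

    TableBulkheads(int slotsPerTable) {
        if (slotsPerTable < 1) {
            throw new IllegalArgumentException("slotsPerTable must be at least 1");
        }
        this.slotsPerTable = slotsPerTable;
    }

    // Lazily creates a separate compartment for every table name.
    private Semaphore slotsFor(String table) {
        return slots.computeIfAbsent(table, t -> new Semaphore(slotsPerTable));
    }

    // Returns false immediately when the table's compartment is full.
    boolean tryAcquire(String table) {
        return slotsFor(table).tryAcquire();
    }

    // Must be called exactly once for every successful tryAcquire.
    void release(String table) {
        slotsFor(table).release();
    }

    int available(String table) {
        return slotsFor(table).availablePermits();
    }
}
```

With `new TableBulkheads(2)`, two in-flight requests to table1 use up its compartment and a third `tryAcquire("table1")` fails fast, while `tryAcquire("table2")` still succeeds.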

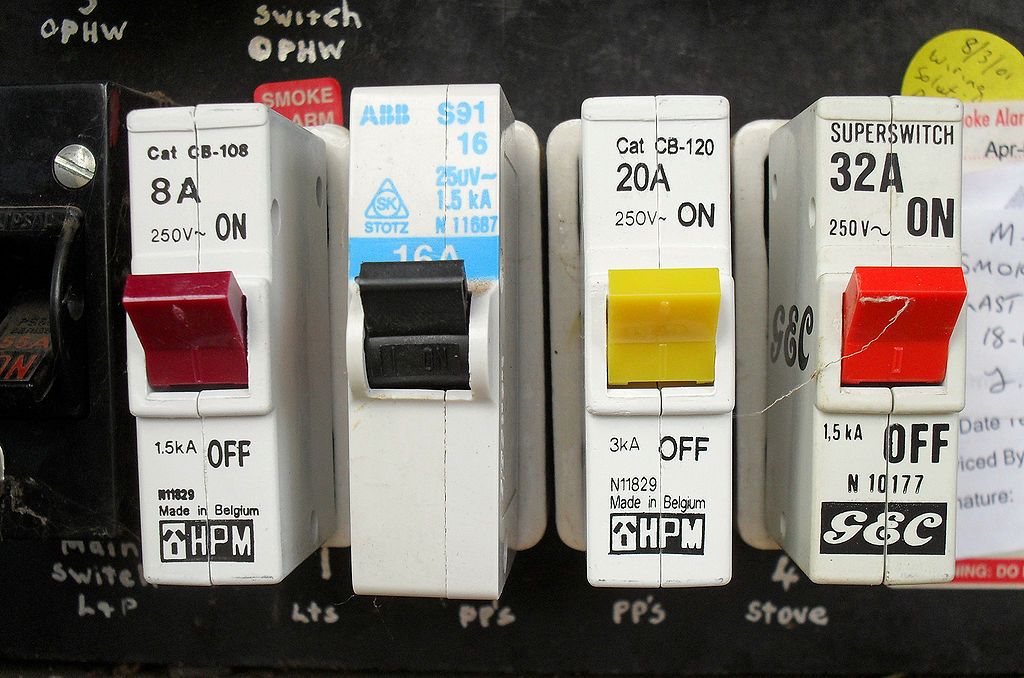

Circuit Breakers

An electrical circuit breaker cuts the circuit if the current reaches a threshold that is not reached under normal conditions. To prevent something from burning, the circuit breaker cuts the circuit and may eventually save your life.

Translated to the software world a circuit breaker can cut the normal path of your software when:

- runtime exceeds a threshold

- error rate exceeds a threshold

- resource consumption exceeds a threshold

Instead of stopping everything when the circuit is interrupted, a software circuit breaker can fall back to another code path. After some time, the software circuit breaker sends a few requests down the normal path to see if it is working again. If it is, the breaker switches back to the normal path.
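
The state machine described above can be sketched in a few lines. This is a simplified illustration and not how Hystrix is implemented: `SimpleCircuitBreaker` is a hypothetical class, it trips on a count of consecutive failures instead of an error rate, and the clock is injected so the behaviour can be exercised without waiting.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Hypothetical, simplified circuit breaker: CLOSED -> OPEN -> HALF_OPEN -> CLOSED.
final class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold;   // consecutive failures before opening
    private final long openMillis;        // how long to stay open before probing
    private final LongSupplier clock;     // returns the current time in milliseconds
    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAt = 0L;

    SimpleCircuitBreaker(int failureThreshold, long openMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    synchronized State state() {
        // After the open period has elapsed, allow trial calls on the normal path.
        if (state == State.OPEN && clock.getAsLong() - openedAt >= openMillis) {
            state = State.HALF_OPEN;
        }
        return state;
    }

    <T> T call(Supplier<T> normalPath, Supplier<T> fallback) {
        if (state() == State.OPEN) {
            return fallback.get();        // circuit is cut: skip the normal path
        }
        try {
            T result = normalPath.get();
            onSuccess();
            return result;
        } catch (RuntimeException e) {
            onFailure();
            return fallback.get();
        }
    }

    private synchronized void onSuccess() {
        consecutiveFailures = 0;
        state = State.CLOSED;
    }

    private synchronized void onFailure() {
        consecutiveFailures++;
        // A failed trial call, or too many failures in a row, opens the circuit.
        if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
            state = State.OPEN;
            openedAt = clock.getAsLong();
        }
    }
}
```

In production code the clock would typically be `System::currentTimeMillis`, and the fallback could return cached or static data as discussed further down.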

Translated to our DynamoDB AWS SDK, it makes sense to protect against high latencies during throttling by falling back to another code path. DynamoDB will not only throttle our requests if we exceed capacity; if we make many more requests than we should, it will also start returning errors. Luckily, Netflix implemented a software circuit breaker called Hystrix. The following example demonstrates how you can use Hystrix and the DynamoDB AWS SDK together.

To implement a circuit breaker you need to:

- extend HystrixCommand

- implement the run() method

The run() method represents the normal path of your application. As long as run() doesn’t take too long (2000 ms as specified) and doesn’t throw exceptions too often (50 % of the time by default), it will be invoked when you call execute() on your HystrixCommand.

public static class GetItemCommand extends HystrixCommand<Item> {

final ClientConfiguration cc = new ClientConfiguration();

If you would like to provide a fallback implementation, you can also override the getFallback() method. In some cases you could fall back to simply retrying the request and hoping for lower latency this time, to querying an in-memory cache, or to returning static data. You could also disable a feature entirely (e.g. not display recommendations to your users) but continue to display a details page. Read more about Hystrix if you are interested.

Summary

- If you use the DynamoDB AWS SDK for Java it is highly recommended that you lower timeouts.

- To prevent one throttled DynamoDB table from affecting other parts of your application, I recommend that you create an AmazonDynamoDBClient per table.

- In case of failures like capacity exceeded exceptions or many exceeded timeouts, a circuit breaker like Hystrix will cut the normal path and switch to another path, so that no further requests are sent at all.
