Serverless in the Enterprise

Is Serverless ready for the Enterprise? Recently, I coached developers at a large company who were building their first Serverless applications. In this post, I want to share my learnings and observations with you.

Why?

Going Serverless enables you to build scalable and fault-tolerant systems on AWS. The additional layer of abstraction on top of Infrastructure as a Service (IaaS) solves the following challenges out of the box.

- Avoid idle resources and scale within minutes based on demand.

- Deploy changes without downtime or effects on in-flight requests.

- Provision every part of your cloud infrastructure in an automated way.

When?

In my experience, going the Serverless route is most valuable in scenarios with the following characteristics.

- The system is business-critical but used by fewer than 100 users.

- The system is not business-critical but processes a very high number of requests with unpredictable load peaks.

- Users access the system via the Internet.

- A single developer or small team is responsible for the system.

- The developers are familiar with Serverless or eager to learn about it.

People

In a large organization, the idea of a Serverless architecture will probably not go down well at first. Most of the objections raised will be pseudo-arguments. Sooner or later, you will notice that this is a people problem.

Find a small project and a small team of highly motivated people to build the first Serverless application within your organization. Get support from management. Deliver a solid solution. Everything else will follow.

Skills

Building a Serverless application requires skills that are most likely missing in your organization.

Here is a list of what you need to know to get started.

- Identity and Access Management (IAM)

- Lambda

- S3

- DynamoDB

- API Gateway

- CDK, CloudFormation, or Serverless Framework

- Aurora Serverless

- AWS SDK

Starting from zero is hard. Invest in training or hire experts. Skills are key to success!

Compute

Lambda is the obvious choice for the compute layer of a Serverless application. However, Lambda comes with a few limitations.

- Compute, memory, and networking resources are limited.

- Special requirements regarding CPU, GPU, or memory can be met only with difficulty or not at all.

- A function invocation times out after at most 15 minutes. Therefore, you need to slice your problem into chunks that Lambda can process in less than 15 minutes, even the outliers.
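
One common way to stay under the timeout is to let each invocation process items until the remaining time gets low and then hand back a cursor, so that a follow-up invocation (for example, driven by an orchestrator such as AWS Step Functions) can continue. Here is a minimal sketch under these assumptions: `context.get_remaining_time_in_millis()` is the method the Lambda Python runtime provides on the context object, while the event shape (`items`, `cursor`), `process_item`, the 30-second safety margin, and the `FakeContext` helper for local runs are hypothetical.

```python
from typing import Any, Iterable

# Hypothetical safety margin: stop starting new items once less than 30 s remain.
SAFETY_MARGIN_MS = 30_000


def process_item(item: Any) -> None:
    """Hypothetical placeholder for the real per-item work."""


def handler(event: dict, context: Any) -> dict:
    """Process event["items"] starting at event["cursor"] until time runs short."""
    items = event["items"]
    cursor = event.get("cursor", 0)
    while cursor < len(items):
        # The Lambda Python runtime provides the remaining time on the context object.
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            # Hand back the position reached so another invocation can resume from here.
            return {"done": False, "cursor": cursor}
        process_item(items[cursor])
        cursor += 1
    return {"done": True, "cursor": cursor}


class FakeContext:
    """Local stand-in for the Lambda context that replays predefined remaining times."""

    def __init__(self, remaining_ms: Iterable[int]) -> None:
        self._remaining = iter(remaining_ms)

    def get_remaining_time_in_millis(self) -> int:
        return next(self._remaining)
```

For example, `handler({"items": ["a", "b", "c"]}, FakeContext([60_000, 60_000, 10_000]))` returns `{"done": False, "cursor": 2}`: two items fit into the budget, and the third one is left for the next invocation.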

Another limitation of Lambda is that running your code outside of the cloud is a challenge. Therefore, you need to invest in some tooling to increase the efficiency of the development workflow.
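
A lightweight first step is to keep handlers free of hidden dependencies so that you can call them directly on your machine with a hand-written event. The sketch below assumes an API Gateway REST API with Lambda proxy integration: the event keys (`httpMethod`, `path`, `pathParameters`, `body`) and the response keys (`statusCode`, `headers`, `body`) follow that integration's format, while the `get_order` handler and the sample event are hypothetical.

```python
import json
from typing import Any, Optional


def _json_response(status_code: int, payload: dict) -> dict:
    """Build a response in the format the Lambda proxy integration expects."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),  # the body must be a string
    }


def get_order(event: dict, context: Optional[Any]) -> dict:
    """Hypothetical handler for GET /orders/{orderId}."""
    # pathParameters is null in the proxy event when no path parameters are present.
    order_id = (event.get("pathParameters") or {}).get("orderId")
    if not order_id:
        return _json_response(400, {"message": "orderId is required"})
    # A real handler would load the order from a database here.
    return _json_response(200, {"orderId": order_id})


if __name__ == "__main__":
    # Invoke the handler locally with a hand-written event; no AWS account required.
    sample_event = {"httpMethod": "GET", "path": "/orders/42",
                    "pathParameters": {"orderId": "42"}, "body": None}
    print(get_order(sample_event, None))
```

Tools such as the AWS SAM CLI (`sam local invoke`) go a step further by running handlers in a container that emulates the Lambda runtime.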

An alternative is Fargate. With Fargate, running containers in the cloud becomes a no-brainer. All you need to do is bundle your application into a container image and tell AWS to run that image for you. That simplifies the development workflow a lot and is also closer to the on-premises programming model.

Some say Fargate is not 100% Serverless. But who cares about those theoretical discussions? The main differences are that we pay for the time our containers are running instead of paying per request as with Lambda, and that we need to configure auto-scaling ourselves.
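
To give an idea of what configuring auto-scaling yourself involves: with target tracking, you pick a metric and a target value, for example an average CPU utilization of 50%, and the scaling policy adjusts the number of running tasks to keep the metric near that target. The following is a simplified model of that idea, not the exact algorithm AWS uses; the function name, the target, and the minimum and maximum task counts are hypothetical parameters.

```python
import math


def desired_task_count(current_tasks: int, avg_cpu_percent: float,
                       target_cpu_percent: float = 50.0,
                       min_tasks: int = 1, max_tasks: int = 10) -> int:
    """Simplified target tracking: scale the task count by metric / target."""
    if current_tasks <= 0:
        return min_tasks
    # If tasks run at twice the target utilization, roughly twice as many tasks are needed.
    raw = math.ceil(current_tasks * avg_cpu_percent / target_cpu_percent)
    # Clamp to the configured bounds, like the min/max capacity of a scalable target.
    return max(min_tasks, min(max_tasks, raw))
```

In ECS, this corresponds to registering the service as a scalable target with Application Auto Scaling and attaching a target tracking policy, for example on the predefined `ECSServiceAverageCPUUtilization` metric. The real service additionally applies scale-out and scale-in cooldown periods.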

Fargate is easier to handle from a programmer’s perspective because the application runs as a long-running process. This gives more flexibility and is closer to what developers and architects are already used to.

Want to learn more about the differences between Lambda and Fargate? Check out Containers vs. Serverless: Thoughts About Your Cloud Strategy.

Lambda is the obvious choice. But consider Fargate (ECS) as well to decrease complexity for developers.

Network

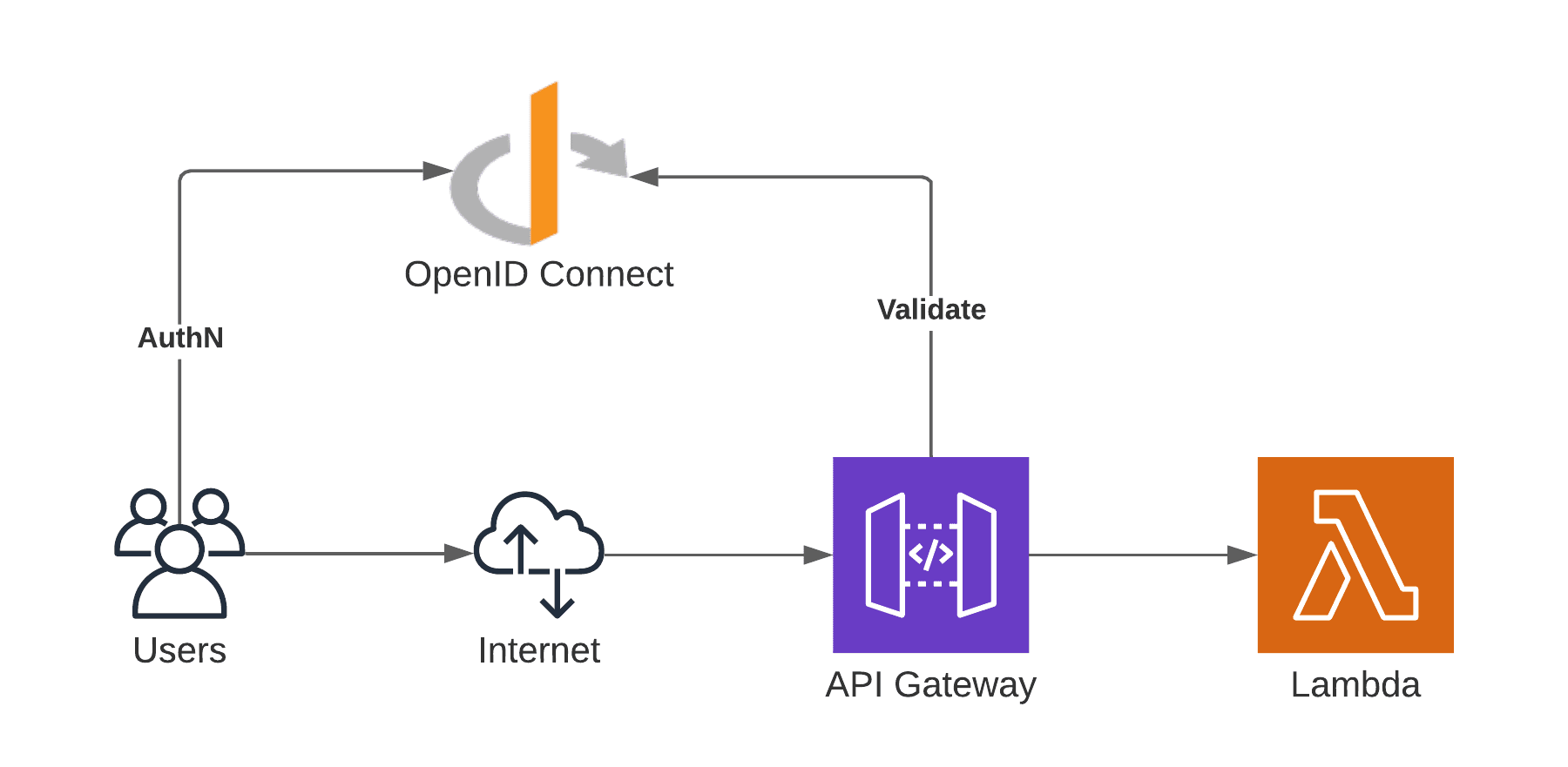

The network disappears under another layer of abstraction. The Serverless infrastructure handles authentication, authorization, and throttling.
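
Throttling is a good example of what the infrastructure takes off your plate. The API Gateway documentation describes its throttling as based on the token bucket algorithm: a bucket holds up to a burst number of tokens, refills at a steady rate, and each request consumes one token or is rejected with HTTP 429. The sketch below shows that idea in isolation; the class name and parameters are illustrative, and the clock is passed in explicitly to keep it deterministic.

```python
class TokenBucket:
    """Minimal token bucket: refills `rate` tokens per second, stores at most `burst`."""

    def __init__(self, rate: float, burst: int, now: float = 0.0) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # start full so an initial burst is allowed
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill proportionally to the elapsed time, capped at the burst size.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # the caller would answer with HTTP 429 Too Many Requests
```

With `TokenBucket(rate=1.0, burst=2)`, two requests at the same instant pass, a third is rejected, and one more request is allowed after a second has elapsed.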

The Serverless offerings from AWS are web services par excellence. All of them provide an Internet-facing API. You should follow the same approach when building your own Serverless applications. The days of the Intranet are numbered.

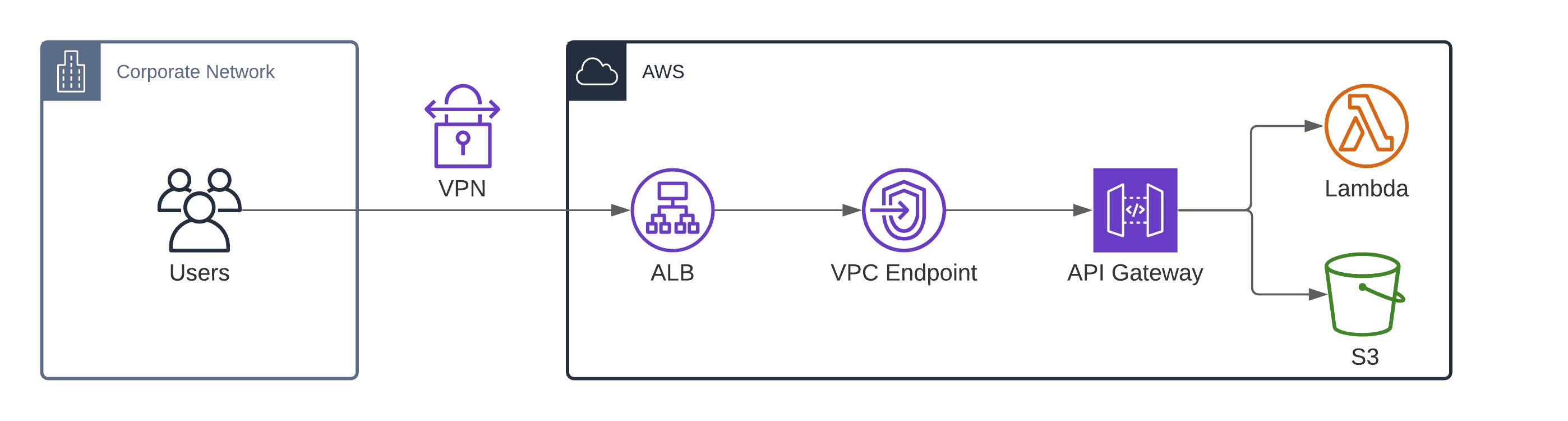

Yes, Serverless also works for hybrid cloud scenarios. But the complexity increases enormously. Check out Serverless Hybrid Cloud: Accessing an API Gateway via VPN or Direct Connect to learn more.

Even though it may feel unfamiliar, build Internet-facing applications.

Database

The most popular database choices are Aurora Serverless (SQL) and DynamoDB (NoSQL).

Aurora Serverless provides a relational database that scales the compute and storage layers automatically. As Aurora Serverless comes with a PostgreSQL- or MySQL-compatible interface, it is still similar to what engineers are used to from an on-premises environment.

Note that Aurora Serverless comes with a few limitations. We are all waiting for the next generation that AWS announced in December 2020 to become generally available.

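Handling database connections from a Lambda function is tricky: every concurrently running execution environment may open its own connection, which can exhaust the database’s connection limit. The Data API mitigates the problem by providing an HTTP API for sending SQL queries to the database, so your functions do not need to manage database connections themselves.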
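
With the Data API, each query is a single HTTP request that carries the SQL statement plus typed parameters. In Python, you would typically call `execute_statement` on a boto3 `rds-data` client with `resourceArn`, `secretArn`, `database`, `sql`, and `parameters`. The sketch below only builds the `parameters` list, mapping Python values to the Data API's typed fields (`stringValue`, `longValue`, `doubleValue`, `booleanValue`, `isNull`). The helper name, the query, and the database name are hypothetical, and the boto3 call stays in a comment because it needs credentials and an actual cluster.

```python
from typing import Any


def to_sql_parameters(values: dict[str, Any]) -> list[dict]:
    """Convert named Python values into the Data API's typed parameter format."""
    parameters = []
    for name, value in values.items():
        if value is None:
            field = {"isNull": True}
        elif isinstance(value, bool):  # check bool before int: bool is a subclass of int
            field = {"booleanValue": value}
        elif isinstance(value, int):
            field = {"longValue": value}
        elif isinstance(value, float):
            field = {"doubleValue": value}
        elif isinstance(value, str):
            field = {"stringValue": value}
        else:
            raise TypeError(f"unsupported type for parameter {name!r}: {type(value).__name__}")
        parameters.append({"name": name, "value": field})
    return parameters


# Hypothetical query; the named placeholders match the parameter names.
SQL = "SELECT id, email FROM customers WHERE id = :customer_id AND active = :active"
params = to_sql_parameters({"customer_id": 42, "active": True})

# With credentials and a cluster, the call would look roughly like this (ARNs elided):
# import boto3
# client = boto3.client("rds-data")
# client.execute_statement(resourceArn="arn:aws:rds:...", secretArn="arn:aws:secretsmanager:...",
#                          database="app", sql=SQL, parameters=params)
```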

DynamoDB scales horizontally, not vertically as Aurora Serverless does. Therefore, DynamoDB is the database of choice for workloads with high throughput. Also, in on-demand capacity mode, DynamoDB bills per request, which reduces idle resources to a minimum.
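
Per-request billing is easy to reason about with a bit of arithmetic. In on-demand mode, a standard write of an item up to 1 KB costs one write request unit, and a strongly consistent read of an item up to 4 KB costs one read request unit; larger items consume additional units, eventually consistent reads cost half, and transactional requests cost double. The helpers below encode those rules as a sketch (function names are illustrative, and 1 KB is taken as 1,024 bytes); check the DynamoDB documentation for current details.

```python
import math


def write_request_units(item_size_bytes: int, transactional: bool = False) -> int:
    """One unit per started 1 KB of item size; transactional writes cost double."""
    units = max(1, math.ceil(item_size_bytes / 1024))
    return units * 2 if transactional else units


def read_request_units(item_size_bytes: int, consistency: str = "strong") -> float:
    """One unit per started 4 KB for a strongly consistent read.

    Eventually consistent reads cost half, transactional reads double.
    """
    units = max(1, math.ceil(item_size_bytes / 4096))
    factor = {"eventual": 0.5, "strong": 1.0, "transactional": 2.0}[consistency]
    return units * factor
```

For example, writing a 3,000-byte item costs three write request units, and reading a 9,000-byte item with eventual consistency costs 1.5 read request units.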

However, DynamoDB is different from a typical SQL database. Architects and developers will need some time to get their heads around DynamoDB’s quirks. Also, DynamoDB requires a well-defined data schema (primary keys and access patterns) upfront. Modifying the data schema and access patterns later may result in significant conversion work.
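
To illustrate why access patterns need to be known upfront: in DynamoDB, you typically encode them in the partition key and sort key. The sketch below assumes a hypothetical single-table layout in which orders are stored under the customer's partition key with a sort key of `ORDER#<date>#<id>`, so the question "orders of customer X in January 2021" becomes a key condition (partition key equals a value, sort key begins with a prefix). A plain dictionary stands in for the table; all key formats and function names are assumptions for illustration.

```python
from collections import defaultdict

# In-memory stand-in for a DynamoDB table: partition key -> {sort key -> item}.
table: defaultdict = defaultdict(dict)


def put_order(customer_id: str, order_date: str, order_id: str, total: int) -> None:
    pk = f"CUSTOMER#{customer_id}"
    sk = f"ORDER#{order_date}#{order_id}"  # ISO dates sort chronologically as strings
    table[pk][sk] = {"pk": pk, "sk": sk, "total": total}


def query_orders(customer_id: str, date_prefix: str = "") -> list[dict]:
    """Mimics a key condition: pk = :pk AND begins_with(sk, :prefix)."""
    partition = table.get(f"CUSTOMER#{customer_id}", {})
    prefix = f"ORDER#{date_prefix}"
    return [partition[sk] for sk in sorted(partition) if sk.startswith(prefix)]
```

An access pattern the keys were not designed for, such as all orders above a certain total across all customers, cannot be served by such a query. It requires a secondary index or a full scan, and introducing a new key design later means rewriting existing items.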

Whenever the data schema and query patterns are straightforward, go with DynamoDB. However, Aurora Serverless is a good alternative in scenarios where you do not know the data structures upfront or where query patterns are complex.

API Gateway

I’ve already compared the different types of API Gateway that AWS offers.

In my opinion, the API Gateway REST API is the best fit for the Enterprise for the following reasons.

- High service maturity; the service does not change quickly.

- Wide range of authentication integrations, including the option to build a custom authorizer.

- Supports tenant-based throttling (via usage plans) and AWS WAF.

- Supports private endpoints that are accessible only from within your VPC.
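
A custom authorizer (called a Lambda authorizer in the AWS documentation) is a function that receives the caller's token and returns an IAM policy that allows or denies `execute-api:Invoke` on the requested method. The sketch below follows the documented format of a TOKEN authorizer: the input fields `authorizationToken` and `methodArn`, and an output with `principalId`, `policyDocument`, and an optional `context`. The hard-coded token table and the tenant names are hypothetical; a real authorizer would validate something like a signed JWT instead.

```python
import hmac
from typing import Optional

# Hypothetical token-to-tenant mapping; a real authorizer would verify a signed token.
KNOWN_TOKENS = {"token-for-tenant-a": "tenant-a", "token-for-tenant-b": "tenant-b"}


def build_policy(principal_id: str, effect: str, method_arn: str,
                 context: Optional[dict] = None) -> dict:
    policy = {
        "principalId": principal_id,
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [{"Action": "execute-api:Invoke", "Effect": effect, "Resource": method_arn}],
        },
    }
    if context:
        # Key-value pairs in `context` are passed on to the backend integration.
        policy["context"] = context
    return policy


def authorizer_handler(event: dict, context: object) -> dict:
    token = event.get("authorizationToken", "")
    for known_token, tenant in KNOWN_TOKENS.items():
        # Constant-time comparison avoids leaking information through timing differences.
        if hmac.compare_digest(token.encode(), known_token.encode()):
            return build_policy(tenant, "Allow", event["methodArn"], {"tenant": tenant})
    return build_policy("anonymous", "Deny", event["methodArn"])
```

If the API's key source is set to `AUTHORIZER`, the authorizer can additionally return a `usageIdentifierKey` that associates the caller with a usage plan, which is one way to implement tenant-based throttling.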

In general, the API Gateway REST API is a good fit for Serverless in the Enterprise.

Distributed System

In the good old days, the application and database ran on one machine. Maybe on two different machines. With Serverless, you are entering the world of highly distributed systems. Provisioning and maintaining the Serverless application’s infrastructure is simple, but writing the code becomes much more complex.

- There is no guarantee that Lambda executes your code only once. The same is true for delivering messages.

- In most cases, there is no guarantee that messages are delivered in the proper order.

- Data modifications might be eventually consistent, so your application might read stale data. For example, reads from DynamoDB are eventually consistent by default.
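
A common way to plan for duplicates and reordering is to make consumers idempotent and to attach a version number to each update, so the consumer can skip messages it has already processed and ignore updates older than the state it holds. The sketch below keeps processed message IDs and current versions in memory. In a real system, they would live in durable storage, with the check and the update performed atomically, for example through a conditional write to DynamoDB. The message fields (`id`, `key`, `version`, `value`) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Consumer:
    """Applies each message at most once and never lets an older version win."""
    processed_ids: set = field(default_factory=set)
    state: dict = field(default_factory=dict)  # key -> (version, value)

    def handle(self, message: dict) -> bool:
        if message["id"] in self.processed_ids:
            return False  # duplicate delivery or retried execution: nothing to do
        self.processed_ids.add(message["id"])
        key, version = message["key"], message["version"]
        current = self.state.get(key)
        if current is not None and current[0] >= version:
            return False  # out-of-order delivery: a newer version was already applied
        self.state[key] = (version, message["value"])
        return True
```

If version 3 of an order arrives before version 2, the consumer keeps version 3 and drops the late message, so the final state does not depend on the delivery order.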

You need to plan for all of that. And it’s not always simple to do so.

Add a general understanding of distributed systems to your training and hiring goals for developers and architects.

Summary

I see more and more large companies adopting Serverless architectures. The gap between a typical on-premises infrastructure and Serverless is huge. Therefore, it will take time, energy, and money to cross the chasm. However, all the projects I’ve observed turned out to be great successes and delivered value to the company. It is worth it!