Serverless: Invalidating a DynamoDB Cache

A cache in front of DynamoDB boosts performance and saves costs, especially for read-intensive and spiky workloads. Why? Have a look at one of my recent articles: Performance boost and cost savings for DynamoDB.

Caching itself is easy:

- Receive an incoming request

- Load the data from the cache

- Query the database if the data is not available in the cache

- Insert the data into the cache
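These four steps are known as the cache-aside pattern. Here is a minimal sketch of the read path in Node.js. It assumes a Map as a stand-in for Redis, and it uses a hypothetical loadFromDatabase function in place of a real DynamoDB query:

```javascript
// Minimal cache-aside sketch. A Map stands in for Redis, and
// loadFromDatabase is a hypothetical stand-in for a DynamoDB GetItem call.
const cache = new Map();
let databaseCalls = 0;

// Hypothetical database lookup; a real implementation would query DynamoDB.
async function loadFromDatabase(id) {
  databaseCalls += 1;
  return { id, name: `item-${id}` };
}

// Handle an incoming request for the item with the given id.
async function getItem(id) {
  // Try to load the data from the cache first.
  if (cache.has(id)) {
    return cache.get(id);
  }
  // Cache miss: query the database.
  const item = await loadFromDatabase(id);
  // Insert the data into the cache for subsequent requests.
  cache.set(id, item);
  return item;
}
```

If you call getItem twice with the same id, the database is queried only once, and the second call is served from the cache. That cached copy goes stale as soon as the item changes in DynamoDB, which is why invalidation is needed.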

But as Phil Karlton wisely said:

There are only two hard things in Computer Science: cache invalidation and naming things.

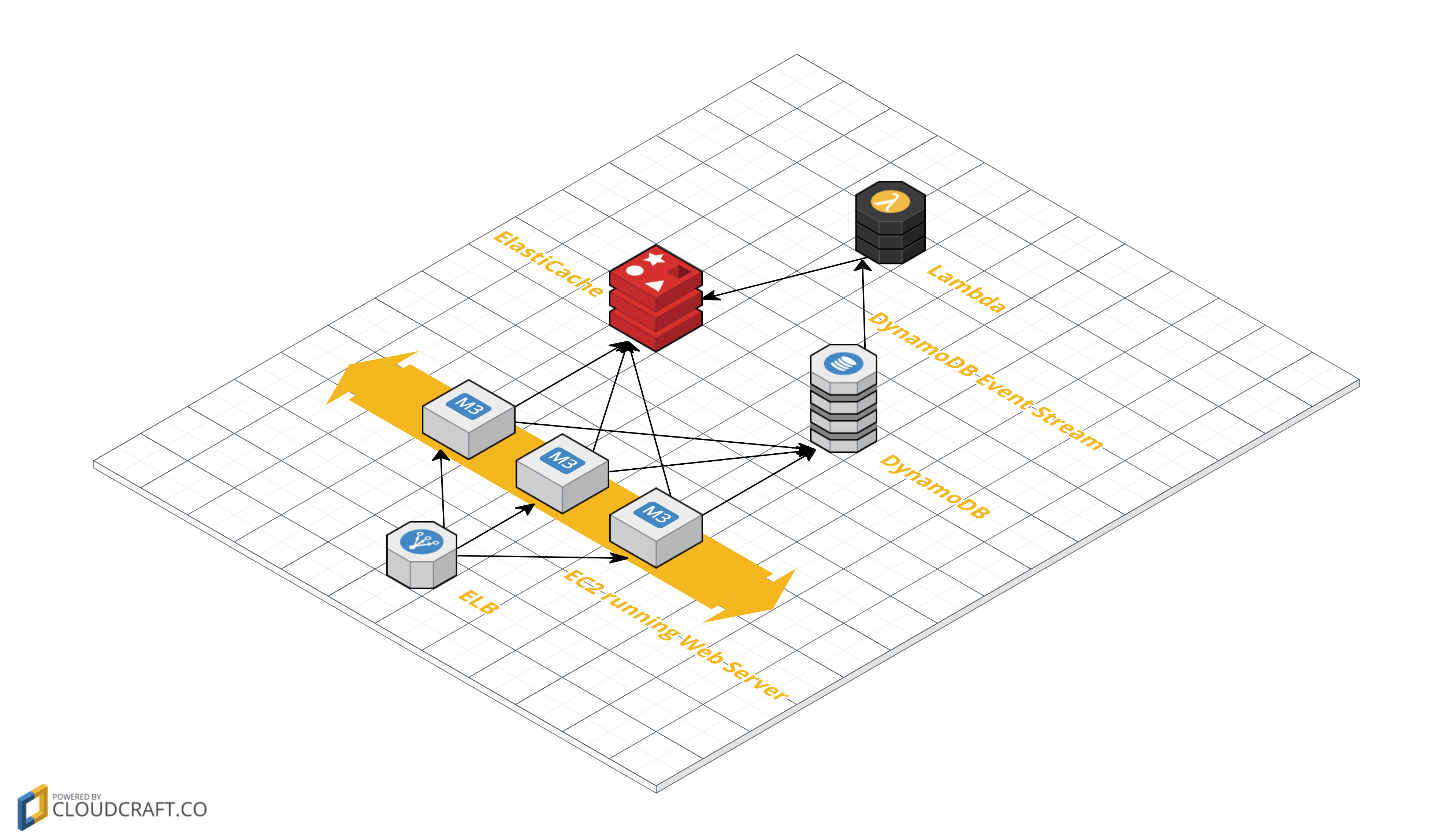

Probably true, especially the part about naming things. But cache invalidation is not that hard to set up when you use the following building blocks: ElastiCache, DynamoDB, DynamoDB Streams, and Lambda, as shown in the figure. In this article, you’ll learn how to implement a Lambda function that invalidates the cache.

Combining DynamoDB Streams and Lambda

If enabled, DynamoDB publishes an event to a DynamoDB Stream for every change to an item. These events are perfectly suited for cache invalidation.

If you are into serverless, today is your lucky day!

- DynamoDB Streams are integrated with AWS Lambda.

- Lambda functions can run inside a VPC, which allows them to access ElastiCache.

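A typical event received by a Lambda function contains a list of Records. Each record carries an eventName (INSERT, MODIFY or REMOVE) and a dynamodb object. That object holds the Keys of the changed item and, depending on the stream view type, its NewImage and OldImage. All attribute values are typed, for example {"S": "text"} for a string or {"N": "42"} for a number.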
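For illustration, here is an abbreviated stream record written as a JavaScript object. It comes with a small helper that converts DynamoDB's typed attribute values into plain values. The key attribute id, the attribute values, and the helper toPlainObject are hypothetical. The helper only handles the S, N, BOOL and NULL types; the AWS SDKs ship complete converters (for example, unmarshall in @aws-sdk/util-dynamodb).

```javascript
// Abbreviated DynamoDB Streams event as delivered to Lambda (illustrative values).
const sampleEvent = {
  Records: [
    {
      eventName: "MODIFY", // INSERT, MODIFY or REMOVE
      eventSource: "aws:dynamodb",
      dynamodb: {
        Keys: { id: { S: "42" } },
        OldImage: { id: { S: "42" }, name: { S: "old name" }, views: { N: "1" } },
        NewImage: { id: { S: "42" }, name: { S: "new name" }, views: { N: "2" } },
        StreamViewType: "NEW_AND_OLD_IMAGES",
      },
    },
  ],
};

// Convert a map of typed attribute values into a plain object.
// Only S, N, BOOL and NULL are handled in this sketch.
function toPlainObject(attributes) {
  const result = {};
  for (const [name, value] of Object.entries(attributes)) {
    if ("S" in value) {
      result[name] = value.S;
    } else if ("N" in value) {
      // Numbers arrive as strings; Number() may lose precision for huge values.
      result[name] = Number(value.N);
    } else if ("BOOL" in value) {
      result[name] = value.BOOL;
    } else if ("NULL" in value) {
      result[name] = null;
    } else {
      throw new Error(`Unsupported attribute type for ${name}`);
    }
  }
  return result;
}

for (const record of sampleEvent.Records) {
  console.log(record.eventName, toPlainObject(record.dynamodb.NewImage));
}
```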

The function receiving such an event needs to:

- Loop over all incoming events.

- Decide whether a cached item needs to be updated or deleted.

- Update or delete a cached item by sending a request to Redis (or Memcached).

Just a few lines of code are necessary to implement these steps with Node.js:

```javascript
var redis = require("redis");
```

Almost done. The next step is to set up the whole infrastructure.

Wiring all the parts together

It’s time to create all the parts of the infrastructure:

- DynamoDB table and stream

- Security Group allowing access from Lambda to ElastiCache

- ElastiCache cluster

- Event source mapping between the DynamoDB stream and Lambda

- IAM role for Lambda

- Lambda function

A CloudFormation template describes all of these parts.
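Here is a sketch of the central resources, written as a JavaScript object and printed as JSON, which CloudFormation accepts as a template body. The table name cacheinvalidation and the parameter LambdaCodeKey appear in the steps below. The key attribute id, the logical resource names, the parameters VpcId, SubnetIds and LambdaCodeBucket, and values such as the runtime and cache node type are assumptions to adapt.

```javascript
// Illustrative CloudFormation fragment for the cache invalidation setup.
// Logical names, parameters and property values are assumptions to adapt.
const sgId = (name) => ({ "Fn::GetAtt": [name, "GroupId"] });

const template = {
  AWSTemplateFormatVersion: "2010-09-09",
  Parameters: {
    VpcId: { Type: "AWS::EC2::VPC::Id" },
    SubnetIds: { Type: "List<AWS::EC2::Subnet::Id>" },
    LambdaCodeBucket: { Type: "String" },
    LambdaCodeKey: { Type: "String" },
  },
  Resources: {
    // DynamoDB table with a stream carrying old and new item images.
    Table: {
      Type: "AWS::DynamoDB::Table",
      Properties: {
        TableName: "cacheinvalidation",
        AttributeDefinitions: [{ AttributeName: "id", AttributeType: "S" }],
        KeySchema: [{ AttributeName: "id", KeyType: "HASH" }],
        BillingMode: "PAY_PER_REQUEST",
        StreamSpecification: { StreamViewType: "NEW_AND_OLD_IMAGES" },
      },
    },
    // Security group attached to the Lambda function (no inbound rules needed).
    LambdaSecurityGroup: {
      Type: "AWS::EC2::SecurityGroup",
      Properties: { GroupDescription: "Lambda", VpcId: { Ref: "VpcId" } },
    },
    // Security group of the cache: allows Redis traffic (port 6379) from Lambda only.
    CacheSecurityGroup: {
      Type: "AWS::EC2::SecurityGroup",
      Properties: {
        GroupDescription: "ElastiCache",
        VpcId: { Ref: "VpcId" },
        SecurityGroupIngress: [
          { IpProtocol: "tcp", FromPort: 6379, ToPort: 6379, SourceSecurityGroupId: sgId("LambdaSecurityGroup") },
        ],
      },
    },
    CacheSubnetGroup: {
      Type: "AWS::ElastiCache::SubnetGroup",
      Properties: { Description: "Cache subnets", SubnetIds: { Ref: "SubnetIds" } },
    },
    // Single-node Redis cluster inside the VPC.
    CacheCluster: {
      Type: "AWS::ElastiCache::CacheCluster",
      Properties: {
        Engine: "redis",
        CacheNodeType: "cache.t3.micro",
        NumCacheNodes: 1,
        CacheSubnetGroupName: { Ref: "CacheSubnetGroup" },
        VpcSecurityGroupIds: [sgId("CacheSecurityGroup")],
      },
    },
    // Role allowing the function to read the stream, write logs and join the VPC.
    LambdaRole: {
      Type: "AWS::IAM::Role",
      Properties: {
        AssumeRolePolicyDocument: {
          Version: "2012-10-17",
          Statement: [{ Effect: "Allow", Principal: { Service: "lambda.amazonaws.com" }, Action: "sts:AssumeRole" }],
        },
        ManagedPolicyArns: [
          "arn:aws:iam::aws:policy/service-role/AWSLambdaDynamoDBExecutionRole",
          "arn:aws:iam::aws:policy/service-role/AWSLambdaVPCAccessExecutionRole",
        ],
      },
    },
    LambdaFunction: {
      Type: "AWS::Lambda::Function",
      Properties: {
        Handler: "index.handler",
        Runtime: "nodejs20.x",
        Timeout: 30,
        Role: { "Fn::GetAtt": ["LambdaRole", "Arn"] },
        Code: { S3Bucket: { Ref: "LambdaCodeBucket" }, S3Key: { Ref: "LambdaCodeKey" } },
        VpcConfig: { SecurityGroupIds: [sgId("LambdaSecurityGroup")], SubnetIds: { Ref: "SubnetIds" } },
      },
    },
    // Mapping between the DynamoDB stream and the Lambda function.
    StreamMapping: {
      Type: "AWS::Lambda::EventSourceMapping",
      Properties: {
        EventSourceArn: { "Fn::GetAtt": ["Table", "StreamArn"] },
        FunctionName: { Ref: "LambdaFunction" },
        StartingPosition: "TRIM_HORIZON",
        BatchSize: 100,
      },
    },
  },
};

console.log(JSON.stringify(template, null, 2));
```

The function does not call DynamoDB itself, because the event source mapping polls the stream on its behalf. Inside the VPC, the function therefore only needs network access to the cache.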

Use this template to create your own environment by following these steps:

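- git clone https://github.com/widdix/lambda-dynamodb-elasticache.git

- cd lambda-dynamodb-elasticache

- Bundle the files into a ZIP file and upload it to S3.

- Open the AWS CloudFormation Management Console and create a new stack based on the template.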

- Click Next to proceed with the next step of the wizard.

- Specify the parameters for the stack.

- Click Next to proceed with the next step of the wizard.

- Click Next to skip the Options step of the wizard.

- Click Create to start the creation of the stack.

- Wait until the stack reaches the state CREATE_COMPLETE.

- Update index.js with the host of the ElastiCache cluster created by CloudFormation.

- Bundle the files into a ZIP file and upload it to S3.

- Update the stack: keep the template, but change the parameter LambdaCodeKey to the key of the new ZIP file.

- Wait until the stack reaches the state UPDATE_COMPLETE.

Everything is ready for testing! Insert, update, and delete items in the DynamoDB table named cacheinvalidation, and watch the CloudWatch Logs of the Lambda function to gain insight into cache invalidation.