Step Functions pitfall: The execution reached the maximum number of history events (25000)

AWS Step Functions is an execution environment for finite state machines. Recently, I ran into the error “The execution reached the maximum number of history events (25000).” when listing all objects in an S3 bucket page by page. This blog post explains why the error happens and how to avoid it.

Introducing Step Functions

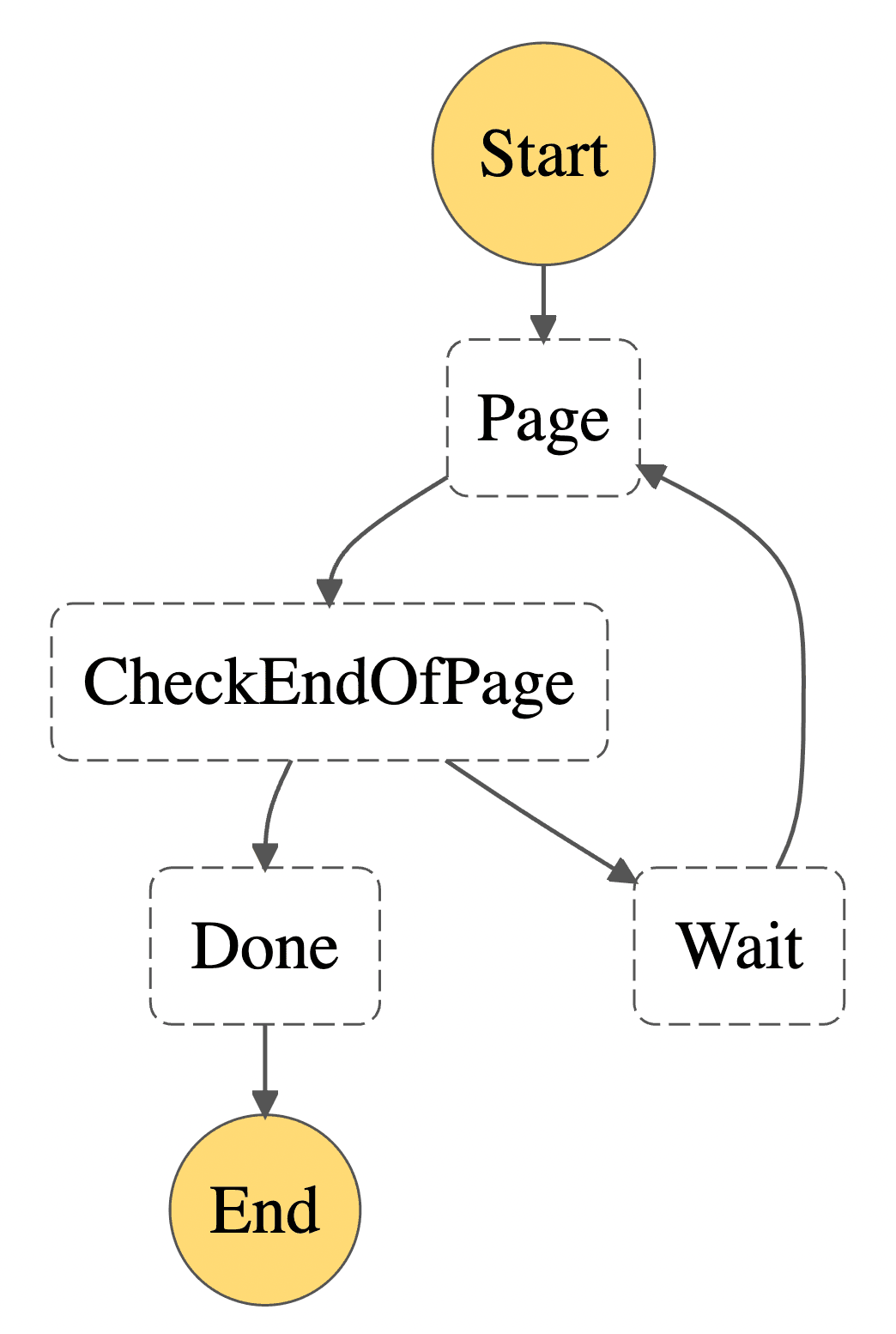

In Step Functions, a state machine is a collection of states. A state does some work (e.g., invoking a Lambda function, branching like an if/else statement, terminating the state machine, and many more) and points to the next state. When you start an execution of a state machine, you can pass in data that serves as the input of the first state. Each state receives input data and outputs data as well. The figure shows four states:

- Run: Invokes a Lambda function that calls the S3 API to list objects starting at `NextKeyMarker` from the input. Outputs the new `NextKeyMarker` as well as the `IsTruncated` response of the S3 API. Next state: CheckEndOfPage.
- CheckEndOfPage: Checks if `IsTruncated` is set to true. Outputs the input. If yes, transitions to Wait. If no, transitions to Done.
- Wait: Waits for a couple of seconds. Outputs the input. Next state: Run.
- Done: All objects are fetched. Successfully terminates the state machine. Outputs the input.
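A minimal sketch of this state machine in the Amazon States Language (ASL), built as a Python dictionary so it can be serialized with `json.dumps`. The Lambda function ARN and the 5-second wait are placeholders I chose for illustration; the state names and the `IsTruncated` field come from the descriptions above.

```python
import json

# Placeholder ARN (hypothetical): replace with your own Lambda function.
LIST_FUNCTION_ARN = "arn:aws:lambda:eu-west-1:123456789012:function:list-objects"

definition = {
    "Comment": "List all objects of an S3 bucket page by page",
    "StartAt": "Run",
    "States": {
        # Task state: invokes the Lambda function, which returns NextKeyMarker and IsTruncated.
        "Run": {
            "Type": "Task",
            "Resource": LIST_FUNCTION_ARN,
            "Next": "CheckEndOfPage",
        },
        # Choice state: keep looping while S3 reports more pages.
        "CheckEndOfPage": {
            "Type": "Choice",
            "Choices": [
                {"Variable": "$.IsTruncated", "BooleanEquals": True, "Next": "Wait"}
            ],
            "Default": "Done",
        },
        # Wait state: pause a few seconds before fetching the next page.
        "Wait": {"Type": "Wait", "Seconds": 5, "Next": "Run"},
        # Succeed state: all objects are fetched.
        "Done": {"Type": "Succeed"},
    },
}

print(json.dumps(definition, indent=2))
```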

The pitfall

The number of state transitions allowed in a single execution of a state machine is limited. However, the limit is not defined in terms of state transitions. Instead, Step Functions limits the number of history events per execution to 25,000. Wait, what are history events?

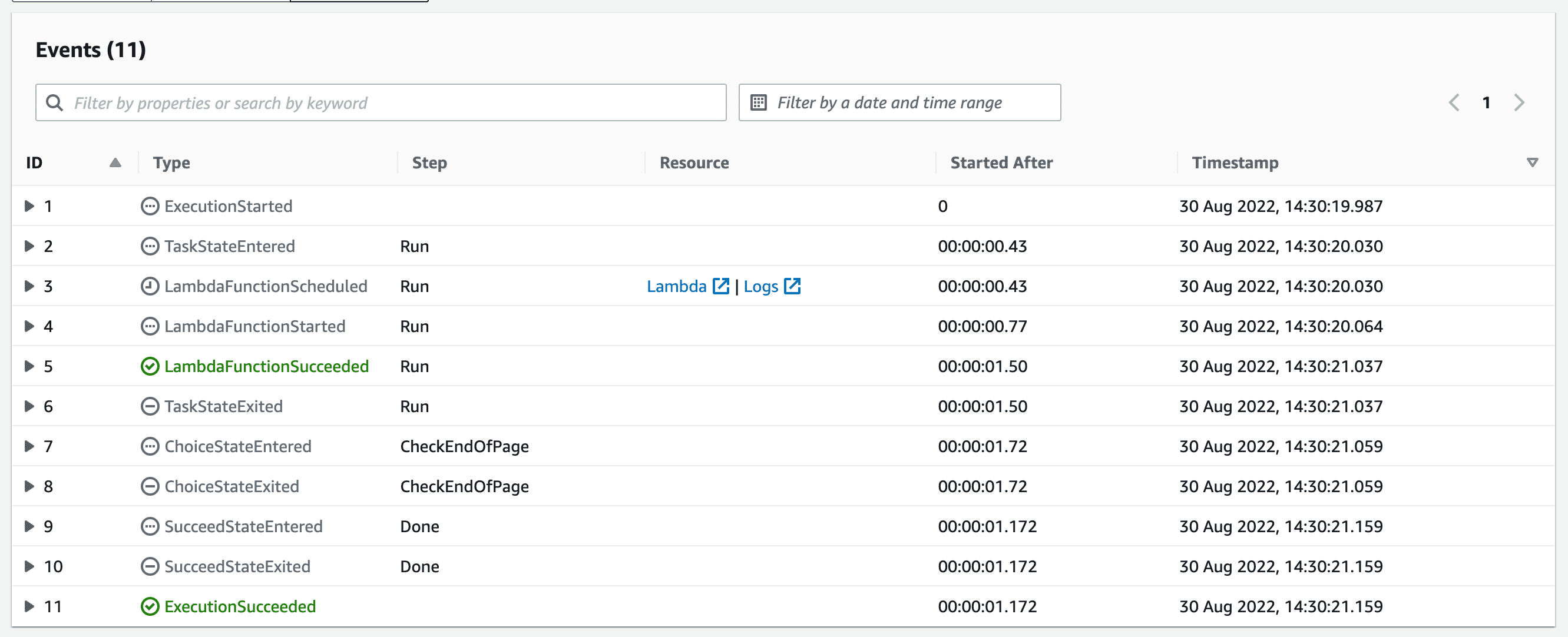

The official developer guide does not mention them. But you can see them in the UI, as the screenshot shows.
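Besides the console, the execution history can also be read with the `GetExecutionHistory` API. The following sketch assumes a boto3 Step Functions client (`boto3.client("stepfunctions")`) and an execution ARN of your own; it pages through the history with `nextToken` and counts the events per type, which is one way to reproduce counts like the ones below.

```python
from collections import Counter


def count_history_events(sfn, execution_arn):
    """Count history events per event type for one execution.

    `sfn` is expected to be a boto3 Step Functions client, e.g.
    boto3.client("stepfunctions"); any object with the same
    get_execution_history method works, which makes testing easy.
    """
    counts = Counter()
    kwargs = {"executionArn": execution_arn, "maxResults": 1000}
    while True:
        page = sfn.get_execution_history(**kwargs)
        counts.update(event["type"] for event in page["events"])
        token = page.get("nextToken")
        if not token:
            return counts
        # Request the next page of history events.
        kwargs["nextToken"] = token
```

Usage: `count_history_events(boto3.client("stepfunctions"), execution_arn)` returns a `Counter`, and `sum(counts.values())` is the number you compare against the 25,000 limit.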

The execution listed the objects of an S3 bucket with a handful of objects. Only one page was available, yet 11 history events were emitted: the Run state emitted 5 history events, CheckEndOfPage 2, Done 2, and the start and end of the execution 1 each. Wait was never reached, but it would emit 2 history events. So for each page of S3 objects, 9 history events are emitted (Run 5 + CheckEndOfPage 2 + Wait 2). Dividing the maximum allowed number of history events by 9 gives a maximum of 2,777 pages (25,000 / 9 ≈ 2,777.8) that I can fetch. The S3 API returns no more than 1,000 objects per API call. Doing the math again reveals that my state machine can list buckets with no more than roughly 2.78 million objects (2,777 × 1,000). My bucket was, of course, larger than that. The execution failed with: “The execution reached the maximum number of history events (25000).”
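The arithmetic can be checked with a few lines of Python. The sketch models an execution that fetches `pages` pages: the start and end of the execution emit one event each, every page runs Run (5) and CheckEndOfPage (2), Wait (2) runs between pages, and Done (2) runs once at the end. These per-state counts are the ones observed above, not official numbers.

```python
HISTORY_EVENT_LIMIT = 25_000
OBJECTS_PER_PAGE = 1_000  # S3 returns at most 1,000 objects per list call


def history_events(pages):
    """History events for an execution that fetches `pages` pages (pages >= 1)."""
    start_and_end = 1 + 1      # execution started + execution succeeded
    per_page = 5 + 2           # Run (Lambda task) + CheckEndOfPage (Choice)
    waits = 2 * (pages - 1)    # Wait runs between pages, not after the last one
    done = 2                   # Done (Succeed)
    return start_and_end + per_page * pages + waits + done


def max_pages(limit=HISTORY_EVENT_LIMIT):
    """Largest number of pages whose history still fits into the limit."""
    pages = 0
    while history_events(pages + 1) <= limit:
        pages += 1
    return pages


print(history_events(1))               # 11, as in the screenshot
print(max_pages())                     # 2777
print(max_pages() * OBJECTS_PER_PAGE)  # 2777000
```

In closed form the model is 9 × pages + 2 events, which is why dividing 25,000 by 9 gives the right answer.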

Solving the issue

The 25,000 history event limit will not go away, so we have to work around it. I suggest first understanding the relationship between the state types in your state machine and the number of history events they emit. I was stunned to learn that invoking a Lambda function emits 5 history events. I looked for a table that tells me how many history events to expect for a given state type. Unfortunately, such a table does not exist, so I created my own (if your state machine uses the state types marked with ?, please reach out to me to fill in the gaps):

| State Type | Task Type | Expected number of history events |
|---|---|---|
| Choice | - | 2 |
| Fail | - | ? |
| Map | - | ? |
| Parallel | - | ? |
| Pass | - | ? |
| Succeed | - | 2 |
| Task | servicename | ? |
| Task | activity | ? |
| Task | function | 5 |
| Wait | - | 2 |

Remember that one history event is emitted at the beginning of the execution and one at the end.
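To estimate the history of your own state machine, you can sum the table's values along the path an execution takes. A minimal sketch, assuming the counts from the table; entries marked with ? are deliberately left out so the function fails loudly instead of guessing, and the state-type labels such as `"Task:function"` are my own naming.

```python
# Observed history events per state, from the table. Entries marked "?"
# in the table are intentionally missing.
EVENTS_PER_STATE = {
    "Choice": 2,
    "Succeed": 2,
    "Task:function": 5,
    "Wait": 2,
}
EXECUTION_START_AND_END = 2  # one event at the beginning, one at the end


def estimate_history_events(path):
    """Estimate history events for the list of state types an execution visits."""
    unknown = [state for state in path if state not in EVENTS_PER_STATE]
    if unknown:
        raise ValueError(f"no history event count known for: {sorted(set(unknown))}")
    return EXECUTION_START_AND_END + sum(EVENTS_PER_STATE[state] for state in path)


# One page, no Wait: Run -> CheckEndOfPage -> Done
print(estimate_history_events(["Task:function", "Choice", "Succeed"]))  # 11
```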

Now that we understand how many history events we emit, we can choose between two strategies to solve the issue.

- Get more work done in a state.

- Start a new state machine execution before we reach the limit.

Get more work done in a state

This is the strategy I implemented to list more S3 objects. Instead of making a single S3 API call to fetch 1,000 objects, I now call the S3 API up to 100 times and fetch up to 100,000 objects in one Lambda function execution.
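A minimal sketch of such a Lambda function in Python, assuming boto3's `list_objects_v2` (ListObjectsV2) with continuation tokens. Storing the continuation token in the `NextKeyMarker` field is my assumption to fit the state machine described above, as is the hypothetical `Bucket` input field. The S3 client is injected so the paging logic can be exercised without AWS.

```python
MAX_PAGES_PER_INVOCATION = 100  # 100 S3 calls x 1,000 objects = up to 100,000 objects


def list_pages(s3, bucket, marker=None, max_pages=MAX_PAGES_PER_INVOCATION):
    """Call ListObjectsV2 up to `max_pages` times, starting at `marker`.

    Returns the state the next Run needs: the continuation token stored as
    NextKeyMarker, whether more pages exist, and how many objects were seen.
    """
    count = 0
    is_truncated = True
    for _ in range(max_pages):
        kwargs = {"Bucket": bucket}
        if marker:
            kwargs["ContinuationToken"] = marker
        response = s3.list_objects_v2(**kwargs)
        contents = response.get("Contents", [])
        count += len(contents)  # process each obj["Key"] here (hypothetical)
        is_truncated = response.get("IsTruncated", False)
        marker = response.get("NextContinuationToken")
        if not is_truncated:
            break
    return {"Bucket": bucket, "NextKeyMarker": marker, "IsTruncated": is_truncated, "Count": count}


def handler(event, context, s3=None):
    """Lambda entry point for the Run state."""
    if s3 is None:
        import boto3  # imported lazily so the logic can be tested without AWS

        s3 = boto3.client("s3")
    return list_pages(s3, event["Bucket"], event.get("NextKeyMarker"))
```

The function returns only a count instead of the keys because Step Functions limits the size of the data passed between states (256 KB).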

Downsides:

- The Lambda timeout limit must be taken into account. In my case, listing 100,000 objects takes under 1 minute, well below the 15-minute limit.

- Step Functions is great at retrying a state if something goes wrong. For example, imagine one S3 API call fails with a 500 error. The state machine can be configured to retry the Lambda function execution. The more work you do in a state, the more work is retried. In my case, that’s okay because I only read data. If you perform expensive writes, that might be different.
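Retries are configured on the Task state itself. A minimal sketch of the Run state with a `Retry` block and a `TimeoutSeconds` value, again as a Python dictionary for ASL; the ARN, the retry parameters, and the 900-second timeout (matching Lambda's 15-minute maximum) are assumptions for illustration.

```python
import json

run_state = {
    "Type": "Task",
    # Placeholder ARN (hypothetical).
    "Resource": "arn:aws:lambda:eu-west-1:123456789012:function:list-objects",
    # Fail the state if the function does not finish within 15 minutes.
    "TimeoutSeconds": 900,
    # Retry the whole invocation on failure: with up to 100 S3 calls per
    # invocation, one failed call repeats all of them.
    "Retry": [
        {
            "ErrorEquals": ["States.TaskFailed"],
            "IntervalSeconds": 2,
            "MaxAttempts": 3,
            "BackoffRate": 2.0,
        }
    ],
    "Next": "CheckEndOfPage",
}

print(json.dumps(run_state, indent=2))
```

Keep in mind that each retry attempt also adds entries to the execution history, so retries eat into the same 25,000-event budget.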

Start a new state machine execution before we reach the limit

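This strategy is recommended by AWS: before the limit is reached, the state machine starts a new execution of itself and hands over its current progress (in my example, the `NextKeyMarker`) as input.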
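A minimal sketch of the states this strategy adds to the example, assuming the Run function also returns a hypothetical `Iterations` counter that it increments on each call, and a hypothetical `Bucket` field in the state. When the counter reaches a threshold, a Task state uses the Step Functions service integration `arn:aws:states:::states:startExecution` to start the same state machine (`$$.StateMachine.Id` from the context object) with the current `NextKeyMarker`. The threshold of 2,000 pages is my choice: at 9 history events per page it stays well below 25,000 (2,000 × 9 = 18,000).

```python
import json

PAGES_PER_EXECUTION = 2_000  # 2,000 pages x 9 events = 18,000 < 25,000

continue_as_new_states = {
    # Replaces CheckEndOfPage: finish, hand over to a new execution, or keep looping.
    "CheckEndOfPage": {
        "Type": "Choice",
        "Choices": [
            {"Variable": "$.IsTruncated", "BooleanEquals": False, "Next": "Done"},
            {
                "Variable": "$.Iterations",
                "NumericGreaterThanEquals": PAGES_PER_EXECUTION,
                "Next": "ContinueAsNewExecution",
            },
        ],
        "Default": "Wait",
    },
    # Starts a fresh execution of this state machine that resumes at NextKeyMarker.
    "ContinueAsNewExecution": {
        "Type": "Task",
        "Resource": "arn:aws:states:::states:startExecution",
        "Parameters": {
            "StateMachineArn.$": "$$.StateMachine.Id",
            "Input": {
                "Bucket.$": "$.Bucket",
                "NextKeyMarker.$": "$.NextKeyMarker",
                "Iterations": 0,
            },
        },
        "End": True,
    },
}

print(json.dumps(continue_as_new_states, indent=2))
```

The state machine's IAM role needs permission to call `states:StartExecution` on itself for this to work.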

Downsides:

- If the condition for starting a new execution is wrong, you can create an expensive infinite loop of executions.
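One way to bound that cost, sketched under the assumption that the input of every execution carries a hypothetical `Generation` counter (starting at 0 and incremented with the intrinsic function `States.MathAdd` whenever the state machine starts its next execution): a Choice state at the start routes to a Fail state once a maximum number of chained executions is reached. The limit of 100 generations is arbitrary.

```python
import json

MAX_GENERATIONS = 100  # arbitrary upper bound on chained executions

guard_states = {
    # First state of the machine: refuse to run beyond MAX_GENERATIONS executions.
    "CheckGeneration": {
        "Type": "Choice",
        "Choices": [
            {
                "Variable": "$.Generation",
                "NumericGreaterThanEquals": MAX_GENERATIONS,
                "Next": "TooManyGenerations",
            }
        ],
        "Default": "Run",
    },
    # Stops the chain with an explicit error instead of looping forever.
    "TooManyGenerations": {
        "Type": "Fail",
        "Error": "TooManyGenerations",
        "Cause": "Stopped chaining executions to avoid an infinite loop.",
    },
}

# Entry for the Input of the task that starts the next execution, so each
# new execution receives an incremented counter.
NEXT_GENERATION_PARAMETER = {"Generation.$": "States.MathAdd($.Generation, 1)"}

print(json.dumps(guard_states, indent=2))
```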

I hope this article will help you avoid the pitfall.