A pattern for Continuously Deployed, Immutable and Stateful applications on AWS

If you are faced with the challenge of running a stateful application on AWS, you will recognize that many building blocks no longer work as before.

Usually I preach stateless systems to my clients. Stateless means that the state is managed by another system. On AWS, this can be DynamoDB, RDS, S3, or other storage services. Managing a stateless system is less complex than managing a stateful system. You can terminate single instances at any time without losing data. This makes deployments of new software versions simpler. It also allows you to scale horizontally by adding and removing EC2 instances.

But what about stateful applications like MongoDB, Kafka, Elasticsearch, and GlusterFS?

In this post, I will explain how you can deploy stateful applications on AWS without losing the ability to run rolling updates and provide a highly available system.

A pattern for stateful applications on AWS

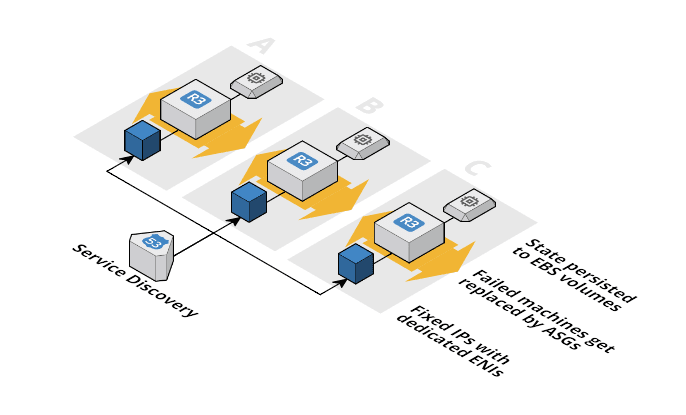

Every EC2 instance (aka node) that is part of the stateful cluster is supervised by a dedicated Auto Scaling Group (ASG). The ASG ensures that a node keeps running by comparing the current state with the desired state.

All the state is stored on EBS volumes dedicated to an ASG. The EBS volume is a standalone entity that is long lived and attached over the network.

Many stateful applications (like GlusterFS, MongoDB, Elasticsearch, Kafka, …) require or prefer fixed IP addresses for their nodes. A dedicated Elastic Network Interface (ENI) per node provides that fixed IP address.

Finally, you need a place to register all the IP addresses of the nodes so that clients can connect to the stateful cluster. You can use a simple DNS entry with an A record for every node pointing to the IP address of the dedicated ENI.

The following figure demonstrates the idea.

The diagram was created with Cloudcraft - Visualize your cloud architecture like a pro.

Let’s look at how this can be implemented on AWS.

Stateful Infrastructure as Code

I love automation, and I prefer tools provided by AWS over self-managed tools whenever possible. Therefore, CloudFormation is a perfect fit here:

- You can fully automate your infrastructure deployment with CloudFormation

- Your infrastructure templates are versioned together with your code

- You can deploy the templates in your CD pipeline

- CloudFormation is fully managed by AWS and comes at no extra cost

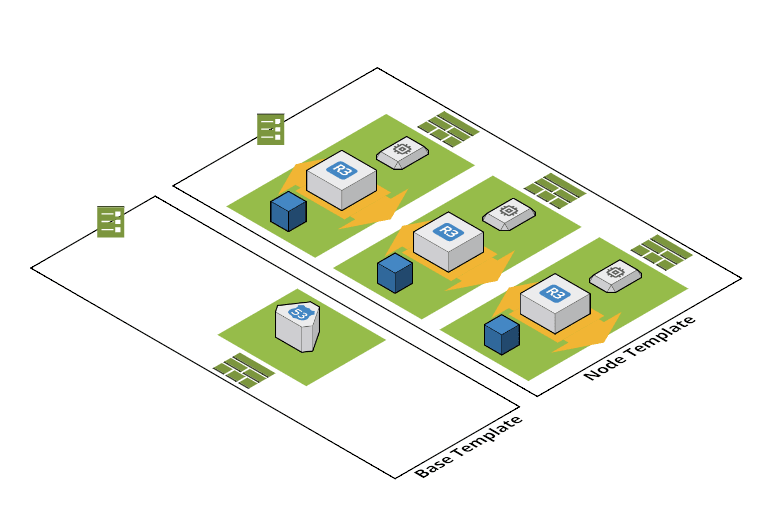

The previous figure can be divided into two responsibilities:

- Base Layer: Contains resources that are needed by all nodes

- Node Layer: Contains resources that are needed by a single node

The following figure illustrates the two layers.

The diagram was created with Cloudcraft - Visualize your cloud architecture like a pro.

The base template only contains the Route 53 Record Set that is used by the nodes to advertise their IP addresses.
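As a rough illustration of the base layer, the following Python sketch builds such a template as a plain dictionary and prints it as JSON. The function name, the logical ID `NodesRecordSet`, the zone and record names, the IP addresses, and the TTL are all made up for this example. It also assumes that the node IPs are known up front because every ENI gets a fixed address.

```python
import json


def base_template(hosted_zone_name, record_name, node_ips, ttl=60):
    """Build a CloudFormation template (as a dict) with a single A record set
    that lists the fixed private IP address of every node.

    All names and defaults here are illustrative assumptions.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Base layer: DNS entry shared by all nodes",
        "Resources": {
            "NodesRecordSet": {
                "Type": "AWS::Route53::RecordSet",
                "Properties": {
                    # Zone and record names are fully qualified (trailing dot).
                    "HostedZoneName": hosted_zone_name,
                    "Name": record_name,
                    "Type": "A",
                    # CloudFormation expects the TTL as a string.
                    "TTL": str(ttl),
                    "ResourceRecords": list(node_ips),
                },
            }
        },
    }


if __name__ == "__main__":
    template = base_template(
        "example.internal.",
        "kafka.example.internal.",
        ["10.0.0.10", "10.0.1.10", "10.0.2.10"],
    )
    print(json.dumps(template, indent=2))
```

A short TTL keeps the time small until clients see a node that was added to the list.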

The node template contains an ASG, an ENI, and an EBS Volume. Let’s look at the node template resources in more detail.
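To make the node layer more concrete, here is a minimal Python sketch that assembles one node template as a dictionary. It is not my production template: the function name, the logical IDs, the volume size and type, and the choice of a launch template (which is assumed to exist already and is not shown) are assumptions for illustration.

```python
import json


def node_template(node_name, subnet_id, availability_zone, private_ip,
                  security_group_id, launch_template_id,
                  launch_template_version, volume_size_gib=100):
    """Build a CloudFormation template (as a dict) for a single node.

    The subnet must live in the given Availability Zone, because an EBS
    volume can only be attached to an instance in the same zone.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": f"Node layer for {node_name}",
        "Resources": {
            # Standalone ENI that carries the fixed private IP address.
            "NetworkInterface": {
                "Type": "AWS::EC2::NetworkInterface",
                "Properties": {
                    "SubnetId": subnet_id,
                    "PrivateIpAddress": private_ip,
                    "GroupSet": [security_group_id],
                },
            },
            # Long-lived volume that holds the node's state.
            "Volume": {
                "Type": "AWS::EC2::Volume",
                "Properties": {
                    "AvailabilityZone": availability_zone,
                    "Size": volume_size_gib,
                    "VolumeType": "gp2",
                },
            },
            # ASG pinned to exactly one instance: it never scales.
            "AutoScalingGroup": {
                "Type": "AWS::AutoScaling::AutoScalingGroup",
                "Properties": {
                    "LaunchTemplate": {
                        "LaunchTemplateId": launch_template_id,
                        "Version": launch_template_version,
                    },
                    "MinSize": "1",
                    "MaxSize": "1",
                    "VPCZoneIdentifier": [subnet_id],
                },
                # Replace the single instance in place during stack updates.
                "UpdatePolicy": {
                    "AutoScalingRollingUpdate": {
                        "MinInstancesInService": 0,
                        "MaxBatchSize": 1,
                    }
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(node_template(
        "kafka-1", "subnet-0abc", "eu-west-1a", "10.0.0.10",
        "sg-0abc", "lt-0abc", "1"), indent=2))
```

Setting `MinInstancesInService` to 0 in this sketch lets the old instance terminate before the new one starts, so the volume and the ENI are free to be attached again.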

Auto Scaling Group (ASG)

The Auto Scaling Group observes a single EC2 Instance and does not do any scaling at all! Instead, the ASG serves two purposes:

- The ASG is used to automatically recover a failed EC2 Instance.

- Additionally, the ASG and CloudFormation’s Update Policy are used to perform an update of the EC2 Instance by terminating the old version and starting a new EC2 Instance with the new version. When a fresh EC2 Instance starts, it attaches the EBS volume and the ENI with a small script injected via User Data.
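The attach step in User Data could look roughly like the script rendered by the Python sketch below. This is an approximation, not my actual script. It assumes that the AWS CLI is installed on the image, that the instance role allows both attach calls, that the volume already contains a file system, and that the volume shows up under the requested device name (on some instance types it appears under a different name). The function name, device, and mount point are placeholders.

```python
def render_user_data(volume_id, eni_id, region,
                     device="/dev/xvdf", mount_point="/data"):
    """Return a bash User Data script that attaches the node's EBS volume
    and ENI to the instance that is booting.

    Device name and mount point are illustrative defaults.
    """
    lines = [
        "#!/bin/bash",
        "set -euo pipefail",
        "# Look up the ID of the booting instance (IMDSv2).",
        'TOKEN=$(curl -s -X PUT "http://169.254.169.254/latest/api/token" '
        '-H "X-aws-ec2-metadata-token-ttl-seconds: 300")',
        'INSTANCE_ID=$(curl -s -H "X-aws-ec2-metadata-token: $TOKEN" '
        "http://169.254.169.254/latest/meta-data/instance-id)",
        "# Attach the long-lived EBS volume and wait for the device.",
        f"aws ec2 attach-volume --region {region} --volume-id {volume_id} "
        f'--instance-id "$INSTANCE_ID" --device {device}',
        f"until [ -e {device} ]; do sleep 1; done",
        f"mkdir -p {mount_point}",
        f"mount {device} {mount_point}",
        "# Attach the ENI with the fixed private IP as second interface.",
        f"aws ec2 attach-network-interface --region {region} "
        f"--network-interface-id {eni_id} "
        '--instance-id "$INSTANCE_ID" --device-index 1',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_user_data("vol-0123", "eni-0456", "eu-west-1"))
```

The attach calls can only succeed once the previous instance has released the volume and the ENI, so a real script would probably retry them for a while instead of failing on the first error.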

Elastic Block Store (EBS) Volume

The EBS volume is attached and mounted during start-up of the EC2 Instance. The volume does not go away when the EC2 Instance is terminated for any reason. The EBS volumes are used to outsource the state of the applications. I know about the performance penalty compared to Instance Store, but I willingly trade some performance for automation and maintainability in an asynchronous system that runs in the background without direct user impact. The benefit is that the data replication that needs to be performed when the node comes back online is very small when using EBS volumes. If you run on Instance Store, the node needs to replicate the whole data set from another node, which can take some time.

Elastic Network Interface (ENI)

Distributed systems like Kafka communicate over protocols built on top of IP (TCP or UDP). IP addresses are used to identify the source and destination of a packet. By default, an EC2 Instance launched by the ASG gets a random private IP address. So it is very likely that the new node has a different IP address than the old one. The problem is that the other nodes don’t know about that. They still try to contact the node on the old IP address. To avoid all problems related to changing addresses, I decided to go with fixed private IP addresses. Therefore, I create a standalone ENI for every ASG. During startup, I attach the ENI as a second network interface (eth1) to the EC2 Instance.

Route 53 Record Set

All the EC2 Instances must be announced to the clients of the application. An easy solution is to put all the private IP addresses into a DNS A record. The clients send a request to the DNS server and get back the list of all available IP addresses. I can also add new nodes and append them to the list. Clients that newly connect will then find the updated DNS entry. Older clients must either get informed by the system itself (e.g. nodes discover each other via a gossip protocol and announce the changes to their clients) or must reload the node list from time to time. A DNS lookup like dig amazon.com will return the entries.

    $ dig amazon.com
    […]
    ;; ANSWER SECTION:
    amazon.com. 13 IN A 54.239.17.6
    amazon.com. 13 IN A 54.239.25.200
    amazon.com. 13 IN A 54.239.25.208
    amazon.com. 13 IN A 54.239.26.128
    amazon.com. 13 IN A 54.239.17.7
    amazon.com. 13 IN A 54.239.25.192
    […]
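On the client side, the same lookup can be done in code. The following Python sketch uses the standard library to collect every IPv4 address behind one name. The function name, the host name `kafka.example.internal`, and the port are placeholders, and the injectable `resolver` argument only exists so the function can be tried without a real DNS server.

```python
import socket


def resolve_nodes(hostname, port, resolver=socket.getaddrinfo):
    """Return the sorted, de-duplicated IPv4 addresses behind a DNS name.

    Each entry returned by getaddrinfo is a tuple
    (family, type, proto, canonname, sockaddr), and for IPv4 the
    sockaddr is an (address, port) pair.
    """
    infos = resolver(hostname, port, socket.AF_INET, socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # Hypothetical record name; replace it with your cluster's DNS entry.
    try:
        print(resolve_nodes("kafka.example.internal", 9092))
    except socket.gaierror as error:
        print(f"lookup failed: {error}")
```

A long-running client could call such a function periodically to reload the node list, which covers the case where the system itself does not announce membership changes.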

Limitations and benefits

- I use the described architecture to run MongoDB, Kafka, Elasticsearch and GlusterFS clusters. I don’t run more than 9 machines in those clusters. My solution does not scale to much bigger clusters, because you end up with an unmanageable number of Auto Scaling Groups.

- I’m fully aware of the fact that EBS volumes are slower than Instance Store. But for some systems, such as systems that run asynchronously in the background, agility is more important than performance.

- I replace all my EC2 instances at least once per week because of patches, and Chaos Monkey likely terminates one node per cluster per day. Therefore, I know that my solution works in an (artificially) unstable environment.

- I automatically deploy changes to the clusters in less than two hours. Each git push is potentially deployed to production but not before I run extensive smoke and performance tests in my staging environment.