Builder's Diary Vol. 3: Infrastructure Pipeline with GitLab and Terraform Cloud

Get insights into the day-to-day challenges of builders. In this issue, Rico Nuguid from our partner DEMICON talks about automating deployments with Infrastructure Pipelines based on GitLab and Terraform Cloud.

How did you get into all things AWS?

I started my career as an IT consultant at IBM, where I helped build the cloud division. Next, I co-founded Tandemploy, where we created cloud-based HR software on Microsoft Azure. In 2020, I joined DEMICON as Business Unit Lead and Principal Cloud Solution Architect. At DEMICON, I work almost exclusively with AWS, and over the last two years I have become deeply familiar with Amazon's cloud. All three cloud providers are similar, as the basic principles are the same everywhere. In the details, however, IBM Cloud, Microsoft Azure, and AWS differ enormously.

What is it like to work as a consultant at DEMICON?

As an IT consultant at IBM, I was on the road a lot and mainly worked at the customer's site. Business trips may be fascinating at first, but over time you lose the desire to stay in hotels. That's different at DEMICON, where we foster a remote-first culture. Most of the time, I work from home. I like that very much, especially because I can set aside focus time to work without interruption.

But there are also project phases in which face-to-face collaboration with the customer or my colleagues is essential. In these cases, we meet on-site, for example in one of the DEMICON offices or at the customer's premises. Such short trips are a welcome change in my everyday life.

Furthermore, I enjoy company-wide gatherings where all DEMICONIANs come together. We had a great summer party in Berlin recently.

Why an Infrastructure Pipeline?

Rolling out infrastructure changes by running terraform apply from your local machine works fine, but only when you are the only one working on a project. When working together in a team, ensuring everyone uses the same runtime environment to execute the Terraform configuration is tough. For example, the whole team needs to use the same Terraform version. An Infrastructure Pipeline ensures that all changes are rolled out in the same way and solves this and many other problems.
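
Version constraints in the configuration complement the pipeline: Terraform refuses to run when the CLI version falls outside the declared range. The following is a minimal sketch, assuming a team that has standardized on Terraform 1.5.x and version 5 of the AWS provider; the file name and both version numbers are illustrative assumptions, not a recommendation from the original setup.

```hcl
# versions.tf (hypothetical file name): pin the toolchain so every run,
# local or in the pipeline, resolves the same Terraform and provider versions.
terraform {
  # Any Terraform CLI outside 1.5.x aborts with a version error (assumed range).
  required_version = "~> 1.5.0"

  required_providers {
    aws = {
      source = "hashicorp/aws"
      # Assumed constraint: any 5.x release of the AWS provider.
      version = "~> 5.0"
    }
  }
}
```

Committing the generated `.terraform.lock.hcl` file additionally pins the exact provider build, and the Terraform version configured on the Terraform Cloud workspace should fall inside the same range.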

Another benefit: with an infrastructure pipeline, it is no longer necessary to grant engineers administrator access to AWS accounts. Only the pipeline requires administrator access, and engineers get by with read-only permissions.
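
The split between engineers and pipeline can itself be expressed in Terraform. A minimal sketch, assuming engineers sign in through a separate identity account and assume a role in each workload account; the role name and the `identity_account_id` variable are hypothetical, while `ReadOnlyAccess` is the AWS managed policy of that name.

```hcl
# Hypothetical: the AWS account that holds the engineers' identities.
variable "identity_account_id" {
  type        = string
  description = "Account ID whose principals may assume the read-only role."
}

# Role that engineers assume in a workload account (hypothetical name).
resource "aws_iam_role" "engineer" {
  name = "engineer-read-only"

  # Trust principals from the identity account to call sts:AssumeRole.
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRole"
      Principal = { AWS = "arn:aws:iam::${var.identity_account_id}:root" }
    }]
  })
}

# Engineers can inspect resources but not change them; only the
# pipeline's role carries write permissions.
resource "aws_iam_role_policy_attachment" "engineer_read_only" {
  role       = aws_iam_role.engineer.name
  policy_arn = "arn:aws:iam::aws:policy/ReadOnlyAccess"
}
```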

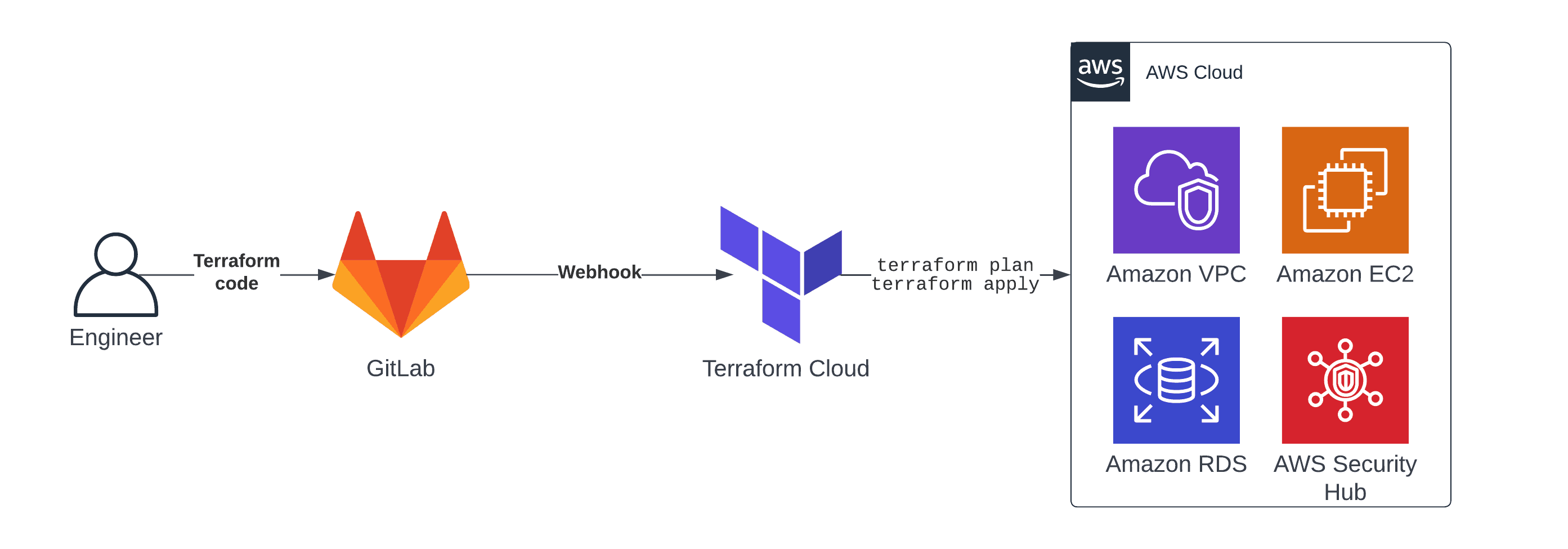

An Infrastructure Pipeline

As is often the case, there are several ways to solve a problem. One of my preferred approaches for an infrastructure pipeline consists of the following components.

- Terraform: a declarative approach to defining cloud infrastructure as code.

- GitLab: a platform to collaborate on and version the Terraform configuration.

- GitLab webhook: triggers Terraform Cloud whenever someone pushes a change to the infrastructure code.

- Terraform Cloud: executes the Terraform configuration and provisions the cloud resources.
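
The GitLab-to-Terraform-Cloud wiring can be managed as code with HashiCorp's `tfe` provider. A minimal sketch, assuming GitLab.com and a GitLab access token passed in as a variable; the organization `example-org`, the project path, and the workspace name are hypothetical. When a workspace is connected to a repository this way, Terraform Cloud's VCS integration registers the webhook in GitLab, so pushes queue runs automatically.

```hcl
terraform {
  required_providers {
    tfe = { source = "hashicorp/tfe" }
  }
}

variable "gitlab_token" {
  type        = string
  sensitive   = true
  description = "GitLab access token Terraform Cloud uses to read the repository."
}

# Register GitLab.com as a VCS provider in the (hypothetical) organization.
resource "tfe_oauth_client" "gitlab" {
  organization     = "example-org"
  api_url          = "https://gitlab.com/api/v4"
  http_url         = "https://gitlab.com"
  oauth_token      = var.gitlab_token
  service_provider = "gitlab_hosted"
}

# Connecting the workspace to the repository sets up the push webhook.
resource "tfe_workspace" "live" {
  name         = "infrastructure-live" # hypothetical workspace name
  organization = "example-org"

  vcs_repo {
    identifier     = "example-group/infrastructure-live" # hypothetical project path
    oauth_token_id = tfe_oauth_client.gitlab.oauth_token_id
  }
}
```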

Why Terraform Cloud?

Terraform is a popular open-source tool to automate infrastructure on any cloud. On top of that, HashiCorp provides Terraform Cloud, a platform to automate the provisioning of cloud resources.

In my opinion, Terraform Cloud is an excellent service for the following reasons:

- Terraform Cloud manages the Terraform state simply and securely.

- Terraform Cloud controls access to different environments. For example, who is allowed to deploy to production?

- Terraform Cloud comes with a nice, clean UI, which is helpful, for example, to inspect the results of terraform plan before executing the changes.

- Terraform Cloud can enforce security best practices, for example through policy checks, before deploying to one of your AWS accounts.
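
The question of who may deploy to production maps to team access levels on a workspace. A minimal sketch, assuming two hypothetical teams and an existing production workspace in a hypothetical organization; depending on your Terraform Cloud plan, team management may not be available.

```hcl
terraform {
  required_providers {
    tfe = { source = "hashicorp/tfe" }
  }
}

# Hypothetical teams in a hypothetical organization.
resource "tfe_team" "developers" {
  name         = "developers"
  organization = "example-org"
}

resource "tfe_team" "platform" {
  name         = "platform"
  organization = "example-org"
}

# Look up the existing production workspace (hypothetical name).
data "tfe_workspace" "prod" {
  name         = "infrastructure-prod"
  organization = "example-org"
}

# Developers can view prod runs and plans but cannot apply.
resource "tfe_team_access" "developers_prod" {
  access       = "read"
  team_id      = tfe_team.developers.id
  workspace_id = data.tfe_workspace.prod.id
}

# Only the platform team may queue and apply runs in prod.
resource "tfe_team_access" "platform_prod" {
  access       = "write"
  team_id      = tfe_team.platform.id
  workspace_id = data.tfe_workspace.prod.id
}
```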

How do you deploy multiple environments (test/prod)?

There is no one-size-fits-all solution. But I’m happy to share my favorite approach to deploying to multiple environments while re-using the same code.

First, separate the environment parameters from the resources. To do so, I prefer using Terraform modules. A module is a collection of resources for a specific domain. For example, a module could manage the networking layer, that is, the VPC. Typically, I use a separate GitLab repository for every module, and there are also open-source Terraform modules out there. Then, all that is needed to get the infrastructure for a new environment up and running is to instantiate and parameterize the modules. To do so, I use a live repository, a concept popularized by Terragrunt.
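
In the live repository, instantiating a module kept in its own GitLab repository could look like the sketch below. The project path, the tag, the region, and the module inputs `cidr_block` and `environment` are all hypothetical; the real inputs depend on how the module defines its variables. Pinning `?ref=` to a tag keeps every environment on a known module version.

```hcl
# main.tf in the live repository (hypothetical layout).
provider "aws" {
  region = "eu-central-1" # assumed region
}

# Module sourced from its own GitLab repository, pinned to a tag.
module "network" {
  source = "git::https://gitlab.com/example-group/terraform-aws-network.git?ref=v1.2.0"

  # Environment-specific parameters (hypothetical module inputs).
  cidr_block  = "10.10.0.0/16"
  environment = "test"
}
```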

Second, I favor creating a branch for each environment, which lets us efficiently manage the minor differences between the environments. For example, I create a branch named test and a branch named prod.
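
Each environment branch can be tied to its own Terraform Cloud workspace, so a push to test only plans and applies the test environment. A minimal sketch with the `tfe` provider, assuming a GitLab OAuth token is already registered in Terraform Cloud; the organization, project path, and workspace names are hypothetical, and auto-applying only in test is an illustrative choice.

```hcl
terraform {
  required_providers {
    tfe = { source = "hashicorp/tfe" }
  }
}

variable "oauth_token_id" {
  type        = string
  description = "ID of the GitLab OAuth token registered in Terraform Cloud."
}

# One workspace per environment branch.
resource "tfe_workspace" "env" {
  for_each     = toset(["test", "prod"])
  name         = "infrastructure-${each.key}" # hypothetical naming scheme
  organization = "example-org"

  # Apply test automatically; prod waits for manual confirmation.
  auto_apply = each.key == "test"

  vcs_repo {
    identifier     = "example-group/infrastructure-live" # hypothetical project path
    branch         = each.key                            # track the matching branch
    oauth_token_id = var.oauth_token_id
  }
}
```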

Third, as I’m not a big fan of Terraform Cloud’s workspace variables, I recommend using locals to configure the parameters for each environment.

The following code snippets illustrate this lesser-known Terraform feature.

Create a Terraform configuration file named environment.tf to configure environment parameters. The following snippet shows how to specify the local value named bucket_name.

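```hcl
locals {
  # Hypothetical value for the test branch; the prod branch sets its own name.
  bucket_name = "demo-bucket-test"
}
```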

This allows you to reference the environment parameter in your Terraform code as local.bucket_name (note the singular local), as shown in the following code snippet.

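```hcl
resource "aws_s3_bucket" "demo" {
  # Resolves to the value defined in environment.tf on the current branch.
  bucket = local.bucket_name
}
```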

Those three approaches provide a clean and straightforward way to use the same Terraform code to deploy multiple similar environments.

Which open-source Terraform modules do you recommend?

I highly recommend the terraform-aws-modules collection maintained by Anton Babenko. Besides that, I've also used the Terraform modules by Cloud Posse. Using open-source Terraform modules is great, as you do not have to reinvent the wheel over and over again.
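
As an illustration, a call to the VPC module from terraform-aws-modules might look like this. The version constraint, names, availability zones, and CIDR ranges are illustrative assumptions; check the module's documentation for the inputs supported by the version you pin.

```hcl
# Community VPC module from the Terraform Registry.
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0" # assumed major version

  name = "demo-test" # hypothetical name
  cidr = "10.0.0.0/16"

  # Assumed region eu-central-1 with two availability zones.
  azs             = ["eu-central-1a", "eu-central-1b"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24"]

  # A single NAT gateway keeps a test environment cheap.
  enable_nat_gateway = true
  single_nat_gateway = true
}
```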

How do you grant Terraform Cloud access to an AWS account?

Of course, no one wants to use static credentials for AWS authentication. Unfortunately, Terraform Cloud does not yet provide a way to use IAM roles out of the box. However, HashiCorp is working on an OpenID Connect integration, which is already available upon request.
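
For reference, HashiCorp has since documented this OpenID Connect integration as dynamic provider credentials. The sketch below assumes that feature is available to your organization: it creates an IAM OIDC provider for app.terraform.io, a role that only a hypothetical workspace may assume, and the two environment variables that tell a workspace to use the role. The organization, workspace, and role names are hypothetical, and the role still needs a permissions policy attached.

```hcl
terraform {
  required_providers {
    aws = { source = "hashicorp/aws" }
    tfe = { source = "hashicorp/tfe" }
    tls = { source = "hashicorp/tls" }
  }
}

variable "workspace_id" {
  type        = string
  description = "Hypothetical: ID of the workspace that deploys to this account."
}

# Fetch the certificate thumbprint of Terraform Cloud's OIDC issuer.
data "tls_certificate" "tfc" {
  url = "https://app.terraform.io"
}

resource "aws_iam_openid_connect_provider" "tfc" {
  url             = "https://app.terraform.io"
  client_id_list  = ["aws.workload.identity"]
  thumbprint_list = [data.tls_certificate.tfc.certificates[0].sha1_fingerprint]
}

# Only runs of the named workspace may assume this role.
resource "aws_iam_role" "tfc" {
  name = "terraform-cloud-run" # hypothetical
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRoleWithWebIdentity"
      Principal = { Federated = aws_iam_openid_connect_provider.tfc.arn }
      Condition = {
        StringEquals = { "app.terraform.io:aud" = "aws.workload.identity" }
        StringLike = {
          "app.terraform.io:sub" = "organization:example-org:project:*:workspace:infrastructure-prod:run_phase:*"
        }
      }
    }]
  })
}

# Tell the workspace to authenticate with the role instead of static keys.
resource "tfe_variable" "provider_auth" {
  key          = "TFC_AWS_PROVIDER_AUTH"
  value        = "true"
  category     = "env"
  workspace_id = var.workspace_id
}

resource "tfe_variable" "run_role_arn" {
  key          = "TFC_AWS_RUN_ROLE_ARN"
  value        = aws_iam_role.tfc.arn
  category     = "env"
  workspace_id = var.workspace_id
}
```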

In the past, I have used the following approach instead. GitLab supports OpenID Connect, so I used GitLab to fetch temporary AWS credentials. Next, I used the Terraform Cloud API to pass those temporary credentials to the workspace. Afterward, when Terraform Cloud runs terraform plan or terraform apply, it uses the temporary AWS credentials.
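
On the AWS side, this approach needs an IAM OIDC provider for GitLab and a role that GitLab CI jobs can assume. A minimal sketch, assuming GitLab.com, an ID token audience of https://gitlab.com, and a hypothetical project path; in line with the permission model described earlier, the pipeline role gets administrator access.

```hcl
# Fetch the certificate thumbprint of GitLab's OIDC issuer.
data "tls_certificate" "gitlab" {
  url = "https://gitlab.com"
}

resource "aws_iam_openid_connect_provider" "gitlab" {
  url = "https://gitlab.com"
  # Must match the aud claim configured for the job's ID token (assumed).
  client_id_list  = ["https://gitlab.com"]
  thumbprint_list = [data.tls_certificate.gitlab.certificates[0].sha1_fingerprint]
}

# Only jobs from the (hypothetical) live repository may assume this role.
resource "aws_iam_role" "gitlab_pipeline" {
  name = "gitlab-infrastructure-pipeline" # hypothetical
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRoleWithWebIdentity"
      Principal = { Federated = aws_iam_openid_connect_provider.gitlab.arn }
      Condition = {
        StringEquals = { "gitlab.com:aud" = "https://gitlab.com" }
        StringLike = {
          "gitlab.com:sub" = "project_path:example-group/infrastructure-live:ref_type:branch:ref:*"
        }
      }
    }]
  })
}

# The pipeline, not the engineers, holds administrator access.
resource "aws_iam_role_policy_attachment" "gitlab_pipeline_admin" {
  role       = aws_iam_role.gitlab_pipeline.name
  policy_arn = "arn:aws:iam::aws:policy/AdministratorAccess"
}
```

A CI job would then request an ID token via GitLab's id_tokens keyword, exchange it with aws sts assume-role-with-web-identity, and write the returned AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_SESSION_TOKEN as sensitive environment variables on the workspace through the Terraform Cloud API.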

Further reading

- Builder's Diary Vol. 1: Successful Cloud Migrations

- Builder's Diary Vol. 2: Serverless ETL with Airflow and Athena

- Builder's Diary Vol. 4: Serverless Software Engineering

- Builder's Diary Vol. 5: ECS Anywhere

- Builder's Diary Vol. 6: Serverless and DevOps - a match made in heaven