Building with EC2: 10 Tips for the Successful Cloud Architect

Despite the hype around Kubernetes and serverless, the vast majority of cloud workloads still run on virtual machines. AWS offers the Amazon Elastic Compute Cloud (EC2) service, where you can launch virtual machines (AWS calls them instances). The EC2 service has evolved over 13 years. More performance, lower and less volatile latencies, and easier management are just some of the innovations of recent years. This blog post demonstrates how you can build modern architectures on EC2 and comes with ten tips to avoid the common pitfalls.

This is a cross-post from the Cloudcraft blog.

The Available Software

EC2 provides Windows and Linux instances. You can find all kinds of versions of Windows and many Linux distributions. Amazon Linux is Amazon’s in-house Linux distribution that comes with the best integration into the AWS ecosystem. If you use CentOS or RHEL already, Amazon Linux feels familiar to you.

Operating systems are made available as so-called Amazon Machine Images (AMI). Keep in mind that everyone (including you) can publish AMIs.

Tip #1: Only use AMIs published by AWS or trusted sources like well-known software vendors. Do not rely on the name of the images only. Ask the software vendor for the exact AMI IDs. AWS does not review the names! An attacker could create an image with a misleading name.
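To make Tip #1 concrete, here is a minimal sketch of an allowlist check in Python. It assumes you have image metadata as a dictionary with `ImageId` and `ImageOwnerAlias` keys, which is how I would expect the EC2 DescribeImages output to look. The AMI IDs and the allowlists are hypothetical placeholders, not real images.

```python
from typing import Mapping

# Hypothetical allowlist: AMI IDs you confirmed directly with the vendor.
# The ID below is a placeholder, not a real image.
TRUSTED_AMI_IDS = {"ami-0123456789abcdef0"}

# Owner aliases you decide to trust without an explicit ID match (assumption).
TRUSTED_OWNER_ALIASES = {"amazon"}


def is_trusted_image(image: Mapping[str, str]) -> bool:
    """Return True only if the image is allowlisted by ID or by owner alias.

    The image name is deliberately ignored: anyone can pick any name.
    """
    if image.get("ImageId") in TRUSTED_AMI_IDS:
        return True
    return image.get("ImageOwnerAlias") in TRUSTED_OWNER_ALIASES
```

The point of the design is that the `Name` field never takes part in the decision, so a misleading name cannot get an image past the check.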

In summary, an EC2 instance is launched based on an AMI that contains at least an operating system.

The Available Hardware

If you dig deeper into the EC2 specs, you are confronted with a lesser-known unit (GiB) to specify available storage and memory sizes. One gibibyte (GiB) is 1,073,741,824 bytes while one gigabyte (GB) is 1,000,000,000 bytes. Therefore, 1 GiB > 1 GB. If you migrate existing workloads to AWS, you likely have to convert between the two units.

Tip #2: Google can convert GiB to GB. Search for “1 GiB to GB”, and Google will do the math for you.
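If you prefer to script the conversion, for example when translating a list of on-premises server specs, the math fits in a few lines of Python. This is only a sketch; the function names are my own.

```python
BYTES_PER_GIB = 2**30  # 1,073,741,824 bytes
BYTES_PER_GB = 10**9   # 1,000,000,000 bytes


def gib_to_gb(gib: float) -> float:
    """Convert gibibytes to gigabytes."""
    return gib * BYTES_PER_GIB / BYTES_PER_GB


def gb_to_gib(gb: float) -> float:
    """Convert gigabytes to gibibytes."""
    return gb * BYTES_PER_GB / BYTES_PER_GIB
```

For example, `gib_to_gb(8)` yields roughly 8.59, so an instance with 8 GiB of memory has slightly more than 8 GB.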

You can get instances with up to 448 CPU cores, 24 TiB of memory, 100 Gbit/s network throughput, and ~58 TiB of local disk space. If you need GPUs, you can get up to 16 GPUs with 192 GiB of memory as well. Unfortunately, you cannot configure an instance with exactly the hardware you need. Instead, AWS provides instance types, and you have to select one that fits your needs. A few examples (prices are valid for the us-east-1 region):

| Purpose | Architecture | Instance Type | CPU Cores | Memory | Monthly Cost ($) |
|---|---|---|---|---|---|
| Compute-optimized | Intel | c5.large | 2 | 4 GiB | 61.20 |
| General-purpose | ARM | a1.large | 2 | 4 GiB | 36.72 |
| General-purpose | Intel | m5.large | 2 | 8 GiB | 69.12 |
| Memory-optimized | Intel | r5.large | 2 | 16 GiB | 90.72 |
| General-purpose | AMD | m5a.large | 2 | 8 GiB | 61.92 |
| General-purpose | AMD | m5a.xlarge | 4 | 16 GiB | 123.84 |
| General-purpose | AMD | m5a.16xlarge | 64 | 256 GiB | 1,981.44 |

Tip #3: Get the best overview of all available instance types in a given region here: ec2instances.info

If you stay in the same instance family (e.g., m5a), the cost scales linearly with the size.

| Family | Size | Factor | Monthly Cost ($) |
|---|---|---|---|
| m5a | large | 1 | 1*61.92 |
| m5a | xlarge | 2 | 2*61.92 |
| m5a | 2xlarge | 4 | 4*61.92 |
| m5a | 16xlarge | 32 | 32*61.92 |
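The linear pricing from the table above can be sketched as a small Python helper. The base price and the size factors are taken from the table; the function and constant names are my own, and real prices change over time and differ per region.

```python
# Monthly price of m5a.large in us-east-1, as listed in the table above.
M5A_LARGE_MONTHLY_USD = 61.92

# Size factors relative to "large", as listed in the table above.
SIZE_FACTORS = {"large": 1, "xlarge": 2, "2xlarge": 4, "16xlarge": 32}


def monthly_cost(size: str, base: float = M5A_LARGE_MONTHLY_USD) -> float:
    """Return the monthly cost in USD for a size within one instance family."""
    return round(SIZE_FACTORS[size] * base, 2)
```

For example, `monthly_cost("16xlarge")` comes out at about 1981.44, which matches the price of m5a.16xlarge in the first table.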

The AWS offering includes CPUs by Intel and AMD (both x86-64 architecture, also known as AMD64) as well as ARM (ARM64 architecture). In terms of cost, the following ordering applies: ARM < AMD < Intel.

Tip #4: You can cut costs by about 10% if you select an AMD instead of an Intel instance type, and you can still run the same applications. You can save more with ARM instances, but you have to check whether your application runs on ARM.
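You can check the savings yourself with the prices from the table earlier in this post. The helper below is a sketch, and its name is my own.

```python
def savings_percent(baseline: float, alternative: float) -> float:
    """Return how much cheaper the alternative is, in percent of the baseline."""
    return round((baseline - alternative) / baseline * 100, 1)


# Prices from the table above (us-east-1, monthly, in USD).
M5_LARGE = 69.12   # Intel
M5A_LARGE = 61.92  # AMD

amd_savings = savings_percent(M5_LARGE, M5A_LARGE)
```

With these two prices, `amd_savings` works out to roughly 10.4.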

If you need bare-metal performance, EC2 has you covered. An instance can be either a virtual machine or a bare-metal machine. If you prefer not to share a host with other tenants (AWS customers) for compliance reasons, you can run virtual machines in a dedicated fashion.

Tip #5: The network throughput of EC2 instances is a resource limit that is easy to miss. Unfortunately, AWS does not publish all the numbers that are required. You have to rely on network throughput data tables generated by the community.

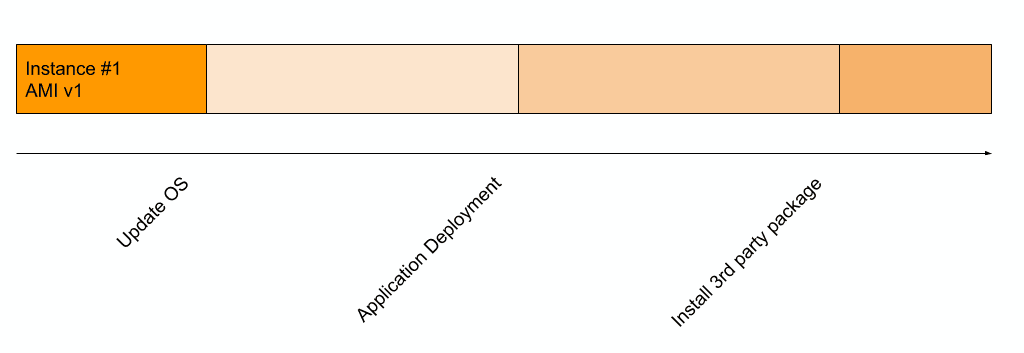

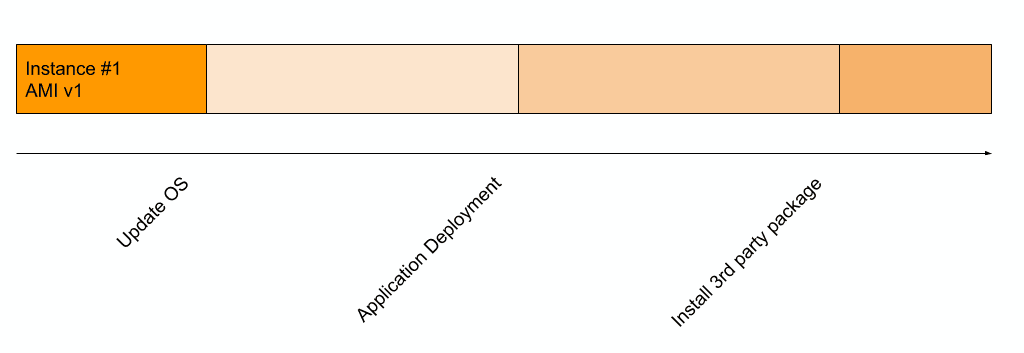

Mutable or Immutable Management of Instances

When it comes to managing EC2 instances, you have to pick one of two approaches:

A mutable EC2 instance is created once and then lives for many years. This comes with a significant disadvantage. If you manage more than one instance, sooner or later, the configuration of those instances will differ because someone forgets to run a command on the other instance. We call this configuration drift, which is the root of all issues related to “but it works on this instance, why not on the other”.

The workflow: humans or scripts log on to the running instance (e.g., via SSH or RDP) and do their work. For example, they apply OS updates, install new packages, or modify configuration files. Deployments happen while the EC2 instance is running.

To reduce the chances of configuration drift, you should use a tool to apply changes to running instances instead of remote sessions.

Tip #6: AWS offers a suite of tools combined in the AWS Systems Manager (SSM) service to manage mutable instances. SSM covers patching during defined time windows, running commands on a fleet of instances, remote shells, software inventories, and much more. To deploy your software, I recommend AWS CodeDeploy.
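To illustrate what configuration drift looks like in practice, here is a minimal sketch that compares the software inventories of two instances. It assumes you already have each inventory as a plain mapping from package name to version. How you collect it is up to you, and the package names and versions in the usage note are made up.

```python
from typing import Dict, Optional, Tuple

Inventory = Dict[str, str]  # package name -> installed version


def find_drift(
    a: Inventory, b: Inventory
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return every package whose version differs between two instances.

    A missing package is reported as None on the side where it is absent.
    """
    drift = {}
    for package in sorted(a.keys() | b.keys()):
        if a.get(package) != b.get(package):
            drift[package] = (a.get(package), b.get(package))
    return drift
```

Calling `find_drift({"openssl": "1.0.2k"}, {"openssl": "1.1.1c"})` reports `openssl` with both versions, while two identical inventories produce an empty result.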

An immutable EC2 instance is never changed after creation. This comes with a significant benefit: All your instances are precisely the same. No configuration drift is possible.

The workflow: You cannot change an instance. Instead, you launch a new instance based on a newer AMI and delete (AWS calls it terminate) the old one. So how do you get a newer AMI? You can build your own images. To do so, you launch a temporary instance based on an existing AMI (e.g., Amazon Linux 2). When the instance is ready, you log on, install the needed packages, add your application, and create configuration files. Once you are done, you create an AMI based on the EC2 instance. Once the AMI is created, you terminate the temporary EC2 instance. Does this sound like a lot of manual work? Luckily, there are tools to help us with that.

Tip #7: Packer by HashiCorp is the most popular option. With Packer, you only have to care about automating the setup procedure (e.g., a bash script) while Packer takes care of the rest and spits out an AMI at the end.

A single instance is a single point of failure

If you aim for a highly available or fault-tolerant architecture, you need more than one EC2 instance.

If the host has an issue, your EC2 instance will become unavailable. AWS will not migrate your instance to another host by default. Another strong argument against depending on a single EC2 instance in your architecture: AWS promises an uptime of only 90% for a single EC2 instance.
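To see why a second instance helps, here is a rough back-of-the-envelope calculation. It makes two simplifying assumptions that AWS does not guarantee: that the 90% figure can be read as the probability of an instance being up, and that instances fail independently of each other.

```python
def combined_availability(single: float, instances: int) -> float:
    """Probability that at least one of N independent instances is up.

    Assumes independent failures, which is a simplification.
    """
    if instances < 1:
        raise ValueError("need at least one instance")
    return 1 - (1 - single) ** instances


two_instances = combined_availability(0.90, 2)
```

Under these assumptions, two instances are both down only 1% of the time, so `two_instances` is about 0.99.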

Tip #8: If you run a single EC2 instance, enable auto-recovery to move the instance to another host in case of issues.

Besides that, you also should spread your workload across Availability Zones (AZs). You can think of an AZ as an isolated data center. Two AZs are independent (location, power, cooling, connectivity, …). You indirectly control the AZ into which an instance is launched. You define the AZ by selecting a VPC subnet, which can only belong to one AZ. Therefore, you have to launch two EC2 instances in two different subnets that are created in separate AZs.

If you follow the immutable approach (discussed earlier in this post), you can make use of Auto Scaling Groups (ASGs) to launch N instances that are evenly spread across AZs. Don’t be confused by the name Auto Scaling Group. On its own, an ASG does not increase or decrease the number of instances for you; that only happens if you add scaling policies. The benefit of ASGs is that they make sure that a desired number of instances is running at any time. In the rare case that an instance fails, the ASG will replace it within minutes.

Tip #9: If you run an ASG with N>=2 in multiple subnets (in separate AZs), you are fine in terms of availability. This approach only works for immutable instances.
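The idea of spreading N instances evenly across subnets in separate AZs can be sketched with a simple round-robin placement. This is not how AWS implements ASGs internally, only an illustration of the outcome. The subnet IDs and AZ names in the usage note are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def spread_across_subnets(count: int, subnet_to_az: Dict[str, str]) -> List[str]:
    """Assign each of `count` instances to a subnet in round-robin order."""
    subnet_ids = sorted(subnet_to_az)
    if not subnet_ids:
        raise ValueError("need at least one subnet")
    return [subnet_ids[i % len(subnet_ids)] for i in range(count)]


def instances_per_az(placement: List[str], subnet_to_az: Dict[str, str]) -> Counter:
    """Count how many instances end up in each Availability Zone."""
    return Counter(subnet_to_az[subnet_id] for subnet_id in placement)
```

With two subnets such as `{"subnet-a": "us-east-1a", "subnet-b": "us-east-1b"}` and three instances, one AZ gets two instances and the other gets one, so losing a single AZ never takes down everything.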

If you follow the mutable approach, you are in trouble. You need to ensure that you can spin up a new instance to replace the failed one promptly. You also have to take backups of your instance (e.g., with AWS Backup).

There is one problem if you run more than one EC2 instance: How do clients connect to the instances? You now have two IP addresses. You could use DNS to help you, but the best solution is described in the next section.

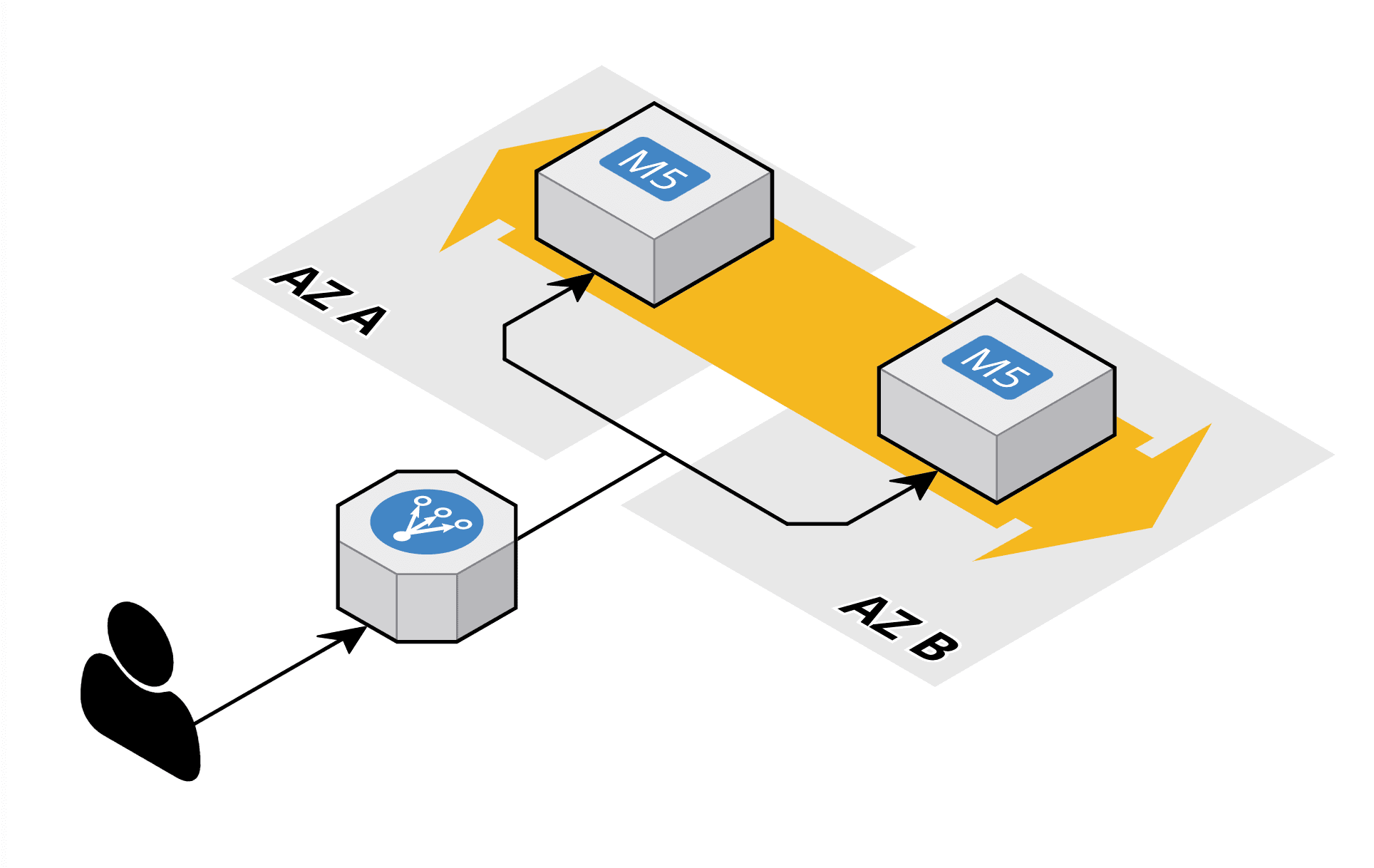

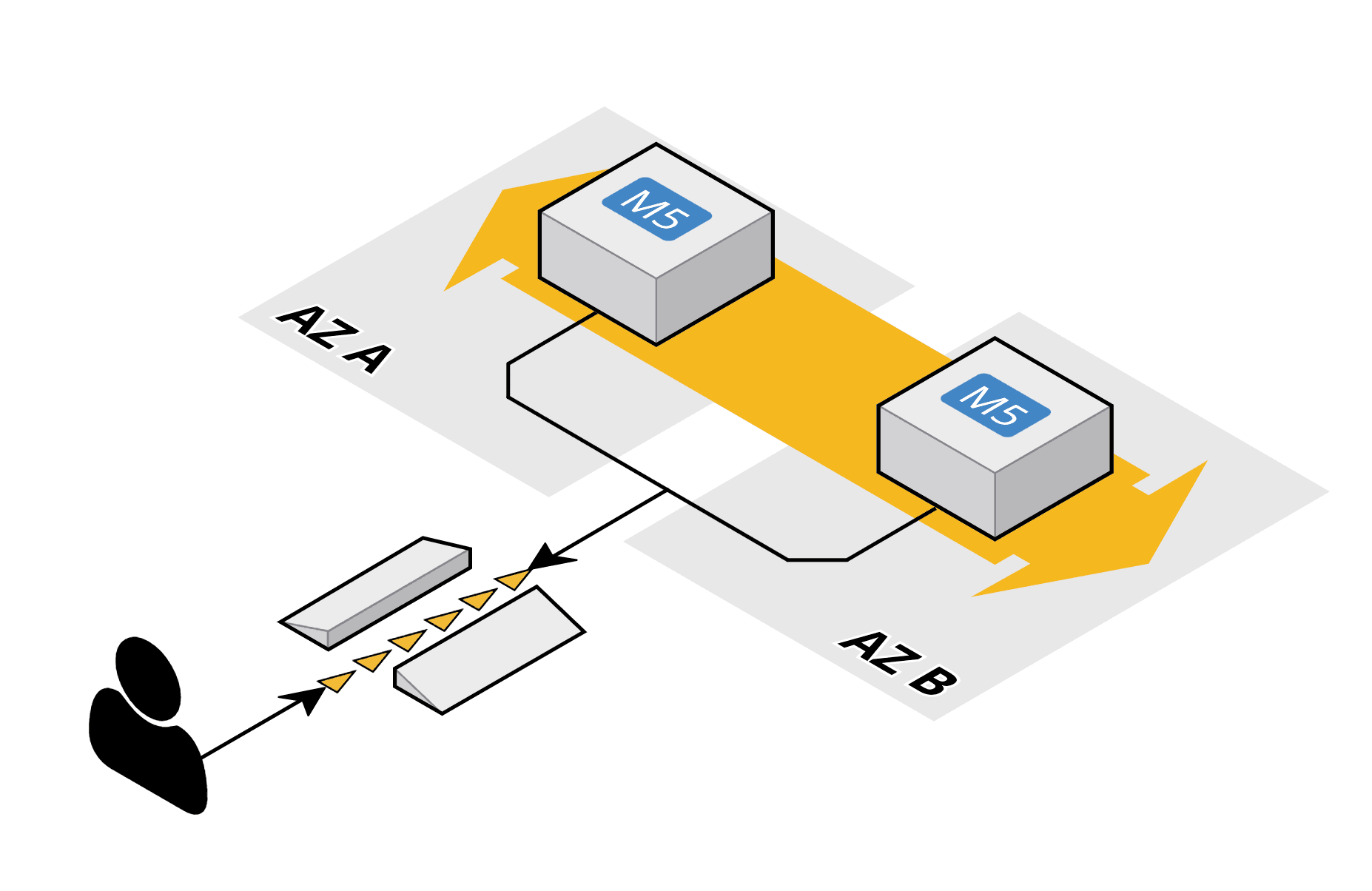

Decoupling with Load Balancer or SQS

AWS offers two ways to decouple clients from your EC2 instances. Load balancers can be used for synchronous decoupling. The client communicates with the load balancer, while the load balancer knows about the EC2 instances and forwards traffic to them. The best part: load balancers offered by AWS are highly available by default. The following figure shows how a client connects to a load balancer, which connects to one of the instances. I’ve created the AWS diagram with Cloudcraft.
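To illustrate the principle, here is a toy round-robin balancer in Python that skips unhealthy instances. It is a sketch of the concept only and says nothing about how the AWS load balancers work internally. The class name and instance IDs are hypothetical.

```python
from typing import List


class RoundRobinBalancer:
    """Forward each request to the next healthy instance in turn."""

    def __init__(self, instances: List[str]) -> None:
        self._instances = list(instances)
        self._healthy = set(instances)
        self._next = 0

    def mark_unhealthy(self, instance: str) -> None:
        self._healthy.discard(instance)

    def mark_healthy(self, instance: str) -> None:
        if instance in self._instances:
            self._healthy.add(instance)

    def route(self) -> str:
        """Return the instance that should handle the next request."""
        # Try each instance at most once, starting at the current position.
        for _ in range(len(self._instances)):
            candidate = self._instances[self._next]
            self._next = (self._next + 1) % len(self._instances)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy instances available")
```

The client only ever talks to the balancer. When an instance is marked unhealthy, requests simply continue to flow to the remaining instances.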

If possible, you should decouple the clients from your EC2 instances in an asynchronous way using a message queue. The most convenient option is the Amazon Simple Queue Service (SQS). Again, the queues offered by AWS are highly available. The key benefit is that the client can continue to send new jobs even if all EC2 instances are down (e.g., for maintenance). The following figure shows how it works.
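The asynchronous pattern can be sketched with Python's in-memory `queue.Queue` standing in for SQS. This is not the SQS API, only an illustration of the decoupling. The function names are my own.

```python
import queue
from typing import List


def submit_job(jobs: "queue.Queue[str]", job: str) -> None:
    """Client side: enqueue a job without knowing anything about workers."""
    jobs.put(job)


def drain_jobs(jobs: "queue.Queue[str]") -> List[str]:
    """Worker side: process everything that is currently waiting."""
    processed = []
    while True:
        try:
            job = jobs.get_nowait()
        except queue.Empty:
            return processed
        processed.append(f"done:{job}")
```

The client can call `submit_job` any number of times while no worker is running. The jobs wait in the queue until a worker comes back and calls `drain_jobs`.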

Tip #10: Always decouple your clients from your EC2 instances. If possible, use the asynchronous approach with SQS. Alternatively, use an Elastic Load Balancer for synchronous workloads.

Summary

EC2 instances are the core of any architecture on AWS. With the right tools and patterns, you can create highly available architectures that can be operated efficiently. Follow my ten tips to ensure that you avoid common pitfalls and utilize the powers of EC2.