Choosing the best way to scale EC2 instances on demand

Migrating workloads to the cloud, and specifically to AWS, comes with many advantages. You can operate workloads in new ways. When you only pay for what you use and can add capacity within minutes, the world of auto-scaling opens up. When your workload is idle, you remove capacity and lower your expenses. When the workload is busy, you add capacity to keep your users happy. An excellent idea that works perfectly in theory. In practice, many pitfalls are waiting for you.

This is a cross-post from the Cloudcraft blog.

Read on to understand the options that AWS provides to help you scale EC2 instances in and out.

Vertical versus horizontal scaling

Vertical scaling refers to the practice of upgrading the hardware of the machine that runs your workloads. Instead of running on 16 cores, you run on 32 cores. The problem is that you cannot add as many cores as you wish: CPUs with an unlimited number of cores have not been invented yet. Besides that, CPUs with a high core count are usually more expensive per core than CPUs with a lower core count.

On AWS, it is not possible to change the hardware specs of a running EC2 instance. Vertical scaling always comes with a short downtime. You have to stop the EC2 instance, change the instance type, and start the EC2 instance again.
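The stop, modify, start sequence can be sketched with boto3's EC2 client. This is a minimal sketch that assumes an EBS-backed instance and a client created with `boto3.client("ec2")`; the function name `resize_instance` is hypothetical.

```python
def resize_instance(ec2, instance_id: str, new_instance_type: str) -> None:
    """Vertically scale one EC2 instance (hypothetical helper).

    `ec2` is assumed to be a boto3 EC2 client, e.g. boto3.client("ec2").
    The instance is unavailable between stop and start: that is the downtime.
    """
    # 1. Stop the instance and block until it has fully stopped.
    ec2.stop_instances(InstanceIds=[instance_id])
    ec2.get_waiter("instance_stopped").wait(InstanceIds=[instance_id])

    # 2. Change the instance type; this is only allowed while it is stopped.
    ec2.modify_instance_attribute(
        InstanceId=instance_id,
        InstanceType={"Value": new_instance_type},
    )

    # 3. Start the instance again and block until it is running.
    ec2.start_instances(InstanceIds=[instance_id])
    ec2.get_waiter("instance_running").wait(InstanceIds=[instance_id])
```

For example, `resize_instance(boto3.client("ec2"), "i-0123456789abcdef0", "m5.2xlarge")` (hypothetical instance ID and type) moves one instance to a larger size, at the cost of the instance being offline from the moment it stops until it runs again.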

Horizontal scaling is about adding more machines, usually by using cheap commodity hardware.

Horizontal auto-scaling comes with important limitations:

- It only works when your workloads can be scaled horizontally by adding more EC2 instances. This usually requires that the EC2 instances run behind a load balancer or that requests are processed asynchronously from a message queue.

- You have to leverage Auto Scaling Groups to manage the EC2 instances.

Let’s have a closer look at Auto Scaling Groups.

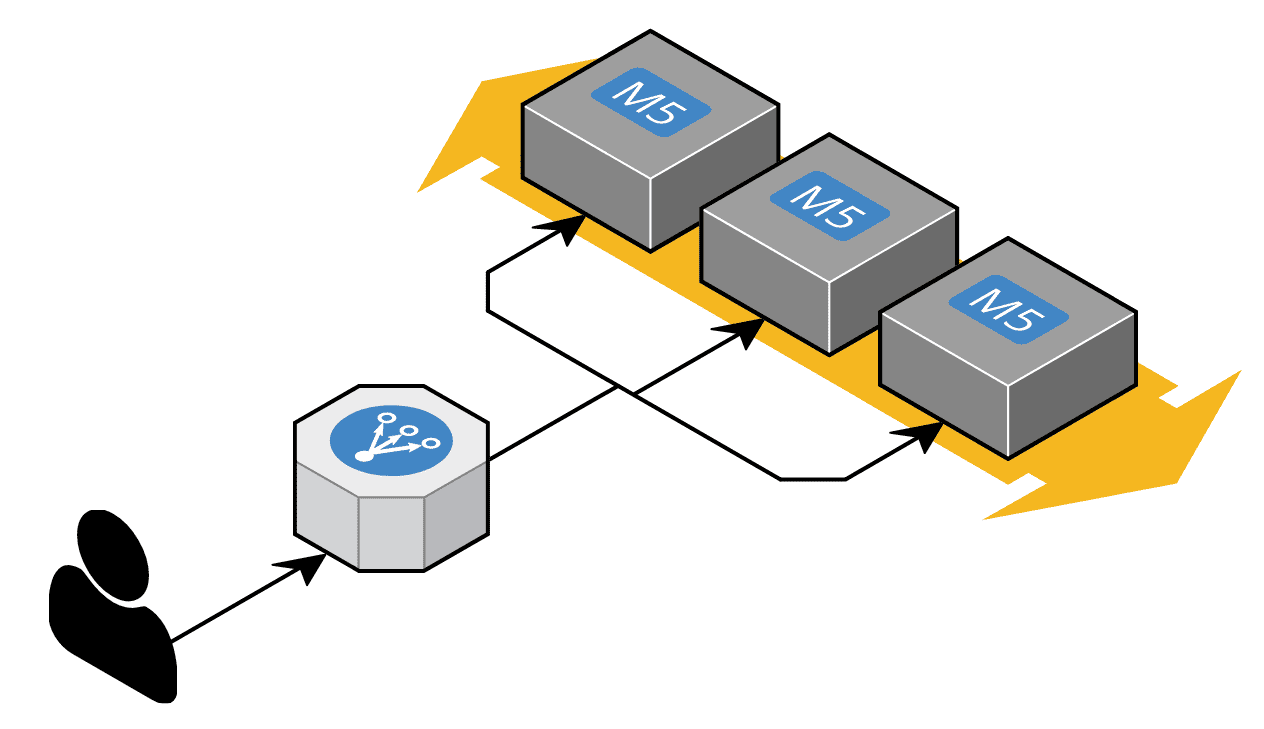

Auto Scaling Groups

The name Auto Scaling Group (ASG) is misleading. The surprising fact is this: ASGs don’t provide auto-scaling capabilities. An ASG has one job: keep the number of running EC2 instances in sync with a desired number of instances. The ASG itself does not modify the desired number of instances. An external trigger is responsible for adjusting it. When the number increases, the ASG adds EC2 instances (scale-out). When the number decreases, the ASG terminates EC2 instances (scale-in).
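To make the ASG's single job concrete, here is a toy model in plain Python, not the real service: the external trigger only changes `desired_capacity`, and `reconcile` launches or terminates simulated instances until the count matches. The class name, the fake instance IDs, and the oldest-first termination order are hypothetical simplifications; a real ASG applies its own termination policies. With boto3, the real-world counterpart of the trigger is the Auto Scaling client's `set_desired_capacity` call.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ToyAutoScalingGroup:
    """Toy model of an ASG: keep running instances in sync with desired_capacity."""

    min_size: int
    max_size: int
    desired_capacity: int
    instances: list[str] = field(default_factory=list)
    _launched: int = field(default=0, repr=False)  # counter for fake instance IDs

    def set_desired_capacity(self, value: int) -> None:
        # This is all an external trigger does: change the desired number.
        if not self.min_size <= value <= self.max_size:
            raise ValueError("desired capacity must be within [min_size, max_size]")
        self.desired_capacity = value

    def reconcile(self) -> None:
        # Scale out: launch (simulated) instances until the count matches.
        while len(self.instances) < self.desired_capacity:
            self._launched += 1
            self.instances.append(f"i-{self._launched:04d}")
        # Scale in: terminate instances, oldest first in this toy model.
        while len(self.instances) > self.desired_capacity:
            self.instances.pop(0)
```

Note that nothing in the class looks at CPU, network, or requests: deciding *when* to change the desired number is left entirely to something outside the group.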

I downplayed the capabilities of an ASG. ASGs are great and can do many things, but they will not auto-scale your workload. To do so, we need an external trigger to adjust the desired number of instances. AWS provides four options:

- Simple Scaling

- Step Scaling

- Target Tracking

- AWS Auto Scaling

Let’s have a look at each of them.

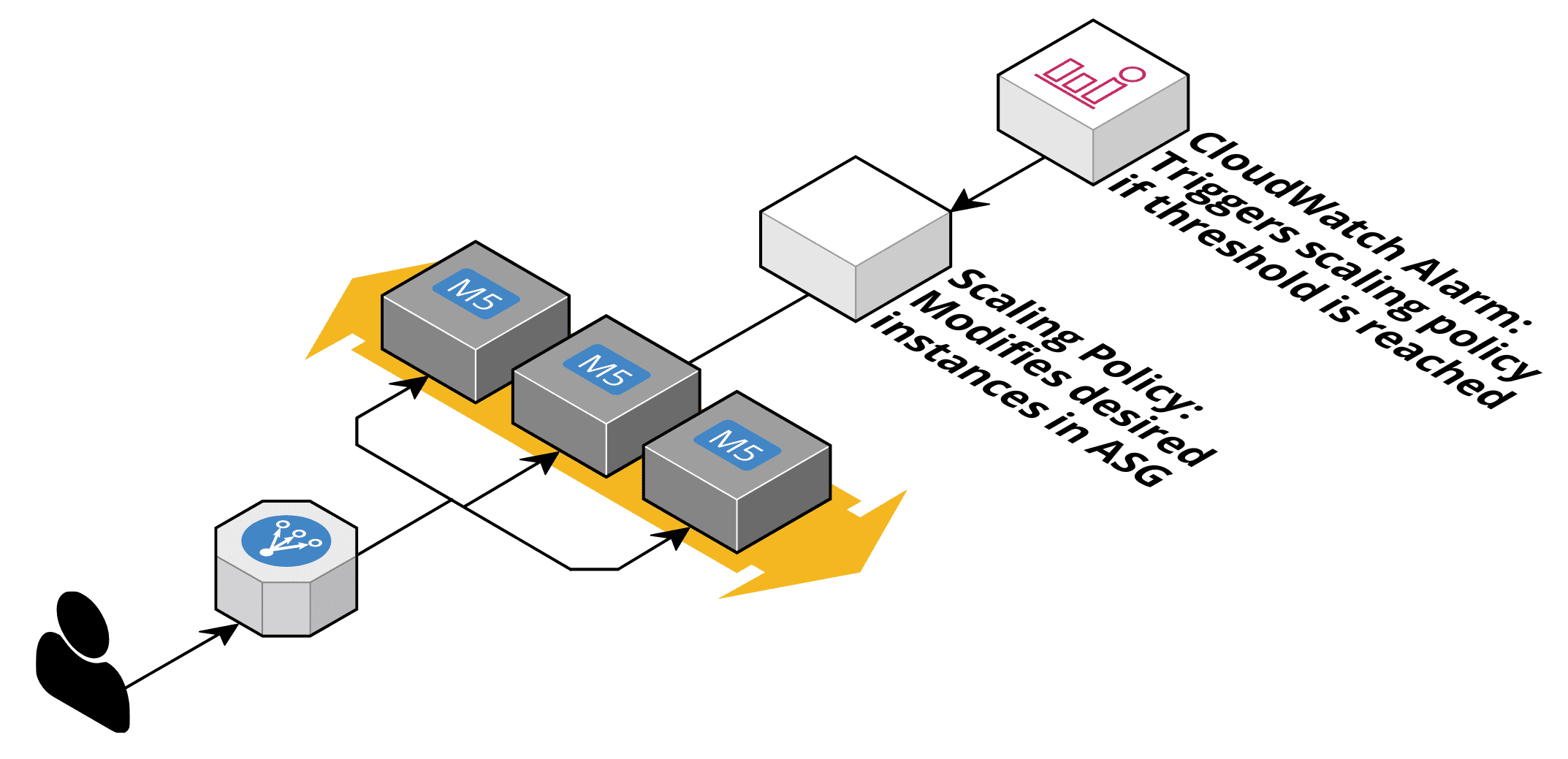

Simple Scaling

This option relies on a CloudWatch Alarm. The alarm watches a metric such as network throughput, CPU utilization, or the number of requests per target behind a load balancer. If the metric crosses a predefined threshold, the alarm triggers a scaling policy. The scaling policy defines:

- How many instances to add or terminate. You can specify an absolute or a relative number.

- The pause between two scaling activities, known as the cooldown. Remember that it takes minutes until newly launched EC2 instances affect the CloudWatch metric that you use to trigger the scaling policy.
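A Simple Scaling setup consists of two pieces: a scaling policy and a CloudWatch Alarm that invokes it. The sketch below only builds the keyword arguments for boto3's `put_scaling_policy` (Auto Scaling client) and `put_metric_alarm` (CloudWatch client), so it runs without AWS credentials. The group, policy, and alarm names, the 70% threshold, and the three one-minute evaluation periods are assumptions chosen for illustration.

```python
def simple_scaling_policy(asg_name: str, adjustment: int = 1,
                          cooldown_seconds: int = 300) -> dict:
    """kwargs for autoscaling.put_scaling_policy (SimpleScaling)."""
    return {
        "AutoScalingGroupName": asg_name,
        "PolicyName": f"{asg_name}-simple-scale-out",  # hypothetical name
        "PolicyType": "SimpleScaling",
        # ChangeInCapacity = relative change; ExactCapacity = absolute number.
        "AdjustmentType": "ChangeInCapacity",
        "ScalingAdjustment": adjustment,
        "Cooldown": cooldown_seconds,  # pause between two scaling activities
    }


def cpu_alarm(asg_name: str, policy_arn: str, threshold: float = 70.0) -> dict:
    """kwargs for cloudwatch.put_metric_alarm that invokes the policy."""
    return {
        "AlarmName": f"{asg_name}-cpu-high",  # hypothetical name
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "AutoScalingGroupName", "Value": asg_name}],
        "Statistic": "Average",
        "Period": 60,  # assumes detailed monitoring (1-minute data points)
        "EvaluationPeriods": 3,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [policy_arn],  # the alarm triggers the scaling policy
    }
```

In a real account you would call `autoscaling.put_scaling_policy(**simple_scaling_policy("web-asg"))`, take the `PolicyARN` from the response, and pass it to `cloudwatch.put_metric_alarm(**cpu_alarm("web-asg", arn))`.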

A couple of pitfalls here:

- If traffic rises sharply, scaling out is likely too slow. Keep in mind that the cooldown limits how often a scale-out can happen. Usually, the cooldown is somewhere around 5-10 minutes.

- You have to choose a metric for scaling that represents the bottleneck of the architecture under load. If you scale based on CPU, but the bottleneck is the database connection pool size, you will run into issues. Load testing is required!

- Scaling takes time. Metric data is reported at most once per minute, and only every five minutes for EC2 instances with basic monitoring. CloudWatch alarms take time to fire as well. Last but not least, launching new EC2 instances and starting your application also take time. It is highly recommended to use AMIs that already contain your application to eliminate installation time.
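A rough back-of-the-envelope model shows why the cooldown makes Simple Scaling slow during a sharp rise in traffic. It assumes the load jumps at minute 0, each scaling activity adds a fixed number of instances, and the alarm stays in the ALARM state (CloudWatch keeps invoking Auto Scaling actions while an alarm remains in that state). The function name and all numbers in the example are assumptions, and instance boot time is ignored, so reality is even slower.

```python
import math


def simple_scaling_catch_up_minutes(current: int, required: int, step: int,
                                    detection_minutes: int,
                                    cooldown_minutes: int) -> int:
    """Rough minutes until Simple Scaling has *requested* enough instances.

    Hypothetical model: load jumps at minute 0, every scaling activity adds
    `step` instances, and boot time is not included.
    """
    if required <= current:
        return 0
    activities = math.ceil((required - current) / step)
    # The first activity happens once the alarm fires; every further
    # activity has to wait for the cooldown to expire.
    return detection_minutes + (activities - 1) * cooldown_minutes
```

With 2 running instances, 10 required, one instance per activity, 3 minutes to detect the spike, and a 5-minute cooldown, the function returns 38: more than half an hour before the last instance is even requested.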

The next approach deals better with a sharp rise in traffic.

Step Scaling

Step scaling also requires a CloudWatch Alarm, but it lets you react differently depending on how bad things are. You could add one instance if CPU utilization exceeds 60%. But if CPU utilization grows to 70%, you want to add two instances. If it rises to 80%, you add four instances. If it grows to 90%, you add eight.
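The step logic from this example boils down to a lookup table. This plain-Python sketch is not an AWS API; it returns how many instances to add for a given CPU utilization, assuming the alarm threshold is 60% and using the steps above. The constant and function names are hypothetical.

```python
ALARM_THRESHOLD = 60.0  # percent CPU; below or at this, the alarm is not breached

# (lower bound in percent CPU, instances to add), highest step first.
STEPS = [(90.0, 8), (80.0, 4), (70.0, 2)]


def instances_to_add(cpu_percent: float) -> int:
    """Map the current CPU utilization to a step adjustment."""
    if cpu_percent <= ALARM_THRESHOLD:
        return 0  # no breach, no scaling
    for lower_bound, add in STEPS:
        if cpu_percent >= lower_bound:
            return add
    return 1  # breached, but below the 70% step
```

For example, 65% CPU yields one instance, 85% yields four, and 95% yields eight: the further the metric is from the threshold, the bigger the reaction.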

There is no cooldown either. Instead, you can define an estimated instance warmup time. During this time, step scaling does not count a newly launched EC2 instance toward the number of running instances.
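In an actual StepScaling policy, the step bounds are expressed relative to the alarm threshold rather than as absolute percentages, and the estimated instance warmup takes the place of the cooldown. The sketch below builds keyword arguments for boto3's `put_scaling_policy`; it assumes a separate CloudWatch Alarm (not shown) that fires above 60% CPU, and the group and policy names are hypothetical.

```python
def step_scaling_policy(asg_name: str, warmup_seconds: int = 300) -> dict:
    """kwargs for autoscaling.put_scaling_policy (StepScaling)."""
    return {
        "AutoScalingGroupName": asg_name,
        "PolicyName": f"{asg_name}-step-scale-out",  # hypothetical name
        "PolicyType": "StepScaling",
        "AdjustmentType": "ChangeInCapacity",
        "MetricAggregationType": "Average",
        # Bounds are offsets from the alarm threshold (assumed 60% CPU):
        # 60-70% -> +1, 70-80% -> +2, 80-90% -> +4, 90% and above -> +8
        "StepAdjustments": [
            {"MetricIntervalLowerBound": 0.0, "MetricIntervalUpperBound": 10.0,
             "ScalingAdjustment": 1},
            {"MetricIntervalLowerBound": 10.0, "MetricIntervalUpperBound": 20.0,
             "ScalingAdjustment": 2},
            {"MetricIntervalLowerBound": 20.0, "MetricIntervalUpperBound": 30.0,
             "ScalingAdjustment": 4},
            # No upper bound: this step covers everything above 90%.
            {"MetricIntervalLowerBound": 30.0, "ScalingAdjustment": 8},
        ],
        # No "Cooldown" here; new instances are treated as warming up instead.
        "EstimatedInstanceWarmup": warmup_seconds,
    }
```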

A couple of pitfalls here:

- If your estimated instance warmup is too low, you will scale out more often than required. Keep in mind that it takes time until a newly launched instance affects your scaling metric (usually around 5-10 minutes).

- The scaling metric pitfall of Simple Scaling still applies.

If the previous approaches look too cumbersome, Target Tracking might be for you.

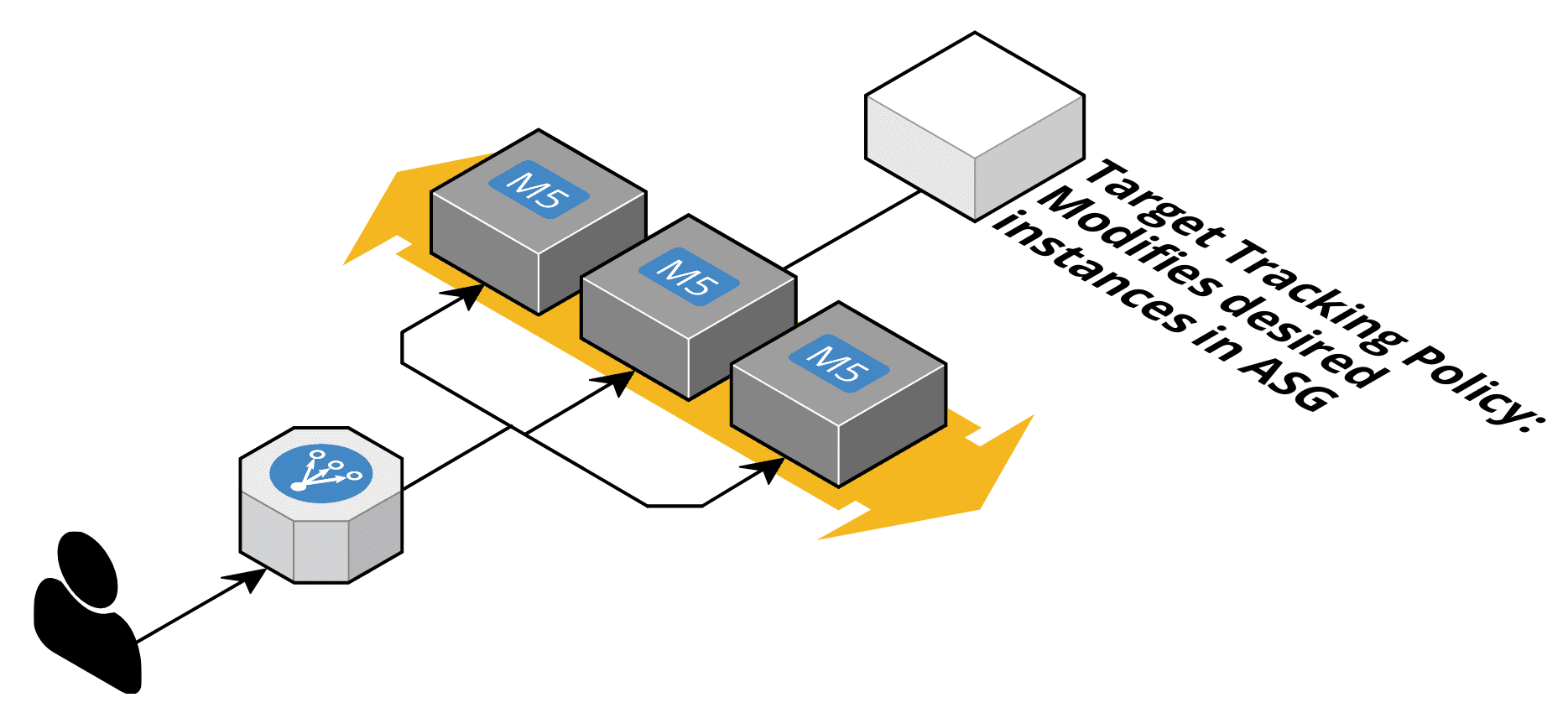

Target Tracking

With target tracking, you ask AWS to keep a metric close to a target value. For example, you can tell AWS to keep the average CPU utilization of an ASG at 50%. AWS adjusts the number of instances to move the metric toward the target. No CloudWatch Alarm is required here: AWS creates the alarms on your behalf.
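Target tracking behaves roughly proportionally: if the metric is 60% above the target, capacity grows by about 60%. The sketch below is an approximation for intuition only, not AWS's exact algorithm (which, according to AWS, scales out proportionally but scales in more gradually). It assumes the metric is a per-instance average that drops as instances are added, which holds for CPU utilization when load is spread evenly; the function name is hypothetical.

```python
import math


def target_tracking_estimate(current_instances: int, metric_value: float,
                             target_value: float, min_size: int,
                             max_size: int) -> int:
    """Rough capacity that brings a per-instance average metric to target.

    Approximation only: assumes total load stays constant, so the average
    metric scales with 1 / number_of_instances.
    """
    desired = math.ceil(current_instances * metric_value / target_value)
    # The ASG never goes below its minimum or above its maximum size.
    return max(min_size, min(max_size, desired))
```

For 4 instances averaging 80% CPU with a 50% target, the estimate is `ceil(4 * 80 / 50) = 7` instances.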

The following metrics are supported by target tracking out-of-the-box:

- Average CPU utilization of the Auto Scaling group.

- Average number of bytes received on all network interfaces by the Auto Scaling group.

- Average number of bytes sent out on all network interfaces by the Auto Scaling group.

- Number of requests completed per target in an Application Load Balancer target group.

You can also define custom metrics if you wish, but the scaling metric pitfall of Simple Scaling still applies.
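The four predefined metrics listed above map to predefined metric types in the EC2 Auto Scaling API. The sketch builds the keyword arguments for a `TargetTrackingScaling` policy with boto3's `put_scaling_policy`; the short dictionary keys (`"cpu"`, `"alb_requests"`, and so on) and the policy names are hypothetical conveniences. The request-count metric additionally needs a `ResourceLabel` that identifies the load balancer and target group.

```python
from typing import Optional

# Short (hypothetical) keys -> predefined metric types of EC2 Auto Scaling.
PREDEFINED_METRICS = {
    "cpu": "ASGAverageCPUUtilization",
    "network_in": "ASGAverageNetworkIn",
    "network_out": "ASGAverageNetworkOut",
    "alb_requests": "ALBRequestCountPerTarget",
}


def target_tracking_policy(asg_name: str, metric: str, target_value: float,
                           resource_label: Optional[str] = None) -> dict:
    """kwargs for autoscaling.put_scaling_policy (TargetTrackingScaling)."""
    spec = {"PredefinedMetricType": PREDEFINED_METRICS[metric]}
    if metric == "alb_requests":
        # Requests per target only make sense for a specific target group.
        if resource_label is None:
            raise ValueError("ALBRequestCountPerTarget requires a ResourceLabel")
        spec["ResourceLabel"] = resource_label
    return {
        "AutoScalingGroupName": asg_name,
        "PolicyName": f"{asg_name}-target-{metric}",  # hypothetical name
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": spec,
            "TargetValue": target_value,
        },
    }
```

Note that there is no alarm in this sketch: with target tracking, AWS creates the alarms itself.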

AWS Auto Scaling

AWS Auto Scaling can be confusing. It also provides target tracking, which it calls dynamic scaling. The difference is that you also get predictive scaling: AWS Auto Scaling analyzes the past 14 days of metric history to forecast capacity needs for the next two days. This works well for workloads with recurring patterns, such as lower usage at night or on weekends. One-off or rare peaks, such as Black Friday or large end-of-month batch jobs, will not be predicted.
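Why recurring patterns are predicted while Black Friday is not can be shown with a deliberately naive forecast. This is a toy seasonal model, not AWS's forecasting method: each of the next 48 hours is predicted as the average of the same hour one and two weeks earlier, using only the last 14 days of hourly data. The function name is hypothetical. Anything that did not happen in that window, such as a first Black Friday peak or a monthly batch job, cannot show up in the forecast.

```python
from typing import List

HOURS_PER_WEEK = 7 * 24


def seasonal_naive_forecast(history: List[float],
                            horizon_hours: int = 48) -> List[float]:
    """Toy forecast from 14 days of hourly load (not AWS's model).

    Each future hour = average of the same hour 1 and 2 weeks earlier.
    """
    if len(history) < 2 * HOURS_PER_WEEK:
        raise ValueError("need at least 14 days of hourly data")
    if horizon_hours > HOURS_PER_WEEK:
        raise ValueError("this toy model can only look one week ahead")
    window = history[-2 * HOURS_PER_WEEK:]  # only the last 14 days count
    forecast = []
    for i in range(horizon_hours):
        t = len(window) + i  # index of the future hour
        one_week_ago = window[t - HOURS_PER_WEEK]
        two_weeks_ago = window[t - 2 * HOURS_PER_WEEK]
        forecast.append((one_week_ago + two_weeks_ago) / 2)
    return forecast
```

Feeding it 14 identical days with busy office hours reproduces those office hours for the next two days; a spike that has never occurred in the window simply does not exist for the model.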

One pitfall here:

- Remember that only the past 14 days are used to predict future capacity needs.

Summary

No matter which option you choose, don’t forget to run extensive load tests to validate your configuration. Load testing is very time-consuming: you have to wait a long time to see how the system behaves as load patterns change.

| | Simple Scaling | Step Scaling | Target Tracking | AWS Auto Scaling |
|---|---|---|---|---|
| Mode | reactive | reactive | reactive | proactive |
| Requires predefined thresholds | ✅ | ✅ | ❌ | ❌ |
| Becomes more aggressive during a steep rise | ❌ | ⚠️ | ✅ | ✅ |

We covered how to scale based on demand. You can also use scheduled actions to add and remove capacity proactively.
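Scheduled actions are configured with boto3's `put_scheduled_update_group_action`. The sketch below only builds the keyword arguments; the function name, group name, action names, sizes, and the weekday business-hours schedule are assumptions for illustration. `Recurrence` takes a cron expression, and `TimeZone` controls how it is interpreted (UTC if omitted).

```python
def scheduled_action(asg_name: str, name: str, recurrence: str,
                     desired: int, min_size: int, max_size: int,
                     time_zone: str = "UTC") -> dict:
    """kwargs for autoscaling.put_scheduled_update_group_action."""
    return {
        "AutoScalingGroupName": asg_name,
        "ScheduledActionName": name,
        # Cron fields: minute hour day-of-month month day-of-week
        "Recurrence": recurrence,
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": desired,
        "TimeZone": time_zone,
    }


# Hypothetical schedule: more capacity during weekday business hours.
scale_up = scheduled_action("web-asg", "weekday-morning", "0 8 * * 1-5",
                            desired=6, min_size=4, max_size=12)
scale_down = scheduled_action("web-asg", "weekday-evening", "0 20 * * 1-5",
                              desired=2, min_size=2, max_size=12)
```

Scheduled actions combine well with the demand-based options: the schedule sets a sensible baseline, and a scaling policy handles whatever the schedule did not anticipate.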
