Scaling Container Clusters on AWS: ECS and EKS

Containers are a powerful tool to streamline your development and deployment process. However, a container cluster increases complexity, whether you are using ECS (Elastic Container Service), EKS (Elastic Kubernetes Service), or self-managed Kubernetes. You are no longer managing only virtual machines; you are also operating containers on top of them. Luckily, AWS offers a few approaches to minimize the effort of providing the computing capacity for your container cluster.

- ECS with Cluster Auto Scaling

- ECS with DIY Auto Scaling based on CloudWatch Events and Metrics

- ECS on Fargate

- EKS with Cluster Autoscaler and Managed Node Group

- EKS on Fargate

I have taken a closer look at the different approaches. Read on to learn about differences and pitfalls.


AWS Fargate

With Fargate, AWS offers a fully-managed and scalable container infrastructure. No need to manage virtual machines anymore. ECS and EKS launch containers on machines operated by AWS. Removing virtual machines from your architecture decreases complexity significantly. Therefore, I highly recommend using Fargate whenever possible.

However, at the time of writing, there are a few reasons why using Fargate may not be an option:

- Fargate (EKS) is only available in 8 of 22 commercial regions.

- Fargate (EKS) supports ALB as the only load balancer type.

- Fargate offers a maximum of 4 vCPU and 30 GB memory per task.

- Fargate does not provide specialized hardware (e.g., memory-optimized, storage-optimized, GPU, …)

- Pricing options to reduce costs are limited, especially for Fargate (EKS).

What to do when using AWS Fargate is not an option?

ECS with Cluster Auto Scaling

AWS announced Cluster Auto Scaling for ECS in December 2019. This was a huge improvement, as there was previously no built-in way to scale the EC2 instances of an ECS cluster automatically.

To get started, you need to create a capacity provider associated with the Auto Scaling Group that manages the EC2 instances forming your ECS cluster. Cluster Auto Scaling then publishes a CloudWatch metric named CapacityProviderReservation, and a target tracking scaling policy continually tries to keep that metric at 100 by scaling out or in.
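The metric boils down to a ratio. The following sketch assumes the formula described in AWS's Deep Dive on Amazon ECS Cluster Auto Scaling post, CapacityProviderReservation = M / N × 100, where M is the number of instances needed to run all tasks (including tasks waiting for capacity) and N is the number of running instances. The helper name is hypothetical, and the sketch does not model how ECS computes M internally.

```python
def capacity_provider_reservation(needed: int, running: int) -> float:
    """Approximate the CapacityProviderReservation metric (hypothetical helper).

    needed  (M): instances required to run all tasks, including tasks
                 waiting in the PROVISIONING state.
    running (N): instances currently running in the Auto Scaling Group.
    """
    if needed < 0 or running < 0:
        raise ValueError("instance counts must be non-negative")
    if running == 0:
        # Special cases: empty cluster with nothing to do -> 100 (on target),
        # empty cluster with waiting tasks -> 200 (scale out).
        return 100.0 if needed == 0 else 200.0
    return needed / running * 100


# Running and waiting tasks need 3 instances, but only 2 are running.
print(capacity_provider_reservation(3, 2))  # 150.0 -> above 100, scale out
# All tasks would fit on 2 of the 4 running instances.
print(capacity_provider_reservation(2, 4))  # 50.0 -> below 100, scale in
```

A value of exactly 100 means the running instances match what the tasks need, which is why the target tracking policy uses 100 as its target.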

It is important to mention that the lifecycle of ECS tasks has been extended with the additional state PROVISIONING. If there is not enough capacity within the cluster, an ECS task stays in state PROVISIONING. As a result, the CapacityProviderReservation rises above the target of 100, and the target tracking scaling policy increases the desired capacity of the Auto Scaling Group. A few minutes later, one or more EC2 instances register with the ECS cluster.

Scaling in works the other way round. Overcapacity in the cluster decreases the CapacityProviderReservation below the target of 100. Again, the target tracking scaling policy kicks in and reduces the desired capacity of the Auto Scaling Group.
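Both directions can be illustrated with a simplified model of target tracking: desired capacity ≈ ⌈N × metric / target⌉, clamped to the group's minimum and maximum size. This proportional rule is an assumption for illustration, not AWS's exact algorithm; the real policy also accounts for instance warm-up, and ECS managed scaling bounds the size of each scaling step. The function name and default sizes are hypothetical.

```python
import math


def next_desired_capacity(running: int, metric: float, target: float = 100.0,
                          min_size: int = 0, max_size: int = 10) -> int:
    """Simplified proportional target tracking (not AWS's exact algorithm)."""
    if running == 0:
        # Proportional scaling needs a non-zero base; launch one instance
        # if the metric is above target (e.g. 200 for an empty cluster).
        desired = 1 if metric > target else 0
    else:
        # Scale capacity in proportion to how far the metric is from the target.
        desired = math.ceil(running * metric / target)
    # Respect the Auto Scaling Group's minimum and maximum size.
    return max(min_size, min(desired, max_size))


print(next_desired_capacity(2, 150.0))  # 3: scale out by one instance
print(next_desired_capacity(4, 50.0))   # 2: scale in by two instances
```

Plugging in metric = M / N × 100 with a target of 100 gives ⌈N × M / N⌉ = M, so the policy aims for exactly as many instances as the tasks need. Whether the Auto Scaling Group can actually terminate the surplus instances is a different question.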

This is where it gets tricky. Cluster Auto Scaling sets the termination protection flag for all EC2 instances running at least one task (other than daemon tasks), so the Auto Scaling Group will not terminate an EC2 instance whenever doing so could cause a service interruption. The way Cluster Auto Scaling scales in comes with two major problems:

- Cluster Auto Scaling does not mitigate fragmentation by moving tasks (groups of containers) to other EC2 instances. Therefore, the cluster does not scale in as far as it could, and you waste a lot of money on underutilized instances.

- There is no way to roll out a new version of the ECS AMI. The termination protection breaks rolling updates of EC2 instances via CloudFormation.
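To see why fragmentation is expensive, consider a simplified model that only looks at CPU units (1,024 units per vCPU) and ignores memory, ports, and placement constraints. Every instance running at least one task keeps its termination protection, while a first-fit decreasing bin packing shows how few instances the same tasks would actually need. The function names and numbers are hypothetical.

```python
def protected_instances(instances: list[list[int]]) -> int:
    """Count instances that keep termination protection (at least one task)."""
    return sum(1 for tasks in instances if tasks)


def instances_needed(instances: list[list[int]], capacity: int) -> int:
    """Pack all tasks (CPU units) onto as few instances as first-fit decreasing finds.

    The result is a feasible packing, so the true optimum can only be equal or smaller.
    """
    tasks = sorted((t for inst in instances for t in inst), reverse=True)
    free: list[int] = []  # remaining CPU units on each packed instance
    for task in tasks:
        if task > capacity:
            raise ValueError("task does not fit on any instance")
        for i, units in enumerate(free):
            if task <= units:
                free[i] -= task
                break
        else:
            free.append(capacity - task)  # open a new instance
    return len(free)


# Four 2-vCPU instances (2,048 CPU units), each left with a single 256-unit task.
cluster = [[256], [256], [256], [256]]
print(protected_instances(cluster))     # 4 instances stay protected
print(instances_needed(cluster, 2048))  # 1 instance would be enough
```

In this example, the cluster keeps paying for four instances although one would do, because nothing moves the remaining tasks together.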

Check out Deep Dive on Amazon ECS Cluster Auto Scaling if you are interested in more details.

In my opinion, those are two significant problems. That is why I came up with a do-it-yourself (DIY) auto-scaling approach.

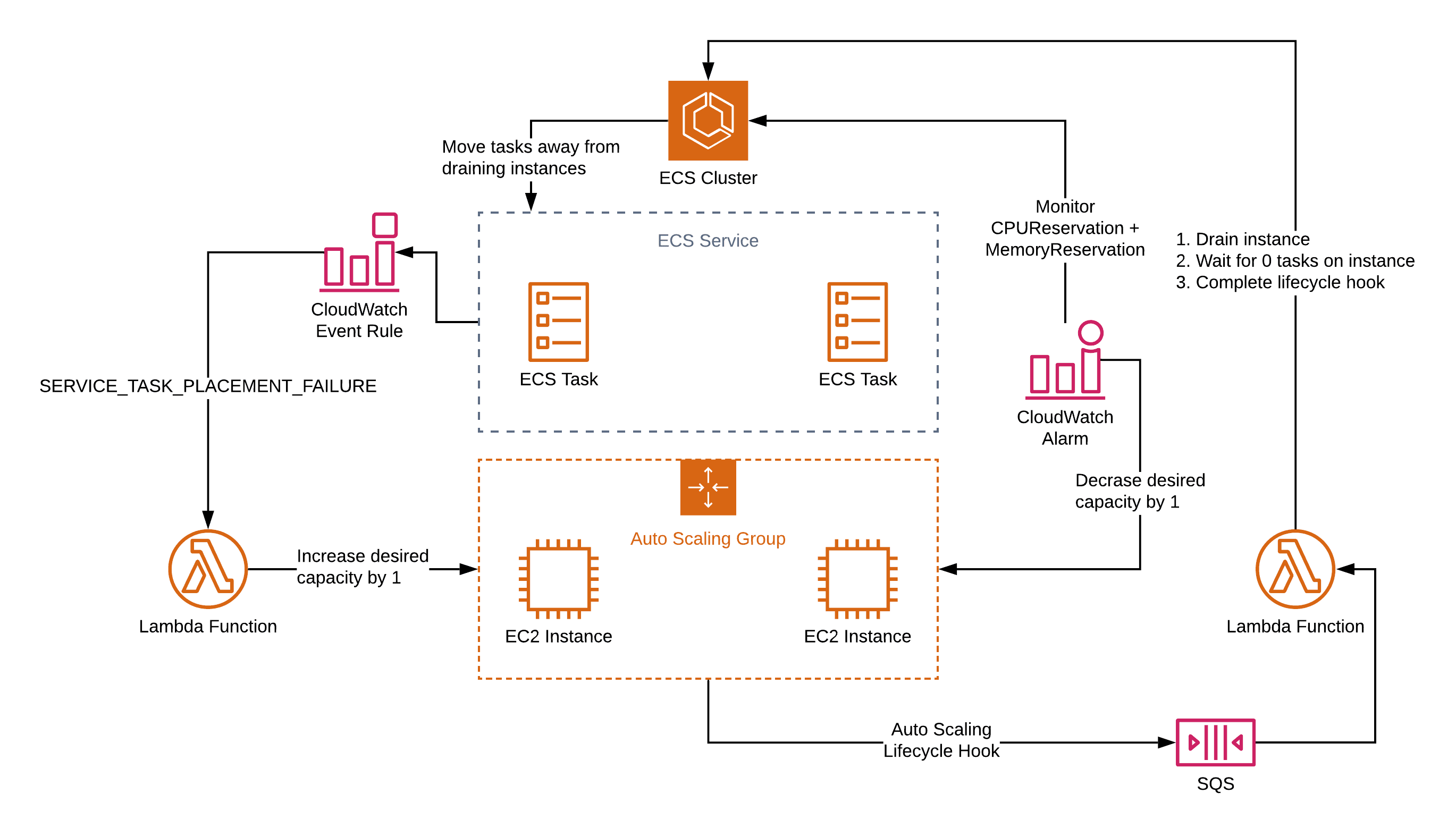

ECS with DIY Auto Scaling

Before Cluster Auto Scaling was a thing, Michael and I tried to come up with a solution to launch and terminate EC2 instances for an ECS cluster automatically. We came up with a solution but were never 100% happy with it, for example, because it always overprovisioned a whole EC2 instance. Frustrated with the limitations of ECS Cluster Auto Scaling described above, I revisited a do-it-yourself approach for scaling an ECS cluster automatically.

Based on some newish features, I came up with the following solution:

- The ECS service emits the CloudWatch event SERVICE_TASK_PLACEMENT_FAILURE whenever there is not enough capacity to start a new task. The solution uses the SERVICE_TASK_PLACEMENT_FAILURE event to scale out the Auto Scaling Group immediately.

- The CloudWatch metrics CPUReservation and MemoryReservation indicate the cluster utilization and can be used to scale in the Auto Scaling Group.

- The Auto Scaling Group launches and terminates EC2 instances on demand.

- An Auto Scaling Lifecycle Hook makes sure an EC2 instance is not terminated before all running containers have been moved to another instance.
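The scale-out path can be sketched as a function invoked for each ECS event. This assumes the event delivered by CloudWatch Events (EventBridge) carries the event name in `detail.eventName`, and that adding one instance per failure is enough; the function names and the `step` parameter are hypothetical. Only `apply_desired_capacity` talks to AWS, through the Auto Scaling `set_desired_capacity` call in boto3.

```python
from typing import Optional


def desired_capacity_after_event(event: dict, current: int, max_size: int,
                                 step: int = 1) -> Optional[int]:
    """Return the new desired capacity for a placement failure, or None to do nothing."""
    detail = event.get("detail", {})
    if detail.get("eventName") != "SERVICE_TASK_PLACEMENT_FAILURE":
        return None  # not the event we scale on
    if current >= max_size:
        return None  # the Auto Scaling Group cannot grow any further
    # Add capacity right away; the ECS service scheduler keeps trying to place
    # the task and succeeds once the new instance has registered.
    return min(current + step, max_size)


def apply_desired_capacity(asg_name: str, desired: int) -> None:
    """Push the new desired capacity to the Auto Scaling Group (needs boto3 and credentials)."""
    import boto3  # imported lazily so the decision logic above runs without boto3

    boto3.client("autoscaling").set_desired_capacity(
        AutoScalingGroupName=asg_name,
        DesiredCapacity=desired,
        HonorCooldown=False,  # scale out immediately, ignoring cooldowns
    )


# Trimmed, illustrative event: only the fields the sketch reads.
event = {"source": "aws.ecs", "detail": {"eventName": "SERVICE_TASK_PLACEMENT_FAILURE"}}
print(desired_capacity_after_event(event, current=2, max_size=5))  # 3
```

Keeping the decision in a pure function makes it easy to test without AWS access; the handler only glues it to the API call.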

Check out cluster-cost-optimized.yaml for an implementation example.
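For the scale-in side, here is a hypothetical rule, not the logic from cluster-cost-optimized.yaml: remove one of N instances only if the reserved CPU and memory would still fit on the remaining N - 1 instances below a headroom threshold. It assumes identical instances and treats CPUReservation and MemoryReservation as cluster-wide percentages; the 90% threshold is an arbitrary example.

```python
def can_scale_in(cpu_reservation: float, memory_reservation: float,
                 running: int, threshold: float = 90.0) -> bool:
    """Return True if dropping one instance keeps both reservations below the threshold.

    cpu_reservation / memory_reservation: percentages (0-100) of the whole cluster.
    Assumes all instances provide the same CPU and memory.
    """
    if running <= 1:
        return False  # keep at least one instance in this sketch
    # Reservation on N - 1 instances = reservation * N / (N - 1); compare without division.
    return max(cpu_reservation, memory_reservation) * running <= threshold * (running - 1)


print(can_scale_in(50.0, 60.0, running=4))  # True: 60% on 4 instances -> 80% on 3
print(can_scale_in(70.0, 40.0, running=4))  # False: 70% on 4 instances -> ~93% on 3
```

Percentages ignore how tasks are distributed, so a check like this can still approve a scale-in that leaves a large task without room. ECS would then emit SERVICE_TASK_PLACEMENT_FAILURE, and the scale-out path adds capacity again.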

I have to mention that the DIY Auto Scaling comes with limitations as well:

- It only works for ECS services. The cluster will not scale out when creating a standalone task, as there is no CloudWatch event in this case.

- ECS publishes the SERVICE_TASK_PLACEMENT_FAILURE event only once. If that event does not trigger an increase of the desired capacity, for example, because of a Lambda failure, the cluster gets stuck.

- You have to maintain a DIY solution, which adds a lot of complexity to your container infrastructure.

Having said that, the DIY Auto Scaling mitigates the problems of the built-in Cluster Auto Scaling:

- ECS will move tasks (containers) to other instances to avoid fragmentation. Therefore, the cluster scales in to a minimum, which is more cost-effective.

- Rolling out the latest version of the ECS AMI works fine with CloudFormation.
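The Auto Scaling Lifecycle Hook is what gives ECS time to move tasks away. A hypothetical handler, invoked when the terminating hook fires and again on each retry, could decide its next step as sketched below. The actual calls would be the ECS UpdateContainerInstancesState API (status DRAINING), RecordLifecycleActionHeartbeat while waiting, and CompleteLifecycleAction with result CONTINUE. The sketch assumes the running task count only includes tasks that draining will stop; standalone tasks are not stopped by draining.

```python
from enum import Enum


class Action(Enum):
    START_DRAINING = "start_draining"  # set the container instance to DRAINING
    WAIT = "wait"                      # tasks still running; heartbeat and check again later
    COMPLETE = "complete"              # let the Auto Scaling Group terminate the instance


def next_lifecycle_action(status: str, running_tasks: int) -> Action:
    """Decide the next step for an instance held by a terminating lifecycle hook.

    status: container instance status reported by ECS (e.g. "ACTIVE", "DRAINING").
    running_tasks: number of tasks still running on the container instance.
    """
    if status != "DRAINING":
        # Draining makes ECS start replacement tasks elsewhere and stop these.
        return Action.START_DRAINING
    if running_tasks > 0:
        return Action.WAIT
    return Action.COMPLETE


print(next_lifecycle_action("ACTIVE", 3))    # Action.START_DRAINING
print(next_lifecycle_action("DRAINING", 0))  # Action.COMPLETE
```

Because the instance is only released after draining, a rolling update via CloudFormation can replace instances without interrupting services.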

Unfortunately, there is no perfect solution for scaling an ECS cluster right now. Hopefully, the Cluster Auto Scaling will improve over time. Alternatively, I can think of a mixture of the built-in Cluster Auto Scaling with termination protection disabled and the Auto Scaling Lifecycle Hook from our DIY solution.

EKS with Cluster Autoscaler

In November 2019, AWS announced Managed Node Groups, which automate the provisioning and lifecycle management of EC2 instances for EKS clusters. The Kubernetes (K8s) project provides the Cluster Autoscaler, a tool that automatically adjusts the number of EC2 instances when one of the following conditions is true:

- there are pods that failed to run in the cluster due to insufficient resources,

- there are nodes in the cluster that have been underutilized for an extended period of time, and their pods can be placed on other existing nodes.1
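The two conditions can be modeled as a single decision function. This is a simplified sketch, not the Cluster Autoscaler's code: utilization stands for the share of a node's allocatable resources requested by its pods, and the defaults mirror the `--scale-down-utilization-threshold` (0.5) and `--scale-down-unneeded-time` (10 minutes) flags. The `Node` type and function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    utilization: float        # requested resources / allocatable, 0.0 to 1.0
    unneeded_minutes: float   # how long the node has been below the threshold
    pods_fit_elsewhere: bool  # could its pods be rescheduled on other nodes?


def scale_decision(unschedulable_pods: int, nodes: list[Node],
                   utilization_threshold: float = 0.5,
                   unneeded_minutes: float = 10.0) -> str:
    """Return "scale-up", "scale-down <node>", or "no-op"."""
    # Condition 1: pods failed to run due to insufficient resources.
    if unschedulable_pods > 0:
        return "scale-up"
    # Condition 2: a node is underutilized long enough and its pods fit elsewhere.
    for node in nodes:
        if (node.utilization < utilization_threshold
                and node.unneeded_minutes >= unneeded_minutes
                and node.pods_fit_elsewhere):
            return f"scale-down {node.name}"
    return "no-op"


nodes = [Node("a", 0.8, 0.0, False), Node("b", 0.2, 15.0, True)]
print(scale_decision(0, nodes))  # scale-down b
```

Unlike ECS Cluster Auto Scaling, the second condition explicitly checks whether pods can be placed on other nodes, which is how the Cluster Autoscaler avoids the fragmentation problem.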

By the way, the Cluster Autoscaler supports AliCloud, Azure, and BaiduCloud as well.

Besides a bunch of authentication and authorization configuration (IAM and RBAC), you need to deploy the Cluster Autoscaler into the kube-system namespace of your EKS cluster.

The Cluster Autoscaler respects the disruption budget of your application. The following snippet shows a disruption budget stating that there should be at least one pod running for the app redis-master.

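```yaml
apiVersion: policy/v1  # Kubernetes 1.21+; older clusters use policy/v1beta1
kind: PodDisruptionBudget
metadata:
  name: redis-master-pdb  # illustrative name
spec:
  minAvailable: 1  # keep at least one redis-master pod running
  selector:
    matchLabels:
      app: redis-master
```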
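The effect of such a disruption budget on scale-in can be sketched with simple arithmetic: with an absolute `minAvailable`, the number of pods that may be evicted right now is the number of healthy pods minus `minAvailable`. The sketch assumes an absolute value (not a percentage) and that all affected pods sit on the node being drained; the function names are hypothetical.

```python
def allowed_disruptions(healthy_pods: int, min_available: int) -> int:
    """Pods that may be evicted right now without violating minAvailable."""
    return max(0, healthy_pods - min_available)


def can_drain_node(pods_on_node: int, healthy_pods: int, min_available: int) -> bool:
    """True if all matching pods on the node can be evicted within the budget."""
    return pods_on_node <= allowed_disruptions(healthy_pods, min_available)


# A single redis-master replica with minAvailable: 1 allows no evictions.
print(can_drain_node(pods_on_node=1, healthy_pods=1, min_available=1))  # False
# With three healthy replicas, one of them may be moved.
print(can_drain_node(pods_on_node=1, healthy_pods=3, min_available=1))  # True
```

Note the first case: with only one replica and `minAvailable: 1`, the node running that pod cannot be drained, so the Cluster Autoscaler will not remove it.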

So far, I could not find any flaws when using the EKS Cluster Autoscaler in combination with a Managed Node Group.

Summary and Comparison

First of all, try to avoid having to scale a container cluster at all. Use Fargate whenever possible. Using EKS, Managed Node Groups, and the K8s Cluster Autoscaler is the simplest way to manage the virtual machines for a container cluster. When using ECS, be aware that the built-in Cluster Auto Scaling does not scale in sufficiently and therefore causes unused overcapacity and overspending. Building an auto-scaling solution for ECS yourself is possible, but not optimal either.

| | ECS Cluster Auto Scaling | ECS DIY Auto Scaling | EKS Cluster Autoscaler + Managed Node Group | Fargate (ECS/EKS) |
|---|---|---|---|---|
| Scale out/in automatically | ✅ | ✅ | ✅ | ✅ |
| Scale-out delay | ~3 min | immediately | immediately | immediately |
| Scale out for standalone task/pod | ✅ | ❌ | ✅ | ✅ |
| Move containers to optimize cluster utilization | ❌ | ✅ | ✅ | ✅ |
| Rolling update to change AMI | ❌ | ✅ | ✅ | ✅ |
