Designing asynchronous event systems with AWS IoT and Serverless Application Model (SAM)

An event system receives and processes events by following rules that are defined inside the system. All processing happens asynchronously: when an event is sent to the system, it is processed at some point in time, but the sender does not get an immediate result. Asynchronous processing helps when you want to build a scalable solution because it frees you from the burden of responding immediately. Instead, you can queue events and process them as fast as you can. In this article, I will demonstrate how the AWS IoT service can be used to process events that have nothing to do with IoT. I will also use the new Serverless Application Model (SAM) to deploy the solution. Needless to say, the solution is serverless and highly cost effective for workloads of up to 1 million events per day.

How AWS IoT works

AWS IoT can do many things, but here I focus on messages, topics, rules, and actions.

A message is sent to a topic. In this case, a message is an event. AWS IoT can deal with JSON so I choose JSON as my data representation.

A topic has a name like event/buy. As you can see, you can build a hierarchy by using up to 7 forward slashes (/).

A rule subscribes to a topic and triggers actions when a message is received. A simple rule can subscribe to the topic event/buy. But you can also use wildcards like event/+ or event/#. + matches exactly one hierarchy level, while # matches any number of levels.
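To make the wildcard semantics concrete, here is a minimal sketch of how a topic filter can be matched against a topic name. It is not AWS code: the function name topicMatches is my own, and it assumes the MQTT convention that AWS IoT follows, where + stands for exactly one level and # stands for all remaining levels.

```javascript
// Sketch of MQTT-style topic filter matching (illustration only, not AWS code).
// '+' matches exactly one level, '#' matches all remaining levels.
function topicMatches(filter, topic) {
  const filterLevels = filter.split('/');
  const topicLevels = topic.split('/');
  for (let i = 0; i < filterLevels.length; i++) {
    const level = filterLevels[i];
    if (level === '#') {
      // The multi-level wildcard is only valid as the last level of a filter.
      return i === filterLevels.length - 1;
    }
    if (i >= topicLevels.length) {
      return false; // the filter has more levels than the topic
    }
    if (level !== '+' && level !== topicLevels[i]) {
      return false; // a literal level that does not match
    }
  }
  // Without '#', filter and topic must have the same number of levels.
  return filterLevels.length === topicLevels.length;
}
```

With this sketch, topicMatches('event/+', 'event/buy') is true, while topicMatches('event/+', 'event/buy/eu') is false because + covers only a single level. The filter event/# matches both topics.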

AWS IoT comes with many built-in actions. To mention just a few:

- Write the message to DynamoDB.

- Save the message as a file to S3.

- Send the message to SNS.

- Invoke a Lambda function to process the message.

So a message is sent to a topic. If a rule matches the topic’s name, it triggers the defined actions. That’s an event system, isn’t it?

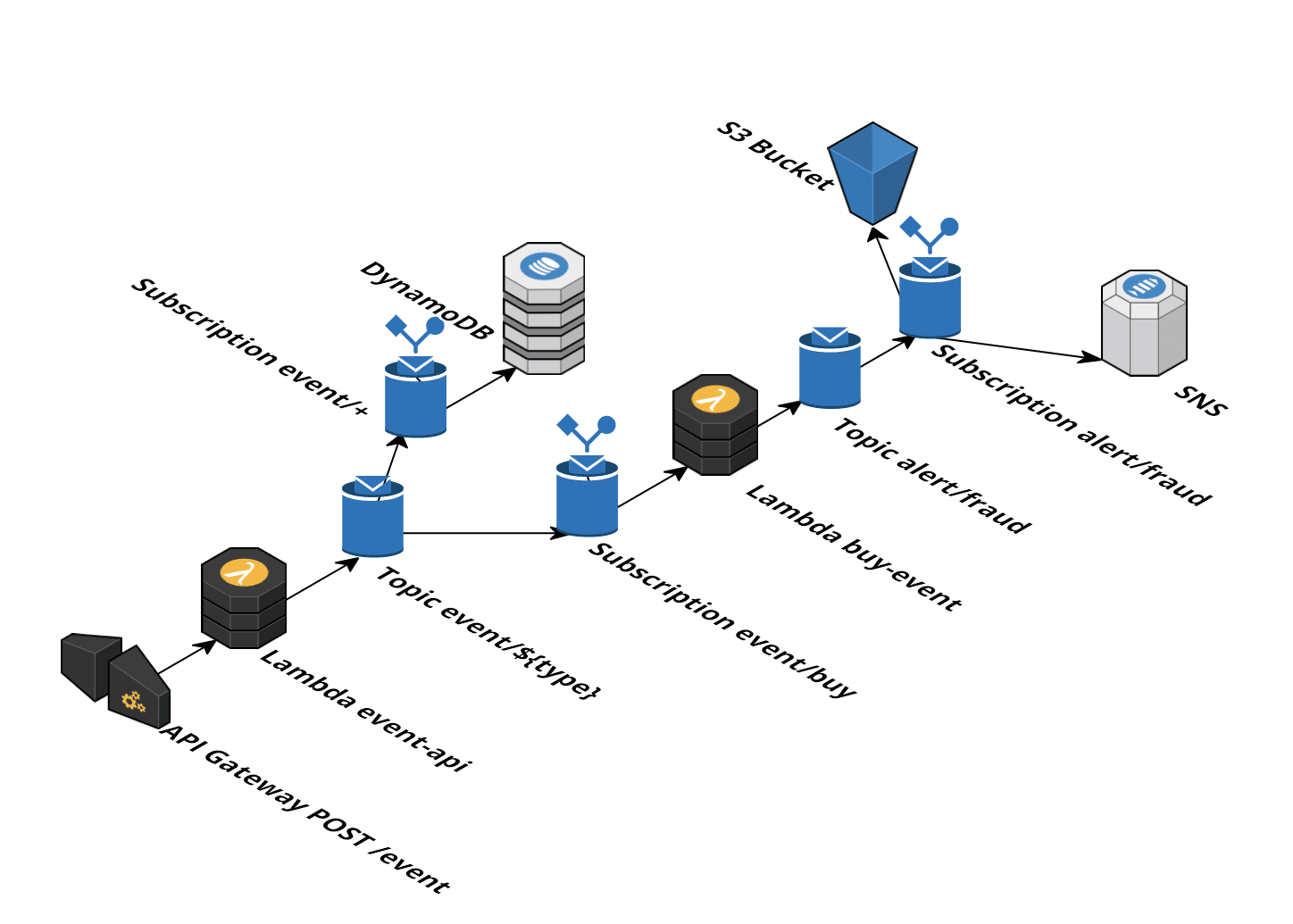

Architecture

The event system I design in this article handles events that are generated at exchanges, such as buy and sell events. It is important to store all events on durable storage for archival. In addition, buy events need to be checked for fraud. If a fraudulent event is detected, external systems must be notified. The following figure shows the architecture of the system.

The diagram was created with Cloudcraft - Visualize your cloud architecture like a pro.

Event Flow

- It all starts with an HTTP API (provided by API Gateway) that triggers a Lambda function for every HTTP POST call/event.

- The Lambda event-api does some input validation. Depending on the payload, the event is published on a topic like event/buy, event/sell, …

- One rule subscribes to all event/+ topics with an action to write the message to DynamoDB.

- Another rule subscribes only to the event/buy topic and triggers the buy-event Lambda for every message.

- The buy-event Lambda decides if the event is fraud or not. If it is fraud, it publishes the event to the alert/fraud topic.

- A rule subscribes to the alert/fraud topic and triggers two actions: save the message to S3 and send the event to SNS.
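The first two steps of the flow could look roughly like the following sketch. It is not the code from the repository: the function names, the list of allowed event types, and the 202 status code are assumptions for illustration. The iotData parameter is expected to behave like an AWS.IotData client from the AWS SDK for JavaScript v2, whose publish(params) returns a request object with a promise() method.

```javascript
// Assumption: only these event types are accepted by the API.
const ALLOWED_TYPES = ['buy', 'sell'];

// Validates the parsed payload and derives the topic name from its type.
function topicForEvent(event) {
  if (event === null || typeof event !== 'object') {
    throw new Error('event must be a JSON object');
  }
  if (!ALLOWED_TYPES.includes(event.type)) {
    throw new Error('unsupported event type');
  }
  return 'event/' + event.type;
}

// Handles one HTTP POST: validate, publish to the topic, answer without a result.
// request.body is the raw JSON string of the HTTP request body.
async function handleRequest(iotData, request) {
  let event;
  let topic;
  try {
    event = JSON.parse(request.body);
    topic = topicForEvent(event);
  } catch (err) {
    // Invalid JSON or a failed validation is reported to the caller.
    return { statusCode: 400, body: JSON.stringify({ error: err.message }) };
  }
  await iotData.publish({ topic, payload: JSON.stringify(event), qos: 0 }).promise();
  // 202 Accepted (an assumption): the event is queued, not yet processed.
  return { statusCode: 202, body: '' };
}
```

Passing the client in as a parameter keeps the sketch free of SDK imports and makes it easy to try out with a stub object.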

Implementation

I use the brand new Serverless Application Model (SAM) for this example. SAM builds upon CloudFormation, so most of the interesting pieces happen inside a file that I will name template.yml.

You can find the full source code on GitHub.

The first file defines Node.js dependencies that are needed in the Lambda functions.

package.json

{ |

REST API

This is how you define an API Gateway with SAM.

template.yml

|

And here comes the implementation that will run inside Lambda. The name of the file must match the Handler defined above.

event-api-handler.js

; |

Event archival

Now it’s time to create a rule that inserts the events into a DynamoDB table.

template.yml

[...] |

Buy Event Lambda

This is a rule that subscribes to the event/buy topic and triggers a Lambda function for every message.

template.yml

[...] |

This is the implementation of the fraud detection that runs inside Lambda.

buy-event-handler.js

; |
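As a rough illustration of this step, the following sketch shows a handler that republishes suspicious buy events to the alert/fraud topic. It is not the implementation from the repository: the price threshold, the isFraud check, and the function names are purely hypothetical, and iotData is assumed to behave like an AWS.IotData client from the AWS SDK for JavaScript v2.

```javascript
// Hypothetical threshold: the article does not state the real fraud criteria.
const FRAUD_PRICE_LIMIT = 10000;

// Hypothetical check: flag events with a missing, negative, or very high price.
function isFraud(buyEvent) {
  return typeof buyEvent.price !== 'number'
    || buyEvent.price < 0
    || buyEvent.price > FRAUD_PRICE_LIMIT;
}

// Receives one message from the event/buy topic. The check is injectable so
// that a different fraud rule can be plugged in without touching the handler.
async function handleBuyEvent(iotData, buyEvent, check = isFraud) {
  if (!check(buyEvent)) {
    return false; // nothing to do for regular events
  }
  await iotData.publish({
    topic: 'alert/fraud',
    payload: JSON.stringify(buyEvent),
    qos: 0
  }).promise();
  return true;
}
```

The handler only decides and republishes. Archival to S3 and the SNS notification stay in the topic rule, which keeps the Lambda small.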

Fraud archival

And here we define what happens with messages that are published to the alert/fraud topic.

template.yml

[...] |

Shared caching library

And finally we have some shared code that is needed by both Lambdas. It creates a singleton of an AWS.IotData client with the needed variable endpointAddress.

cache.js

; |
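One way to build such a singleton is to cache the promise of an asynchronous factory, so that the endpoint lookup runs only once per Lambda container. The following is a sketch under that assumption, not the content of cache.js. The helper name once is my own.

```javascript
// Wraps an async factory so that it runs at most once while it succeeds.
// Concurrent callers share the same pending promise.
function once(factory) {
  let cached = null;
  return function get() {
    if (cached === null) {
      cached = Promise.resolve()
        .then(factory)
        .catch((err) => {
          cached = null; // do not cache failures, the next call retries
          throw err;
        });
    }
    return cached;
  };
}

// Possible wiring with the AWS SDK for JavaScript v2 (not executed here):
//
// const AWS = require('aws-sdk');
// const getIotData = once(async () => {
//   const { endpointAddress } = await new AWS.Iot().describeEndpoint({}).promise();
//   return new AWS.IotData({ endpoint: endpointAddress });
// });
```

Because variables outside the handler survive between invocations of a warm Lambda container, both handlers could call the cached getter on every invocation and only the first call would pay for the endpoint lookup.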

You may be surprised how little code was needed. Most of the functionality is provided by AWS IoT, and we only need to configure it through the CloudFormation template and the SAM extensions.

Deploy

Make sure to update the AWS CLI. Otherwise, you may not have support for SAM:

sudo pip install --upgrade awscli

Select a region that supports the IoT service:

export AWS_DEFAULT_REGION=us-east-1

Then create an artifacts bucket:

aws s3 mb s3://$USER-artifacts

Clone the repository:

git clone git@github.com:michaelwittig/sam-iot-example.git

Then install the Node.js dependencies:

npm install --production

Then deploy the template using SAM:

aws cloudformation package --template-file template.yml --output-template-file template.tmp.yml --s3-bucket "$USER-artifacts"
aws cloudformation deploy --template-file template.tmp.yml --stack-name sam-iot-example --capabilities CAPABILITY_IAM

Done. You now have a running event system.

Usage

- Go to https://console.aws.amazon.com/apigateway/

- Select sam-iot-example

- Select /event -> POST and click Test

- Fill the Request Body with: {"type":"buy","price":123.45} and submit a few times

- Go to https://console.aws.amazon.com/dynamodb/

- Select Tables

- Select the table that starts with sam-iot-example-EventTable-

- Click on Items, and you should see a few events

- Go to https://console.aws.amazon.com/s3/

- Select the bucket that starts with sam-iot-example-archivefraudbucket-

- You should see a few fraud events

Cleanup

Remove the archived events from the S3 bucket by using the AWS Management Console. The name of the bucket starts with sam-iot-example-archivefraudbucket-

Then remove the stack:

aws cloudformation delete-stack --stack-name sam-iot-example

Then remove the artifacts bucket:

aws s3 rb s3://$USER-artifacts --force

Summary

- The Serverless Application Model (SAM) makes deploying CloudFormation templates very easy. I like it :)

- Using topics to decouple your event system is very powerful. You can always add topic rules and actions if the business process changes or if someone else becomes interested in an event in the system. Keep your Lambda functions small: it is better to have many small Lambdas than a few big ones.

- The event system is very cost effective for workloads of up to 1 million messages per day. If you want to process more than that, I recommend that you first calculate the costs and compare them to an architecture using Kinesis.

Further reading

- Article A look at DynamoDB

- Article My Opinion on Serverless

- Article Integrate SQS and Lambda: serverless architecture for asynchronous workloads

- Article The Life of a Serverless Microservice on AWS
