DynamoDB pitfall: limited throughput due to hot partitions

Is your application suffering from throttled or even rejected requests from DynamoDB, even though you are not consuming all the provisioned read or write throughput of your table? You have run into a common pitfall! Read on to learn how Hellen debugged and fixed the same issue.

Hellen is working on her first serverless application: a TODO list. She uses DynamoDB to store information about users, tasks, and events for analytics.

Problem

Today, users of Hellen’s TODO application started complaining: requests were getting slower and slower, and sometimes a cryptic ProvisionedThroughputExceededException error message even appeared.

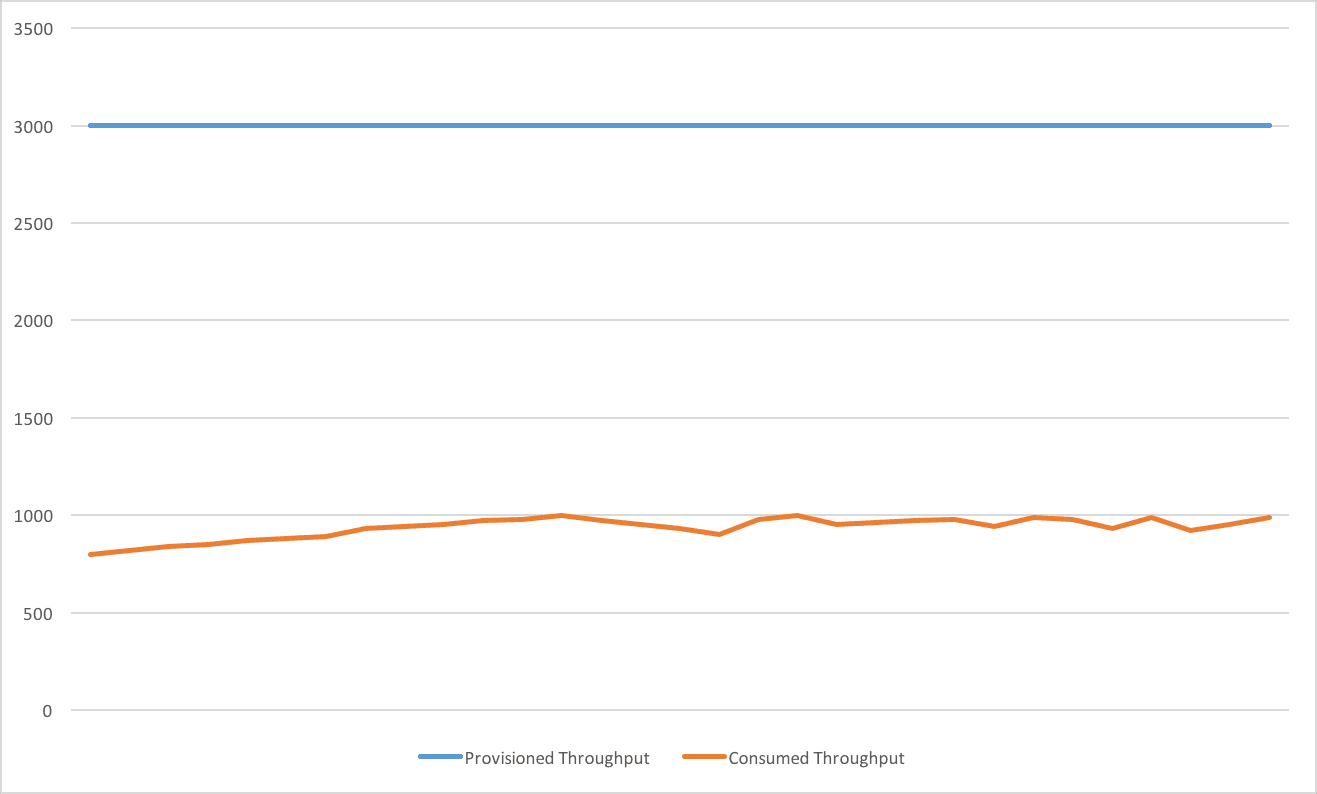

First, Hellen checks the CloudWatch metrics showing the provisioned and consumed read and write throughput of her DynamoDB tables. Everything seems to be fine: the consumed throughput is far below the provisioned throughput for all tables, as shown in the figure above.

Hellen is at a loss. What is wrong with her DynamoDB tables? She starts researching possible causes for her problem and finds detailed information about the partition behavior of DynamoDB.

Her DynamoDB tables consist of multiple partitions. The number of partitions per table depends on the provisioned throughput and the amount of used storage:

MAX( (Provisioned Read Throughput / 3,000), (Provisioned Write Throughput / 1,000), (Used Storage / 10 GB) )
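The formula can be tried out with a few lines of Python. This is only a sketch: the function name `estimate_partitions` is made up for illustration, rounding up to a whole number of partitions is an assumption the formula does not spell out, and the 5 GB storage figure is a placeholder. The 2,000 read units and 3,000 write units are the values the article mentions for the analytics table.

```python
import math

# Per-partition limits taken from the formula above.
READ_UNITS_PER_PARTITION = 3000
WRITE_UNITS_PER_PARTITION = 1000
STORAGE_GB_PER_PARTITION = 10


def estimate_partitions(read_units, write_units, storage_gb):
    """Estimate the partition count from throughput and storage.

    Rounding up (and returning at least one partition) is an assumption
    of this sketch; the formula itself does not state it.
    """
    required = max(
        read_units / READ_UNITS_PER_PARTITION,
        write_units / WRITE_UNITS_PER_PARTITION,
        storage_gb / STORAGE_GB_PER_PARTITION,
    )
    return max(1, math.ceil(required))


# 2,000 read and 3,000 write units as in the article; 5 GB is a placeholder.
print(estimate_partitions(2000, 3000, 5))  # prints 3
```

With these numbers the write throughput dominates, so the table would be split into three partitions.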

Details of Hellen’s table storing analytics data:

Provisioned Read Throughput: 2,000 Units

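Each item uses the Date attribute as the partition key and Timestamp as the range key:

```
{
  "Date": "2016-11-21", <- Partition Key
  "Timestamp": 1479718722848, <- Range Key
  "EventType": "TASK_CREATED",
  "UserId": "UUID"
}
```

Every event recorded on the same day shares the same Date partition key, so all of a day's writes end up on a single partition. To spread the writes, Hellen changes the key schema of the table:

```
{
  "Date": "2016-11-21",
  "Timestamp": 1479718722848, <- Range Key
  "EventType": "TASK_CREATED",
  "UserId": "UUID" <- Partition Key
}
```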

She uses the UserId attribute as the partition key and Timestamp as the range key. Writes to the analytics table are now distributed across different partitions based on the user, and the application can use the full provisioned write throughput.
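The effect of the partition key on write distribution can be illustrated with a small simulation. Everything here is an assumption made for the sake of the example: DynamoDB's internal hash function is not public, so MD5 stands in for it, the partition count of three is chosen for illustration, and the `user-<n>` identifiers are invented.

```python
import hashlib
from collections import Counter

PARTITIONS = 3  # assumed partition count for this illustration


def partition_for(key: str) -> int:
    """Map a partition key to a partition (MD5 is a stand-in hash)."""
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % PARTITIONS


def writes_per_partition(keys):
    """Count how many writes land on each partition."""
    return Counter(partition_for(key) for key in keys)


# 1,000 events on one day, written by 1,000 hypothetical users.
events = [{"Date": "2016-11-21", "UserId": f"user-{i}"} for i in range(1000)]

by_date = writes_per_partition(e["Date"] for e in events)
by_user = writes_per_partition(e["UserId"] for e in events)

print("Date as partition key:  ", dict(by_date))
print("UserId as partition key:", dict(by_user))
```

With Date as the key, all 1,000 writes hash to the same partition. With UserId as the key, they spread over all partitions.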

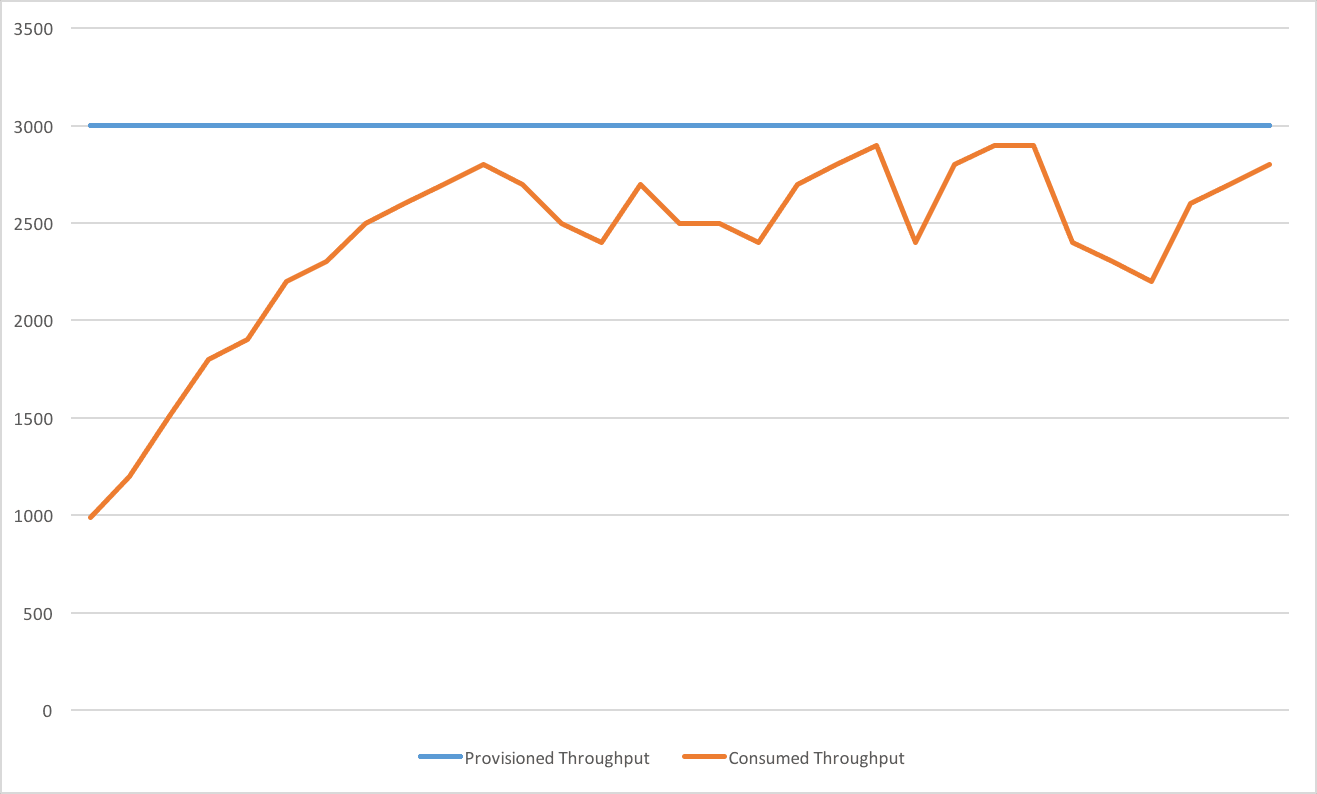

Hellen opens the CloudWatch metrics again. The write throughput now exceeds the 1,000-unit mark and can use the whole provisioned throughput of 3,000 units.
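The two numbers in the metrics follow from simple arithmetic. The sketch below assumes that the provisioned throughput is divided evenly across partitions, and that 3,000 write units result in three partitions (1,000 write units per partition, with neither reads nor storage requiring more). The helper `usable_write_units` is hypothetical and not part of any AWS SDK.

```python
def usable_write_units(provisioned_write_units, partitions, partitions_hit):
    """Upper bound on write throughput when only some partitions get writes.

    Assumes the provisioned throughput is split evenly across partitions.
    """
    per_partition = provisioned_write_units / partitions
    return per_partition * min(partitions_hit, partitions)


print(usable_write_units(3000, 3, 1))  # Date key, one hot partition: 1000.0
print(usable_write_units(3000, 3, 3))  # UserId key, all partitions: 3000.0
```

A single hot partition caps the table at a third of what was provisioned, which matches the 1,000-unit ceiling Hellen saw before the change.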

Problem solved, Hellen is happy! No more complaints from the users of the TODO list.

Summary

Think twice when designing your data structure, and especially when defining the partition key (see Guidelines for Working with Tables). To get the most out of DynamoDB, read and write requests should be distributed among different partition keys. Otherwise, a hot partition will limit the maximum utilization rate of your DynamoDB table.
