How we built bucketAV powered by Sophos

This is the behind-the-scenes story of our latest product launch: bucketAV powered by Sophos, a malware protection solution for Amazon S3. We share insights into building and selling a product on the AWS Marketplace.

Our story began in 2015 when we published an open-source solution to scan S3 buckets for malware. Because the open-source project was a huge success, we built and sold a similar solution on the AWS Marketplace. In 2019, we released bucketAV powered by ClamAV. Today, over 1,000 customers rely on bucketAV to protect their S3 buckets from malware, and we are happy to announce bucketAV powered by Sophos.

For context, here’s an overview of how bucketAV works. bucketAV scans S3 objects on-demand or based on a recurring schedule.

- Create a scan job based on an upload event or schedule.

- Download the file from S3.

- Scan file for malware.

- Report scan result.

- Trigger automated mitigation.

Making data available worldwide

An anti-malware engine like Sophos relies on a database containing information about known threats. It is crucial to regularly update the database. Our customers run bucketAV in all commercial regions provided by AWS. Therefore, we need to distribute the data worldwide.

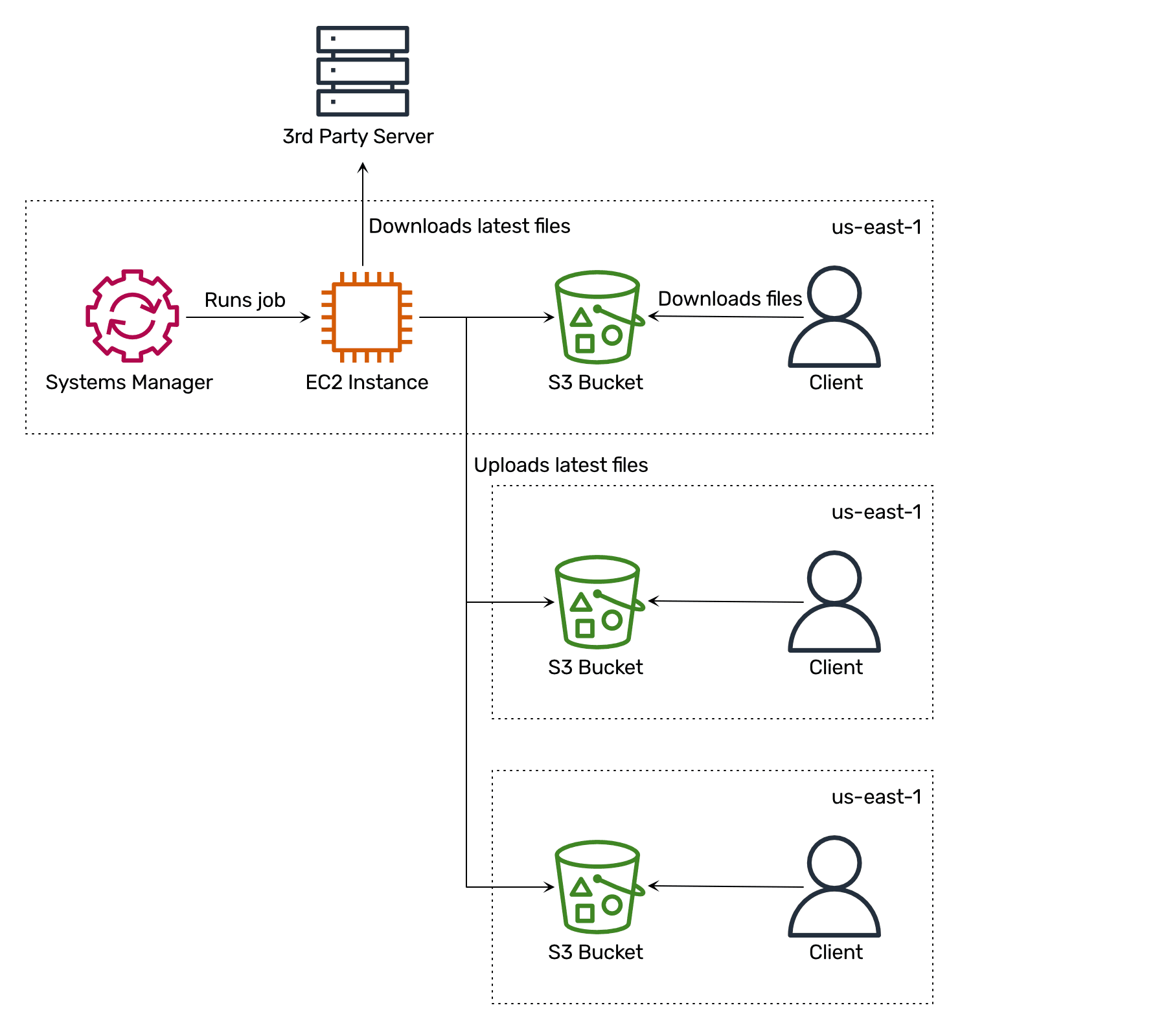

We came up with the following solution to make data available worldwide at low cost:

- We created S3 buckets in all commercial regions.

- We launched an EC2 instance in eu-west-1.

- We configured AWS Systems Manager to run a recurring job on the EC2 instance.

- The recurring job downloads the latest threat database.

- The recurring job uploads the latest threat database to all S3 buckets.

Why not use CloudFront or a single S3 bucket? Because when EC2 instances download data from an S3 bucket in the same region, we are not paying for the traffic. So distributing our data among S3 buckets in each region is the cheapest option.

Why not use S3 Cross-Region Replication (CRR)? First, CRR with replication time control costs $0.015 per GB. Second, CRR does not guarantee the replication order, which is essential in our scenario.

Metering and billing

bucketAV is a solution bundled into an AMI and a CloudFormation template. The AWS Marketplace supports different pricing models. Typically, you pay hourly for every EC2 instance launched from the AMI sold through the AWS Marketplace. However, for bucketAV powered by Sophos, we decided to use a different approach. We are charging for the processed data.

How does that work? Every hour, bucketAV reports the amount of processed data to the AWS Marketplace. To do so, bucketAV calls the AWS Marketplace Metering Service API from each EC2 instance. To make that work, each EC2 instance needs an IAM role granting access to aws-marketplace:MeterUsage. Besides that, each EC2 instance must be able to reach the API endpoint https://metering.marketplace.$REGION.amazonaws.com, which is not yet covered by a VPC endpoint, unfortunately.
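As a rough illustration of the hourly report, the following sketch builds a MeterUsage request and sends it through a metering client. It assumes a client that behaves like AWS.MarketplaceMetering from the AWS SDK for JavaScript v2. The product code, the usage dimension name gb_processed, and rounding up to whole gigabytes are placeholders for this example, not bucketAV's actual configuration.

```javascript
'use strict';

const BYTES_PER_GB = 1024 ** 3;

// Builds the parameters for a MeterUsage call.
// productCode and dimension are placeholders (assumptions for this sketch).
function buildMeterUsageParams(productCode, dimension, processedBytes, now) {
  return {
    ProductCode: productCode,
    Timestamp: new Date(now),
    UsageDimension: dimension,
    // UsageQuantity is an integer; this sketch rounds up to whole GB.
    UsageQuantity: Math.ceil(processedBytes / BYTES_PER_GB)
  };
}

// meteringClient is expected to behave like `new AWS.MarketplaceMetering()`
// from the AWS SDK for JavaScript v2 (injected to keep the sketch testable).
async function reportUsage(meteringClient, productCode, dimension, processedBytes, now = new Date()) {
  const params = buildMeterUsageParams(productCode, dimension, processedBytes, now);
  return meteringClient.meterUsage(params).promise();
}

// Possible usage on an EC2 instance (not executed here):
//   const AWS = require('aws-sdk');
//   reportUsage(new AWS.MarketplaceMetering(), 'exampleproductcode', 'gb_processed', processedBytes);
```

Injecting the client keeps the request-building logic testable without network access or a Marketplace listing.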

But how do you test usage-based pricing? When you submit a new product to the AWS Marketplace, AWS creates a restricted listing that is only accessible from your AWS accounts. We used that period to test and fix our metering implementation.

Optimizing performance

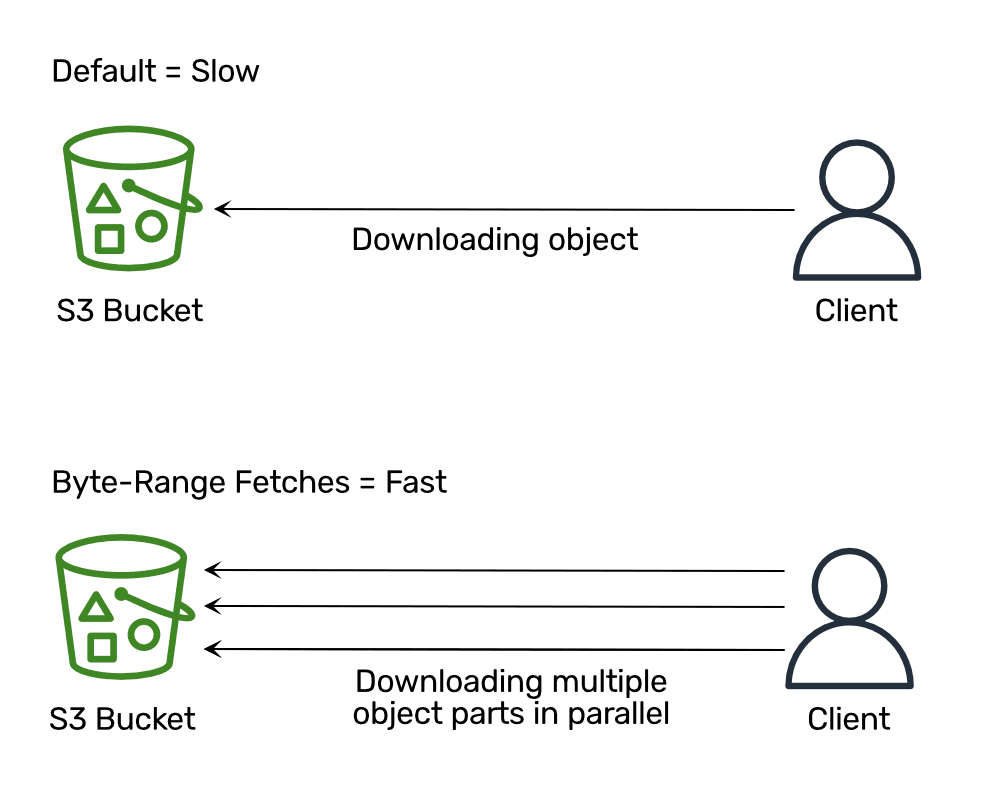

When running performance tests with bucketAV powered by Sophos, we noticed that downloading files from S3 consumed most of the time, yet the EC2 instances did not reach their maximum network bandwidth. Especially when downloading large files, up to the maximum object size of 5 TB, the EC2 instance was idling a lot. When debugging the issue, we found out that AWS recommends downloading files in chunks and in parallel to achieve maximum performance (see Performance Guidelines for Amazon S3: Use Byte-Range Fetches).

Unfortunately, the AWS SDK for JavaScript (v2 and v3) does not offer a built-in way to download an object from S3 in parallel using byte-range fetches. We also could not find any up-to-date libraries, so we built and open-sourced our own implementation: widdix/s3-getobject-accelerator. The following example downloads an object from S3 by downloading four parts of 8 MB in parallel.

const {createWriteStream} = require('node:fs');
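The idea behind parallel byte-range fetches can be sketched independently of any library. The following example is not the implementation of s3-getobject-accelerator; it only shows how an object can be split into ranges and fetched with limited concurrency. The fetchRange callback is hypothetical and stands for any function that returns the bytes for a given Range header value, for example a GetObject request with the Range parameter set.

```javascript
'use strict';

// Splits an object of `size` bytes into inclusive byte ranges of at most `partSize` bytes.
function byteRanges(size, partSize) {
  const ranges = [];
  for (let start = 0; start < size; start += partSize) {
    const end = Math.min(start + partSize, size) - 1;
    ranges.push({start, end, header: `bytes=${start}-${end}`});
  }
  return ranges;
}

// Downloads all parts with at most `concurrency` requests in flight.
// fetchRange(header) is a hypothetical callback returning a Promise<Buffer>.
async function downloadInParallel(size, partSize, concurrency, fetchRange) {
  const ranges = byteRanges(size, partSize);
  const parts = new Array(ranges.length);
  let next = 0;
  // Each worker picks the next unclaimed range until none are left.
  async function worker() {
    while (next < ranges.length) {
      const index = next++;
      parts[index] = await fetchRange(ranges[index].header);
    }
  }
  const workers = Array.from({length: Math.min(concurrency, ranges.length)}, () => worker());
  await Promise.all(workers);
  // Parts are stored by index, so the result is in the original byte order.
  return Buffer.concat(parts);
}
```

Note that this sketch buffers the whole object in memory. For objects in the gigabyte or terabyte range, a streaming approach that writes parts in order as they arrive is the better fit.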

Besides that, we spent a lot of time running performance benchmarks to compare different EC2 instance types. We discovered that a c5.large improves performance by 20% compared to an m5.large for our workload. Also, a c6i.large performed 30% better than an m5.large in our scenario. The lesson learned is that benchmarking different instance types pays off.

Reducing costs

Some of our customers scan a few MBs, while others scan TBs of data. As we use SQS to store all scan jobs, scaling horizontally by adding and removing EC2 instances is the obvious choice. To do so, we are using an auto-scaling group. To reduce costs, we provide the option to run bucketAV on spot instances. However, an auto-scaling group does not support replacing spot instances with on-demand instances when spot capacity is unavailable for a longer period.

However, we found a way to fall back to on-demand EC2 instances if spot capacity is unavailable:

- Create an auto-scaling group spot to launch spot instances.

- Create an auto-scaling group ondemand to launch on-demand instances.

- Scale the desired capacity of the auto-scaling group spot as usual.

- Scale the desired capacity of the auto-scaling group ondemand based on the difference between the desired and actual size of the auto-scaling group spot.
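The arithmetic behind the last step can be sketched as follows. This is only an illustration of the calculation, not the mechanism used in the linked blog post; the function name and the onDemandMax cap are assumptions made for this example.

```javascript
'use strict';

// Returns the desired capacity for the on-demand group: the number of
// instances the spot group wants but has not been able to launch.
// onDemandMax is a hypothetical upper bound for the on-demand group.
function onDemandDesiredCapacity(spotDesired, spotActual, onDemandMax) {
  const missing = Math.max(0, spotDesired - spotActual);
  return Math.min(missing, onDemandMax);
}

// Example: the spot group wants 5 instances but only 2 are running,
// so the on-demand group should provide the remaining 3.
console.log(onDemandDesiredCapacity(5, 2, 10)); // prints 3
```

As soon as spot capacity returns and the actual size of the spot group catches up, the difference drops to zero and the on-demand group scales in again.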

Check out Michael’s blog post Fallback to on-demand EC2 instances if spot capacity is unavailable for more details and code examples.

Terminating gracefully

As we are using auto-scaling groups and spot instances, there are three main reasons why an EC2 instance running bucketAV gets terminated:

- The auto-scaling group terminates an instance because of a scale-in event.

- The auto-scaling group terminates an instance during a rolling update initiated by CloudFormation.

- AWS interrupts a spot instance.

In all these scenarios, we want to ensure bucketAV terminates gracefully. Most importantly, the instance should keep running to complete the currently running scan tasks or at least flush reporting and metering data.

We’ve implemented graceful termination by making use of auto-scaling lifecycle hooks.

- On each EC2 instance, bucketAV polls the metadata service for the current auto-scaling and spot state.

- In case bucketAV detects that the instance has been marked for termination, bucketAV shuts down gracefully.

- bucketAV waits until all running scan jobs are complete. During that time, bucketAV sends a heartbeat to the auto-scaling group.

- After all jobs are finished, bucketAV completes the lifecycle hook.

The following code snippet shows how to fetch the auto-scaling target lifecycle state from IMDSv2. We use parts of our library s3-getobject-accelerator, as the AWS SDK for JavaScript v2 does not support IMDSv2 out of the box.

const {imds} = require('s3-getobject-accelerator');

imds('/latest/meta-data/autoscaling/target-lifecycle-state', IMDS_TIMEOUT, (err, data) => {

And here is how you fetch the notification about a spot instance interruption from IMDSv2.

imds('/latest/meta-data/spot/instance-action', IMDS_TIMEOUT, (err, data) => {
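For readers who want to see the IMDSv2 flow without the library, here is a minimal sketch that talks to the metadata service directly. It is not the imds helper from s3-getobject-accelerator. It assumes Node.js 18 or later for the global fetch function; the function names imdsGet and isMarkedForTermination and the token lifetime of 60 seconds are choices made for this example.

```javascript
'use strict';

const IMDS_BASE = 'http://169.254.169.254';

// Reads a metadata path using IMDSv2: first request a session token, then
// send it along with the actual request. Resolves to null on HTTP 404.
// fetchImpl defaults to the global fetch available in Node.js 18+.
async function imdsGet(path, fetchImpl = fetch) {
  const tokenRes = await fetchImpl(`${IMDS_BASE}/latest/api/token`, {
    method: 'PUT',
    headers: {'X-aws-ec2-metadata-token-ttl-seconds': '60'}
  });
  if (!tokenRes.ok) {
    throw new Error(`IMDS token request failed: ${tokenRes.status}`);
  }
  const token = await tokenRes.text();
  const res = await fetchImpl(`${IMDS_BASE}${path}`, {
    headers: {'X-aws-ec2-metadata-token': token}
  });
  if (res.status === 404) {
    return null;
  }
  if (!res.ok) {
    throw new Error(`IMDS request failed: ${res.status}`);
  }
  return res.text();
}

// True if the auto-scaling group targets the Terminated state for this
// instance or if a spot instance action (interruption) has been scheduled.
async function isMarkedForTermination(fetchImpl = fetch) {
  const lifecycleState = await imdsGet('/latest/meta-data/autoscaling/target-lifecycle-state', fetchImpl);
  if (lifecycleState === 'Terminated') {
    return true;
  }
  // The spot path answers with 404 as long as no interruption is scheduled.
  const spotAction = await imdsGet('/latest/meta-data/spot/instance-action', fetchImpl);
  return spotAction !== null;
}
```

Polling isMarkedForTermination every few seconds would cover both the auto-scaling and the spot case with a single check.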

The following snippet shows how to configure an auto-scaling lifecycle hook with CloudFormation.

AutoScalingGroup:

With the autoscaling:EC2_INSTANCE_TERMINATING lifecycle hook in place, the auto-scaling group waits until someone, for example the instance itself, completes the lifecycle action before terminating the instance. Sending a heartbeat extends the timeout of the lifecycle hook and tells the auto-scaling group that the instance is still busy. The following code snippet shows how to send a heartbeat and complete the lifecycle action using the AWS SDK for JavaScript v2.

const AWS = require('aws-sdk');
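The heartbeat and completion steps could look roughly like the following sketch. It assumes a client that behaves like AWS.AutoScaling from the AWS SDK for JavaScript v2 and uses its recordLifecycleActionHeartbeat and completeLifecycleAction operations. The function name drainAndComplete, the hasRunningJobs callback, and the heartbeat interval are hypothetical and not taken from bucketAV.

```javascript
'use strict';

// autoscaling is expected to behave like `new AWS.AutoScaling()` from the AWS SDK v2.
// hook identifies the lifecycle action: {AutoScalingGroupName, LifecycleHookName, InstanceId}.
// hasRunningJobs is a hypothetical callback reporting whether scan jobs are still in progress.
async function drainAndComplete(autoscaling, hook, hasRunningJobs, heartbeatIntervalMs = 30000) {
  while (hasRunningJobs()) {
    // Extends the lifecycle hook timeout while jobs are still running.
    await autoscaling.recordLifecycleActionHeartbeat(hook).promise();
    await new Promise((resolve) => setTimeout(resolve, heartbeatIntervalMs));
  }
  // Tells the auto-scaling group that it may proceed with terminating the instance.
  await autoscaling.completeLifecycleAction({...hook, LifecycleActionResult: 'CONTINUE'}).promise();
}

// Possible usage on an EC2 instance (not executed here; names are placeholders):
//   const AWS = require('aws-sdk');
//   drainAndComplete(new AWS.AutoScaling(), {
//     AutoScalingGroupName: 'example-asg',
//     LifecycleHookName: 'example-terminating-hook',
//     InstanceId: 'i-0123456789abcdef0'
//   }, () => runningJobs > 0);
```

The heartbeat interval should stay well below the heartbeat timeout configured on the lifecycle hook. Otherwise the auto-scaling group may stop waiting before the running jobs are finished.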

Summary

That’s what we learned while building bucketAV powered by Sophos, a malware protection solution for Amazon S3.