Show Me Your Architecture Vol. 1: Scanning S3 buckets for malware

You can gain theoretical knowledge through the AWS documentation, books like AWS in Action, or AWS training courses. Beyond that, it is very valuable to learn directly from practice. In this series, we inspect real-life AWS architectures. We start with Andreas, who gives us an overview of the architecture for scanning S3 buckets for malware.

This is a cross-post from the Cloudcraft blog.

Would you like to share your architecture as well? Contact architecture@cloudcraft.co.

Who are you?

My name is Andreas Wittig. I’m the co-founder of bucketAV, an antivirus protection solution for Amazon S3. Besides that, I’m writing for the Cloudcraft and cloudonaut blogs. I’ve been designing and implementing AWS architectures since 2013 for a wide variety of consulting clients.

Which problem do you solve?

The challenge is to scan objects on S3 for malware. This includes scanning objects immediately after they have been uploaded to a bucket and scanning all the objects stored in a bucket on a recurring schedule. The solution needs to be cost-efficient and scalable so that it works for scenarios with very small or very large amounts of data.

What does the architecture look like?

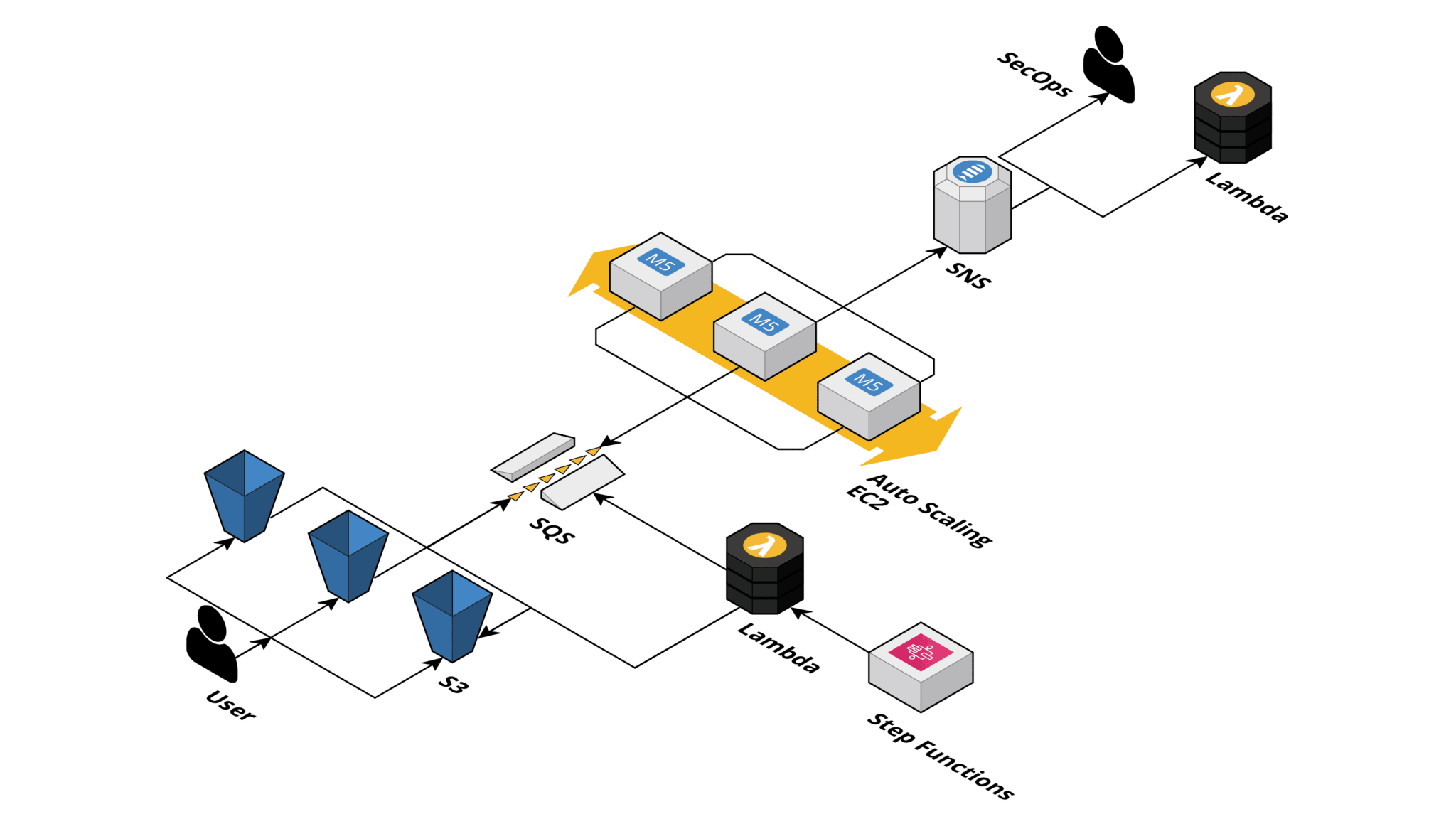

The architecture consists of the following building blocks:

- S3 buckets that are scanned for malware.

- An SQS queue to store and distribute the scan tasks to a fleet of workers.

- An Auto Scaling Group to increase or decrease the number of workers on demand.

- EC2 instances that download and scan objects.

- An SNS topic to publish and distribute results of scanned objects.

- A Lambda function to process the scan results.

- A Step Functions state machine to orchestrate scheduled scans, which requires listing all objects.

The SQS queue is a central part of the architecture. An S3 event notification is sent to the SQS queue whenever a user uploads an object to an S3 bucket. Also, a Lambda function sends messages to the queue to trigger scheduled scans.
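As a rough illustration of what a worker finds in such a message, the following sketch extracts bucket names and object keys from the body of an S3 event notification. The function name `objects_from_s3_event` is made up for this example, and the code is not bucketAV's implementation; it only relies on the documented S3 event message structure.

```python
import json
from urllib.parse import unquote_plus


def objects_from_s3_event(message_body: str) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for created objects in an S3 event notification."""
    event = json.loads(message_body)
    objects = []
    # S3 sends an "s3:TestEvent" message without "Records" when the
    # notification is configured, so fall back to an empty list.
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded, with spaces encoded as "+".
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects
```

Decoding the key matters in practice: without it, a worker would try to download `my+report.pdf` instead of `my report.pdf` and fail with a missing-object error.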

First, the SQS queue decouples the EC2 instances from the senders (S3 event notifications and Lambda). The number of workers is scaled based on the length of the queue, which makes it possible to scale down to zero when no objects need to be scanned. Also, the queue absorbs load peaks.
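The exact scaling policy is not described here, so the following is only a minimal sketch of the idea: derive the desired number of workers from the queue length. The function name and both thresholds (`messages_per_worker`, `max_workers`) are assumptions chosen for illustration.

```python
import math


def desired_workers(queue_length: int,
                    messages_per_worker: int = 100,  # assumed backlog one worker can handle
                    max_workers: int = 10) -> int:   # assumed upper bound for the fleet
    """Map the number of queued scan tasks to a worker count."""
    if queue_length <= 0:
        # An empty queue allows the fleet to scale down to zero.
        return 0
    return min(max_workers, math.ceil(queue_length / messages_per_worker))
```

With these assumed defaults, an empty queue yields zero workers, a single message starts one worker, and a large backlog is capped at `max_workers`, so the queue absorbs the rest of the peak.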

Second, the SQS queue ensures fault tolerance. After a worker scans an object, it deletes the message from the queue. If a worker fails to scan an object, it does not delete the message. Therefore, SQS re-delivers the message so that the scan is retried. After five failed attempts, however, SQS moves the message to the dead-letter queue, which triggers an alarm to inform an operator.
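The delete-on-success pattern can be sketched as follows. This is an illustration under assumptions, not bucketAV's code: `process_batch`, `redrive_policy`, and the `scan` callback are hypothetical names, and `sqs` is expected to behave like a boto3 SQS client (`receive_message`, `delete_message`).

```python
import json


def redrive_policy(dead_letter_queue_arn: str, max_receive_count: int = 5) -> dict:
    """Queue attributes that send a message to the dead-letter queue
    once it has been received max_receive_count times without being deleted."""
    return {
        "RedrivePolicy": json.dumps({
            "deadLetterTargetArn": dead_letter_queue_arn,
            "maxReceiveCount": max_receive_count,
        })
    }


def process_batch(sqs, queue_url: str, scan) -> int:
    """Receive one batch of scan tasks and delete only the ones that succeed."""
    response = sqs.receive_message(
        QueueUrl=queue_url, MaxNumberOfMessages=10, WaitTimeSeconds=20
    )
    scanned = 0
    for message in response.get("Messages", []):
        try:
            scan(message["Body"])  # hypothetical callback: download and scan the object
        except Exception:
            # Do not delete the message. It becomes visible again after the
            # visibility timeout, and SQS delivers it to a worker once more.
            continue
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
        scanned += 1
    return scanned
```

A worker could call `process_batch(boto3.client("sqs"), queue_url, scan)` in a loop, and the dictionary returned by `redrive_policy` could be passed as the `Attributes` argument when creating or updating the queue. The retry limit then lives in the queue configuration, so the worker itself needs no retry counter.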

What other approaches did you consider?

We thought about using containers instead of virtual machines. However, from a cost perspective, that did not make much sense. An EC2 spot instance is by far the cheapest option to get access to compute capacity on AWS.

We also thought about using Lambda to scan objects for malware. We decided against Lambda for the following reasons.

First, the malware database needs to be updated every time Lambda spins up a new execution environment. Doing so adds a lot of overhead.

Second, we were skeptical that we would be able to meet the 15-minute execution time limit in all cases, especially for downloading and scanning large files.

Third, there is no cheaper option to access compute capacity than spot instances.

What are the limitations of the architecture?

By default, an SQS queue does not guarantee that messages are delivered in order. That’s not a big deal in this scenario, as there is no dependency between the objects.

Also, SQS does not guarantee exactly-once delivery. In rare cases, a message might be delivered more than once. Again, that’s not an issue in this scenario because scanning an object multiple times does not change the result.

Another limitation of the architecture is that scaling takes some time. For example, it takes about 10 minutes until the first EC2 instance scans an object after the Auto Scaling Group has scaled down to zero. That’s because of delays added by CloudWatch, Auto Scaling, the bootstrapping of an EC2 instance, and so on. Other approaches, like using Lambda functions, could reduce the latency.

How did the architecture evolve?

The core architecture consisting of SQS, Auto Scaling, and EC2 did not change. However, over the years we added features, primarily by adding Lambda functions.

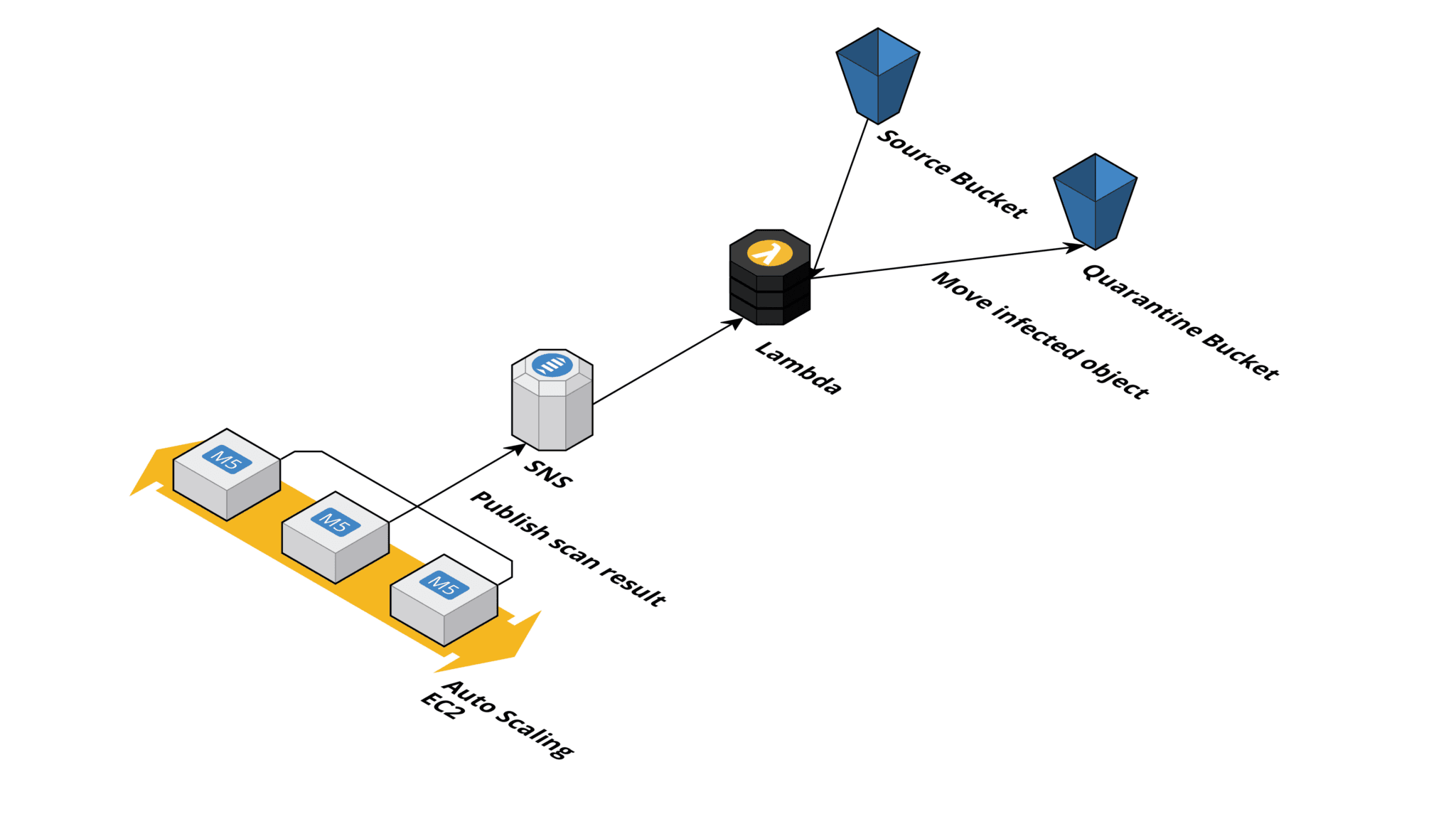

For example, to quarantine infected objects by moving them to a designated S3 bucket, we use a Lambda function subscribed to the SNS topic, which receives all the scan results.
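A minimal sketch of such a function might look like this. The scan result format is not specified here, so the fields `bucket`, `key`, and `status` are assumptions, as are the function name and the key layout in the quarantine bucket. `s3` is expected to behave like a boto3 S3 client.

```python
import json


def quarantine_infected(event: dict, s3, quarantine_bucket: str) -> list[str]:
    """Move infected objects reported via SNS into the quarantine bucket."""
    moved = []
    for record in event["Records"]:
        # Lambda receives the SNS payload as a string in Records[].Sns.Message.
        result = json.loads(record["Sns"]["Message"])  # assumed JSON result format
        if result.get("status") != "infected":         # assumed field and value
            continue
        bucket, key = result["bucket"], result["key"]
        # S3 has no move operation, so copy first and delete afterwards.
        s3.copy_object(
            Bucket=quarantine_bucket,
            Key=f"{bucket}/{key}",  # assumed layout: prefix with the source bucket
            CopySource={"Bucket": bucket, "Key": key},
        )
        s3.delete_object(Bucket=bucket, Key=key)
        moved.append(key)
    return moved
```

Copying before deleting means that a failure in between leaves the infected object in place rather than losing it. Note that a single `copy_object` call only works for objects up to 5 GB; larger objects would need a multipart copy.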

What surprises did you encounter?

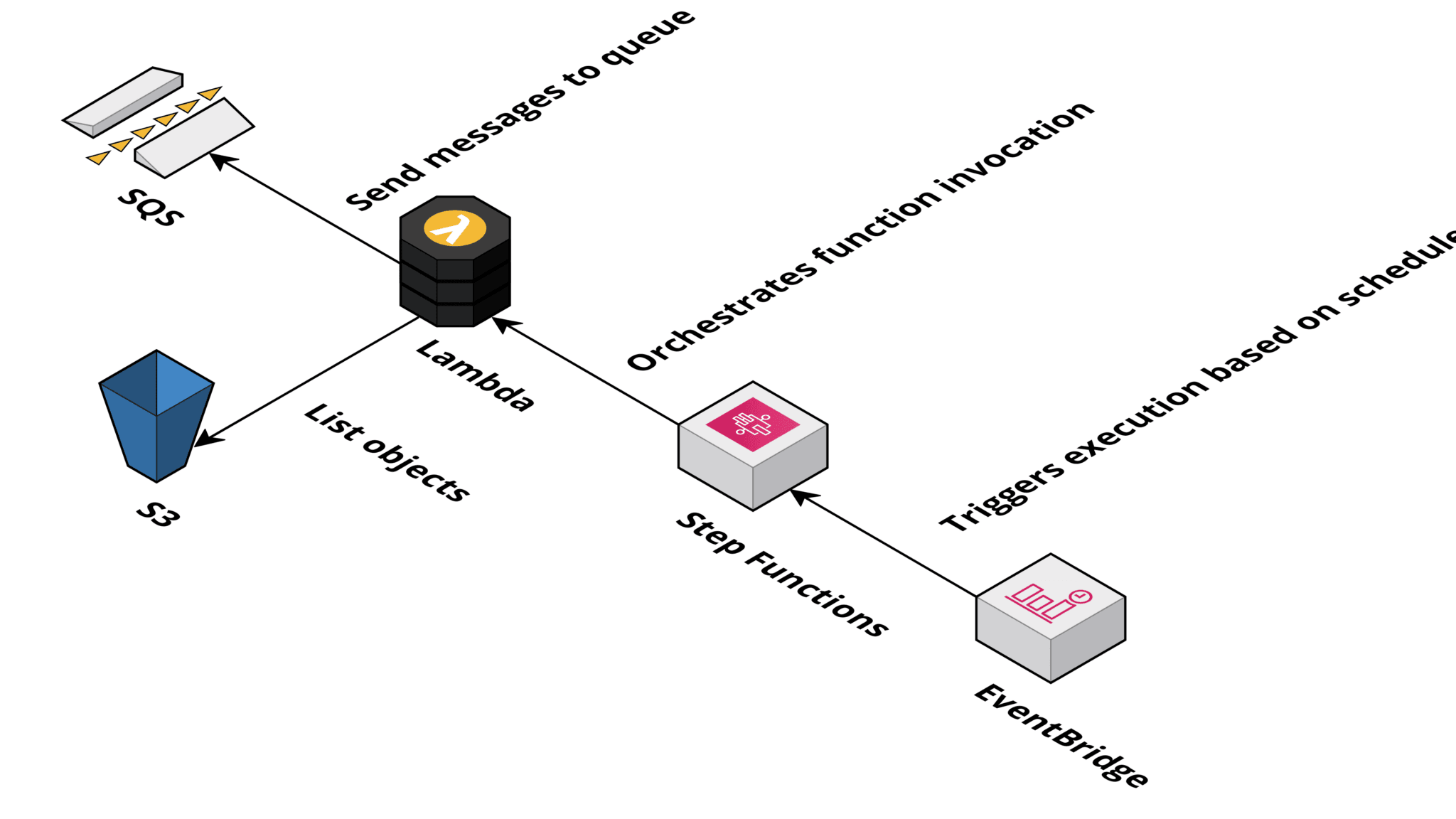

In the beginning, bucketAV scanned objects immediately after upload. But customers were asking about the possibility of scanning all objects in a bucket based on a recurring schedule.

It turns out that this feature is not that easy to implement. The challenge is that a process needs to page through the list of objects. That is a simple task for buckets with 10,000 objects, but it gets tricky when dealing with buckets containing a vast number of objects.

For example, you may want to limit the number of objects scanned per minute when crawling through the buckets. So you need a way to orchestrate this process.

We decided to use Step Functions for orchestration. This also allowed us to use a Lambda function to list objects and send messages to the SQS queue. This solution works very reliably.
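One way to picture the Lambda side of this is a function that handles a single page of the object listing per invocation and hands the continuation token back to the state machine. This is a sketch under assumptions: `enqueue_page` and the message format are hypothetical, and `s3` and `sqs` are expected to behave like boto3 clients.

```python
import json
from typing import Optional


def enqueue_page(s3, sqs, bucket: str, queue_url: str,
                 token: Optional[str] = None, page_size: int = 1000) -> Optional[str]:
    """List one page of objects, enqueue a scan task for each, return the next token."""
    kwargs = {"Bucket": bucket, "MaxKeys": page_size}
    if token:
        kwargs["ContinuationToken"] = token
    page = s3.list_objects_v2(**kwargs)
    keys = [obj["Key"] for obj in page.get("Contents", [])]
    # SendMessageBatch accepts at most 10 entries per call.
    for start in range(0, len(keys), 10):
        entries = [
            # Assumed message format; Id only has to be unique within a batch.
            {"Id": str(i), "MessageBody": json.dumps({"bucket": bucket, "key": key})}
            for i, key in enumerate(keys[start:start + 10])
        ]
        # A production version would also inspect the "Failed" list in the response.
        sqs.send_message_batch(QueueUrl=queue_url, Entries=entries)
    # None signals that the bucket has been listed completely.
    return page.get("NextContinuationToken")
```

In this sketch, the state machine would invoke the function, wait for a configurable time, and invoke it again with the returned token until no token is left. The wait between pages is what throttles the number of objects queued per minute, and each invocation stays short no matter how large the bucket is.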

Summary

Scanning S3 buckets for malware led to a simple but powerful architecture consisting of S3, SQS, Auto Scaling, EC2, SNS, and Lambda. Additional features were added over the years by using Lambda and Step Functions. The architecture has proven to be cost-efficient, scalable, and reliable, and it is used by hundreds of customers worldwide.

Thanks for sharing your architecture with us, Andreas!

Did you learn something new by reading this Show Me Your Architecture volume? Then, please share this article with a friend or coworker. Also, please consider sharing your own story. Contact architecture@cloudcraft.co!
