Serverless Big Data pipeline on AWS

Lambda is a powerful tool for integrating different services on AWS. Over the last few months, I’ve successfully used serverless architectures to build Big Data pipelines, and I’d like to share what I learned.

The benefits of a serverless pipeline are:

- No need to manage a fleet of EC2 instances.

- Highly scalable.

- Pay per execution.

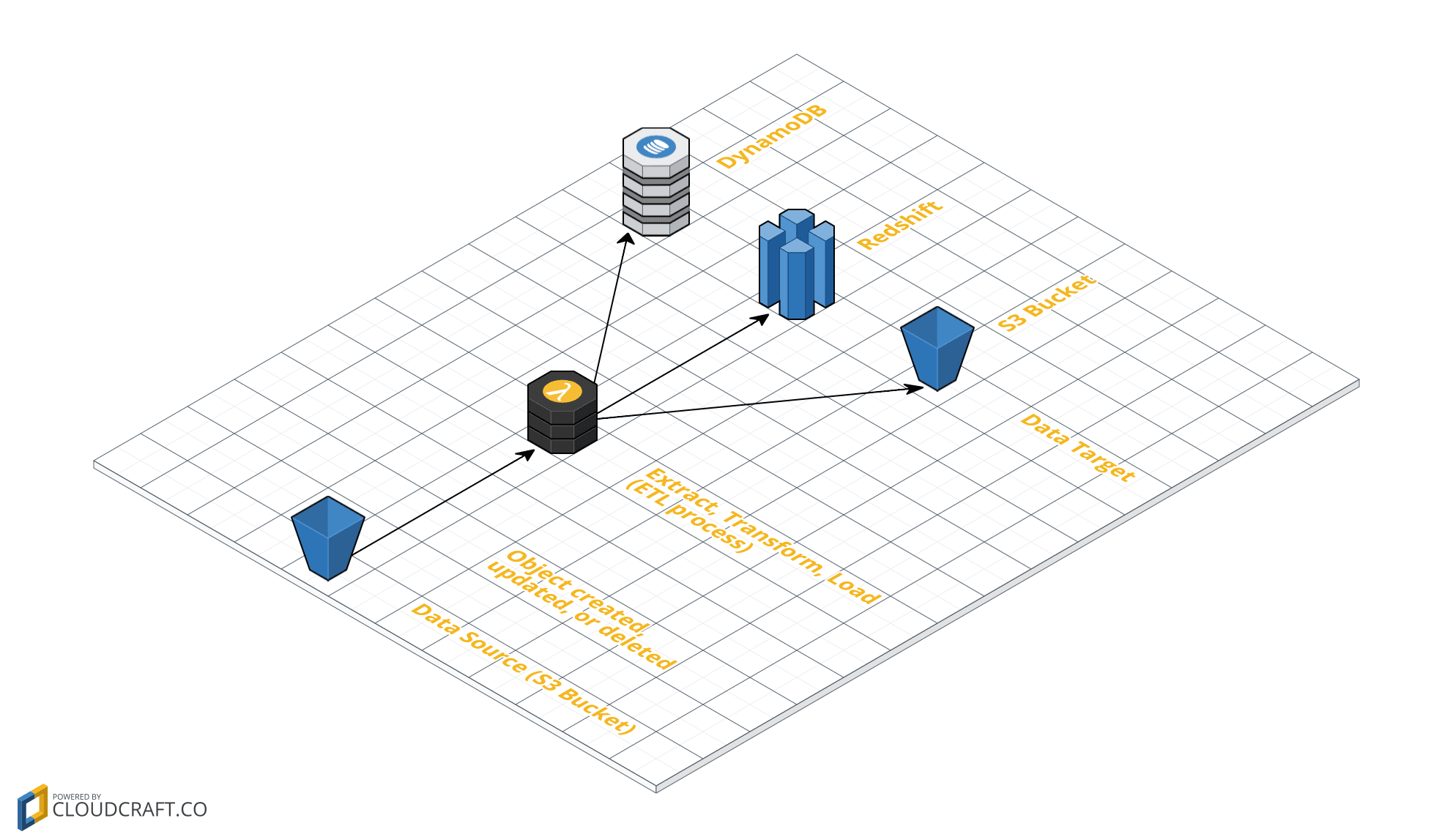

A Big Data pipeline is moving data between data sources and data targets. Often called an ETL process (extract, transform, load) as well. The following figure describes a typical serverless Big Data pipeline:

Use Cases

Using Lambda to implement your Big Data pipeline is especially useful if you need to transform or filter data while moving it from a data source to a data target.

Typical use cases:

- Load CloudFront and ELB logs from S3, transform and filter data, insert into Elasticsearch cluster.

- Load business reports from S3, transform and filter data, insert into Redshift.

- Load event data from Kinesis stream, transform and filter data, store on S3 for further processing.

Other use cases are possible as well. A Lambda function can be triggered by changed data (S3 and DynamoDB), external events, or a schedule (CloudWatch Event Rule). It can access data sources and targets that are reachable over the Internet or inside a VPC.

Seems like there are almost no limits?

Limitations

Lambda is a powerful tool, but compared to an EC2 instance there are limitations as well. The following limitations matter when building a serverless Big Data pipeline:

- Maximum execution duration: 300 seconds

- Maximum memory: 1536 MB

- Ephemeral disk capacity: 512 MB

Real-world example:

- Load CSV file from S3.

- Unzip data.

- Transform data.

- Zip data.

- Upload to S3.

The file contains about 800 MB of unzipped data. A Lambda function that follows the usual asynchronous model of Node.js, where each step hands its complete result to the next one, is not possible here: there is neither enough memory nor enough disk capacity to hold the unzipped data and the transformed data at once.

Solution: Data Streaming

Using streaming instead of linear execution allows you to extract, transform, and load data in chunks from the beginning to the end of the pipeline.

The following source code contains an example implementing a stream for the described scenario in Node.js:

- Load csv.tgz file from S3.

- Unzip data.

- Split at the end of the line.

- Transform data.

- Zip data.

- Upload file to S3.

var AWS = require("aws-sdk");
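The steps listed above can be sketched with Node.js streams roughly as follows. This is a minimal sketch under assumptions, not the author's original code: it treats the file as plain gzip (the steps say "unzip", so no tar handling is included), the helper names `splitLines`, `mapLines`, `processStream`, and `processS3Object` are made up, and so is the `transformed/` output prefix. The S3 wiring assumes an AWS SDK for JavaScript v2 client is passed in, using its `getObject(...).createReadStream()` and `upload(...)` calls.

```javascript
const zlib = require("zlib");
const { Transform, PassThrough, pipeline } = require("stream");
const { StringDecoder } = require("string_decoder");

// Re-chunks a byte stream into one chunk per line (without the trailing "\n").
function splitLines() {
  const decoder = new StringDecoder("utf8"); // keeps multi-byte characters intact
  let rest = "";
  return new Transform({
    readableObjectMode: true,
    transform(chunk, _encoding, callback) {
      const lines = (rest + decoder.write(chunk)).split("\n");
      rest = lines.pop(); // last element is an incomplete line (or "")
      for (const line of lines) this.push(line);
      callback();
    },
    flush(callback) {
      rest += decoder.end();
      if (rest.length > 0) this.push(rest);
      callback();
    },
  });
}

// Applies transformLine to every line and re-appends the newline.
function mapLines(transformLine) {
  return new Transform({
    writableObjectMode: true,
    transform(line, _encoding, callback) {
      let out;
      try {
        out = transformLine(line) + "\n";
      } catch (err) {
        return callback(err);
      }
      callback(null, out);
    },
  });
}

// Unzip -> split -> transform -> zip, chunk by chunk, from source to sink.
function processStream(source, transformLine, sink) {
  return new Promise((resolve, reject) => {
    pipeline(
      source,
      zlib.createGunzip(),
      splitLines(),
      mapLines(transformLine),
      zlib.createGzip(),
      sink,
      (err) => (err ? reject(err) : resolve())
    );
  });
}

// Hypothetical S3 wiring; s3 is assumed to be an AWS SDK v2 S3 client.
function processS3Object(s3, bucket, key, transformLine) {
  const source = s3.getObject({ Bucket: bucket, Key: key }).createReadStream();
  const sink = new PassThrough();
  const upload = s3
    .upload({ Bucket: bucket, Key: `transformed/${key}`, Body: sink })
    .promise();
  return Promise.all([processStream(source, transformLine, sink), upload]);
}
```

Because every stage only holds the chunk it is currently working on, memory usage stays roughly constant regardless of the file size, and nothing is written to the ephemeral disk.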

This approach allows you to process data without hitting the memory or disk space limitations. Of course, the maximum execution duration of 300 seconds still limits the maximum throughput of your serverless data pipeline. If you hit that limit, you need to split your data into smaller chunks.
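One way to work with the duration limit once the data is split is to stop early and hand the rest to a follow-up invocation. The sketch below is an assumption-laden illustration, not part of the original pipeline: `processWithinBudget`, `items` (for example S3 keys of the smaller chunks), `handleItem`, and the default safety margin are hypothetical, while `context.getRemainingTimeInMillis()` is the method Lambda provides on the Node.js context object.

```javascript
// Processes items in order until the remaining invocation time drops to the
// safety margin or below; returns the unprocessed rest so that a follow-up
// invocation can continue with it.
async function processWithinBudget(items, handleItem, context, safetyMarginMs = 30000) {
  let index = 0;
  while (
    index < items.length &&
    context.getRemainingTimeInMillis() > safetyMarginMs
  ) {
    await handleItem(items[index]);
    index += 1;
  }
  return {
    processed: items.slice(0, index),
    remaining: items.slice(index),
  };
}
```

The safety margin should be larger than the time a single chunk is expected to take, since the check only happens between chunks.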