Performance boost and cost savings for DynamoDB

DynamoDB is a managed, scalable, and highly available database. Compared to SQL databases, a big advantage of DynamoDB is the ability to scale read and write throughput. This allows you to scale the database along with your application layer, whether you are using EC2 behind ELB or API Gateway with Lambda.

In this article, you’ll learn how to use ElastiCache as a caching layer in front of DynamoDB to boost performance and save costs.

Ups and downs

A typical project creating a cloud-native application starts with huge enthusiasm during the development process. DynamoDB allows the app to run fast and reliably with almost no operational effort. But when real traffic hits the app, throttled and rejected DynamoDB requests bring beads of sweat to on-call engineers. Increasing the provisioned throughput capacity of DynamoDB solves the issue. Everything seems to be fine until the first AWS bill arrives, including the charges for the newly provisioned throughput. Another bitter pill: each call to DynamoDB is expensive from a performance perspective. Product and QA managers start to ask for performance improvements to increase customer satisfaction.

Avoid DynamoDB requests

The DynamoDB pricing model consists of two components:

- charges for the amount of data stored

- charges for the provisioned read and write throughput capacity

A very simple formula to save costs:

Fewer DynamoDB requests = Less provisioned throughput capacity = Cost savings

The idea of avoiding requests to databases is not new. Using caching systems to store the results of frequent database requests was a popular pattern long before DynamoDB and Auto Scaling existed.

But using a caching system is still a very efficient way to avoid requests to DynamoDB, which allows you to decrease the provisioned throughput capacity and therefore decrease costs.
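To make the formula concrete, here is a minimal sketch of the arithmetic. It assumes that every read that misses the cache consumes exactly one read capacity unit, which only holds for small items and strongly consistent reads. The function name and the traffic numbers are made up for illustration.

```python
def required_read_capacity(reads_per_second: int, cache_hit_percent: int) -> int:
    """Read capacity units still needed once a cache absorbs part of the reads.

    Assumption: each read that misses the cache costs one read capacity unit.
    """
    if not 0 <= cache_hit_percent <= 100:
        raise ValueError("cache_hit_percent must be between 0 and 100")
    miss_percent = 100 - cache_hit_percent
    # Integer ceiling division avoids floating-point rounding surprises.
    return (reads_per_second * miss_percent + 99) // 100


# Hypothetical workload: 1000 reads per second at peak.
without_cache = required_read_capacity(1000, 0)
with_cache = required_read_capacity(1000, 90)
print(without_cache, with_cache)
```

Under these assumptions, a cache that answers 90% of all reads lets you provision a tenth of the read capacity.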

Modern caching systems store data in memory. That’s why a request to the caching system has lower latency than a request to DynamoDB, where the data is stored on SSDs.

Distribute DynamoDB requests among partitions

To be able to scale horizontally, DynamoDB distributes objects among multiple partitions. The partition key of an object points to one of these partitions. The number of partitions per table is defined as follows:

Number of partitions = Max((Used Storage in GB / 10 GB), (Provisioned Read Throughput Capacity / 3000), (Provisioned Write Throughput Capacity / 1000))

DynamoDB adds partitions to your tables as needed in the background. Important to know: the total provisioned throughput is divided evenly among all partitions.

Total Provisioned Throughput / Number of Partitions = Throughput Per Partition

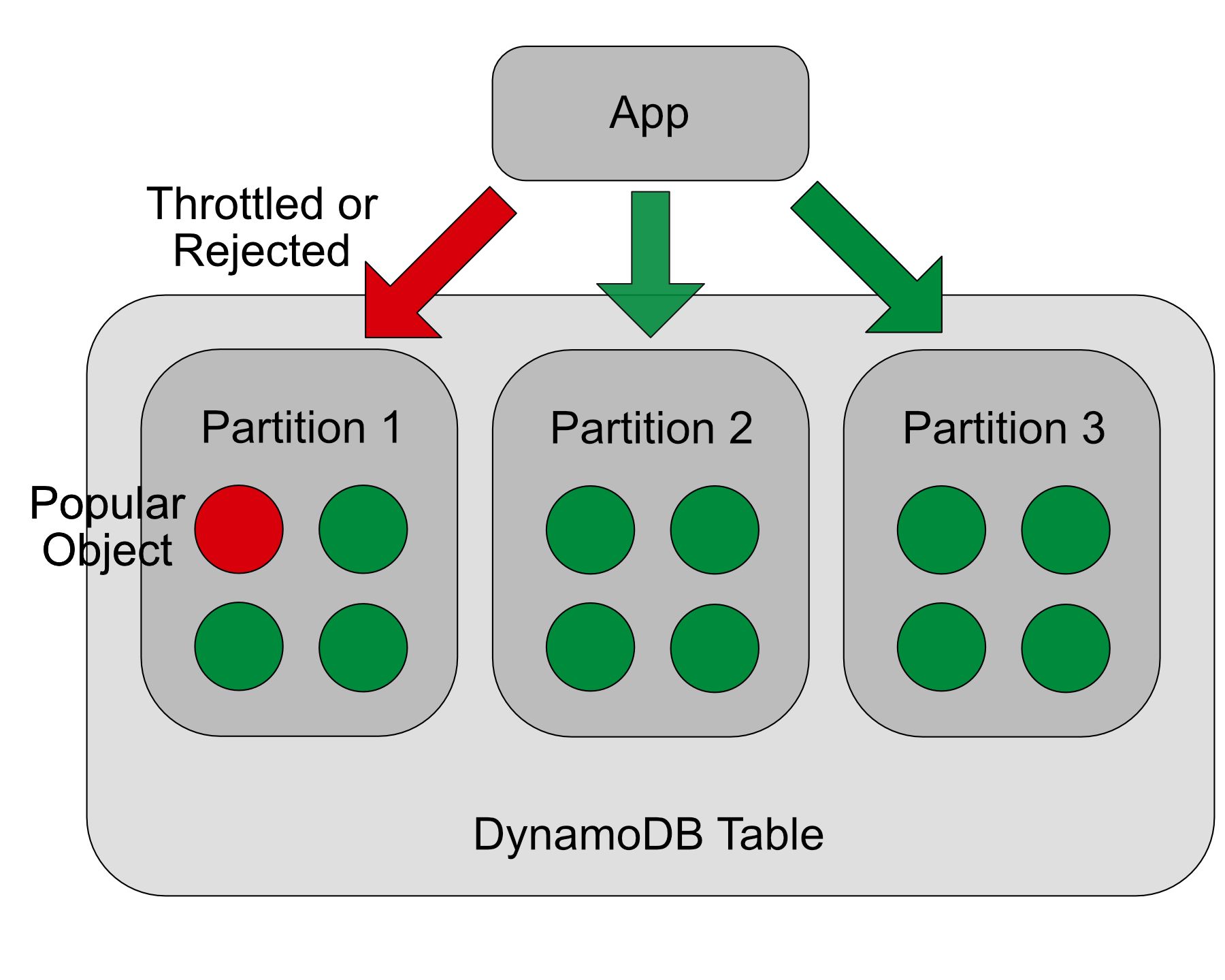

Assuming you have provisioned a read throughput capacity of 9000 units, your DynamoDB table consists of three partitions. You can use 3000 units to read data from each partition. That is not a problem if your read requests hit different objects on all three partitions.

But what if the majority of requests are hitting the same object as illustrated in the following figure? In this case, the maximum read throughput will be 3000 read units instead of the provisioned 9000 read units.
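The arithmetic of this example can be checked with a few lines of Python. This is only a sketch of the two formulas from this section: rounding each term up to a whole partition is my assumption, and the storage size and write capacity are invented values chosen so that read throughput is the deciding factor.

```python
import math


def number_of_partitions(storage_gb: float, read_capacity: int, write_capacity: int) -> int:
    """Partition count according to the formula in this section.

    Assumption: each term is rounded up, and a table has at least one partition.
    """
    return max(
        math.ceil(storage_gb / 10),
        math.ceil(read_capacity / 3000),
        math.ceil(write_capacity / 1000),
        1,
    )


def throughput_per_partition(total_throughput: int, partitions: int) -> float:
    """Provisioned throughput is divided evenly among all partitions."""
    return total_throughput / partitions


# 9000 read units as in the example; 5 GB and 1000 write units are assumed values.
partitions = number_of_partitions(storage_gb=5, read_capacity=9000, write_capacity=1000)
read_units_per_partition = throughput_per_partition(9000, partitions)

# A single hot object lives on one partition, so it can never use more than this.
print(partitions, read_units_per_partition)
```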

Sometimes it is possible to design the data structure of your application in a way that it becomes very unlikely that the majority of requests will hit the same partition.

But there are scenarios where it is impossible to distribute read requests among many different partition keys:

- Storing a product catalog on DynamoDB. A popular product is requested by 90% of all customers.

- Storing blog posts on DynamoDB. The newest blog post is trending on Hacker News and is generating a huge amount of read requests.

Using DynamoDB in these scenarios is probably uneconomical, as you would need to provision a huge read throughput capacity without really using it.

Sounds frustrating? There is a simple solution for both avoiding DynamoDB requests in general and distributing DynamoDB requests among partitions: place a caching system in front of DynamoDB to catch requests hitting the same object and partition over and over again.

ElastiCache for the win!

AWS offers an in-memory store that allows you to cache read requests to DynamoDB and other database systems: ElastiCache. ElastiCache is a managed service providing two different engines: Memcached and Redis. The service is comparable to RDS: there is no need to maintain EC2 instances, you choose the size of cache nodes and cache clusters, and you pay a small fee on top of plain EC2.

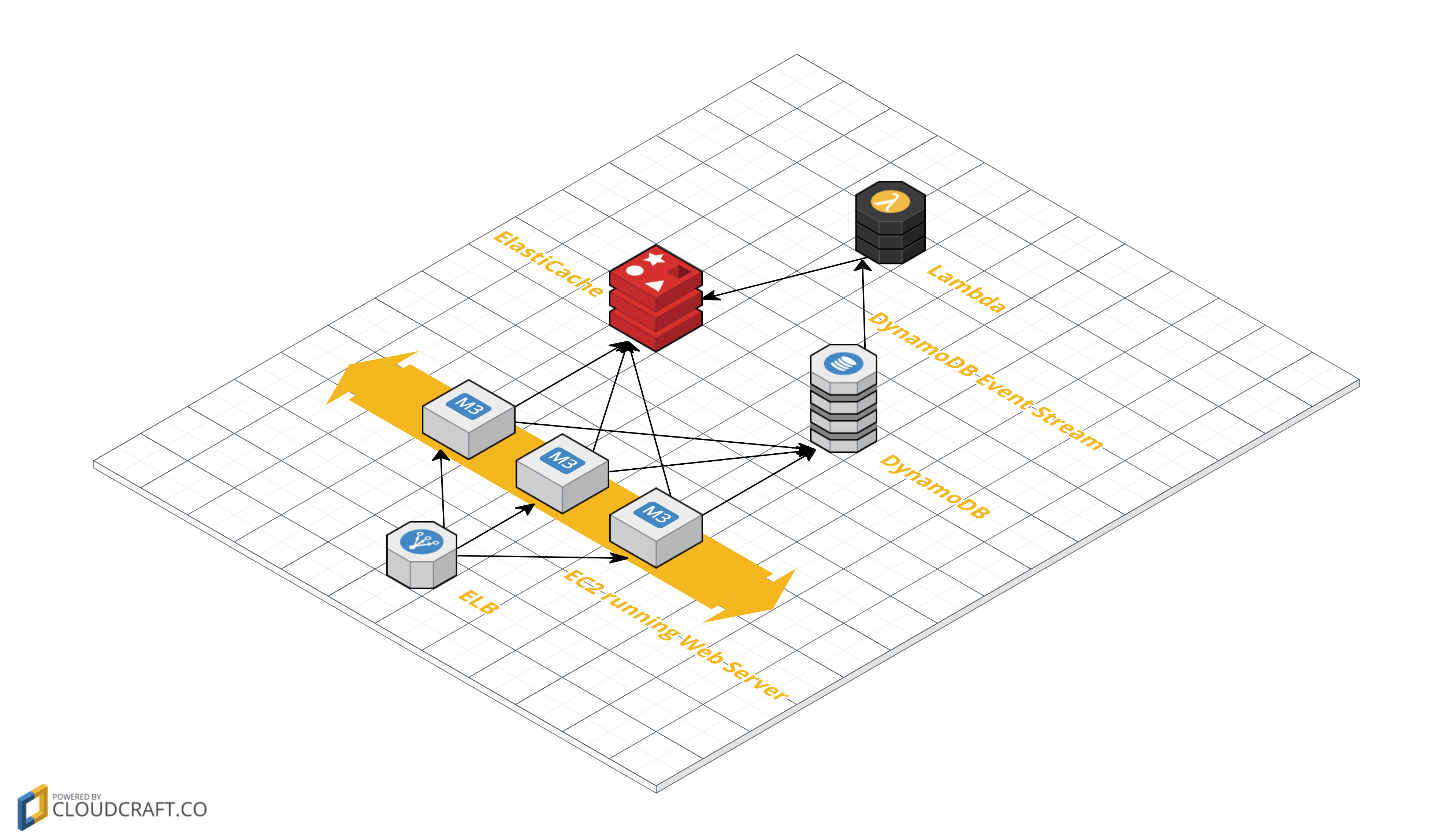

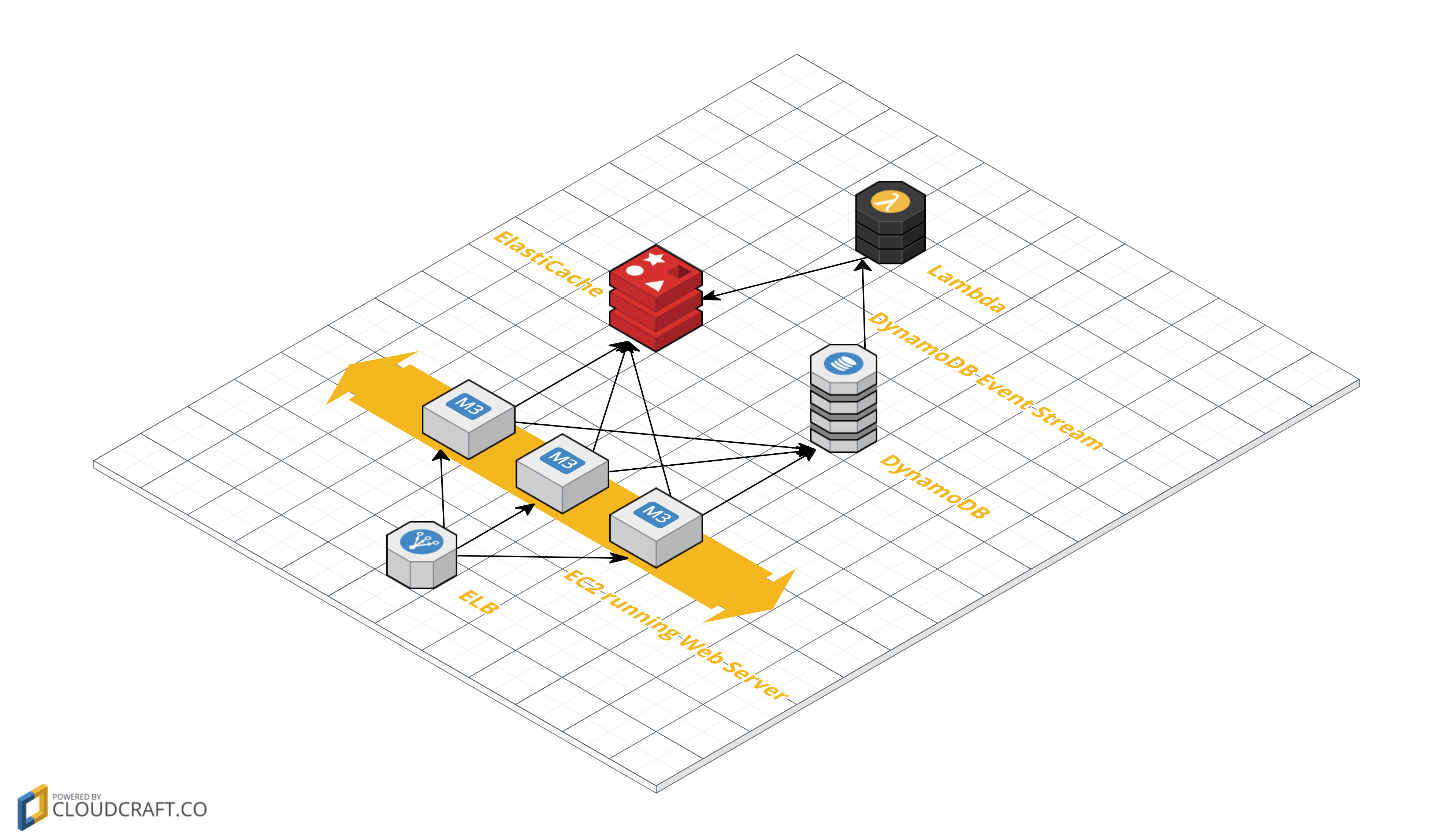

The following figure shows a typical scenario making use of ElastiCache to cache requests to DynamoDB including:

- ELB acting as a load balancer forwarding requests to EC2 instances

- EC2 instances running the application requesting data from ElastiCache and DynamoDB

- DynamoDB storing the data used by the application

- ElastiCache caching read requests to DynamoDB

- Lambda to invalidate and update the cache whenever an object stored in DynamoDB is created, updated or deleted
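On the application side, the read path in this scenario is commonly implemented as a cache-aside lookup: ask the cache first and fall back to DynamoDB on a miss. The following is a minimal sketch of that idea, not a production implementation. `DictCache` is a hypothetical in-memory stand-in for an ElastiCache client, and `load_from_table` is a placeholder for a real DynamoDB read.

```python
import json
from typing import Any, Callable, Dict, List, Optional


class DictCache:
    """Hypothetical in-memory stand-in for a Redis or Memcached client."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


def get_item_cached(
    cache: DictCache,
    load_from_dynamodb: Callable[[str], Optional[Dict[str, Any]]],
    key: str,
) -> Optional[Dict[str, Any]]:
    """Cache-aside read: try the cache first, fall back to DynamoDB on a miss."""
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)
    item = load_from_dynamodb(key)
    if item is not None:
        # Store the serialized item so the next read is served from memory.
        cache.set(key, json.dumps(item))
    return item


# Placeholder for the DynamoDB table; a real loader would call the AWS SDK.
table = {"product-1": {"name": "Popular product", "price": 10}}
dynamodb_reads: List[str] = []


def load_from_table(key: str) -> Optional[Dict[str, Any]]:
    dynamodb_reads.append(key)  # record every request that reaches the table
    return table.get(key)


cache = DictCache()
first = get_item_cached(cache, load_from_table, "product-1")
second = get_item_cached(cache, load_from_table, "product-1")
print(len(dynamodb_reads))
```

Only the first read reaches the table; the second one is answered by the cache. With a real cache client you would typically also set an expiry time as a safety net against stale entries.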

Phil Karlton said:

There are only two hard things in Computer Science: cache invalidation and naming things.

Sure, naming things is hard. But setting up cache invalidation is not that hard when using the following building blocks: ElastiCache, DynamoDB, DynamoDB Streams and Lambda. Stay tuned for my next blog post covering exactly this topic!
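As a rough preview, a Lambda function subscribed to a DynamoDB stream could evict the affected cache entry for every change record. The sketch below handles only the simplest case (delete on every change) and relies on the standard shape of DynamoDB Streams events, where each record carries the item keys under `dynamodb.Keys`. The cache key scheme and the `DictCache` stand-in are assumptions for illustration; the application would have to build its cache keys the same way.

```python
from typing import Any, Dict, Optional


class DictCache:
    """Hypothetical in-memory stand-in for a Redis or Memcached client."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value

    def delete(self, key: str) -> None:
        self._data.pop(key, None)


def cache_key_from_stream_keys(keys: Dict[str, Dict[str, Any]]) -> str:
    """Build a cache key from typed stream keys such as {"id": {"S": "product-1"}}.

    The "name=value|name=value" scheme is an assumption made for this sketch.
    """
    parts = []
    for name in sorted(keys):
        (value,) = keys[name].values()  # unwrap the single type descriptor
        parts.append(f"{name}={value}")
    return "|".join(parts)


def handle_stream_event(event: Dict[str, Any], cache: DictCache) -> int:
    """Evict the cache entry for every created, updated, or deleted item."""
    invalidated = 0
    for record in event.get("Records", []):
        cache.delete(cache_key_from_stream_keys(record["dynamodb"]["Keys"]))
        invalidated += 1
    return invalidated


# Hand-written sample event with a single MODIFY record.
sample_event = {
    "Records": [
        {"eventName": "MODIFY", "dynamodb": {"Keys": {"id": {"S": "product-1"}}}}
    ]
}
stream_cache = DictCache()
stream_cache.set("id=product-1", '{"name": "Popular product"}')
invalidated_count = handle_stream_event(sample_event, stream_cache)
print(invalidated_count)
```

In a real deployment, this logic would sit inside a `handler(event, context)` function that talks to the ElastiCache cluster instead of an in-memory dictionary.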
