The Life of a Serverless Microservice on AWS

In this post, I will demonstrate how you can develop, test, deploy, and operate a production-ready Serverless Microservice using the AWS ecosystem. The combination of AWS Lambda and Amazon API Gateway allows us to operate a REST endpoint without managing any virtual machines. We will use Amazon DynamoDB as our database, Amazon CloudWatch for metrics and logs, and AWS CodeCommit and AWS CodePipeline as our delivery pipeline. By the end, you will know how to wire together a bunch of AWS services to run a system in production. This post is a summary of my talk The Life of a Serverless Microservice on AWS, which I gave at DevOpsCon 2016 in Berlin.

The Life

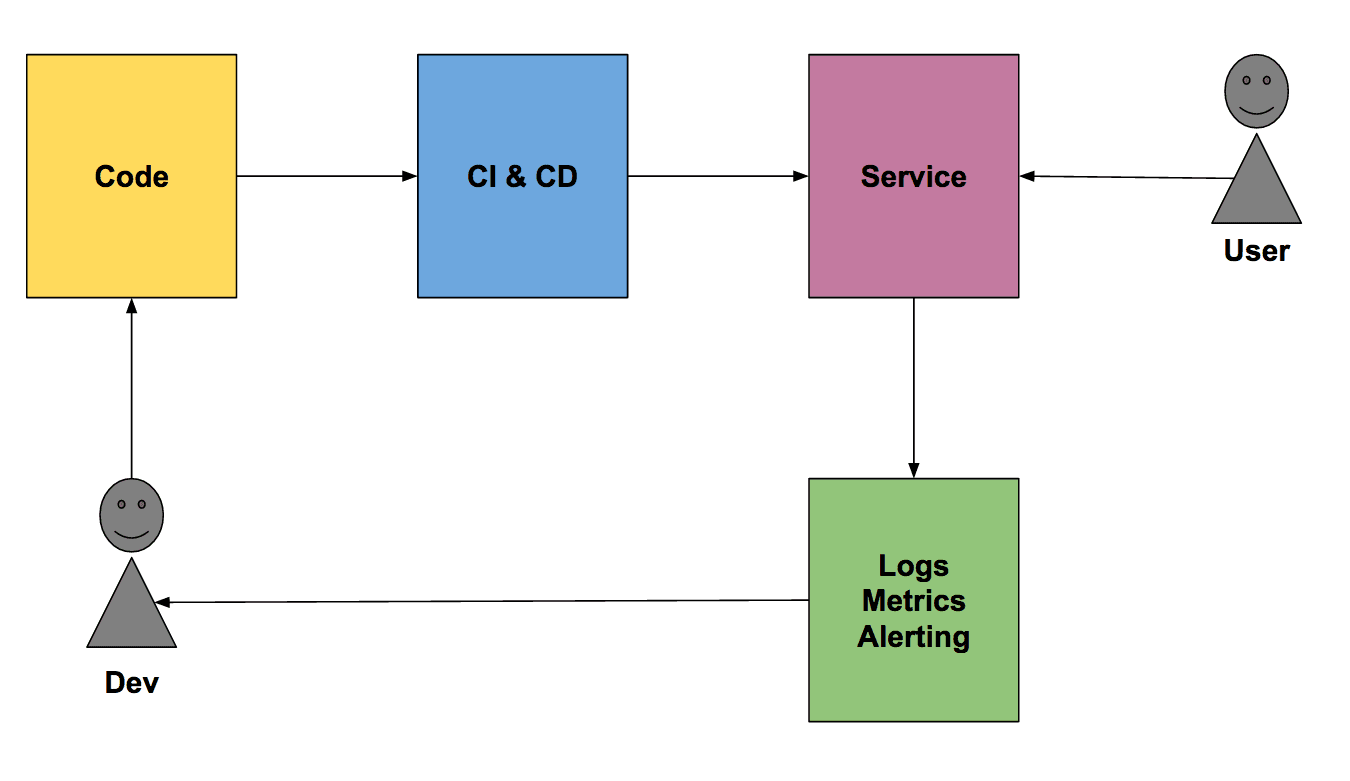

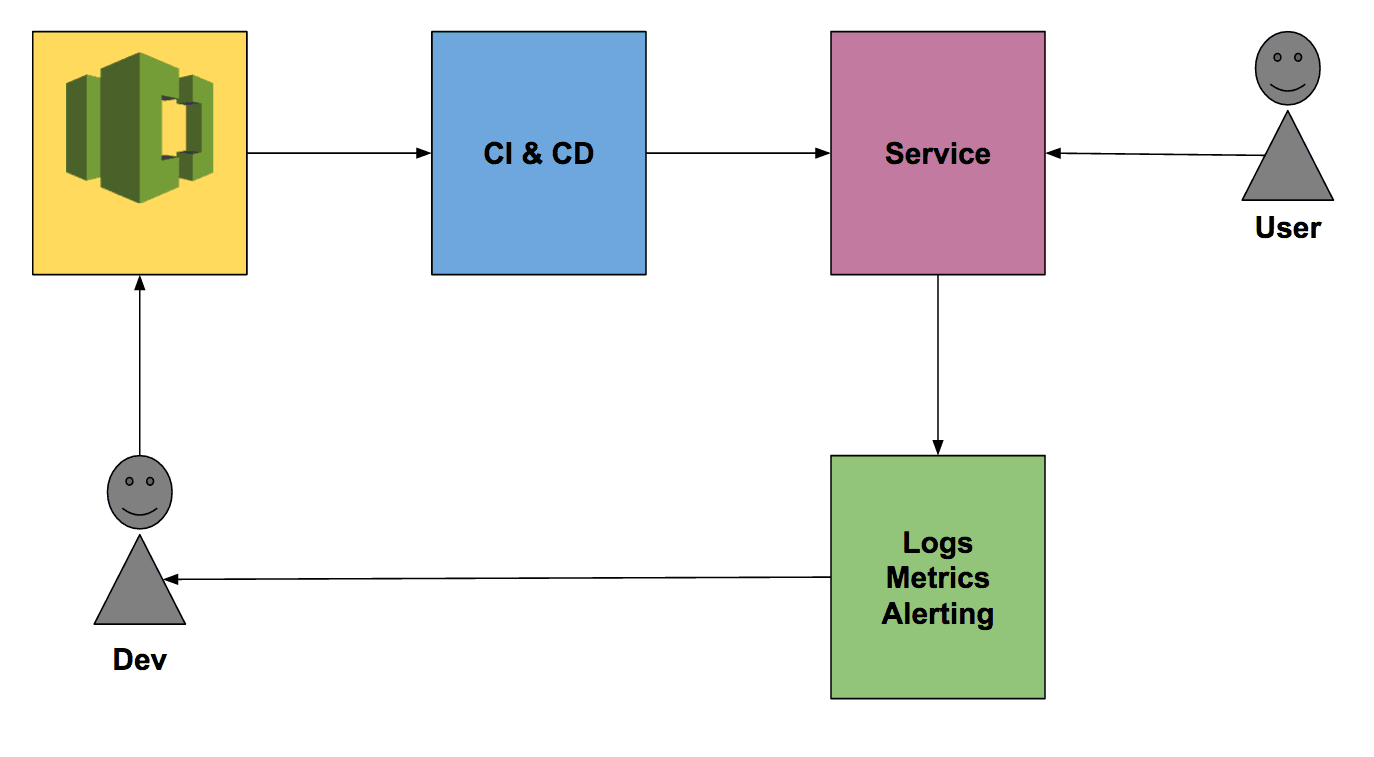

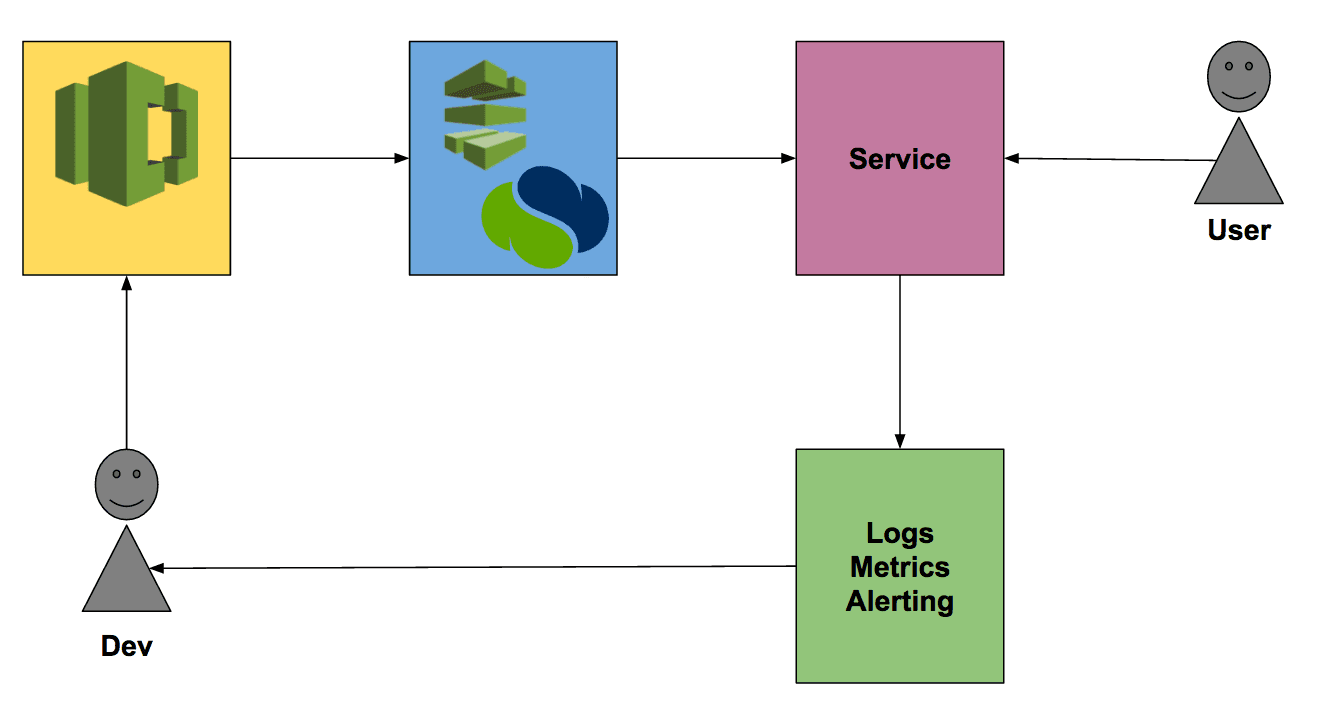

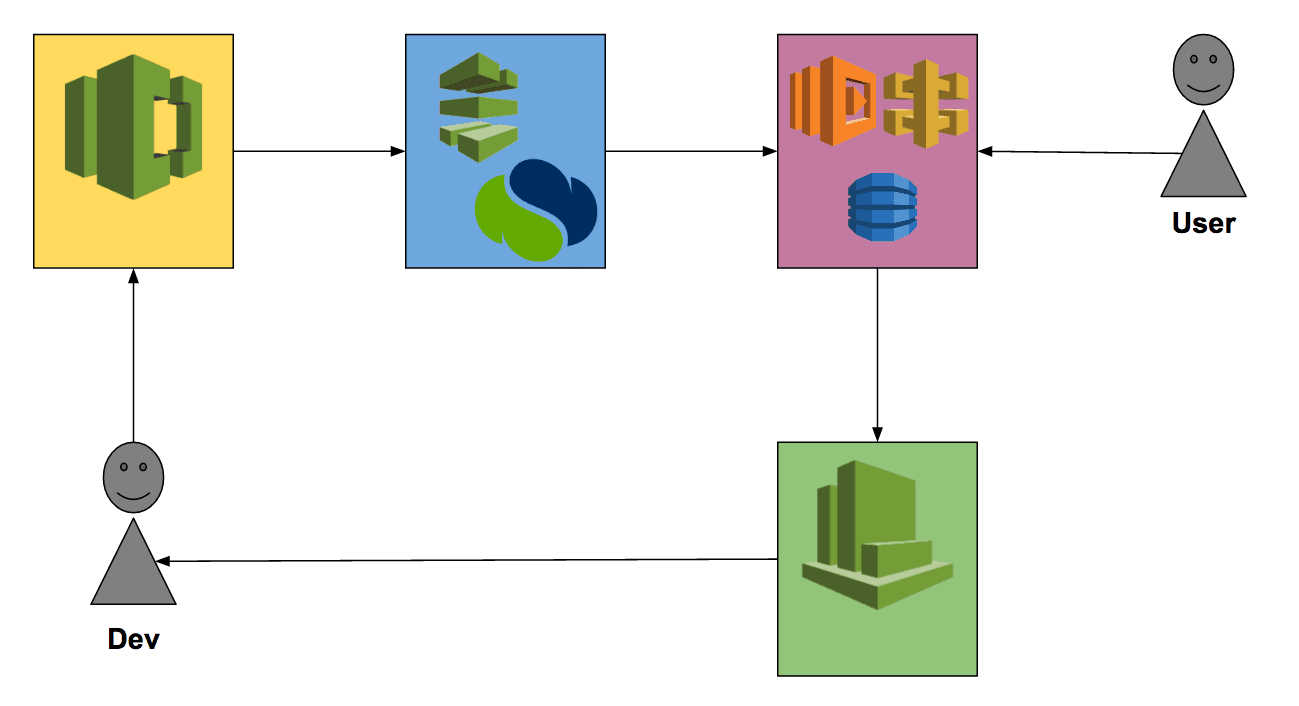

My idea of the Life of a Serverless Microservice on AWS is best described by this figure:

A developer pushes code changes to a repository. This git push triggers the CI & CD pipeline, which deploys a new version of the service that our users consume. The load generated on the system produces logs and metrics that the developer uses to operate the system. This operational feedback is used to improve the quality of the system.

What is Serverless?

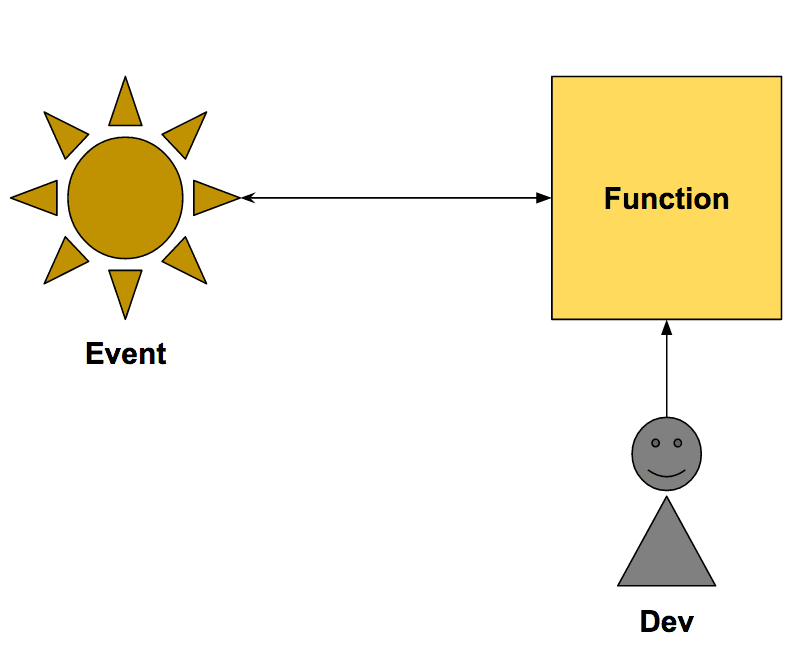

Serverless or Function as a Service (FaaS) describes the idea that the deployment unit is a single function. A function takes input and returns output. The responsibility of the FaaS user is to develop the function, while the FaaS provider’s responsibility is to execute the function whenever some event happens. The following figure demonstrates this idea.

Some possible events:

- File uploaded

- E-Mail received

- Database changed

- Manually invoked

- HTTP API called

- Cron

The cool thing about Serverless is that:

- you only pay when the function is executed

- no under/over provisioning

- no boot time

- no patching

- no SSH

- no load balancing

Read more about Serverless Architectures if you are interested in the details.

What is a Microservice?

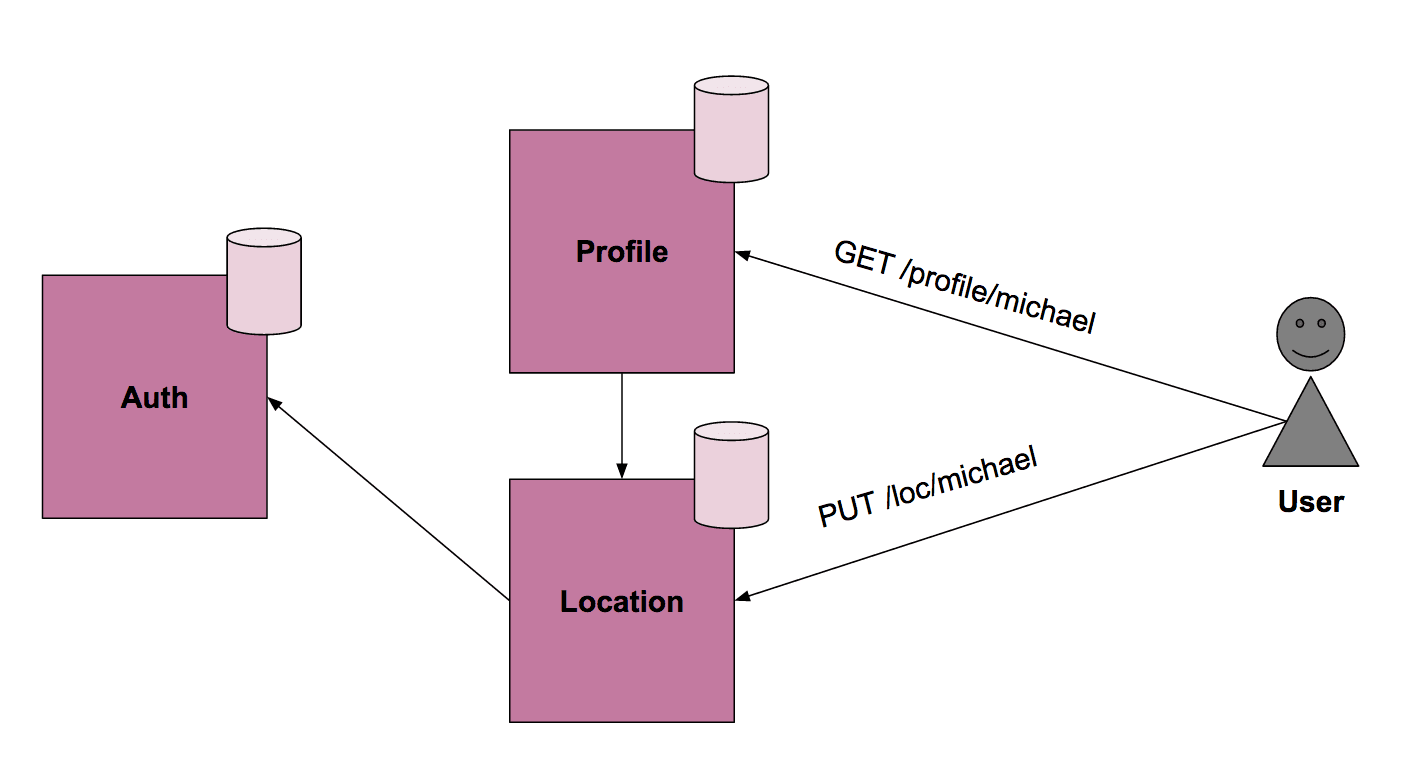

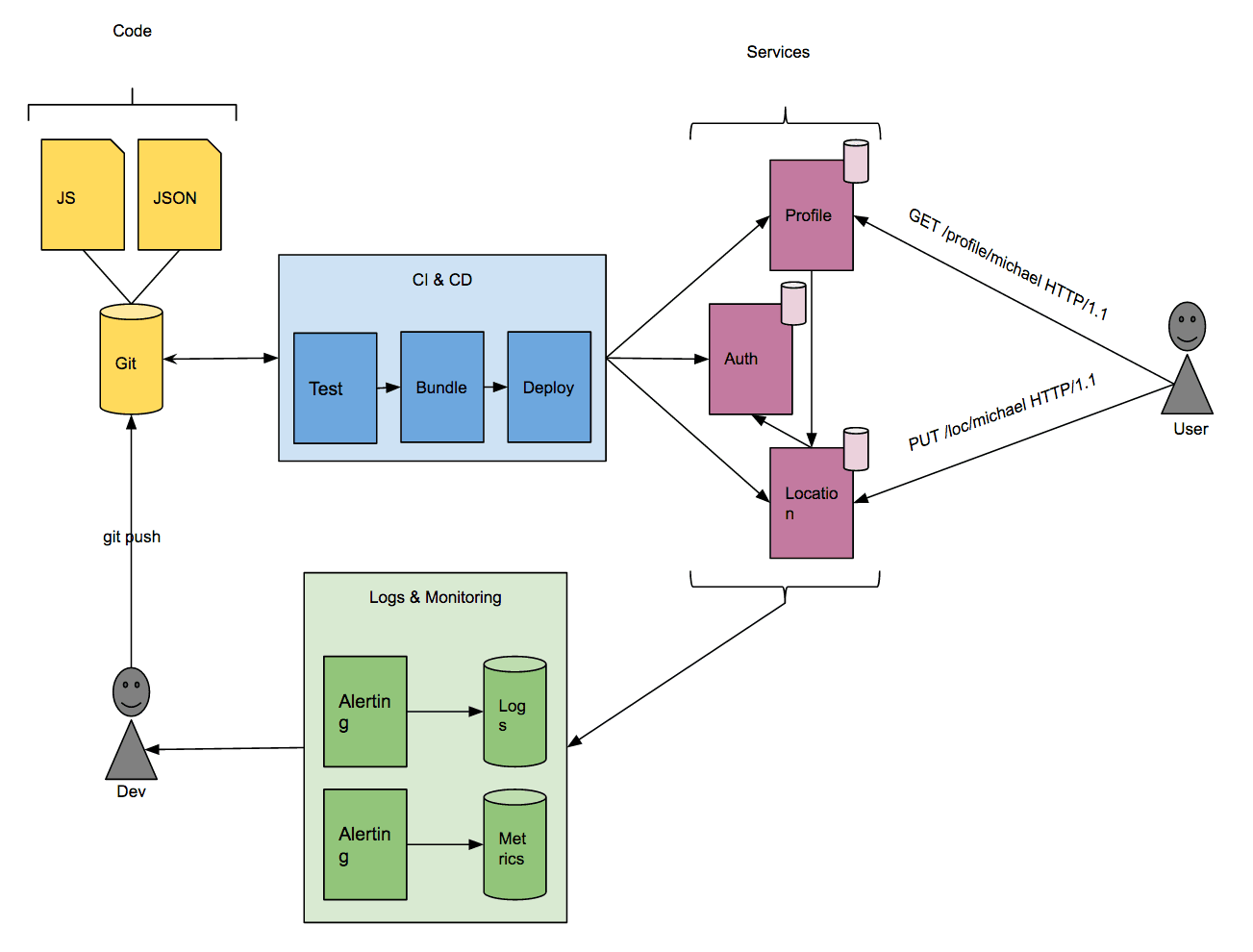

Imagine a small system where each user has a publicly visible profile page that shows the user’s location information. The idea of a Microservice Architecture is that you slice your system into smaller units around bounded contexts. I identified three of them:

- Authentication Service: Handles authentication.

- Location Service: Manages location information via a private HTTP API. Uses the Authentication Service internally to authenticate requests.

- Profile Service: Stores and retrieves the profile via a public HTTP API. Makes an internal call to the Location Service to retrieve the location information.

Each service gets its own database, and services communicate with each other only over well-defined APIs, never through the database!
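To make that rule concrete, here is a minimal sketch of how the Profile Service might combine its own data with data owned by the Location Service. The names `getProfile`, `profileStore`, and `locationApi` are hypothetical and are not taken from the actual source code. The only point is that the location arrives through an API call and not through a read on the Location Service's table.

```javascript
// Hypothetical sketch: the Profile Service owns `profileStore` (its own
// database) and reaches location data only through `locationApi`.
function getProfile(userId, deps, cb) {
  deps.profileStore.get(userId, function(err, profile) {
    if (err) { return cb(err); }
    if (!profile) { return cb(new Error('profile not found')); }
    // Cross the service boundary via the Location Service API,
    // not by reading the Location Service's database.
    deps.locationApi.getLocation(userId, function(locErr, location) {
      if (locErr) { return cb(locErr); }
      cb(null, { id: userId, name: profile.name, location: location });
    });
  });
}
```

Because both dependencies are passed in, the function can be exercised with simple stubs before any AWS resource exists.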

Let’s get started!

The source code and installation instructions can be found at the bottom of this page. Please use the us-east-1 region! We will use services that are not available in other AWS regions at the moment.

Code

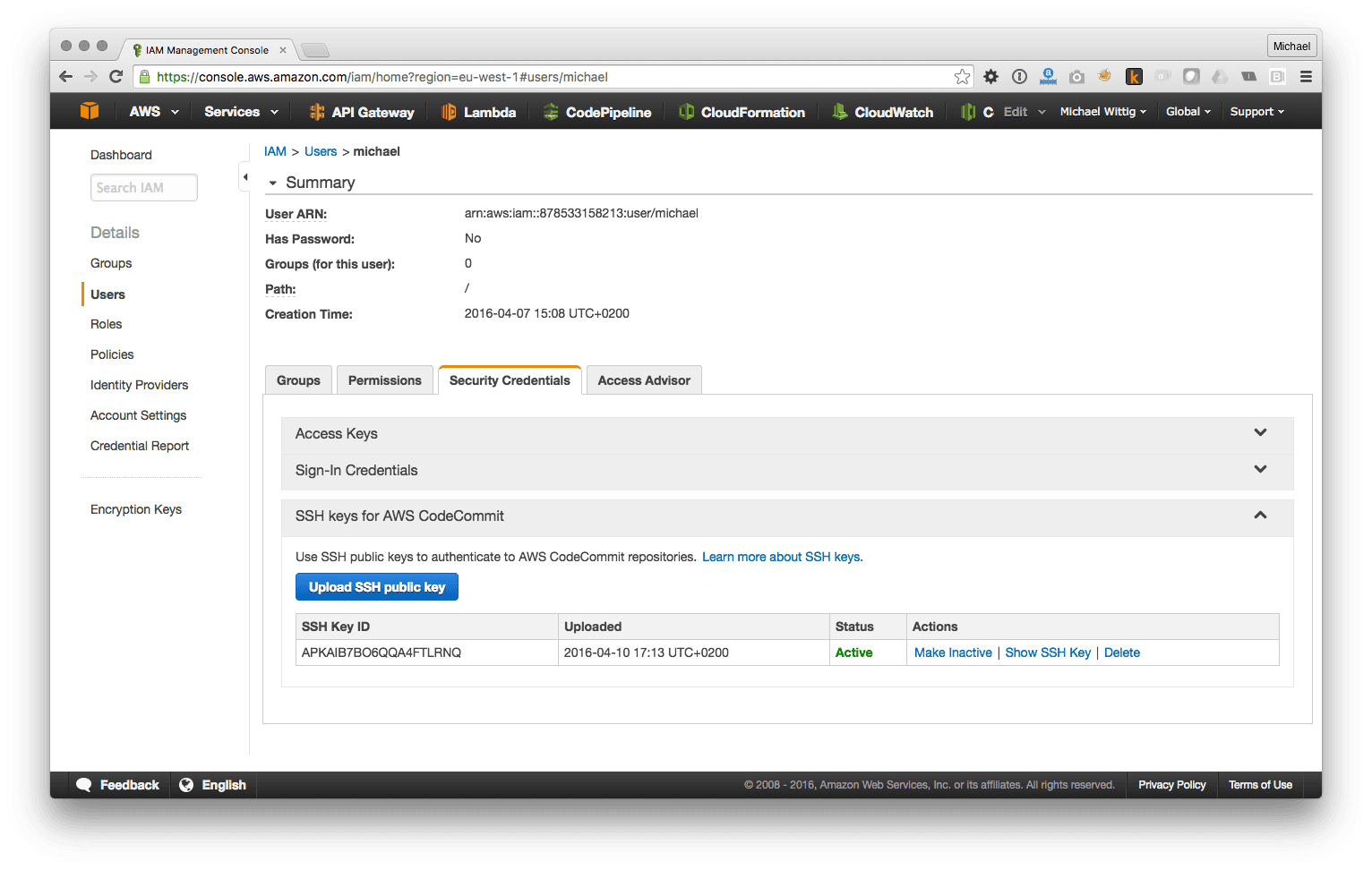

AWS CodeCommit is a hosted Git repository that uses IAM for access control. You need to upload your public SSH key to your IAM User as shown in the following figure:

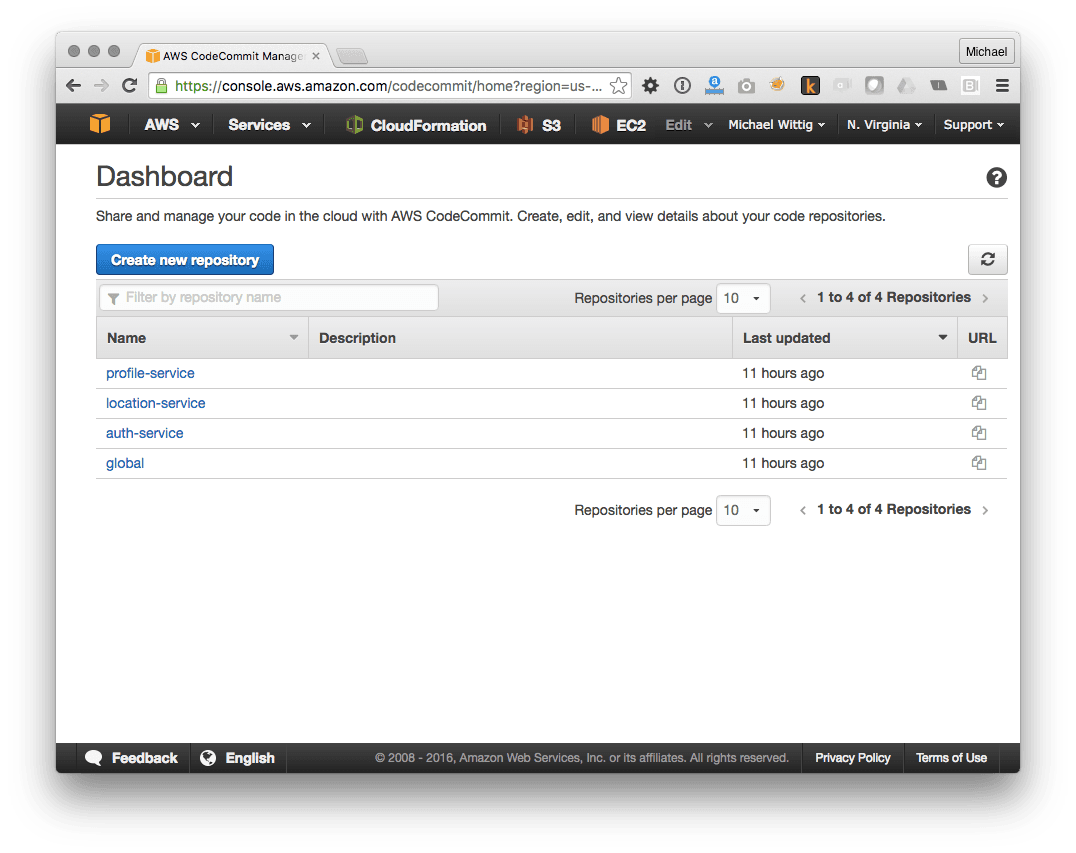

Creating a repository is simple. Just click on the Create new Repository button in the AWS Management Console.

We need a repository for each service. You can then clone the repository locally with the following command. Replace $SSHKeyID with the SSH Key ID of your IAM user and $RepositoryName with the name of your repository.

git clone ssh://$SSHKeyID@git-codecommit.us-east-1.amazonaws.com/v1/repos/$RepositoryName

We now have a home for our code.

Continuous Integration & Continuous Delivery

AWS CodePipeline is a service to manage a build and deployment pipeline. CodePipeline itself is only responsible for triggering integrations that do things like:

- build

- test

- deploy

We need a pipeline for each service that:

- Downloads the sources from CodeCommit if something changes there

- Runs our tests and bundles the code in a zip file for Lambda

- Deploys the zip file

Luckily, CodePipeline has native support for downloading sources from CodeCommit. To run our tests and bundle the source files, we will use a third-party integration that triggers Solano CI. The deployment step is implemented in a Lambda function that triggers a CloudFormation stack update. A CloudFormation stack is a bunch of AWS resources managed by CloudFormation based on a template that you provide (Infrastructure as Code). Read more about CloudFormation on our blog.
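The deployment step could look roughly like the following sketch. It is not the function from the repository. It assumes the zip file location is handed to the template through two parameters named `S3Bucket` and `S3Key` (hypothetical names), and it takes the AWS clients as an argument so the logic can be run without AWS access. In Lambda you would pass `new AWS.CloudFormation()` and `new AWS.CodePipeline()` from the aws-sdk package.

```javascript
// Sketch of a deployment function invoked by CodePipeline.
// `clients` is injected; parameter keys and stack name are assumptions.
function deploy(event, clients, stackName, cb) {
  var job = event['CodePipeline.job'];
  // Location of the zip file produced by the previous pipeline stage.
  var artifact = job.data.inputArtifacts[0].location.s3Location;
  var params = {
    StackName: stackName,
    UsePreviousTemplate: true,
    Capabilities: ['CAPABILITY_IAM'],
    Parameters: [
      { ParameterKey: 'S3Bucket', ParameterValue: artifact.bucketName },
      { ParameterKey: 'S3Key', ParameterValue: artifact.objectKey }
    ]
  };
  clients.cloudformation.updateStack(params, function(err) {
    if (err) {
      // Report the failure so the pipeline stage is marked as failed.
      return clients.codepipeline.putJobFailureResult({
        jobId: job.id,
        failureDetails: { type: 'JobFailed', message: String(err.message) }
      }, function() { cb(err); });
    }
    // Tell CodePipeline that the job is done.
    clients.codepipeline.putJobSuccessResult({ jobId: job.id }, cb);
  });
}
```

Note that `updateStack` returns as soon as CloudFormation has accepted the update, so this sketch reports success before the stack update has finished. Waiting for completion is left out for brevity.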

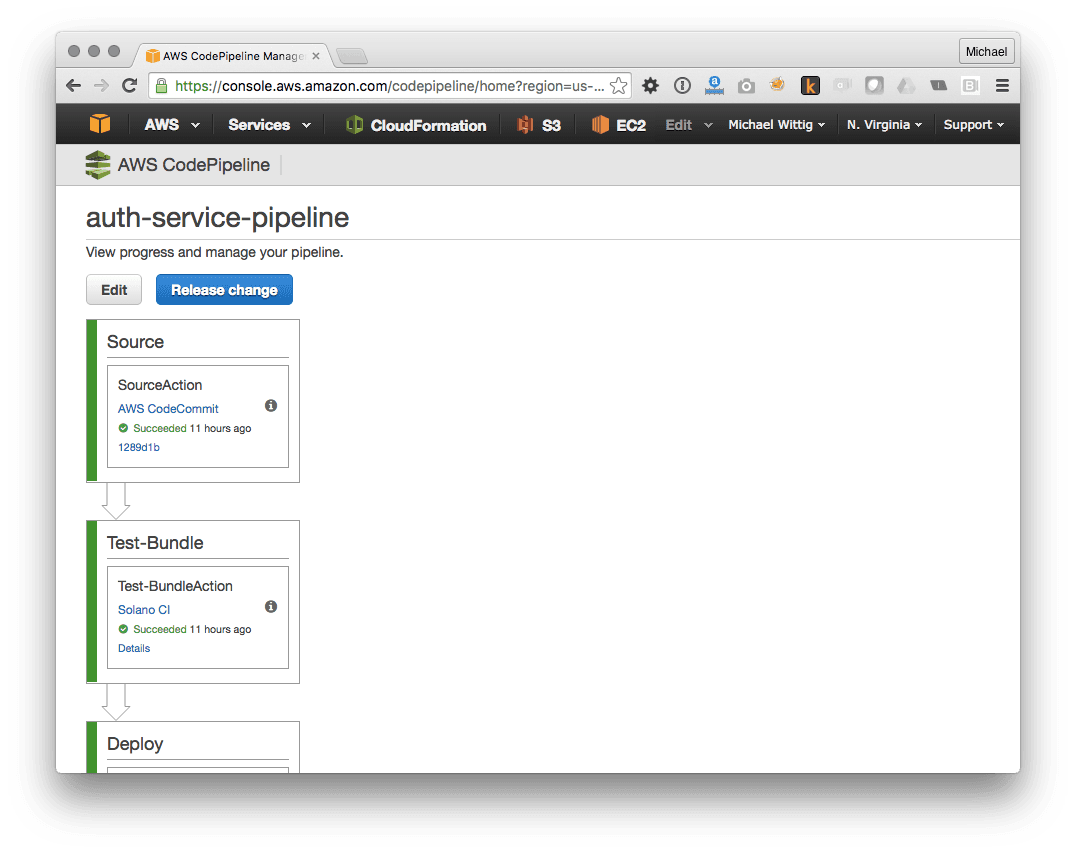

The following figure shows the pipeline:

The cool thing about CloudFormation is that you can define the pipeline itself in a template. So we get Pipeline as Code.

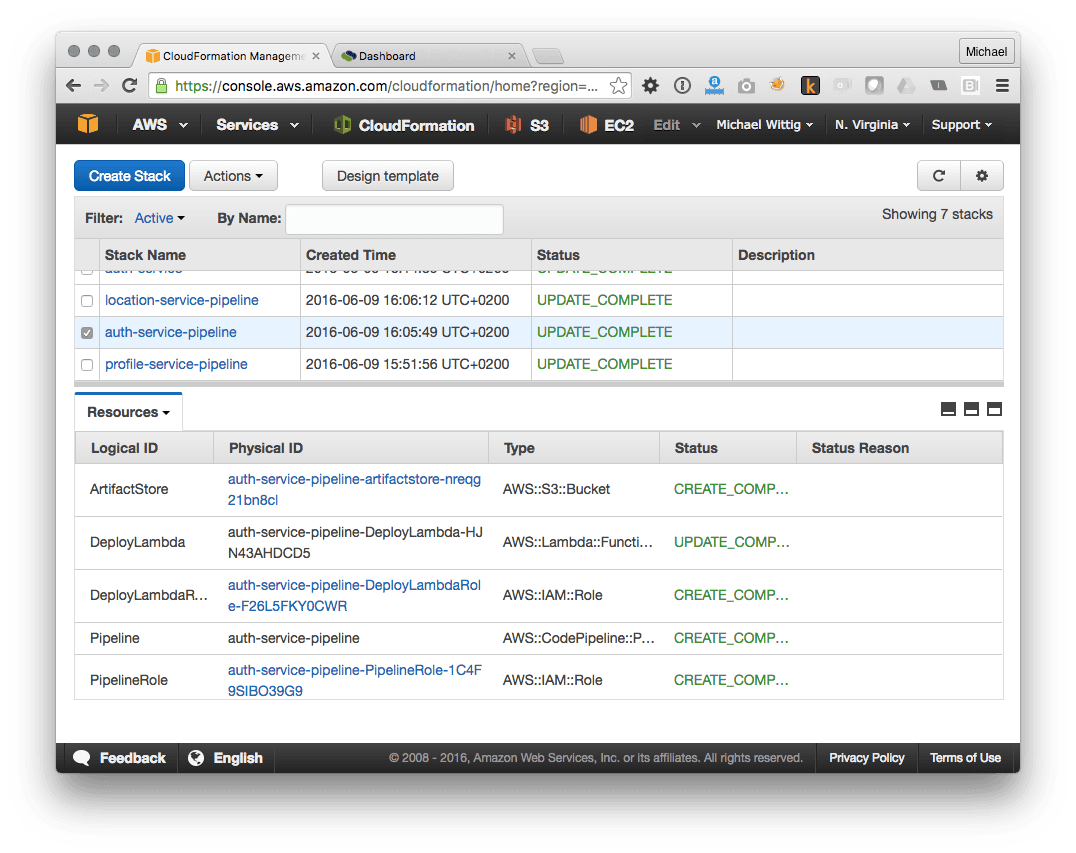

The CloudFormation template that is used for service deployment describes a Lambda function, DynamoDB database, and an API Gateway. After deployment you will see one CloudFormation stack for each service:

We now have a CI & CD pipeline.

Service

We use a bunch of AWS Services to run our Microservices:

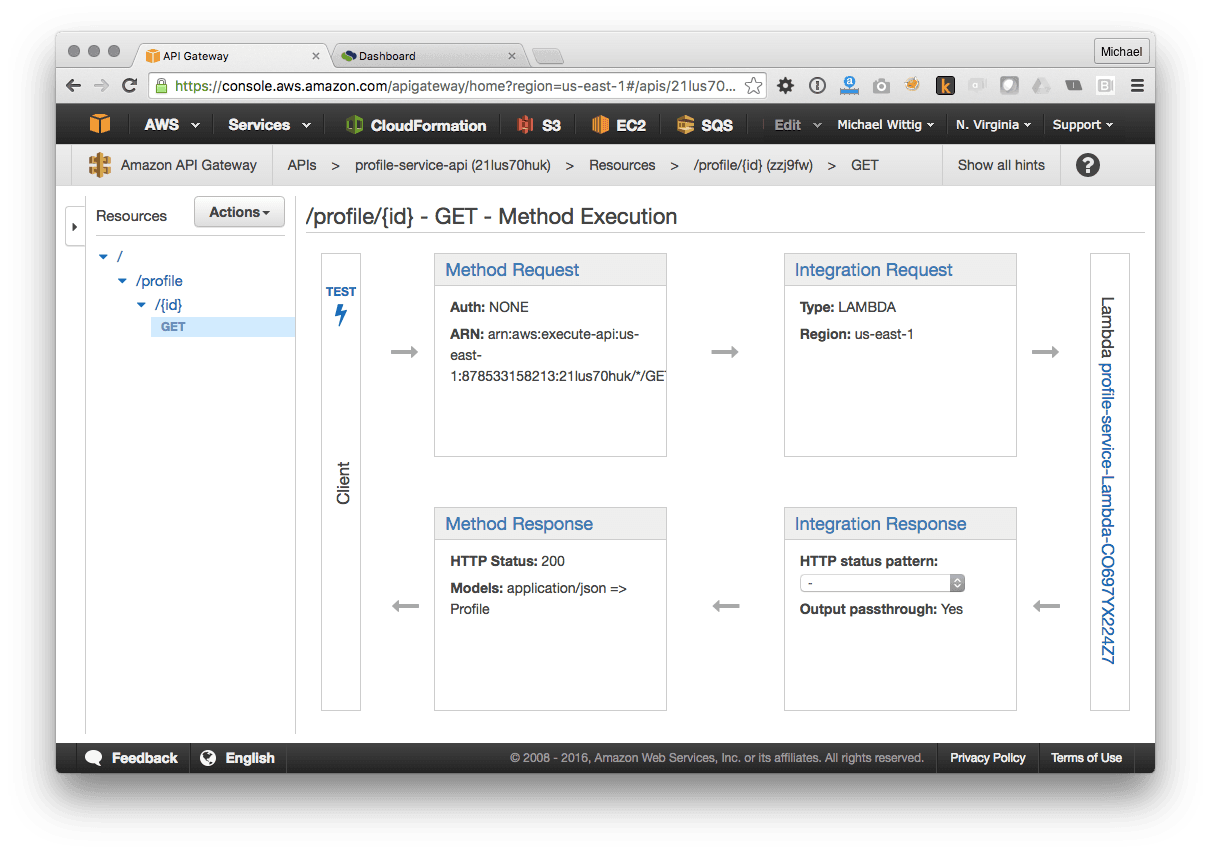

Amazon API Gateway

API Gateway is a service that offers a configurable REST API as a Service. You describe what should happen if a certain HTTP Method (GET, POST, PUT, DELETE, …) is called on a certain HTTP Resource (e.g. /user). In our case, we want to execute a Lambda function if an HTTP request comes in. API Gateway also takes care of mapping input and output data between formats. The following figure shows what this looks like in the AWS Management Console for the Profile Service.

API Gateway is a fully managed service. You only pay for requests, and there is no under/over provisioning, no boot time, no patching, no SSH, and no load balancing to worry about. AWS takes care of all those aspects.

Read more about API Gateway on our blog

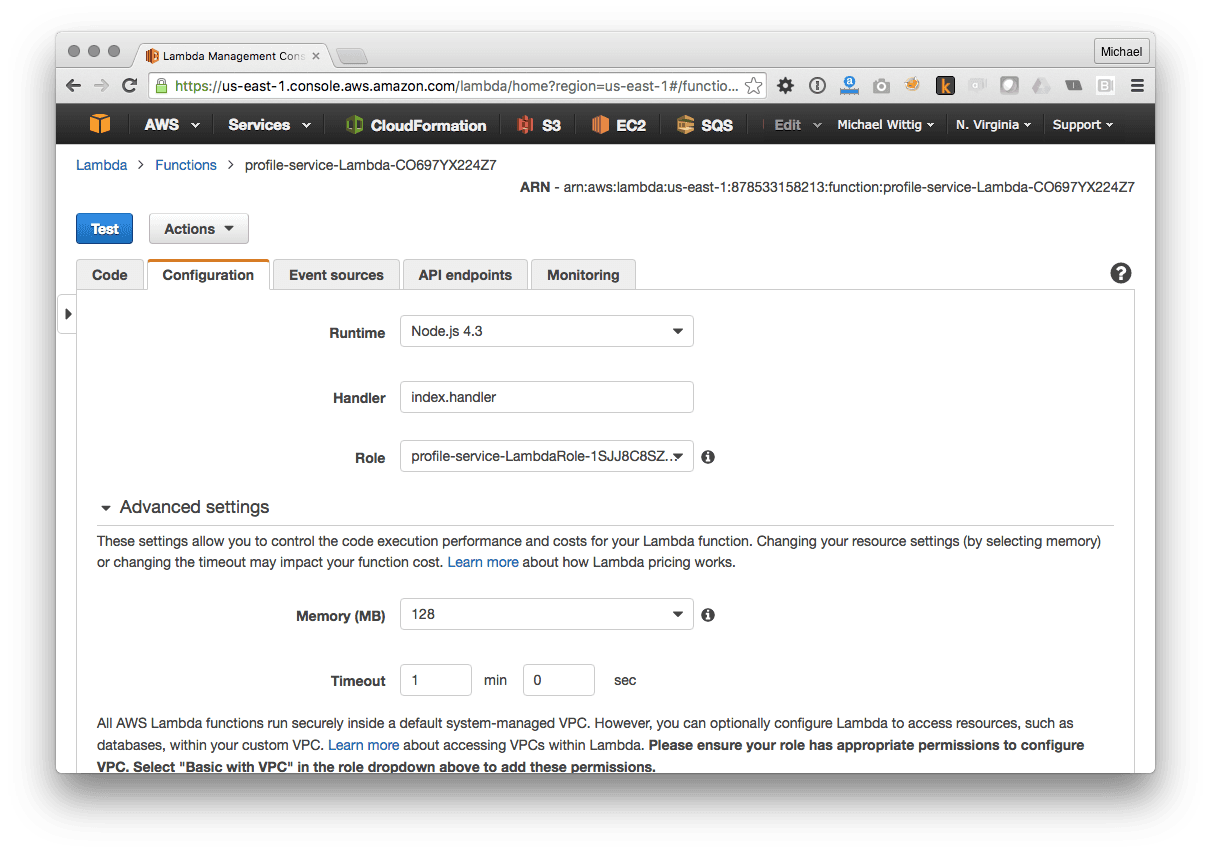

AWS Lambda

To run code in AWS Lambda you need to:

- use one of the supported runtimes (Node.js (JavaScript), Python, JVM (Java, Scala, …))

- implement a predefined interface

In abstract terms, the interface requires a function that takes an input parameter and either returns nothing, returns a value, or throws an error.

We will use the Node.js runtime where a function implementation looks like this:

exports.handler = function(event, context, cb) { /* ... */ };

In Node.js the function is not expected to return something. Instead, you need to call the callback function cb that is passed into the function as a parameter.

The following figure shows what this looks like in the AWS Management Console for the Profile Service.

AWS Lambda is a fully managed service. You only pay for function executions, and there is no under/over provisioning, no boot time, no patching, no SSH, and no load balancing to worry about. AWS takes care of all those aspects.

Read more about Lambda on our blog

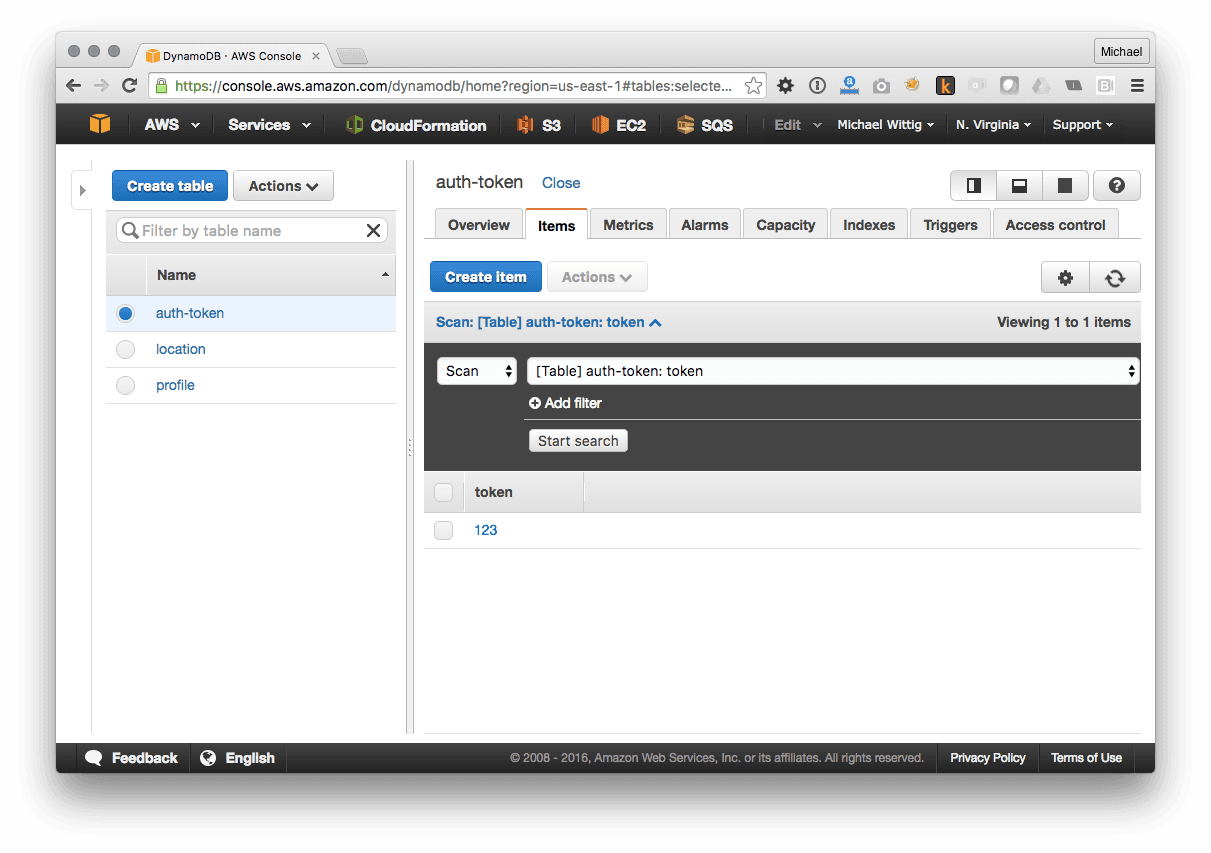

Amazon DynamoDB

DynamoDB is a Key-Value-Store or Document-Store. You can look up values by their key. DynamoDB replicates across multiple Availability Zones (data centers), and reads are eventually consistent by default.
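A key lookup from Node.js could be sketched as follows. The table name `location` and the key attribute `userId` are hypothetical. `docClient` is expected to behave like `AWS.DynamoDB.DocumentClient` from the aws-sdk package and is passed in so the sketch runs without AWS access. Setting `ConsistentRead` asks for a strongly consistent read where the default eventually consistent read is not good enough.

```javascript
// Look up a single item by its key.
// Table and attribute names are assumptions made for this sketch.
function getLocation(docClient, userId, cb) {
  var params = {
    TableName: 'location',
    Key: { userId: userId },
    ConsistentRead: true // opt in to a strongly consistent read
  };
  docClient.get(params, function(err, data) {
    if (err) { return cb(err); }
    // `data.Item` is undefined when no item has this key.
    cb(null, data.Item || null);
  });
}
```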

The following figure shows what this looks like in the AWS Management Console for the Authentication Service.

Amazon DynamoDB is a 99% managed service. The 1% that is up to you is provisioning read and write capacity. When your service makes more requests than provisioned, you will see errors. So it is your job to monitor the consumed capacity and increase the provisioned capacity before you run out.
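To judge how close you are to the limit, compare consumed with provisioned capacity. CloudWatch reports consumed capacity units as a sum over a period, while capacity is provisioned per second, so the sum has to be divided by the period length first. The following sketch shows the arithmetic. The function name and the 80% threshold are assumptions, not recommendations from AWS.

```javascript
// Returns the fraction of provisioned capacity that was used, given the
// Sum statistic of consumed capacity units over one CloudWatch period.
function capacityUtilization(consumedSum, periodSeconds, provisionedPerSecond) {
  var consumedPerSecond = consumedSum / periodSeconds;
  return consumedPerSecond / provisionedPerSecond;
}

// Example: 2400 units consumed in 5 minutes against 10 provisioned units.
var utilization = capacityUtilization(2400, 300, 10); // 8 per second -> 0.8
var shouldScaleUp = utilization >= 0.8; // 80% threshold is an assumption
```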

Read more about DynamoDB on our blog

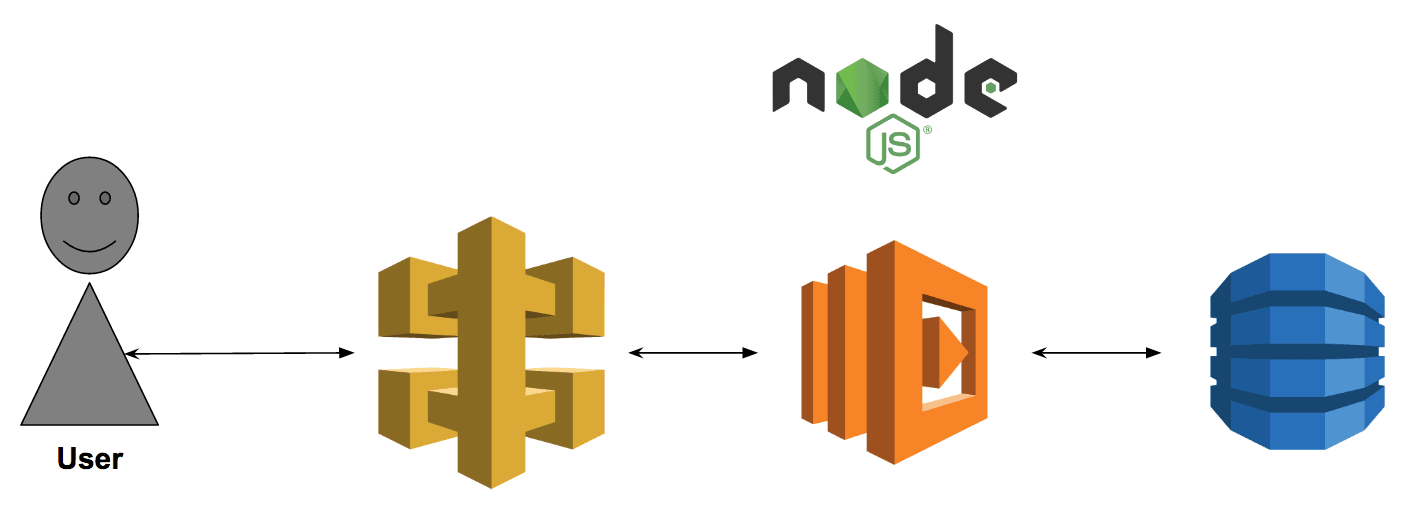

Request flow

The three services work together in the following way:

The user’s HTTP request hits API Gateway. API Gateway checks whether the request is valid and, if so, invokes the Lambda function. The function makes one or multiple requests to the database and executes some business logic. The result of the function is then transformed into an HTTP response by API Gateway.
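For local experiments, the same three steps can be mirrored by a small stand-in. This sketch does not reproduce API Gateway's real behavior or configuration. The `userId` field, the status codes, and the function name `simulateRequest` are assumptions chosen only to make the flow visible.

```javascript
// Local stand-in for the request flow: validate, invoke, map to a response.
// This is NOT how API Gateway is implemented or configured.
function simulateRequest(handler, request, cb) {
  // 1. Validation (here: one required field, chosen for illustration).
  if (!request.userId) {
    return cb({ statusCode: 400, body: JSON.stringify({ message: 'userId missing' }) });
  }
  // 2. Invoke the function with the mapped input.
  handler({ userId: request.userId }, {}, function(err, result) {
    // 3. Map the function outcome to an HTTP response.
    if (err) {
      return cb({ statusCode: 500, body: JSON.stringify({ message: err.message }) });
    }
    cb({ statusCode: 200, body: JSON.stringify(result) });
  });
}
```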

We now have an environment to run our Microservices.

Logs, Metrics & Alerting

A black box is very hard to operate. That’s why we need as much information from the inside of the system as possible. Amazon CloudWatch is the right place to store and analyze this kind of information:

- Metrics (numbers)

- Logs (text)

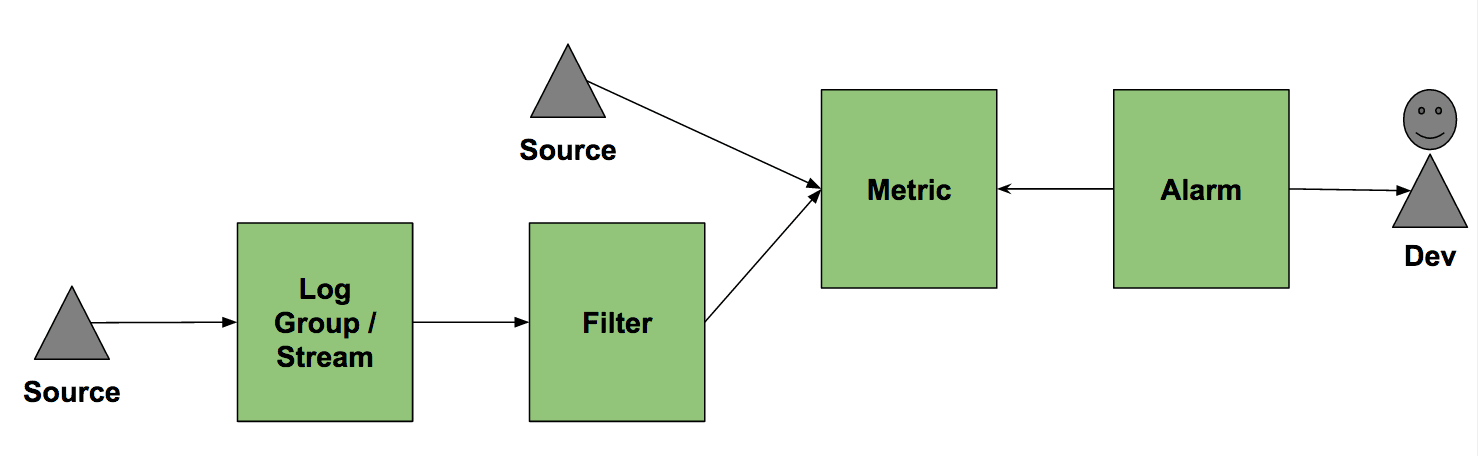

CloudWatch also lets you define alarms on metrics. The following figure demonstrates how the pieces work together.

Operational insights that you get out-of-the-box:

- Lambda writes STDOUT and STDERR to CloudWatch Logs.

- Lambda publishes metrics to CloudWatch about number of invocations, runtime duration, number of failures, …

- API Gateway publishes metrics about number of requests, 4XX and 5XX Response Codes, …

- DynamoDB publishes metrics about consumed capacity, number of requests, …
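Since everything written to STDOUT ends up in CloudWatch Logs, it can help to log one JSON object per line so that entries are easy to search later. The helper below is a sketch of that idea. The function name `logEvent` and the field names are conventions assumed for this example and are not part of Lambda.

```javascript
// Writes one JSON object per line to STDOUT, which Lambda forwards to
// CloudWatch Logs. Field names are a convention assumed for this sketch.
function logEvent(level, message, fields) {
  var entry = Object.assign({ level: level, message: message }, fields || {});
  var line = JSON.stringify(entry);
  console.log(line);
  return line;
}
```

Inside a handler, a call such as `logEvent('error', 'lookup failed', { userId: 'u1' })` produces a single searchable log line.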

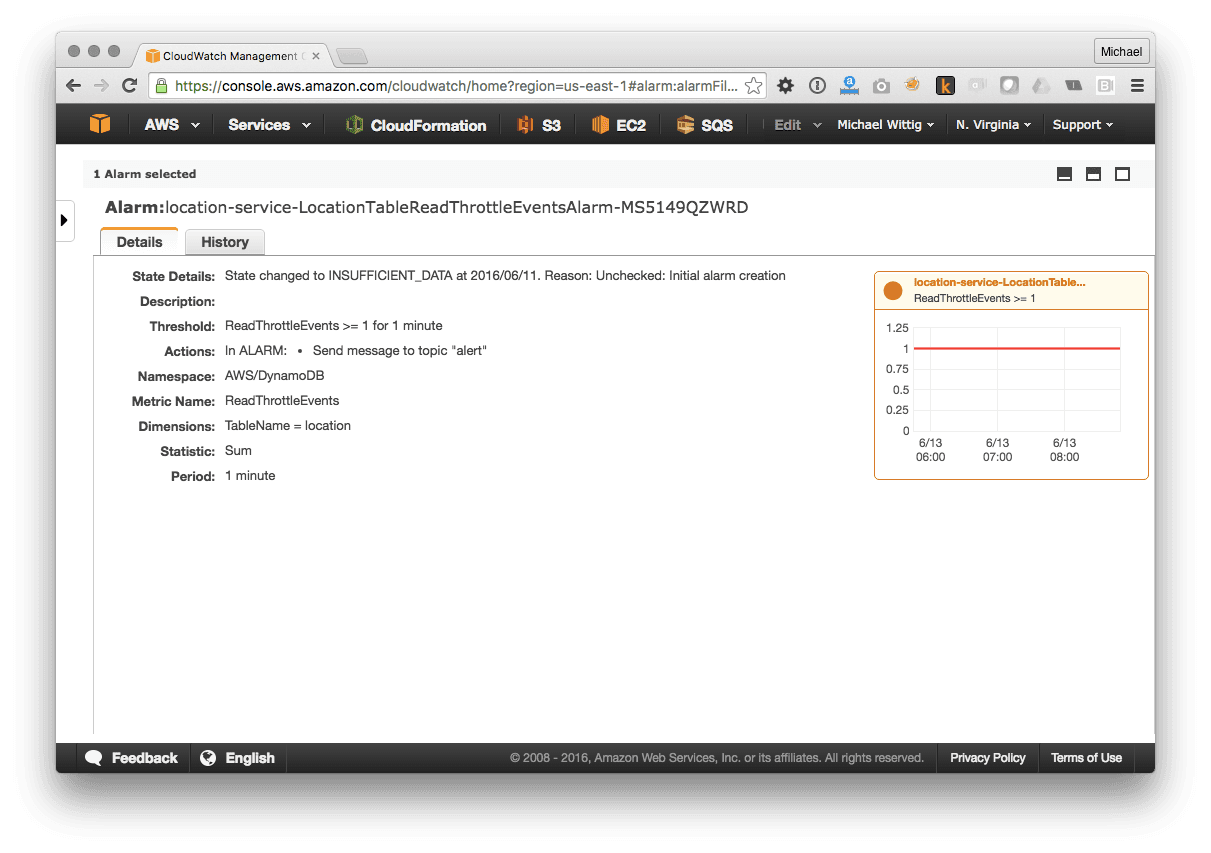

The following figure shows a CloudWatch alarm that is triggered if the number of throttled read requests of the Location Service DynamoDB table is greater than or equal to one. This situation indicates that the provisioned capacity is not sufficient to serve the traffic.
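Such an alarm can also be created through the API. The sketch below only builds the parameter object for CloudWatch's PutMetricAlarm call (`putMetricAlarm` on `AWS.CloudWatch` in the aws-sdk package). It assumes the alarm is based on the `ReadThrottleEvents` metric. The alarm name, the 60 second period, and the SNS topic that receives the notification are illustrative choices.

```javascript
// Builds the parameters for CloudWatch's PutMetricAlarm API.
// Alarm name, period, and SNS topic are assumptions for this sketch.
function throttledReadsAlarm(tableName, snsTopicArn) {
  return {
    AlarmName: tableName + '-read-throttle',
    Namespace: 'AWS/DynamoDB',
    MetricName: 'ReadThrottleEvents',
    Dimensions: [{ Name: 'TableName', Value: tableName }],
    Statistic: 'Sum',
    Period: 60, // seconds
    EvaluationPeriods: 1,
    Threshold: 1,
    ComparisonOperator: 'GreaterThanOrEqualToThreshold',
    AlarmActions: [snsTopicArn]
  };
}
```

The returned object would be passed to `putMetricAlarm`. Keeping it in a plain function makes the threshold easy to review and test.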

With all those metrics and alarms in place, we now can be confident that we receive an alert if our system is not working properly.

Summary

You can run a high-quality system on AWS by only using managed services. This approach frees you from many operational tasks that are not directly related to your service. Think of operating a monitoring system, a log index system, a database, virtual machines, … Instead, you can focus on operating and improving your service’s code.

The following figure shows the overall architecture of our system:

Serverless or FaaS does not force you to use a specific framework. As long as you are fine with the interface (a function with input and output) you can do whatever you want inside your function to produce an output with the given input.

Code & installation instructions

You can find installation instructions and global resources here.

The source code of the services can be found here:

Pitfalls

- CodeCommit is only available in us-east-1 at the moment

- CodePipeline is only available in us-east-1, us-west-2, and eu-west-1 at the moment

- Soft limits

  - Concurrent Lambda invocations (100)

  - API Gateway RPS (1000)

- The CloudFormation, CodePipeline, and Solano CI integration is not yet fully automatable

Further reading

- Article Send CloudWatch Alarms to Slack with AWS Lambda

- Article Integrate SQS and Lambda: serverless architecture for asynchronous workloads

- Article Create a serverless RESTful API with API Gateway, Swagger, Lambda, and DynamoDB

- Article 5 AWS mistakes you should avoid

- Article Event Driven Security Automation on AWS

- Article Introducing the Object Store: S3
