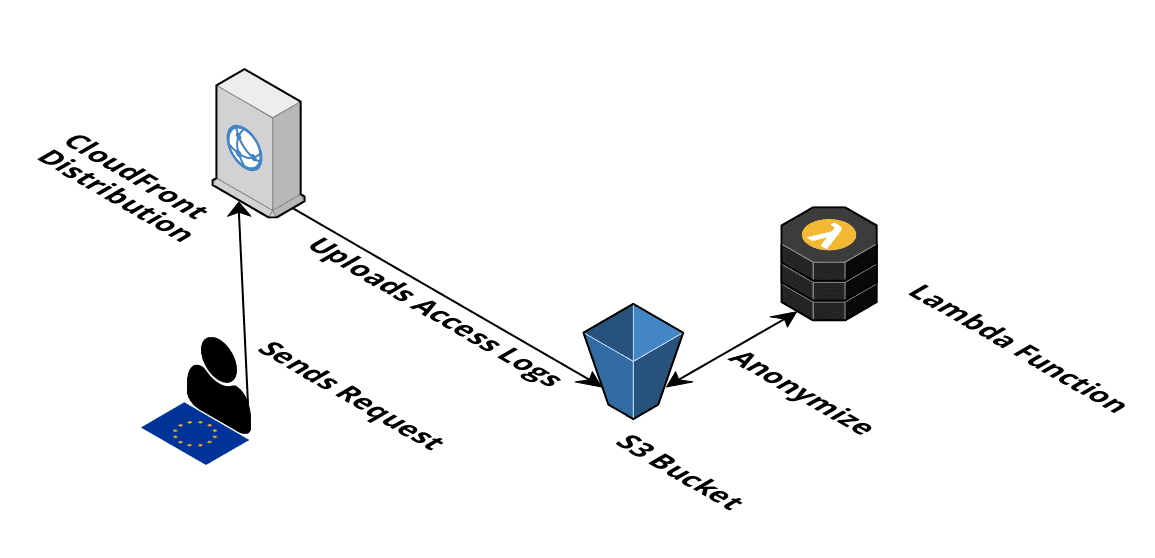

Anonymize CloudFront Access Logs

Amazon CloudFront can upload access log files to an S3 bucket. By default, CloudFront logs the IP address of the client. Optionally, cookies can be logged as well. If EU citizens access your CloudFront distribution, you have to process personally identifiable information (PII) in a way that complies with the General Data Protection Regulation (GDPR). IP addresses are considered PII, and cookie data could also contain PII. If you want to process and store PII, you need a reason in the spirit of the GDPR.

Disclaimer: I’m not a lawyer! This is not legal advice.

Operations teams need access logs to debug issues. For that purpose, it is okay to keep the access logs for seven days (see footnote 1). But you might need access logs for capacity planning as well. How can you keep the access logs for more than seven days without violating the GDPR?

The question is: do we really need the IP addresses in our access logs? The answer is likely no. Unfortunately, CloudFront does not allow us to disable IP address logging. We have to implement a workaround that anonymizes the access logs as soon as they are available on S3. The workaround works like this:

The diagram was created with Cloudcraft.

We can use a mechanism similar to the one implemented by Google Analytics. An IPv4 address like 91.45.135.67 is turned into 91.45.135.0 (the last 8 bits are set to zero, 24 bits are kept). IPv6 addresses need different logic: Google removes the last 80 bits. I will go one step further, remove the last 96 bits, and keep 32 bits (see footnote 2).

The following steps are needed to anonymize an access log file:

- Download the object from S3

- Decompress the gzip data

- Parse the data (tab-separated values, log file format)

- Replace the IP addresses with anonymized values

- Compress the data with gzip

- Upload the anonymized data to S3

- Remove the original data from S3

There is no documented maximum size of an access log file. We should prepare for files that are larger than the available memory. Luckily, Lambda functions support Node.js, which has superb support for streaming data. If we stream the data, we never load all of it into memory at once.

First, we load some core Node.js dependencies and the AWS SDK:

const fs = require('fs');

It’s time to implement the anonymization:

function anonymizeIPv4Address(str) {
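As a minimal sketch (not the original listing), the anonymization rules described earlier could look like this. Only the name `anonymizeIPv4Address` appears in the article; `anonymizeIPv6Address` and `anonymizeIpAddress` are hypothetical helpers, and the sketch assumes well-formed input addresses.

```javascript
// Keep the first three octets (24 bits) and set the last octet to 0.
function anonymizeIPv4Address(str) {
  const octets = str.split('.');
  octets[3] = '0';
  return octets.join('.');
}

// Hypothetical helper: keep the first two 16-bit groups (32 bits)
// and drop the remaining 96 bits.
function anonymizeIPv6Address(str) {
  // Expand a "::" shorthand so we always look at eight groups.
  const [head, tail] = str.split('::');
  const headGroups = head ? head.split(':') : [];
  const tailGroups = tail ? tail.split(':') : [];
  const missing = Math.max(8 - headGroups.length - tailGroups.length, 0);
  const groups = [...headGroups, ...Array(missing).fill('0'), ...tailGroups];
  return `${groups[0]}:${groups[1]}::`;
}

// Hypothetical dispatcher: pick the rule by address family and
// leave anything else (for example the "-" placeholder) untouched.
function anonymizeIpAddress(str) {
  if (str.includes(':')) {
    return anonymizeIPv6Address(str);
  }
  if (str.includes('.')) {
    return anonymizeIPv4Address(str);
  }
  return str;
}
```

With these definitions, `anonymizeIpAddress('91.45.135.67')` returns `91.45.135.0`, and `anonymizeIpAddress('2001:db8:85a3::8a2e:370:7334')` returns `2001:db8::`.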

We also have to deal with TSV (tab-separated values):

function transformLine(line) {
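A minimal sketch of the line transformation, under a few assumptions: the field positions (index 4 for `c-ip`, index 19 for `x-forwarded-for`) follow the CloudFront standard log format as I understand it, `x-forwarded-for` entries are assumed to be comma-separated, and the anonymization function is passed in as a second parameter (unlike the one-argument signature above) so that the sketch stays self-contained. Check the `#Fields:` header of your own log files before relying on these positions.

```javascript
// Assumed zero-based field positions in the CloudFront standard log format.
const C_IP = 4;
const X_FORWARDED_FOR = 19;

// `anonymize` is a function that maps one IP address string to its anonymized form.
function transformLine(line, anonymize) {
  // Header lines (#Version, #Fields) and empty lines are passed through unchanged.
  if (line.startsWith('#') || line.trim() === '') {
    return line;
  }
  const fields = line.split('\t');
  if (fields.length > C_IP) {
    fields[C_IP] = anonymize(fields[C_IP]);
  }
  // "-" marks an empty field; otherwise anonymize every listed address.
  if (fields.length > X_FORWARDED_FOR && fields[X_FORWARDED_FOR] !== '-') {
    fields[X_FORWARDED_FOR] = fields[X_FORWARDED_FOR]
      .split(',')
      .map((ip) => anonymize(ip.trim()))
      .join(',');
  }
  return fields.join('\t');
}
```

All other fields are joined back with tabs exactly as they came in, so the anonymized file keeps the original log format.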

So far, we process only small amounts of data: a single access log file line. It’s time to deal with the whole file.

Each chunk of data is represented as a buffer in Node.js. A buffer represents binary data in the form of a sequence of bytes. In the buffer, we search for the line-end \n byte. We slice all bytes from the beginning up to \n and convert them into a string to extract a line. We continue with this approach until the end of the file is reached. There is one edge case: a chunk of data can stop in the middle of a line. We have to prepend the remaining bytes of the old chunk to the new chunk.
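The chunk handling described above can be sketched as a Node.js `Transform` stream. The names `splitLines` and `createLineTransform` are hypothetical, and the sketch assumes UTF-8 encoded log data; it is one possible way to carry the incomplete line over to the next chunk, not necessarily the way the original code does it.

```javascript
const { Transform } = require('stream');

const NEWLINE = 0x0a; // the "\n" byte; it never occurs inside a multi-byte UTF-8 sequence

// Prepend the leftover bytes of the previous chunk, cut out all complete lines,
// and return whatever is left after the last "\n" as the new leftover.
function splitLines(rest, chunk) {
  let data = Buffer.concat([rest, chunk]);
  const lines = [];
  let end;
  while ((end = data.indexOf(NEWLINE)) !== -1) {
    lines.push(data.subarray(0, end).toString('utf8'));
    data = data.subarray(end + 1);
  }
  return { lines, rest: data };
}

// Wrap splitLines in a stream that applies `transformLine` to every line.
function createLineTransform(transformLine) {
  let rest = Buffer.alloc(0);
  return new Transform({
    transform(chunk, encoding, callback) {
      const result = splitLines(rest, chunk);
      rest = result.rest;
      for (const line of result.lines) {
        this.push(transformLine(line) + '\n');
      }
      callback();
    },
    // At end of file, emit a last line that has no trailing "\n".
    flush(callback) {
      if (rest.length > 0) {
        this.push(transformLine(rest.toString('utf8')));
      }
      callback();
    },
  });
}
```

Only the bytes after the last `\n` are kept between chunks, so memory use depends on the longest line rather than on the file size.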

async function process(record) {
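To illustrate how the steps could be chained without loading a whole file into memory, here is a minimal sketch. It is not the original `process` implementation. The function name `anonymizeObject` and its parameters are hypothetical, and `s3` is assumed to be an AWS SDK for JavaScript v2 style client that the caller passes in (the sketch uses `getObject(...).createReadStream()`, `upload(...).promise()`, and `deleteObject(...).promise()`). `createLineTransform` is assumed to return a fresh transform stream that anonymizes the decompressed data.

```javascript
const zlib = require('zlib');
const { PassThrough } = require('stream');
const { pipeline } = require('stream/promises');

// Download -> gunzip -> anonymize -> gzip -> upload, then delete the original.
async function anonymizeObject(s3, bucket, key, targetKey, createLineTransform) {
  // The upload reads from this stream while the pipeline writes into it.
  const body = new PassThrough();
  const upload = s3.upload({ Bucket: bucket, Key: targetKey, Body: body }).promise();
  await Promise.all([
    pipeline(
      s3.getObject({ Bucket: bucket, Key: key }).createReadStream(),
      zlib.createGunzip(),
      createLineTransform(),
      zlib.createGzip(),
      body
    ),
    upload,
  ]);
  // Remove the original only after the anonymized copy was stored successfully.
  await s3.deleteObject({ Bucket: bucket, Key: key }).promise();
}
```

Passing the client in as a parameter is a design choice of this sketch: it keeps the function free of SDK imports and makes it easy to exercise with a fake client.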

Finally, Lambda requires a thin interface that we have to implement. I also ensure that anonymized data is not processed again to avoid an expensive infinite loop.

exports.handler = async (event) => {
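A minimal sketch of such a handler, assuming the function is triggered by S3 event notifications and that anonymized files are marked with an `.anonymized.gz` key suffix. The suffix, `decodeKey`, `createHandler`, and the `processRecord` callback are assumptions made for illustration, not the original code.

```javascript
// Assumed marker for files that were already anonymized.
const ANONYMIZED_SUFFIX = '.anonymized.gz';

// Object keys in S3 event notifications are URL-encoded, with "+" for spaces.
function decodeKey(rawKey) {
  return decodeURIComponent(rawKey.replace(/\+/g, ' '));
}

// Build a Lambda handler around a `processRecord` function that does the actual work.
function createHandler(processRecord) {
  return async (event) => {
    for (const record of event.Records) {
      const key = decodeKey(record.s3.object.key);
      if (key.endsWith(ANONYMIZED_SUFFIX)) {
        continue; // our own output: skipping it prevents the infinite loop
      }
      if (!key.endsWith('.gz')) {
        continue; // not a compressed access log file
      }
      await processRecord({
        bucket: record.s3.bucket.name,
        key,
        targetKey: key.slice(0, -'.gz'.length) + ANONYMIZED_SUFFIX,
      });
    }
  };
}

// Usage in a Lambda module (processRecord is your own implementation):
// exports.handler = createHandler(processRecord);
```

Because the uploaded anonymized object triggers the function again, the suffix check is what stops the function from reprocessing its own output.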

I integrated the workaround into our collection of aws-cf-templates. Check out the documentation or the code on GitHub. A similar approach can be used to anonymize access logs from ELB load balancers (ALB, CLB, NLB).

PS: You should also enable S3 lifecycle rules to delete access logs after 38 months.

Thanks to Thorsten Höger for reviewing this article.

- 1. German source: https://www.bsi.bund.de/SharedDocs/Downloads/DE/BSI/Veranstaltungen/ITSiKongress/14ter/Vortraege-19-05-2015/Heidrich_Wegener.pdf?__blob=publicationFile

- 2. One official recommendation I found suggests dropping at least the last 88 bits of an IPv6 address (German source: https://www.datenschutz-bayern.de/dsbk-ent/DSK_84-IPv6.html).