Builder's Diary Vol. 2: Serverless ETL with Airflow and Athena

Get insights into the day-to-day challenges of builders. In this issue, Peter Reitz from our partner tecRacer talks about how to build Serverless ETL (extract, transform and load) pipelines with the help of Amazon Managed Workflows for Apache Airflow (MWAA) and Amazon Athena.

If you prefer a video or podcast instead of reading, here you go.

Do you prefer listening to a podcast episode over reading a blog post? Here you go!

What sparked your interest in cloud computing?

Computers have always held a great fascination for me. I taught myself how to program. That’s how I ended up working as a web developer during my economics studies. When I first stumbled upon Amazon Web Services, I was intrigued by the technology and wide variety of services.

How did you grow into the role of a cloud consultant?

After completing my economics degree, I was looking for a job. By chance, a job ad drew my attention to a vacancy for a cloud consultant at tecRacer. To be honest, my skills didn’t match the requirements very well. But because I found the topic exciting, I applied anyway. Right from the job interview, I felt right at home at tecRacer in Duisburg. Since I had no experience with AWS, there was a lot to learn within the first months. My first goal was to achieve the AWS Certified Solutions Architect - Associate certification. The entire team supported and motivated me during this intensive learning phase. After that, I joined a small team working on a project for one of our consulting clients. This allowed me to gain practical experience at a very early stage.

What does your day-to-day work as a cloud consultant at tecRacer look like?

As a cloud consultant, I work on projects for our clients. I specialize in machine learning and data analytics. Since tecRacer has a 100% focus on AWS, I invest in my knowledge of related AWS services like S3, Athena, EMR, SageMaker, and more. I work remotely or at our office in Hamburg and am at the customer’s site every now and then. For example, to analyze the requirements for a project in workshops.

What project are you currently working on?

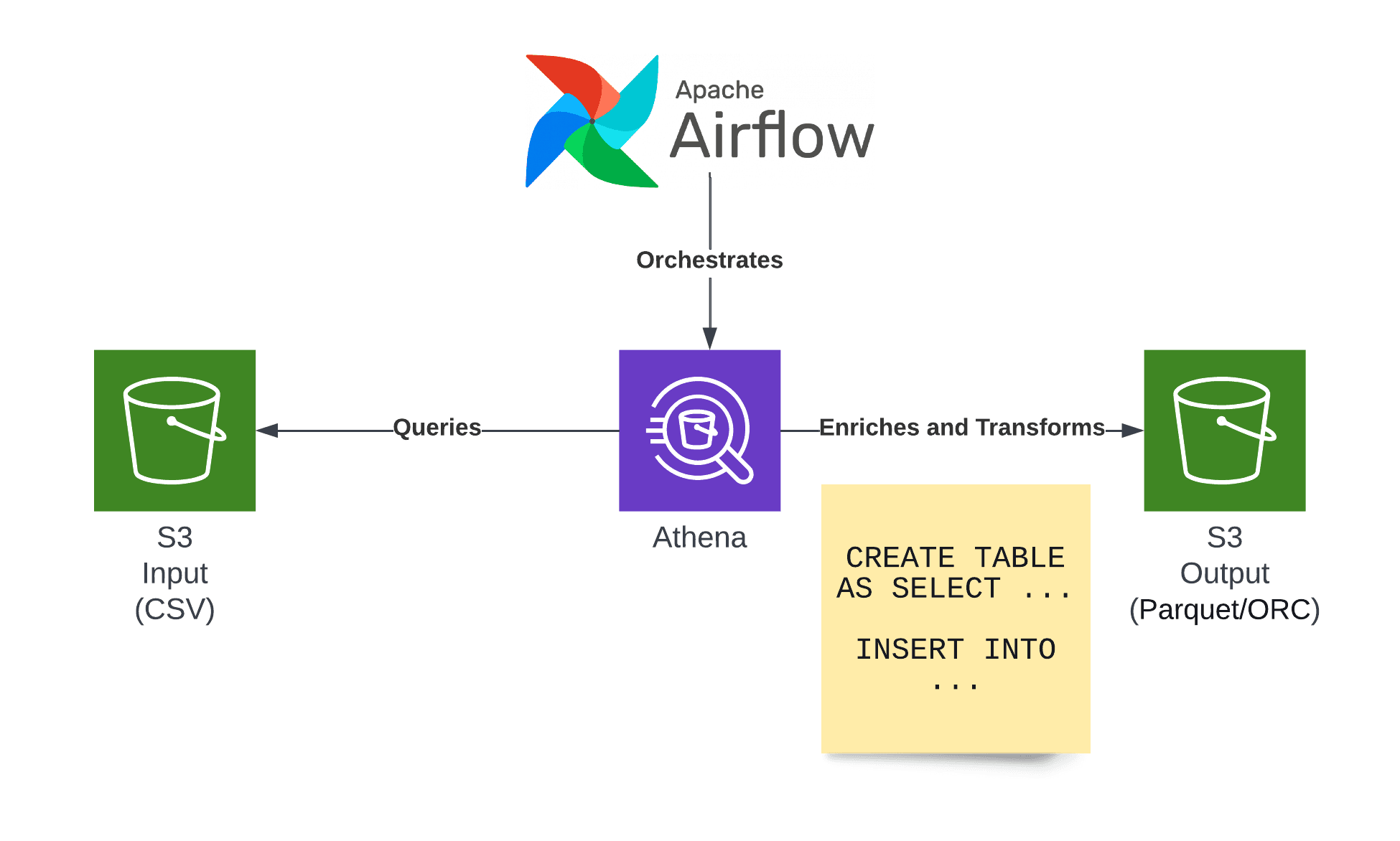

I’m currently working on building an ETL pipeline. Several data providers upload CSV files to an S3 bucket. My client’s challenge is to extract and transform 3 billion data points and store them in a way that allows efficient data analytics. This process can be roughly described as follows.

- Fetch CSV files from S3.

- Parse CSV files.

- Filter, transform, and enrich columns.

- Partition data to enable efficient queries in the future.

- Transform to a file format optimized for data analytics.

- Upload data to S3.

I’ve been implementing similar data pipelines in the past. My preferred solution consists of the following building blocks:

- S3 storing the input data.

- Apache Airflow to orchestrate the ETL pipeline.

- Athena to extract, transform, and load the data.

- S3 storing the output data.

How do you build an ETL pipeline based on Athena?

Amazon Athena enables me to query data stored on S3 on-demand using SQL. The remarkable thing about Athena is that the service is serverless, which means we only have to pay for the processed data when running a query. There are no idle costs except the S3 storage costs.

As mentioned before, in my current project, the challenge is to extract data from CSV files and store the data in a way that is optimized for data analytics. My approach is transforming the CSV files into more efficient formats such as Parquet. The Parquet file format is designed for efficient data analysis and organizes data in rows, not columns, as CSV does. Therefore, Athena skips fetching and processing all other columns when querying only a subset of the available columns. Also, Parquet compresses the data to minimize storage and network consumption.

I like using Athena for ETL jobs because of its simplicity and pay-per-use pricing mode. The CREATE TABLE AS SELECT (CTAS) statement implements ETL as described in the following:

- Extract: Load data from CSV files stored on S3 (

SELECT FROM "awsmp"."cas_daily_business_usage_by_instance_type") - Transform: Filter and enrich columns. (

SELECT product_code, SUM(estimated_revenue) AS revenue, concat(year, '-', month, '-01') as date) - Load: Store results in Parquet file format on S3 (

CREATE TABLE monthly_recurring_revenue).

CREATE TABLE monthly_recurring_revenue WITH ( |

Besides converting the data into the Parquet file format, the statement also partitions the data. This means the keys of the objects start with something like date=2022-08-01, which allows Athena to only fetch relevant files from S3 when querying by date.

Why did you choose Athena instead of Amazon EMR to build an ETL pipeline?

I’ve been using EMR for some projects in the past. But nowadays, I prefer adding Athena to the mix, wherever feasible. That’s because compared to EMR, Athena is a lightweight solution. Using Athena is less complex than running jobs on EMR. For example, I prefer using SQL to transform data instead of writing Python code. It takes me less time to build an ETL pipeline with Athena compared to EMR.

Also, accessing Athena is much more convenient as all functionality is available via the AWS Management Console and API. In contrast, it requires a VPN connection to interact efficiently with EMR when developing a pipeline.

I prefer a serverless solution due to its cost implications. With Athena, our customer only pays for the processed data. There are no idling costs. As an example, I migrated a workload from EMR to Athena, which reduced costs from $3,000 to $100.

What is Apache Airflow?

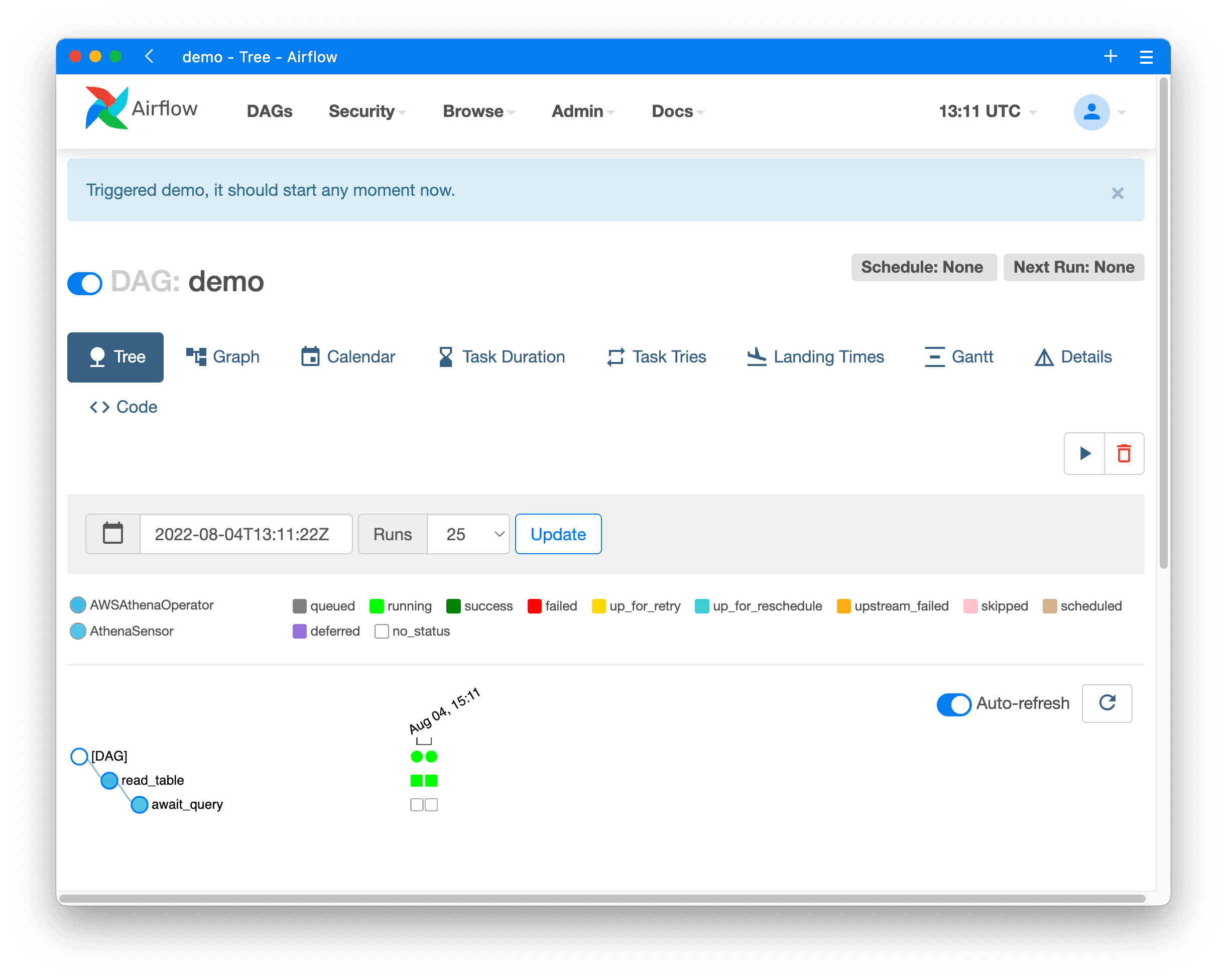

Apache Airflow is a popular open-source project providing a workflow management platform for data engineering pipelines. As a data engineer, I describe an ETL pipeline in Python as a directed acyclic graph (DAG).

Here is a straightforward directed acyclic graph (DAG). The workflow consists of two steps:

- Creating an Athena query.

- Awaiting results from the Athena query.

from airflow.models import DAG |

Airflow allows you to run a DAG manually, via an API, or based on a schedule.

Airflow consists of multiple components:

- Scheduler

- Worker

- Web Server

- PostgreSQL database

- Redis in-memory database

Operating such a distributed system is complex. Luckily, AWS provides a managed service called Amazon Managed Workflows for Apache Airflow (MWAA), which we use in my current project.

What does your development workflow for Airflow DAGs look like?

We built a deployment pipeline for the project I’m currently involved in. So you can think of developing the ETL pipeline like any other software delivery process.

- The engineer pushes changes of DAGs to a Git repository.

- The deployment pipeline validates the Python code.

- The deployment pipeline spins up a container based on aws-mwaa-local-runner and verifies whether all dependencies are working as expected.

- The deployment pipeline runs an integration test.

- The deployment pipeline uploads the DAGs to S3.

- Airflow refreshes the DAGs.

The deployment pipeline significantly speeds up the ETL pipeline’s development process, as many issues are spotted before deploying to AWS.

Why do you use Airflow instead of AWS Step Functions?

In general, Airflow is similar to AWS Step Functions. However, there are two crucial differences.

First, Airflow is a popular choice for building ETL pipelines. Therefore, many engineers in the field of data analytics have already gained experience with the tool. And besides, the open-source community creates many integrations that help build ETL pipelines.

Second, unlike Step Functions, Airflow is not only available on AWS. Being able to move ETL pipelines to another cloud vendor or on-premises is a plus.

Why do you specialize in machine learning and data analytics?

I enjoy working with data. Being able to answer questions by analyzing huge amounts of data and enabling better decisions backed by data motivates me. Also, I’m a huge fan of Athena. It’s one of the most powerful services offered by AWS. On top of that, machine learning, in general, and reinforced learning, in particular, fascinates me, as it allows us to recognize correlations that were not visible before.

Would you like to join Peter’s team to implement solutions with the help of machine learning and data analytics? tecRacer is hiring Cloud Consultants focusing on machine learning and data analytics. Apply now!

Further reading

- Article Builder's Diary Vol. 1: Successful Cloud Migrations

- Article Builder's Diary Vol. 3: Infrastructure Pipeline with GitLab and Terraform Cloud

- Article Builder's Diary Vol. 4: Serverless Software Engineering

- Article Builder's Diary Vol. 5: ECS Anywhere

- Article Builder's Diary Vol. 6: Serverless and DevOps - a match made in heaven

- Tag s3

- Tag athena