Fargate networking 101

Fargate runs Docker containers on AWS, and ECS orchestrates the containers that Fargate runs. If you are new to Fargate, I recommend reading ECS vs. Fargate: What’s the difference? first. ECS and Fargate integrate deeply with other parts of AWS. In this article, you will learn how networking works with Fargate.

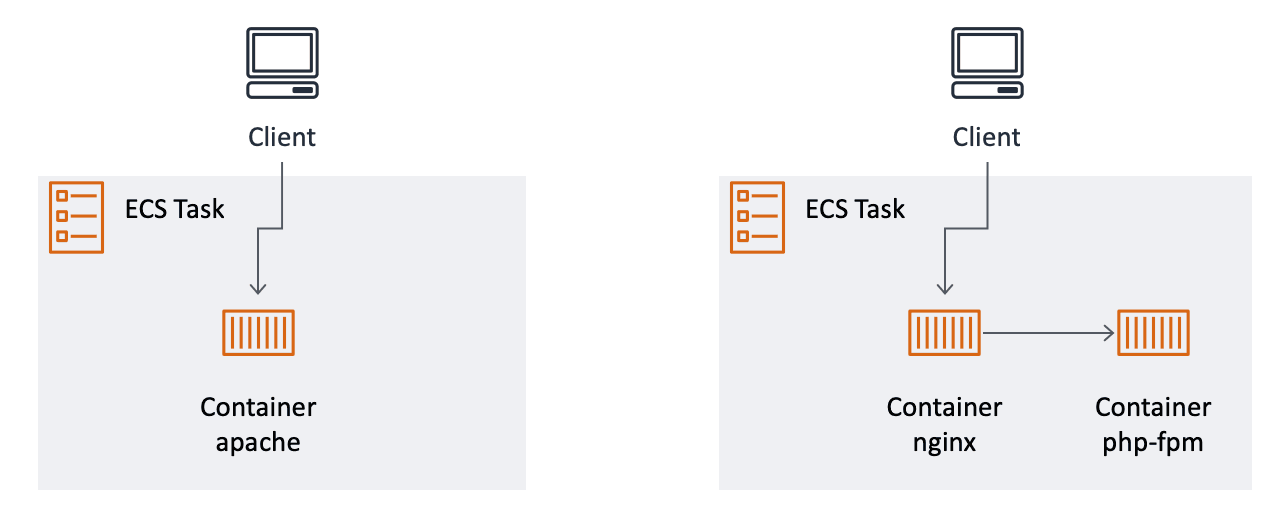

Before I dive into networking, I have to highlight an ECS-specific concept: the task. An ECS task is a group of containers that share a volume or communicate over a local link and are therefore placed onto the same machine. The following figure demonstrates two different tasks.

A simple task consists of a single container, for example an Apache HTTP Server container. But there are also tasks where two containers work together. Think about an nginx container that forwards requests to a PHP-FPM container. When you interact with ECS, you can only start tasks, not individual containers.

Enough introduction about tasks. Let’s dive into networking.

Task networking capabilities

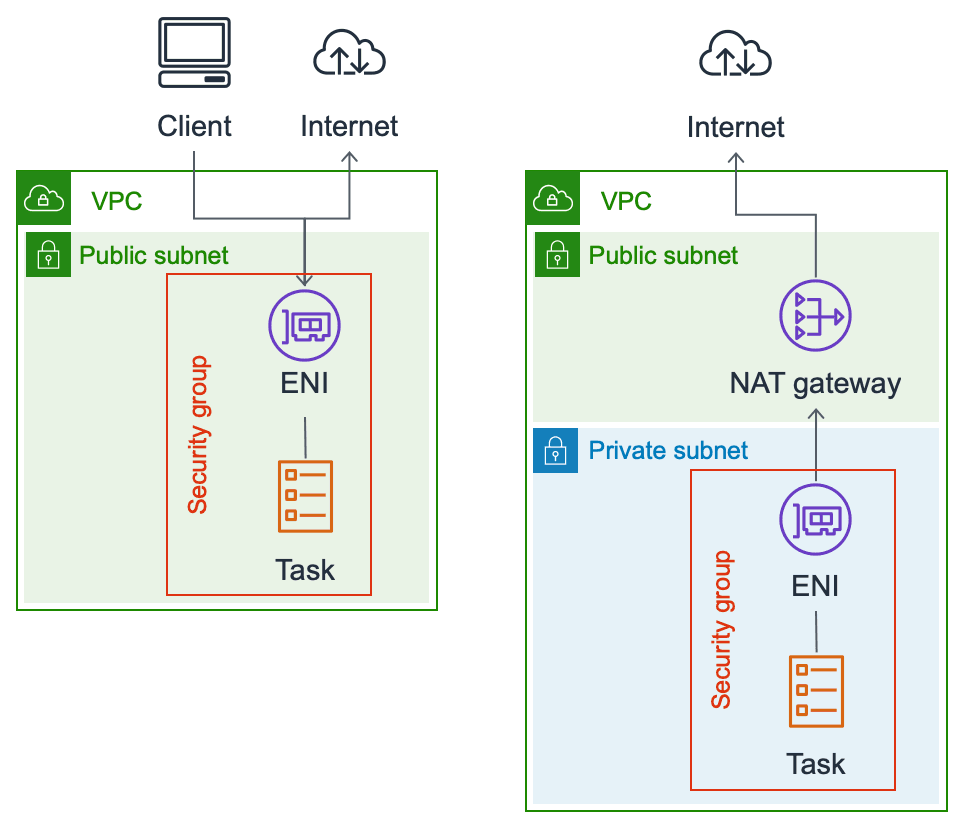

Each task that runs on Fargate is integrated into a VPC. More precisely, each task gets its own Elastic Network Interface (ENI). Therefore, a task behaves like an EC2 instance from a networking perspective. A task …

- runs in a single subnet.

- is protected by a security group.

- has a private IP address.

- has an optional public IP address.
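These four properties map onto the network configuration you pass when starting a task. The following is a minimal sketch, assuming you start tasks through the ECS API (for example with boto3). The helper name and the IDs in the usage note are hypothetical. The dictionary keys follow the `awsvpcConfiguration` structure of the ECS `RunTask` and `CreateService` calls.

```python
from typing import Any, Dict, List


def awsvpc_network_configuration(
    subnet_ids: List[str],
    security_group_ids: List[str],
    assign_public_ip: bool = False,
) -> Dict[str, Any]:
    """Build the networkConfiguration argument for a Fargate task.

    If several subnets are listed, ECS picks one of them for each task,
    so a single task still runs in exactly one subnet.
    """
    if not subnet_ids:
        raise ValueError("at least one subnet is required")
    return {
        "awsvpcConfiguration": {
            "subnets": list(subnet_ids),
            "securityGroups": list(security_group_ids),
            # The public IP address is optional; the private one always exists.
            "assignPublicIp": "ENABLED" if assign_public_ip else "DISABLED",
        }
    }
```

With boto3, the result would be passed as `networkConfiguration=...` to `run_task` together with `launchType="FARGATE"`, for example `awsvpc_network_configuration(["subnet-aaaa1111"], ["sg-bbbb2222"], assign_public_ip=True)` with placeholder IDs.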

The following figure shows the difference between a task running in a public and private subnet.

If a task runs in a private subnet (no route to an Internet Gateway), you cannot access the task from the Internet, and the task needs a NAT Gateway to access the Internet.

Keep in mind that a NAT Gateway can become a bottleneck (its bandwidth scales from 5 to 45 Gbps) and adds costs. To access the AWS API, you should use VPC endpoints instead.

So far, I haven’t talked about how the Docker world is integrated with the AWS world. Imagine a task with two containers:

- nginx: runs the nginx process and listens on port 80 (TCP)

- php-fpm: runs the PHP-FPM process and listens on port 9000 (TCP)

When you use Docker locally, you use a command like this to run an nginx container:

docker run --rm -p 8080:80 nginx

The networking magic is configured with the -p 8080:80 parameter. -p instructs Docker to make port 80 on the container available as port 8080 on the host (e.g., your local machine).

When using Fargate, you specify the ports that you want to make available in the portMappings section of your container definition. With Fargate, you set the containerPort to the port that the container listens on (e.g., 80 for nginx). Fargate then ensures that you can access the container on the same port on the ENI.

You cannot run two containers in a task that listen on the same port!
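To make the port rules concrete, here is a small sketch of the container definitions for the nginx and php-fpm example, plus a check for ports declared by more than one container. The image names and the helper function are illustrative assumptions. The check only looks at ports declared in portMappings, so it cannot detect a container that listens on an undeclared port.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple

# Container definitions for the example task (image names are assumptions).
CONTAINER_DEFINITIONS: List[Dict[str, Any]] = [
    {
        "name": "nginx",
        "image": "nginx:latest",
        "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
    },
    {
        "name": "php-fpm",
        "image": "php:fpm",
        "portMappings": [{"containerPort": 9000, "protocol": "tcp"}],
    },
]


def find_port_conflicts(
    container_definitions: List[Dict[str, Any]],
) -> Dict[Tuple[int, str], List[str]]:
    """Return every (port, protocol) pair declared by more than one container."""
    users: Dict[Tuple[int, str], List[str]] = defaultdict(list)
    for container in container_definitions:
        for mapping in container.get("portMappings", []):
            # ECS treats a missing protocol as TCP.
            key = (mapping["containerPort"], mapping.get("protocol", "tcp"))
            users[key].append(container["name"])
    return {key: names for key, names in users.items() if len(names) > 1}
```

For the two containers above, `find_port_conflicts(CONTAINER_DEFINITIONS)` returns an empty dictionary. Adding a third container that also declares port 80 would be reported as a conflict with nginx.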

What does this mean for our example?

- From the nginx container, you can access PHP-FPM on 127.0.0.1:9000

- From the php-fpm container, you can access nginx on 127.0.0.1:80

- If the security groups allow inbound access on port 80, you can access nginx by making a request to the private and optional public IP address of the ENI using port 80.

- If the security groups allow inbound access on port 9000, you can access PHP-FPM by making a request to the private and optional public IP address of the ENI using port 9000.
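If you want to verify these paths yourself, a plain TCP connection attempt is enough. The following sketch uses only the Python standard library. The function name is my own, and whether a Python interpreter is available inside your containers is an assumption.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection performs the TCP handshake; the connection is
        # closed again as soon as the with block ends.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Run inside the nginx container, `is_port_open("127.0.0.1", 9000)` would tell you whether PHP-FPM is reachable over the loopback interface. Run from another machine in the VPC against the private IP address of the ENI, the same call also exercises the security group rules.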

So far, you have learned how containers can talk to each other inside the same task over 127.0.0.1 and how you can reach a task using its public or private IP address. Most workloads run many tasks of the same kind using auto scaling. When a client requests your website, you want to forward the request to one of the running tasks.

Discovering tasks

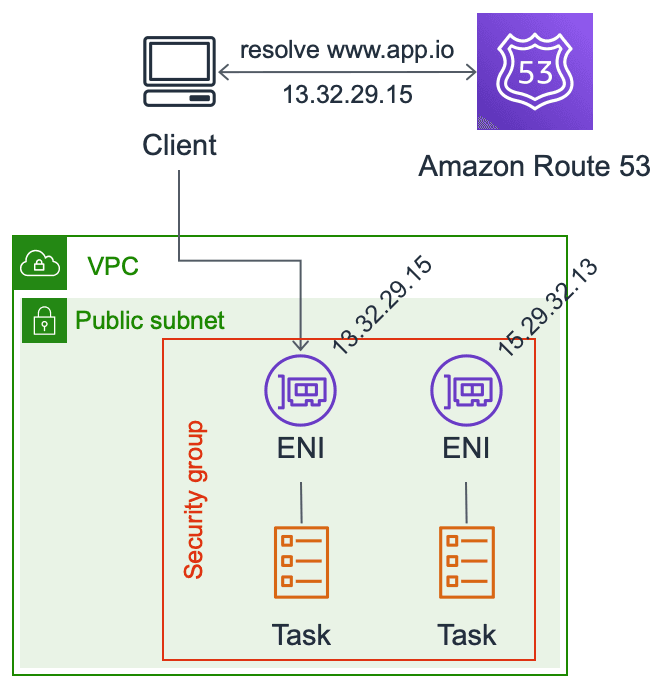

Back to our example, imagine you have two tasks running (nginx + php-fpm) to host your web application. You place the tasks into a public subnet and enable public IP addresses. To make those public IP addresses available to your clients, you can create a DNS A record and map www.app.io to the two public IP addresses of the task ENIs as demonstrated in the following figure.
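As a rough sketch of what this static setup looks like, the following helper builds the change batch for a single A record that lists both public IP addresses. The structure follows the Route 53 `ChangeResourceRecordSets` API. The function name, the TTL default, and the IP addresses in the usage note (taken from a documentation range) are assumptions.

```python
import ipaddress
from typing import Any, Dict, List


def a_record_change_batch(
    name: str, ip_addresses: List[str], ttl: int = 60
) -> Dict[str, Any]:
    """Build a Route 53 change batch with one A record listing all task IPs."""
    for ip in ip_addresses:
        # Raises ValueError if a value is not a valid IPv4 address.
        ipaddress.IPv4Address(ip)
    return {
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": name,
                    "Type": "A",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": ip} for ip in ip_addresses],
                },
            }
        ]
    }
```

With boto3, the result of `a_record_change_batch("www.app.io", ["203.0.113.10", "203.0.113.11"])` would be passed as `ChangeBatch` to `change_resource_record_sets`, together with the ID of your hosted zone. Every time a task is replaced and its public IP address changes, the record has to be updated again.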

That would work. The only problem is that this configuration is static and relies heavily on DNS.

Before I can dive into service discovery, I have to introduce another ECS-specific concept: the service. An ECS service is responsible for running a group of tasks of the same kind (using the same ECS task definition). Auto Scaling Groups are a similar concept when working with EC2. An ECS service regularly checks whether the desired number of tasks is running. If not, tasks are started or stopped accordingly. Besides that, the ECS service also integrates with other AWS services to enable service discovery.

Application and Network Load Balancer

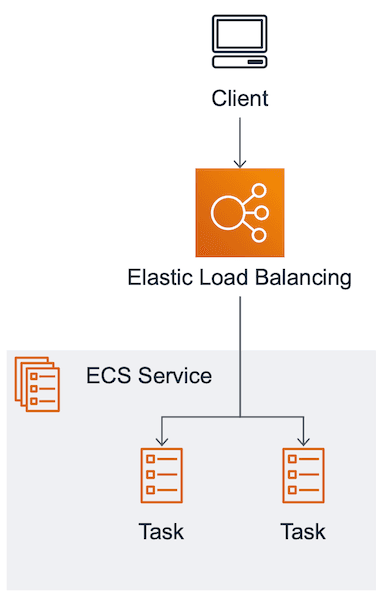

Both the Application Load Balancer (ALB) and the Network Load Balancer (NLB) give your clients a static endpoint for incoming requests. The load balancer distributes incoming requests to a dynamic number of tasks, as the following figure shows.

The ECS service registers and deregisters tasks at the load balancer. The client does not need to know about the IP addresses of the tasks. Instead, the client sends the request to the load balancer.
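The link between a service and a load balancer is part of the service definition. Here is a minimal sketch of the request for the ECS `CreateService` API, assuming the target group already exists and uses the target type `ip`, which tasks with their own ENI require. The function name, the defaults, and all names and ARNs you pass in are placeholders.

```python
from typing import Any, Dict, List


def fargate_service_request(
    cluster: str,
    service_name: str,
    task_definition: str,
    target_group_arn: str,
    subnet_ids: List[str],
    security_group_ids: List[str],
    container_name: str = "nginx",
    container_port: int = 80,
    desired_count: int = 2,
) -> Dict[str, Any]:
    """Build CreateService arguments for a Fargate service behind an ALB or NLB."""
    return {
        "cluster": cluster,
        "serviceName": service_name,
        "taskDefinition": task_definition,
        "desiredCount": desired_count,
        "launchType": "FARGATE",
        # ECS registers container_name:container_port of every task
        # with this target group and deregisters it when the task stops.
        "loadBalancers": [
            {
                "targetGroupArn": target_group_arn,
                "containerName": container_name,
                "containerPort": container_port,
            }
        ],
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": list(subnet_ids),
                "securityGroups": list(security_group_ids),
                # Clients talk to the load balancer, not to the tasks.
                "assignPublicIp": "DISABLED",
            }
        },
    }
```

With boto3, the dictionary can be passed as keyword arguments: `boto3.client("ecs").create_service(**request)`. The security group of the tasks has to allow inbound traffic from the load balancer on the container port.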

Some additional benefits are:

- Load balancers provide metrics (traffic, latency, HTTP errors) and logs.

- The load balancers can terminate HTTPS/TLS for you.

- The ALB can route requests based on hostname and path.

- The ALB ensures that only valid HTTP requests are forwarded to your tasks.

The downsides are:

- A load balancer adds costs.

- The ALB adds latency.

An ALB or NLB is the more straightforward option to manage. I recommend using a load balancer as the entry point into your system from the outside world (frontend load balancing). If your services communicate heavily with each other, the additional latency and costs might be a problem. Read on for a second solution.

If you are interested in an example, check out our CloudFormation template on GitHub: https://github.com/widdix/aws-cf-templates/blob/master/fargate/service-dedicated-alb.yaml

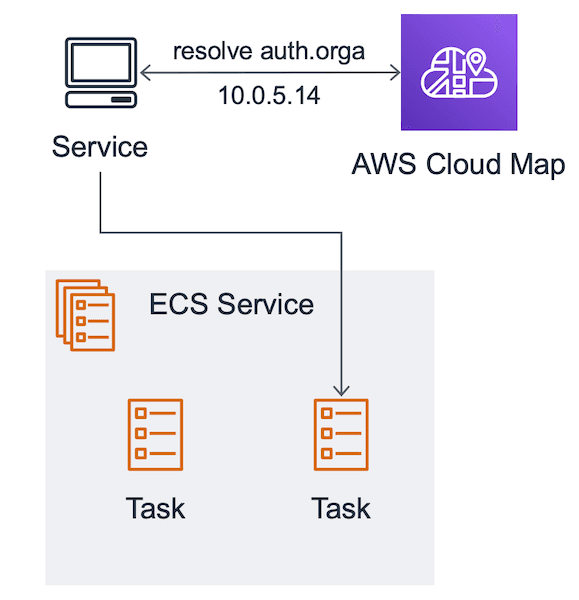

ECS service discovery

ECS service discovery is based on AWS Cloud Map and integrates with Route 53 (DNS). The ECS service registers and deregisters tasks to keep the DNS records up to date.

A client resolves the DNS name and gets back an IP address pointing to one of the service’s tasks. The client then connects to the task directly. I recommend this kind of service discovery only for inter-service communication where clients retry failed requests and DNS resolvers respect the TTL of the records.

If you are interested in an example, check out our CloudFormation template on GitHub: https://github.com/widdix/aws-cf-templates/blob/master/fargate/service-cloudmap.yaml
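What retries can look like on the client side is sketched below, using only the Python standard library. The client resolves the name again on every attempt, so records of stopped tasks drop out once the resolver honors the TTL. The function names and defaults are my own, and any service name you pass in is whatever you configured in your Cloud Map namespace.

```python
import random
import socket
import time
from typing import List, Optional, Tuple


def resolve_ipv4(hostname: str, port: int) -> List[Tuple[str, int]]:
    """Return all distinct IPv4 (address, port) pairs for hostname."""
    infos = socket.getaddrinfo(
        hostname, port, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # For AF_INET, the last element of each entry is an (ip, port) tuple.
    return sorted({info[4] for info in infos})


def connect_with_retries(
    hostname: str,
    port: int,
    attempts: int = 3,
    timeout: float = 2.0,
    backoff: float = 0.2,
) -> socket.socket:
    """Connect to any task behind hostname, re-resolving on every attempt."""
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            addresses = resolve_ipv4(hostname, port)
        except socket.gaierror as error:
            addresses, last_error = [], error
        random.shuffle(addresses)  # spread load across tasks
        for address in addresses:
            try:
                return socket.create_connection(address, timeout=timeout)
            except OSError as error:
                last_error = error  # task may be gone; try the next one
        if attempt < attempts - 1:
            time.sleep(backoff * (attempt + 1))
    raise ConnectionError(f"could not reach {hostname}:{port}") from last_error
```

The caller is responsible for closing the returned socket. In a real client, you would apply the same pattern at the HTTP level, because a task can also disappear after the connection has been established.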

Summary

Fargate networking is very close to what you know from EC2. You can think of a task as an EC2 instance and a container as a process. If you want to distribute end-user requests to multiple tasks, you can use an Application or Network Load Balancer. For your inter-service communication, you might want to check out ECS service discovery, which is based on Cloud Map.

Further reading

- Article Fargate is ready for prime time, and we share our CloudFormation templates

- Article ECS vs. Fargate: What's the difference?

- Article A brief history of AWS architectures
