Migrating CodePipeline to GitHub Actions to improve performance

Recently, we have become increasingly dissatisfied with the time our AWS CodePipeline pipeline takes to deploy a change to production. The pipeline builds the code, runs unit tests, deploys to a test environment, runs acceptance tests in the test environment, and deploys to production. It takes 27 minutes for a full run of our pipeline—too long for impatient developers like me. We analyzed the performance in detail and decided to migrate to GitHub Actions.

Read on to learn about the pitfalls and reasons for the migration.

Pipeline

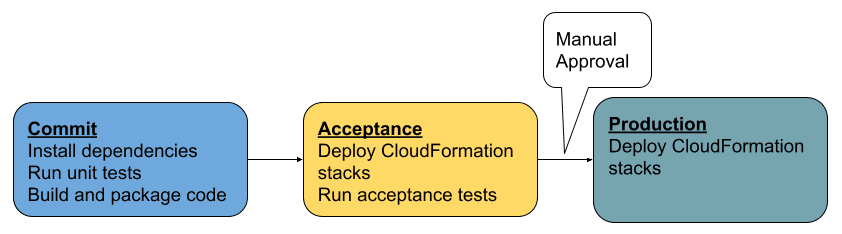

Before we start, here is an overview of the pipeline (click on the image for a huge CodePipeline screenshot). The pipeline deploys our serverless application: ChatOps for AWS - marbot.

Why CodePipeline and CodeBuild are slow

The following table lists the runtime of each stage of our CodePipeline pipeline.

| Stage | Runtime (mm:ss) |
|---|---|
| Commit | 07:01 |
| Acceptance | 09:35 |
| Production | 09:56 |
| Total | 26:32 |

We identified the following performance issues:

- CodePipeline adds a tiny but noticeable overhead with each action. E.g., deploying a CloudFormation stack is usually a second slower than calling the CloudFormation API directly. This adds up if you run actions in sequence because of dependencies (e.g., database deployment must happen before app deployment).

- CodePipeline only orchestrates the pipeline. To run a script, you need to integrate CodePipeline with CodeBuild. CodeBuild adds significant overhead. Queuing times of 60 seconds and provisioning times of 10 seconds are not unusual (us-east-1). We use seven CodeBuild actions and see more than 7 minutes of overhead because of that.

- We use `aws cloudformation package` and the CloudFormation transform `AWS::Serverless-2016-10-31`, aka SAM, to deploy our application. `aws cloudformation package` uses a simple implementation to detect code changes that trigger a deployment of Lambda functions. We use `esbuild` to build our source code. Even if the content of the output files stays the same, the changed file modification time triggers a deployment.

Issues 1 and 2 are easier to fix by migrating to a different technology. Issue 2 could be partly addressed by combining multiple CodeBuild projects into one, but that makes it harder to quickly identify what went wrong (downloading dependencies failed, tests failed, packaging failed, …) without checking the logs. Issue 3 has nothing to do with CodePipeline. To address issues 1 and 2, we decided to migrate to GitHub Actions. I also found a workaround for issue 3.

After we decided to deploy our AWS infrastructure and serverless app with GitHub Actions instead of CodePipeline, we learned a few things we want to share with you.

Artifacts

In CodePipeline, each action takes input artifacts (exceptions are the source actions at the very beginning of the pipeline) and optionally produces output artifacts. You must ensure (via runOrder) that action output artifacts are created before they are used as input artifacts in other actions.
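To illustrate, a stage from the `Stages` property of an `AWS::CodePipeline::Pipeline` resource might look roughly like the fragment below. This is a sketch, not our actual pipeline: the action names, the artifact names `Source` and `Build`, the CodeBuild project `demo-build`, the stack name, and the role ARN are all hypothetical.

```yaml
# Fragment of the Stages property of an AWS::CodePipeline::Pipeline resource.
# All names below are hypothetical placeholders.
- Name: Commit
  Actions:
  - Name: Build
    RunOrder: 1 # runs first and produces the artifact "Build"
    ActionTypeId:
      Category: Build
      Owner: AWS
      Provider: CodeBuild
      Version: '1'
    Configuration:
      ProjectName: demo-build
    InputArtifacts:
    - Name: Source # produced by the source action in an earlier stage
    OutputArtifacts:
    - Name: Build
  - Name: Deploy
    RunOrder: 2 # runs after Build, so the artifact "Build" already exists
    ActionTypeId:
      Category: Deploy
      Owner: AWS
      Provider: CloudFormation
      Version: '1'
    Configuration:
      ActionMode: CREATE_UPDATE
      StackName: demo
      TemplatePath: 'Build::packaged.yml' # <artifact name>::<file inside the artifact>
      Capabilities: CAPABILITY_IAM
      RoleArn: arn:aws:iam::123456789012:role/demo-cloudformation
    InputArtifacts:
    - Name: Build
```

Actions with the same `RunOrder` inside a stage run in parallel, so the consumer of an artifact needs a higher `RunOrder` than its producer.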

GitHub Actions works differently. First, there is a difference between a job and a step. A job typically consists of multiple steps. All steps of a job share the same runner environment and can access files created by previous steps (like in a CodeBuild build). If you want to pass artifacts from one job to another, you must use the GitHub Actions upload-artifact and download-artifact.
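A minimal sketch of both cases, assuming a Node.js project whose build output lands in a `build` folder. The workflow name, the job names, and the artifact name `build-output` are made up for illustration.

```yaml
name: artifact-demo # hypothetical workflow name
on: push
jobs:
  build:
    runs-on: ubuntu-22.04
    steps:
    - uses: actions/checkout@v4
    - run: npm ci && npm run build
    # Later steps of the same job can read build/ without any extra work.
    - run: ls build/
    # Other jobs start on a fresh runner, so the folder is uploaded explicitly.
    - uses: actions/upload-artifact@v4
      with:
        name: build-output
        path: build/
  deploy:
    needs: build # wait for the job that produces the artifact
    runs-on: ubuntu-22.04
    steps:
    - uses: actions/download-artifact@v4
      with:
        name: build-output
        path: build/
    - run: ls build/
```

The `needs` key plays a role similar to `runOrder` in CodePipeline: it ensures the artifact exists before the consuming job starts.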

AWS Authentication

Our pipeline deploys both: our serverless application and the required AWS infrastructure. Therefore, the pipeline requires access to the AWS APIs to configure the API Gateway or Lambda. Consequently, we are using AWS IAM to configure access permissions via policies.

In CodePipeline, you define the IAM role (via roleArn) that CodePipeline assumes to run your pipeline. Keep in mind that each CodeBuild project requires its own IAM role.

In GitHub Actions, you also define the IAM role, but to make it work you need a small piece of AWS infrastructure: an OpenID Connect (OIDC) identity provider, which is part of the IAM service.

Check out our CloudFormation template to set everything up in a minute.
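For orientation, the two resources involved can be sketched as follows. This is not the linked template, only a minimal illustration under stated assumptions: the repository `example-org/example-repo` is a placeholder, the thumbprint is the value commonly published for GitHub's OIDC endpoint and should be verified, and the role carries no permission policies yet.

```yaml
# Hypothetical CloudFormation sketch, not the template mentioned above.
Resources:
  GitHubOidcProvider:
    Type: 'AWS::IAM::OIDCProvider'
    Properties:
      Url: 'https://token.actions.githubusercontent.com'
      ClientIdList:
      - 'sts.amazonaws.com'
      ThumbprintList:
      - '6938fd4d98bab03faadb97b34396831e3780aea1' # assumption: verify before use
  GitHubRole:
    Type: 'AWS::IAM::Role'
    Properties:
      RoleName: github-openid-connect
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
        - Effect: Allow
          Principal:
            Federated: !Ref GitHubOidcProvider # Ref returns the provider ARN
          Action: 'sts:AssumeRoleWithWebIdentity'
          Condition:
            StringEquals:
              'token.actions.githubusercontent.com:aud': 'sts.amazonaws.com'
            StringLike:
              # Placeholder: restrict the role to workflows of one repository.
              'token.actions.githubusercontent.com:sub': 'repo:example-org/example-repo:*'
      # Attach the policies your deployment needs via Policies or ManagedPolicyArns.
```

Restricting the `sub` claim matters: without it, any GitHub repository could assume the role.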

Add the following permissions to your workflows at the top level:

    permissions:
      id-token: write
      contents: read

Use the GitHub Action aws-actions/configure-aws-credentials to assume the role in your workflow like this:

    permissions: {} # shortened
    jobs:
      demo:
        runs-on: ubuntu-22.04
        steps:
        - uses: actions/checkout@v4
        - uses: aws-actions/configure-aws-credentials@v4
          with:
            role-to-assume: arn:aws:iam::123456789012:role/github-openid-connect
            role-session-name: github-actions
            aws-region: us-east-1

Running a script

I already mentioned that CodePipeline only orchestrates the pipeline. To run a script, you need to integrate CodePipeline with CodeBuild. The following CloudFormation snippet shows the required AWS resources and the mind-blowing complexity:

Resources: |

GitHub Actions has native support for running scripts. The following workflow installs Node.js dependencies via npm and runs unit tests on each push to master. The test reports are archived as an artifact and can be inspected manually:

name: unit-test |
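A minimal sketch of a workflow matching that description could look like this. The Node.js version, the `npm test` script, the `reports/` folder, and the artifact name are assumptions for illustration, not details of our setup.

```yaml
name: unit-test
on:
  push:
    branches:
    - master
jobs:
  unit-test:
    runs-on: ubuntu-22.04
    steps:
    - uses: actions/checkout@v4
    - uses: actions/setup-node@v4
      with:
        node-version: 20 # assumption: use the version your project targets
    - run: npm ci
    - run: npm test # assumption: the test script writes its reports to reports/
    - uses: actions/upload-artifact@v4
      if: always() # archive the reports even if the tests failed
      with:
        name: test-reports
        path: reports/
```

Compared to CodeBuild, no extra project, role, or log group is needed to run the two `npm` commands.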

The default shell for CodeBuild and GitHub Actions

CodeBuild defaults to sh and stops when a “command” from the commands list fails.

GitHub Actions defaults to bash with the following settings:

- `-e`: Exit immediately if a command exits with a non-zero status.

I usually set the default shell to bash in my workflows at the top level:

defaults: |

GitHub Actions now runs bash with the following settings:

- `--noprofile`: Do not read either the system-wide startup file `/etc/profile` or any of the personal initialization files `~/.bash_profile`, `~/.bash_login`, or `~/.profile`.

- `--norc`: Do not read and execute the personal initialization file `~/.bashrc` if the shell is interactive.

- `-e`: Exit immediately if a command exits with a non-zero status.

- `-o pipefail`: The return value of a pipeline is the status of the last command to exit with a non-zero status, or zero if no command exited with a non-zero status.
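Setting the default shell at the top level and the practical effect of `-o pipefail` can be sketched like this. The workflow and job names are hypothetical, and the last step fails on purpose to show the behavior.

```yaml
name: shell-demo # hypothetical workflow name
on: push
defaults:
  run:
    shell: bash # applies to every run step in every job of this workflow
jobs:
  demo:
    runs-on: ubuntu-22.04
    steps:
    # With -o pipefail, this step fails because `false` fails,
    # even though `cat` (the last command of the pipeline) succeeds.
    - run: |
        false | cat
```

Without the `defaults` block, the same step would pass, because only the exit status of `cat` would count.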

AWS Integrations

CodePipeline integrates with a bunch of AWS services natively:

- Amazon ECR

- Amazon ECS

- Amazon S3

- AWS CloudFormation

- AWS CodeBuild

- AWS CodeCommit

- AWS CodeDeploy

- AWS Device Farm

- AWS Elastic Beanstalk

- AWS Lambda

- AWS OpsWorks Stacks

- AWS Service

AWS offers the following actions for GitHub Actions (some of them are not maintained):

- AWS IAM

- AWS CloudFormation (not maintained anymore)

- Amazon EKS

- Amazon CloudWatch (not maintained anymore)

- Amazon CodeGuru

- AWS CodeBuild

- AWS Secrets Manager

- Amazon ECS

- Amazon ECR

On top of that, you can find many 3rd party actions in the GitHub Marketplace.

For example, we forked the official AWS CloudFormation action and added parallel stack deployments.

Parallelization

We learned that executing steps in parallel is not so easy in GitHub Actions. CodePipeline provides much better support for that! That’s why we added parallel stack deployments to our fork of the official AWS CloudFormation action.
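For context: steps inside a job always run one after another, and only jobs run in parallel. A sketch of job-level parallelism, with hypothetical job names and `echo` placeholders instead of real deployments:

```yaml
name: parallel-demo # hypothetical workflow name
on: push
jobs:
  # Jobs without a needs key start in parallel, each on its own fresh runner.
  deploy-database:
    runs-on: ubuntu-22.04
    steps:
    - run: echo "deploy database stack"
  deploy-queue:
    runs-on: ubuntu-22.04
    steps:
    - run: echo "deploy queue stack"
  # This job waits until both jobs above have succeeded.
  deploy-app:
    needs: [deploy-database, deploy-queue]
    runs-on: ubuntu-22.04
    steps:
    - run: echo "deploy app stack"
```

The price is that every job starts on a fresh runner, so checkout, credentials, and artifacts have to be set up again per job. That is why parallelism inside a single step, as in our fork, can be the faster option.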

Reusability

In CodePipeline, there is no way to reuse a stage or a pipeline. My Acceptance and Production stages are very similar. If I make a change to one of them, I have to remember to make the same change to the other.

GitHub Actions provides a way to reuse workflows.

I have created a deploy workflow like this with an input to indicate if dev or prod should be deployed:

|
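A minimal sketch of such a reusable workflow and one caller, shown as two files in one listing. The file name `deploy.yml`, the input name `environment`, and the script `deploy.sh` are hypothetical and not taken from our repository.

```yaml
# File 1: .github/workflows/deploy.yml (hypothetical name), the reusable workflow
name: deploy
on:
  workflow_call:
    inputs:
      environment:
        description: 'Target environment: dev or prod'
        required: true
        type: string
jobs:
  deploy:
    runs-on: ubuntu-22.04
    steps:
    - uses: actions/checkout@v4
    - run: npm ci && npm run build
    - run: ./deploy.sh "$ENVIRONMENT" # hypothetical deployment script
      env:
        ENVIRONMENT: ${{ inputs.environment }}
---
# File 2: .github/workflows/dev.yml (hypothetical name), a caller
name: dev
on:
  push:
    branches:
    - master
permissions: # the caller grants the permissions the called workflow may use
  id-token: write
  contents: read
jobs:
  deploy:
    uses: ./.github/workflows/deploy.yml
    with:
      environment: dev
```

A second caller that passes `environment: prod` reuses the same steps, so a change to the deployment only has to be made once.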

Manual approval

CodePipeline supports manual approvals.

Unfortunately, GitHub Actions supports manual approvals only for organizations subscribed to the Enterprise plan.

Our workaround for marbot is this:

- We deploy to dev when the master branch changes.

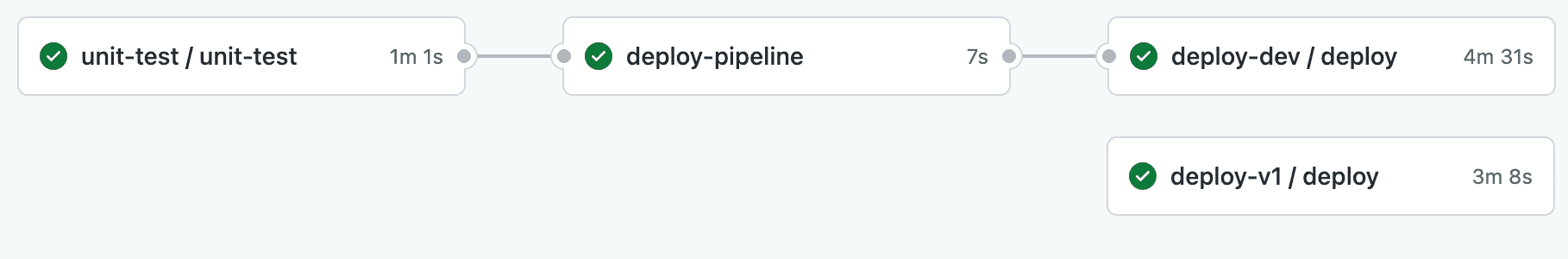

- We create a tag v1.y.z to deploy to prod.

The main limitation of this workaround is that we have to use two workflows. There is no easy way to share artifacts between workflows (workarounds exist). For now, we run npm ci && npm run build twice and hope that the outcome is the same.
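The two triggers can be sketched as follows, again as two files in one listing. The file names and the script `deploy.sh` are hypothetical. The tag filter `v1.*` matches tags such as `v1.2.3`.

```yaml
# File 1: .github/workflows/dev.yml (hypothetical name)
name: dev
on:
  push:
    branches:
    - master
jobs:
  deploy:
    runs-on: ubuntu-22.04
    steps:
    - uses: actions/checkout@v4
    - run: npm ci && npm run build
    - run: ./deploy.sh dev # hypothetical deployment script
---
# File 2: .github/workflows/prod.yml (hypothetical name)
name: prod
on:
  push:
    tags:
    - 'v1.*' # creating the tag is the manual approval
jobs:
  deploy:
    runs-on: ubuntu-22.04
    steps:
    - uses: actions/checkout@v4
    - run: npm ci && npm run build # second build of the same commit
    - run: ./deploy.sh prod
```

Because both workflows build from source, the prod deployment is only as reproducible as the build itself. A lockfile and `npm ci` help here.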

Fixing aws cloudformation package

If you agree with me that only a change in the content of a file/files is relevant to decide if a new deployment of a Lambda function is needed, you can add the following line before your aws cloudformation package command (assuming your build output is stored in the build folder):

    find build/ -exec touch -m --date="2020-01-01" {} \;

The above command sets the modification time of all files inside the build folder to 2020-01-01 (the date doesn’t matter as long as it stays constant). Therefore, aws cloudformation package will only trigger a deployment of the Lambda function if you changed your code.
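Placed in a workflow, the order of the steps could look like the sketch below. The template file `template.yml`, the bucket `example-artifacts-bucket`, and the output file `packaged.yml` are hypothetical. Note that `touch --date` is a GNU coreutils option, which is available on the Ubuntu runners.

```yaml
name: package-demo # hypothetical workflow name
on: push
permissions:
  id-token: write
  contents: read
jobs:
  package:
    runs-on: ubuntu-22.04
    steps:
    - uses: actions/checkout@v4
    - uses: aws-actions/configure-aws-credentials@v4
      with:
        role-to-assume: arn:aws:iam::123456789012:role/github-openid-connect
        role-session-name: github-actions
        aws-region: us-east-1
    - run: npm ci && npm run build
    # Pin the modification time so that unchanged content produces the same package.
    - run: |
        find build/ -exec touch -m --date="2020-01-01" {} \;
    # Hypothetical template, bucket, and output names.
    - run: |
        aws cloudformation package \
          --template-file template.yml \
          --s3-bucket example-artifacts-bucket \
          --output-template-file packaged.yml
```

The timestamp step has to run after the build and before `aws cloudformation package`, because every build rewrites the files.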

Outcome

Migrating from CodePipeline to GitHub Actions and optimizing the deployment process reduced the deployment time from 27 minutes to 9 minutes (down 66%). Optimizing the existing CodePipeline pipeline would have yielded 20-50% performance improvements. We are delighted with the outcome of the migration.
