I'm losing trust in AWS. SNS has been broken for 65 days.

I’m frustrated. A major AWS service has been broken for 65 days. The Simple Notification Service (SNS) delivers messages to HTTPS subscriptions with a delay of more than 30 minutes. That issue impacts our SaaS business, and AWS has not fixed the problem yet.

You will find the latest updates on the issue at the end of the blog post.

The Problem

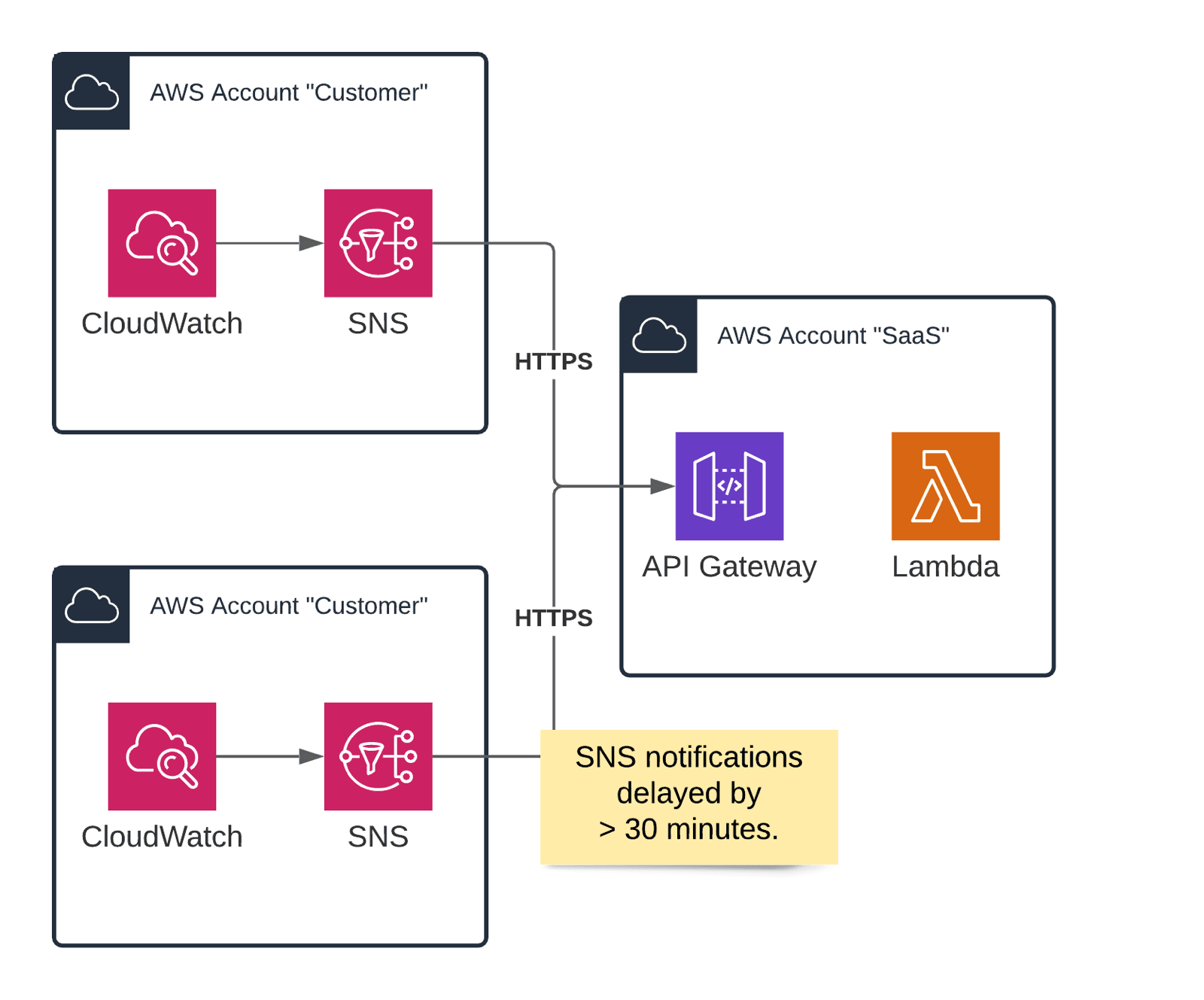

Our SaaS business runs on a Serverless architecture. Our customers send all kinds of alarms from their AWS infrastructure to our chatbot. To do so, our solution configures CloudWatch alarms and an SNS topic within our customers’ AWS accounts. The SNS topic forwards all incoming alarms to our API Gateway by using an HTTP subscription.

On September 1st, a customer wrote in: they observed that CloudWatch alarms showed up in Slack with a delay of more than 30 minutes. A monitoring and incident management solution is kind of worthless with delayed alarms. Therefore, I started investigating immediately.

First of all, I had a look at the AWS Service Health Dashboard. All systems operating normally.

Next, I analyzed our log messages to track down the issue. But I could not find any delayed messages—incoming alarms were delivered to Slack within milliseconds after arriving at our API Gateway.

That left SNS as the suspect, but how do you investigate whether SNS causes a delay? Luckily, I stumbled upon delivery status logging. An SNS topic is capable of writing delivery logs to CloudWatch Logs. The perfect way to debug a problem like that.

I found log messages similar to this one. SNS sent a message to api.marbot.io, and our API Gateway answered with status code 204. SNS tried to deliver the message once. The important information is dwellTimeMs = 2748244. It took SNS about 45 minutes to send the alarm to our backend.

{ |
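To make the number concrete, here is a minimal Python sketch that reads the dwell time from such a record and converts it to minutes. The record is illustrative only: it contains just the fields discussed above, and the field names other than dwellTimeMs, as well as the nesting under "delivery", are assumptions about the log layout rather than a copy of our real log message.

```python
import json

# Illustrative record, NOT the original log message. Only dwellTimeMs is a
# name taken from the text; the other names and the nesting are assumptions.
record = json.loads("""
{
  "delivery": {
    "destination": "api.marbot.io",
    "statusCode": 204,
    "attempts": 1,
    "dwellTimeMs": 2748244
  }
}
""")


def dwell_minutes(log_record: dict) -> float:
    """Time the message spent inside SNS before delivery, in minutes."""
    return log_record["delivery"]["dwellTimeMs"] / 60_000


def is_delayed(log_record: dict, threshold_minutes: float = 30.0) -> bool:
    """True if the message waited longer than the given threshold."""
    return dwell_minutes(log_record) > threshold_minutes


print(f"{dwell_minutes(record):.1f} minutes, delayed: {is_delayed(record)}")
```

A check like this is enough to flag every delivery that waited longer than the 30 minutes our customer complained about.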

And this is not an outlier. The same is true for all other messages as well. I contacted AWS Support immediately. Unfortunately, the story does not end here.

A Workaround

After the typical back and forth between the support engineer and the service team, I was told to remove the rate limit from our HTTP subscription. AWS announced that feature in December 2011, so I would expect it to be pretty stable, but hey. I removed the throttling policy (the parameter is named maxReceivesPerSecond), and indeed, doing so fixed the problem.

Let me clarify. We are using a rate limit of 1 message per second to avoid flooding our API Gateway in case of misconfigured alarms. Typically only a few messages pass the SNS topic per hour! We are far away from reaching the rate limit. Also, we are not talking about a rate limit enforced by our API Gateway, but about one enforced by our customers’ SNS topics or subscriptions. Nevertheless, enabling a rate limit on your SNS topics or subscriptions might cause serious delays.

Fine, there is a workaround for the issue.

All we have to do is remove the throttlePolicy from all SNS topics and subscriptions. Our default delivery retry policy looks like this.

{ |
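As a rough sketch of what the workaround means for a single policy document, the following Python snippet drops the throttlePolicy entry and leaves everything else untouched. The example policy is hypothetical: only throttlePolicy and maxReceivesPerSecond come from our setup, while the healthyRetryPolicy entry and its value are placeholders, not our real retry settings.

```python
import copy
import json


def without_throttle_policy(policy: dict) -> dict:
    """Return a copy of a delivery policy with the throttlePolicy removed."""
    cleaned = copy.deepcopy(policy)
    # pop() with a default keeps this safe for policies without a throttle.
    cleaned.pop("throttlePolicy", None)
    return cleaned


# Hypothetical subscription-level policy; the retry values are placeholders.
example_policy = {
    "healthyRetryPolicy": {"numRetries": 3},
    "throttlePolicy": {"maxReceivesPerSecond": 1},
}

cleaned = without_throttle_policy(example_policy)
print(json.dumps(cleaned, indent=2))
```

The cleaned JSON document would then have to be written back as the delivery policy of each subscription, for example through the SNS SetSubscriptionAttributes API.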

However, implementing the workaround is a challenge for us. We need to update about 1,000 SNS topics. And unfortunately, those SNS topics are not part of our AWS accounts but are managed by our customers. Therefore, rolling out the recommended workaround is quite expensive.

Losing Trust

We love our Serverless architecture. However, our business depends on AWS to operate all the involved services (SNS, API Gateway, Lambda, DynamoDB, Kinesis, Step Functions, etc.) professionally and fix any issues within hours.

Unfortunately, more than 24 days have passed, and AWS has not fixed the problem. SNS messages are still delayed. Neither AWS Support nor any AWS employee that I have contacted could help with the issue. To this day, we do not even know when AWS is planning to fix the problem: “Unfortunately, I cannot provide any ETA.” The AWS Service Health Dashboard still states All systems operating normally. They are not!

I have been building on AWS for more than eight years. I’ve never experienced anything like that before. My trust in AWS has strongly decreased over the past month.

1st Update (September 29th, 2020)

This blog post gained traction. It did not take long until AWS wrote in. I had some background talks about our issue. I am not sure what I am allowed to share from these conversations. Luckily, Jeff Barr, Chief Evangelist for AWS, publicly posted a response to our blog post on Reddit. Let me summarize the reaction for you.

- The SNS team acknowledges an issue with HTTP subscriptions when using a throttling policy (maxReceivesPerSecond).
- The SNS team points out that only a few customers/messages are affected by the issue.
- The SNS team promises to fix the problem by October 29, 2020.

I’ve collected some data to quantify the number of affected customers on our side.

20% of our customers receive at least 50% of their alarms with a delay of more than 15 minutes.
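A figure like this can be derived from the delivery logs by grouping dwell times per customer. The following Python sketch shows one way to do that; the customer names and dwell times in the sample are made up for illustration and are not our production data.

```python
from collections import defaultdict

THRESHOLD_MS = 15 * 60 * 1000  # 15 minutes in milliseconds


def share_of_affected_customers(deliveries, min_delayed_ratio=0.5):
    """Fraction of customers for whom at least `min_delayed_ratio` of all
    deliveries waited longer than THRESHOLD_MS.

    `deliveries` is an iterable of (customer_id, dwell_time_ms) pairs.
    """
    total = defaultdict(int)
    delayed = defaultdict(int)
    for customer, dwell_ms in deliveries:
        total[customer] += 1
        if dwell_ms > THRESHOLD_MS:
            delayed[customer] += 1
    if not total:
        return 0.0
    affected = sum(
        1 for customer in total
        if delayed[customer] / total[customer] >= min_delayed_ratio
    )
    return affected / len(total)


# Made-up sample: one of five customers has half of its alarms delayed.
sample = [
    ("customer-a", 2_748_244), ("customer-a", 350),
    ("customer-b", 367), ("customer-b", 831),
    ("customer-c", 699),
    ("customer-d", 331),
    ("customer-e", 1_893),
]
share = share_of_affected_customers(sample)
print(f"{share:.0%} of customers affected")
```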

Also, based on the data we collected, it seems to me that some regions are more affected than others. The following table shows the 90th percentile of the delivery delay over a period of 24 hours for different regions.

| Region | 90th percentile delay (ms) |
|---|---|
| us-east-1 | 7011903 |
| us-west-2 | 1020785 |
| ap-southeast-1 | 178609 |
| eu-central-1 | 93179 |
| sa-east-1 | 89614 |
| eu-west-3 | 65389 |
| ap-southeast-2 | 36381 |
| eu-west-2 | 22211 |
| eu-west-1 | 1893 |
| ap-south-1 | 831 |
| ap-northeast-1 | 699 |
| us-east-2 | 367 |
| us-west-1 | 350 |
| ca-central-1 | 331 |
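Millisecond values of this size are hard to read at a glance. The short Python sketch below converts the numbers from the table into more readable units; the helper name format_delay is only chosen for illustration.

```python
# 90th percentile delays in milliseconds, copied from the table above.
p90_delay_ms = {
    "us-east-1": 7011903, "us-west-2": 1020785, "ap-southeast-1": 178609,
    "eu-central-1": 93179, "sa-east-1": 89614, "eu-west-3": 65389,
    "ap-southeast-2": 36381, "eu-west-2": 22211, "eu-west-1": 1893,
    "ap-south-1": 831, "ap-northeast-1": 699, "us-east-2": 367,
    "us-west-1": 350, "ca-central-1": 331,
}


def format_delay(ms: int) -> str:
    """Render a millisecond value in minutes, seconds, or milliseconds."""
    if ms >= 60_000:
        return f"{ms / 60_000:.1f} min"
    if ms >= 1_000:
        return f"{ms / 1_000:.1f} s"
    return f"{ms} ms"


for region, delay in p90_delay_ms.items():
    print(f"{region:<16}{format_delay(delay)}")
```

Read this way, the 90th percentile in us-east-1 is close to two hours and in us-west-2 about 17 minutes, while regions such as ca-central-1 stay well below one second.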

I’m happy to hear that AWS is working on fixing the issue that causes us so much trouble. I’m a little bit disappointed that we might have to wait another month for a fix. Let’s hope that AWS learns from this issue and improves its processes so that issues like this are escalated much faster.

2nd Update (October 6th, 2020)

AWS informed customers about the SNS issue via email on October 6th. The email included a list of potentially affected SNS topics.

- The message delay depends on the maxReceivesPerSecond attribute. Delays start at a maxReceivesPerSecond of less than 20,000.
- Again, AWS promised to fix the issue by October 29th, 2020.
- AWS explains that disabling the throttling policy is a workaround for the issue.

3rd Update (November 4th, 2020)

The deadline has passed. AWS has not yet fixed the issue with delayed SNS messages. Our SaaS is still affected by the problem. I’m frustrated that AWS did not inform us and other affected customers that the ETA for a fix has been postponed.

4th Update (November 13th, 2020)

AWS finally fixed the issue. We are not observing delayed SNS messages any more. We waited 74 days for that. Hurray!