Terraform, can you keep a secret?

Did you know that Terraform state can - and most likely does - contain sensitive data? A few examples of sensitive information stored in the Terraform state:

- Initial password for an RDS instance.

- Unencrypted value fetched from SSM parameter (SecureString).

- Preshared keys of VPN connection.

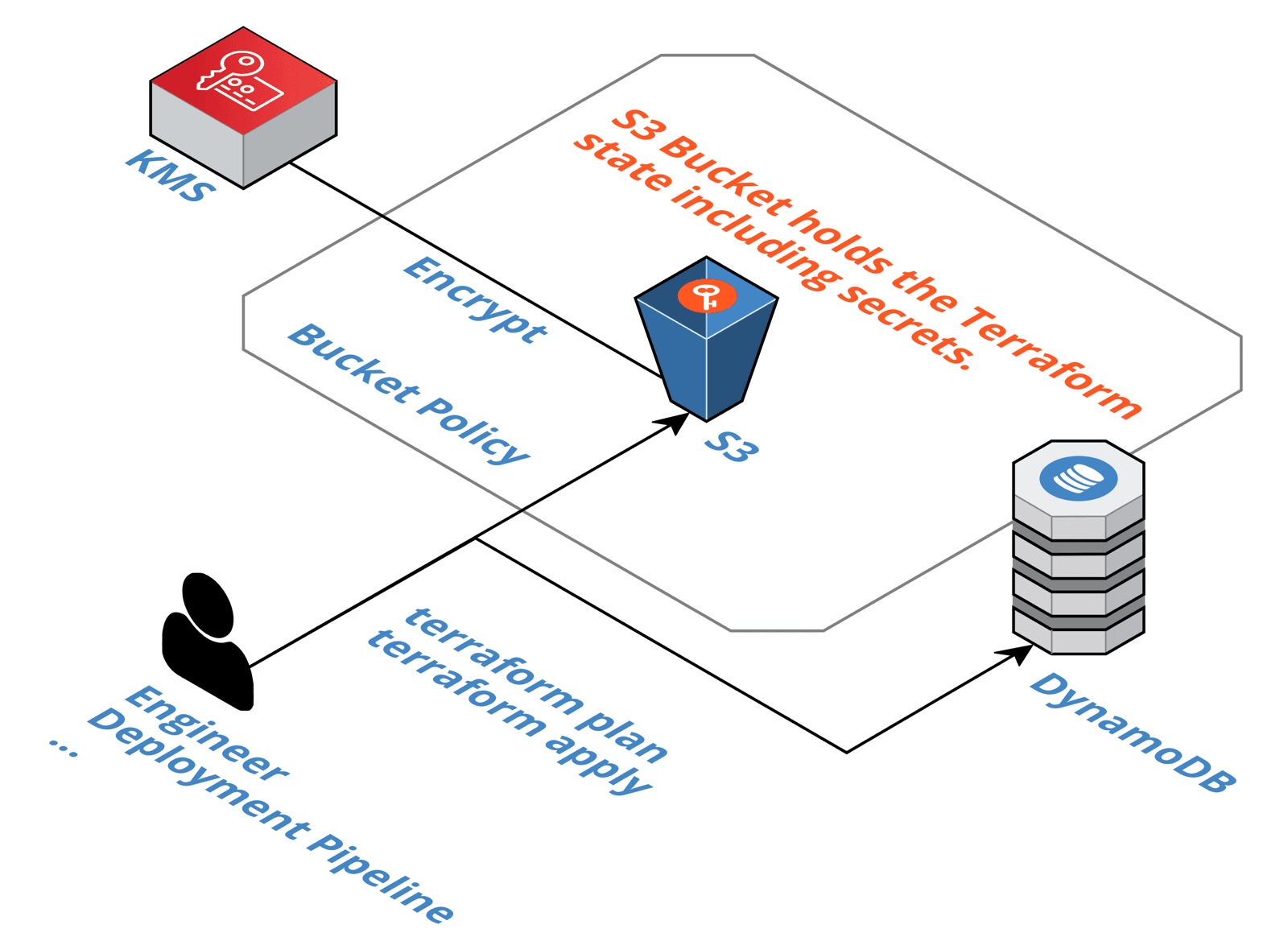

When using Terraform to provision cloud infrastructure on AWS, it is common to store the Terraform state on S3 and to use DynamoDB for state locking. When doing so, Terraform will store sensitive information on S3. By default, the confidential data is neither encrypted at rest nor protected against access by other users or roles in the same AWS account.

When using the S3 backend to manage your Terraform state, remember to enable encryption at rest and to tightly control access to the S3 bucket.

Read on to learn how to protect your sensitive information.

Encryption-at-Rest

Enabling S3 Default Encryption will automatically encrypt the Terraform state when stored on S3. It’s only server-side encryption, but still much better than storing your sensitive information unencrypted.

For full control, I recommend using a customer-managed key (CMK) from the Key Management Service (KMS) when configuring the default encryption for your S3 bucket.

The following snippet shows how to enable default encryption with CloudFormation.

StateBucket: |
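A minimal sketch of what such a template could look like, not the author's original snippet. The logical ID StateKey, the key description, and the key policy that delegates access control to IAM are assumptions added for illustration.

```yaml
Resources:
  # Customer-managed KMS key used to encrypt the Terraform state (hypothetical logical ID).
  StateKey:
    Type: AWS::KMS::Key
    Properties:
      Description: Encrypts the Terraform state bucket
      EnableKeyRotation: true
      KeyPolicy:
        Version: '2012-10-17'
        Statement:
          # Assumption: let IAM policies in this account decide who may use the key.
          - Sid: EnableIamPolicies
            Effect: Allow
            Principal:
              AWS: !Sub 'arn:aws:iam::${AWS::AccountId}:root'
            Action: 'kms:*'
            Resource: '*'
  # Bucket holding the Terraform state, with default encryption enabled.
  StateBucket:
    Type: AWS::S3::Bucket
    Properties:
      BucketEncryption:
        ServerSideEncryptionConfiguration:
          - ServerSideEncryptionByDefault:
              SSEAlgorithm: 'aws:kms'
              KMSMasterKeyID: !GetAtt StateKey.Arn
```

With this in place, objects written without explicit encryption headers should be encrypted with the referenced key. Anyone reading or writing the state also needs permission to use the key, which gives you a second place to control access.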

Access Control

First of all, use a separate S3 bucket to store your Terraform state. I recommend creating an S3 bucket per AWS account and region.

Next, we need to follow the least privilege principle for read and write requests to the S3 bucket. The best way to tightly restrict access to an S3 bucket is a bucket policy.

The following bucket policy grants the IAM role tfadmin full access to administer the S3 bucket. The IAM user tfuser is only granted read and write access to the objects within the bucket. Nobody else is allowed to modify the bucket or to access the data stored within it.

As the bucket policy uses Deny statements with a NotPrincipal element, it is necessary to specify the account (arn:aws:iam::111111111111:root in my example) as well as the assumed-role user when using IAM roles. Check out NotPrincipal with Deny to learn more.

{ |
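The approach described above could be sketched as a CloudFormation resource like the following. This is an illustration under stated assumptions, not the author's original policy: the logical ID StateBucketPolicy, the session name example-session, and the set of actions left open to tfuser are hypothetical, and a bucket resource named StateBucket is assumed to exist in the same template. The Deny statements only restrict access, so the sketch also assumes that tfadmin and tfuser receive their permissions through IAM identity policies.

```yaml
Resources:
  StateBucketPolicy:
    Type: AWS::S3::BucketPolicy
    Properties:
      Bucket: !Ref StateBucket
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          # Deny all S3 actions to every principal that is not listed here.
          - Sid: DenyEveryoneElse
            Effect: Deny
            NotPrincipal:
              AWS:
                # The account itself must be listed alongside users and roles.
                - 'arn:aws:iam::111111111111:root'
                - 'arn:aws:iam::111111111111:role/tfadmin'
                # Assumed-role user; the session name is an assumption.
                - 'arn:aws:sts::111111111111:assumed-role/tfadmin/example-session'
                - 'arn:aws:iam::111111111111:user/tfuser'
            Action: 's3:*'
            Resource:
              - !GetAtt StateBucket.Arn
              - !Sub '${StateBucket.Arn}/*'
          # Limit tfuser to listing the bucket and reading or writing objects.
          - Sid: LimitUserToObjectReadWrite
            Effect: Deny
            Principal:
              AWS: 'arn:aws:iam::111111111111:user/tfuser'
            NotAction:
              - 's3:ListBucket'
              - 's3:GetObject'
              - 's3:PutObject'
            Resource:
              - !GetAtt StateBucket.Arn
              - !Sub '${StateBucket.Arn}/*'
```

Keep in mind that the role and user named in the policy have to exist before the policy is applied; otherwise S3 is likely to reject it as containing an invalid principal.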

Summary

Terraform will not keep your secrets! Sensitive information like database passwords, secrets stored within the Parameter Store, or shared keys for a VPN connection is at risk. Therefore, you should enable encryption-at-rest and use a bucket policy to tightly control who can access your Terraform state when using Terraform’s S3 backend.

Are you looking for a ready-to-use implementation? I’ve added a CloudFormation template to our open-source project widdix/aws-cf-templates that you can use to create an S3 bucket and a DynamoDB table optimized for use as a Terraform state backend. Check out the documentation to get started.

Further reading

- Article CloudFormation vs Terraform in 2022

- Article How to avoid S3 data leaks?

- Article Building with EC2: 10 Tips for the Successful Cloud Architect
