All you need to know about AWS re:Invent in 2019

re:Invent was a blast: five days packed with announcements of new services and features. We have created a top 10 list for our re:Invent recap. Here is all you need to know about re:Invent 2019.

Michael has collected some voices in Las Vegas. Listen to what Aleksandar Simovic, Anders Bjørnestad, and Thorsten Höger have to say in our podcast episode. The episode also includes our top 10 re:Invent announcements.

Do you prefer listening to a podcast episode over reading a blog post? Here you go!

Provisioned Concurrency for AWS Lambda

Provisioned concurrency clears an obstacle out of the way: cold starts. By default, AWS Lambda scales on-demand. However, initializing a new executor takes a while - something between 100 ms and 1 s. Typically, only a small portion of the requests is affected by cold starts. However, there are scenarios where latencies above 200 ms are not acceptable, not even for a small number of requests.

Provisioning memory for concurrent invocations of your Lambda function allows you to reduce or avoid cold starts. For example, provisioning 8 GB memory will pre-warm 16 executors for your Lambda function with 512 MB memory size. As long as the Lambda function does not have to process more than 16 requests in parallel, there will be no cold starts at all. Check out Danilo’s blog post Provisioned Concurrency for Lambda Functions for a latency benchmark between a Lambda function with and without provisioned concurrency.

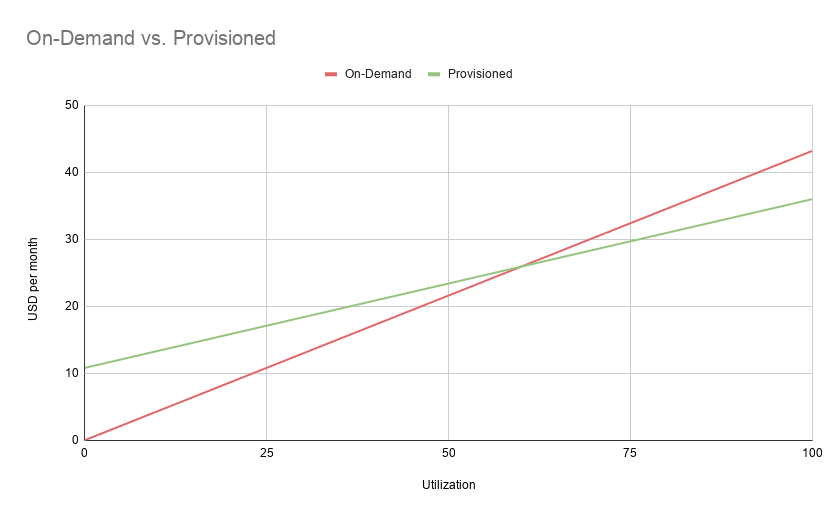

Provisioned concurrency does not only come with technical implications; it also affects your AWS bill. Provisioning capacity for your Lambda function comes with a discount of about 15%. But keep in mind that you pay for provisioned capacity whether your Lambda function processes requests or not.

The following figure illustrates the costs for every GB-second at different utilization in US East (N. Virginia). On top of that, you pay $0.20 per 1M requests.
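To get a feeling for the numbers, the effective price can be estimated with a few lines of Node.js. This is a rough sketch, not a billing calculator: the three prices are assumptions taken from the US East (N. Virginia) price list as we remember it, and the function names are made up for this example. Check the AWS Lambda pricing page before relying on them.

```javascript
// Assumed prices per GB-second in US East (N. Virginia); verify on the AWS Lambda pricing page.
const ON_DEMAND_DURATION = 0.0000166667;      // regular on-demand duration price
const PROVISIONED_CONCURRENCY = 0.0000041667; // charged for every provisioned second, used or not
const PROVISIONED_DURATION = 0.0000097222;    // charged only while the function is running

// Effective price of one GB-second of actual work at a given utilization (0 < utilization <= 1).
function effectivePricePerGbSecond(utilization) {
  if (utilization <= 0 || utilization > 1) {
    throw new RangeError('utilization must be in (0, 1]');
  }
  return PROVISIONED_CONCURRENCY / utilization + PROVISIONED_DURATION;
}

// Utilization above which provisioned concurrency is cheaper than on-demand.
function breakEvenUtilization() {
  return PROVISIONED_CONCURRENCY / (ON_DEMAND_DURATION - PROVISIONED_DURATION);
}

for (const utilization of [0.25, 0.5, 0.75, 1]) {
  const price = effectivePricePerGbSecond(utilization).toExponential(3);
  console.log(`${utilization * 100}% utilization: $${price} per GB-second`);
}
console.log(`break-even at about ${(breakEvenUtilization() * 100).toFixed(0)}% utilization`);
```

The request fee is left out on purpose because it is the same with and without provisioned concurrency.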

Good news, Provisioned Concurrency for AWS Lambda is ready to build something great!

| Ready to build? | |
|---|---|
| Generally Available? | ✅ |
| Available in all regions? | ✅ |
| Manage with CloudFormation? | ✅ |

HTTP API

Building a REST API with AWS Lambda is getting easier and cheaper with HTTP APIs, a new service under the Amazon API Gateway umbrella.

HTTP APIs come with a small but powerful set of features.

- Forward incoming requests to AWS Lambda or any other HTTP endpoint.

- Cross-origin resource sharing (CORS).

- Authentication and authorization via JWT.

- Custom domain names, as well as TLS/SSL.
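A Lambda function behind an HTTP API can be as small as the following sketch. It assumes the Lambda proxy integration, where the function receives the request as an event object and answers with a status code, headers, and a body. The greeting and the `name` query parameter are made up for illustration.

```javascript
// Builds the proxy response; `name` is a hypothetical query parameter.
function buildResponse(event) {
  const params = (event && event.queryStringParameters) || {};
  const name = params.name || 'world';
  return {
    statusCode: 200,
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ message: `Hello, ${name}!` }),
  };
}

// Entry point that Lambda invokes for every incoming request.
exports.handler = async (event) => buildResponse(event);
```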

I can’t wait to build a serverless application with HTTP APIs. However, HTTP APIs are in beta and should not be used for production workloads yet. There is also an important feature missing: throttling. At the moment, you cannot rate-limit requests per client in any way.

| Ready to build? | |
|---|---|
| Generally Available? | ❌ |
| Available in all regions? | ❌ |
| Manage with CloudFormation? | ✅ |

Fargate for EKS

One of my main arguments for preferring ECS over EKS is no longer valid: Fargate is now available for EKS as well. Why is that important? Because there is rarely a business case in which it makes economic sense to take responsibility for two layers of abstraction: virtual machines and containers. Fargate provides compute capacity at the abstraction layer of a container. No virtual machines to manage!

Fargate pricing is the same, no matter if you orchestrate the containers with EKS or ECS. On top of that, you pay $144 per EKS cluster per month, whereas running an ECS cluster is free of charge.

To use Fargate with EKS, you need to upgrade your cluster to Kubernetes version 1.14 and platform version eks.5. Next, create a Fargate profile that tells the cluster master to launch pods on Fargate for a particular namespace. If needed, you can also use labels to define which pods should launch on Fargate.
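The selection rules of a Fargate profile are easier to grasp with a small model. The object below follows the shape of the `createFargateProfile` request of the AWS SDK for JavaScript as we understand it; all names, the role ARN, and the subnet IDs are placeholders. The matching functions are a simplified illustration of the idea, not the logic EKS actually runs.

```javascript
// Hypothetical profile: cluster name, role ARN, and subnet IDs are placeholders.
const fargateProfile = {
  clusterName: 'demo',
  fargateProfileName: 'batch-jobs',
  podExecutionRoleArn: 'arn:aws:iam::111111111111:role/demo-pod-execution',
  subnets: ['subnet-aaaa1111', 'subnet-bbbb2222'],
  selectors: [
    { namespace: 'batch' },                                   // every pod in this namespace
    { namespace: 'default', labels: { compute: 'fargate' } }, // only pods carrying this label
  ],
};

// A pod matches a selector if the namespace is equal and every selector label is set on the pod.
function matchesSelector(pod, selector) {
  if (pod.namespace !== selector.namespace) return false;
  const podLabels = pod.labels || {};
  return Object.entries(selector.labels || {}).every(([key, value]) => podLabels[key] === value);
}

// A pod is placed on Fargate if it matches at least one selector of the profile.
function runsOnFargate(pod, profile) {
  return profile.selectors.some((selector) => matchesSelector(pod, selector));
}

console.log(runsOnFargate({ namespace: 'batch' }, fargateProfile));
console.log(runsOnFargate({ namespace: 'default', labels: { compute: 'ec2' } }, fargateProfile));
```

With the SDK installed, the same object could be passed to `new AWS.EKS().createFargateProfile(fargateProfile).promise()`.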

Unfortunately, Fargate for EKS is only available in the following regions: US East (Ohio), US East (N. Virginia), Asia Pacific (Tokyo), and EU (Ireland).

| Ready to build? | |
|---|---|
| Generally Available? | ✅ |
| Available in all regions? | ❌ |
| Manage with CloudFormation? | ❌ |

ALB: Least Outstanding Requests Algorithm and Weighted Target Groups

AWS improved the Application Load Balancer (ALB) significantly with the following new features:

- Least Outstanding Requests algorithm for load balancing

- Weighted Target Groups to distribute traffic among multiple target groups

Why are those new features important?

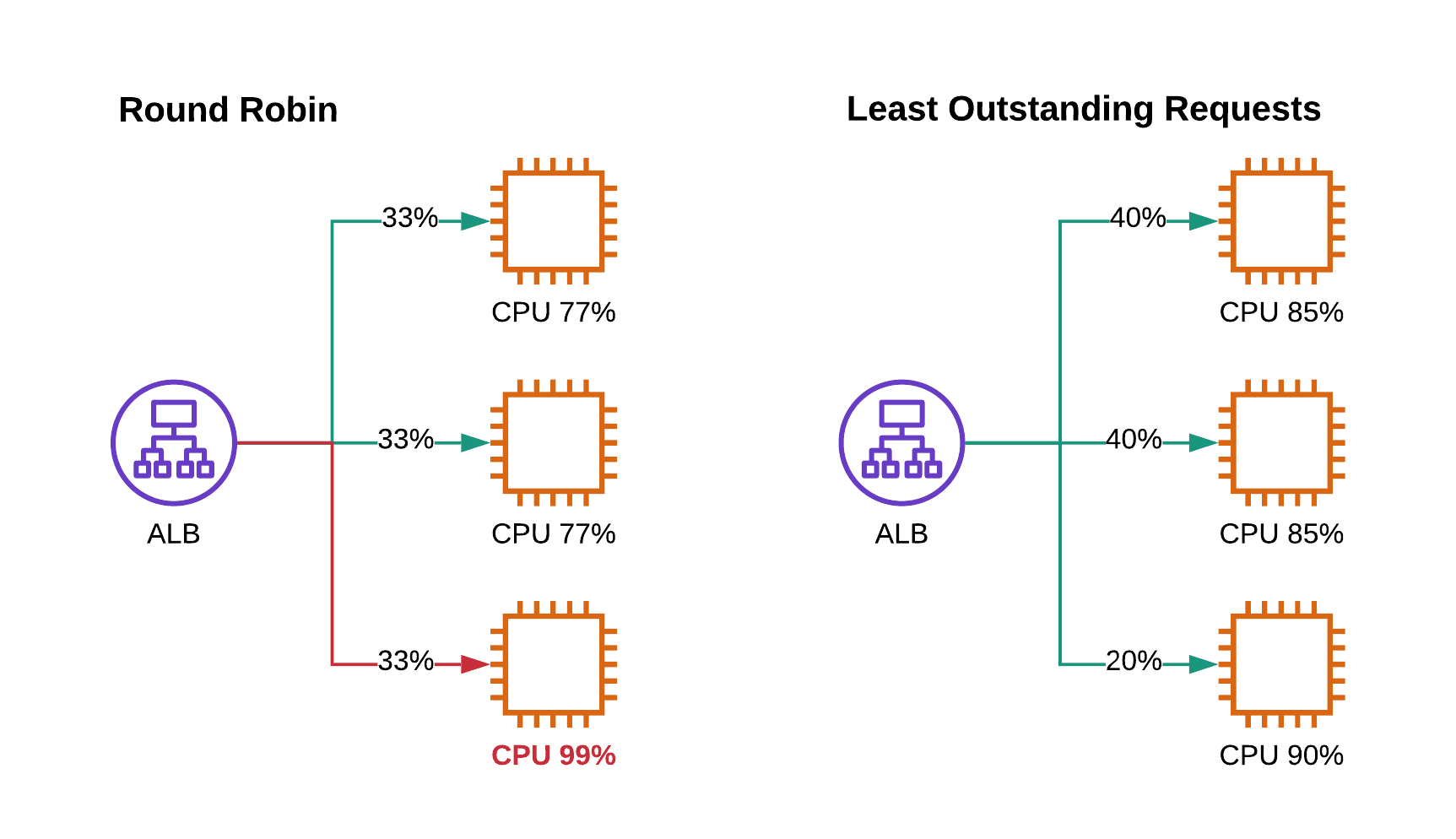

Until now, the ALB used the round-robin algorithm to distribute incoming requests among targets. As illustrated in the following figure, this algorithm leads to hot targets when some requests consume more resources than others. With the least outstanding requests algorithm, the ALB keeps track of the backlog of each target and sends requests to the target with the fewest outstanding requests. Doing so distributes the workload more evenly among all targets and prevents hot targets. Overall, that allows you to reduce over-provisioned capacity.
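The difference between the two algorithms shows up even in a toy simulation. The sketch below is our own simplified model, not the ALB implementation: one request arrives per tick, each request occupies its target for a fixed number of ticks, and we record the highest backlog a single target ever reaches.

```javascript
// Replays a stream of requests (one per tick) and returns the highest number of
// requests that were outstanding on a single target at any point in time.
function peakOutstanding(durations, targetCount, pickTarget) {
  const finishTicks = Array.from({ length: targetCount }, () => []);
  let peak = 0;
  durations.forEach((duration, tick) => {
    // Forget requests that have finished by now.
    for (let t = 0; t < targetCount; t += 1) {
      finishTicks[t] = finishTicks[t].filter((finish) => finish > tick);
    }
    const outstanding = finishTicks.map((list) => list.length);
    const target = pickTarget(outstanding, tick);
    finishTicks[target].push(tick + duration);
    peak = Math.max(peak, finishTicks[target].length);
  });
  return peak;
}

// Round robin ignores the backlog and simply takes the next target in line.
const roundRobin = (outstanding, tick) => tick % outstanding.length;
// Least outstanding requests picks the target with the smallest backlog.
const leastOutstandingRequests = (outstanding) => outstanding.indexOf(Math.min(...outstanding));

// Every other request is expensive (8 ticks), the rest are cheap (1 tick).
const durations = [8, 1, 8, 1, 8, 1, 8, 1];
console.log('round robin:', peakOutstanding(durations, 2, roundRobin));
console.log('least outstanding requests:', peakOutstanding(durations, 2, leastOutstandingRequests));
```

With two targets, round robin sends every expensive request to the same target, while the least outstanding requests strategy spreads them and ends up with a lower peak.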

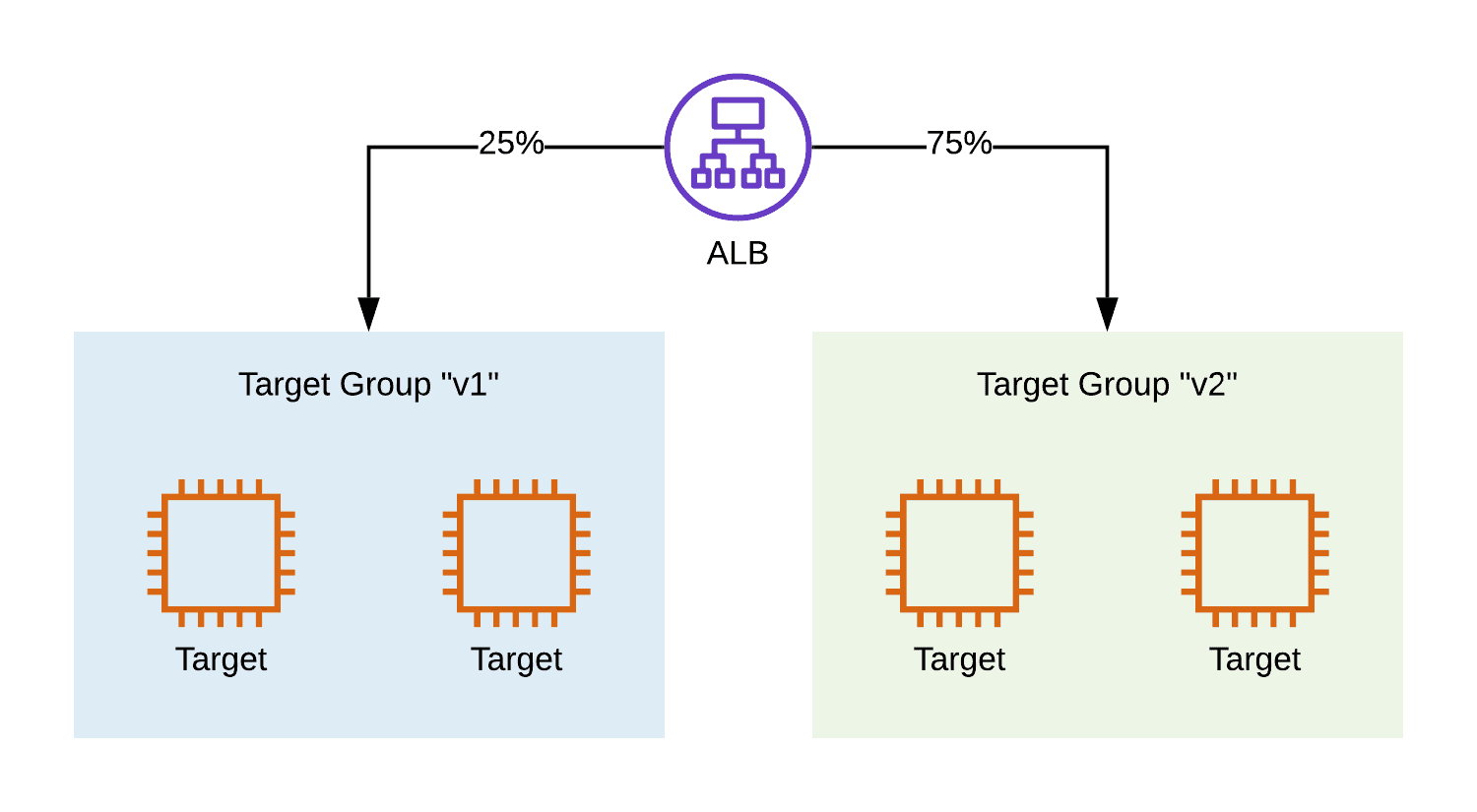

Deploying without downtime is a core skill that organizations adopt when moving their workloads to the cloud. The following figure shows a popular deployment strategy called blue-green deployment. During a deployment, all traffic is switched from the current version (green) to the new version (blue). In case any unexpected problems occur, the deployment rolls back to the green version automatically. The deployment pattern requires full control over the amount of traffic that is sent to the green and blue versions of your application. That’s precisely what weighted target groups are all about!
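In API terms, a weighted split is a forward action that lists several target groups with a weight each. The helper below is a sketch: the function name and the percentage-based parameter are our own invention, while the structure of the returned object follows the `ForwardConfig` shape of the Elastic Load Balancing API as we understand it.

```javascript
// Builds a forward action that splits traffic between two target groups.
// blueShare is the percentage (0-100) of requests sent to the new (blue) version.
function weightedForwardAction(greenTargetGroupArn, blueTargetGroupArn, blueShare) {
  if (!Number.isInteger(blueShare) || blueShare < 0 || blueShare > 100) {
    throw new RangeError('blueShare must be an integer between 0 and 100');
  }
  return {
    Type: 'forward',
    ForwardConfig: {
      TargetGroups: [
        { TargetGroupArn: greenTargetGroupArn, Weight: 100 - blueShare },
        { TargetGroupArn: blueTargetGroupArn, Weight: blueShare },
      ],
    },
  };
}

// Placeholder ARNs; send 10% of the traffic to the new version first.
const action = weightedForwardAction('arn:green-placeholder', 'arn:blue-placeholder', 10);
console.log(JSON.stringify(action, null, 2));
```

With the AWS SDK for JavaScript installed, such an action could be applied with `new AWS.ELBv2().modifyListener({ ListenerArn, DefaultActions: [action] }).promise()`. Rolling back then means calling the helper again with a share of 0.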

| Ready to build? | |
|---|---|
| Generally Available? | ✅ |
| Available in all regions? | ✅ |
| Manage with CloudFormation? | ❌ |

Inter-Region Peering with Transit Gateway

The AWS Transit Gateway was introduced last year at re:Invent. Peering networks have become much more convenient since then. A transit gateway allows you to share a VPN or Direct Connect connection with multiple VPCs. On top of that, it is possible to peer multiple VPCs with each other.

I’m thrilled that the AWS Transit Gateway now allows me to peer networks across different regions. This is a convenient feature for establishing links between infrastructure distributed among different parts of the world.

Unfortunately, the feature is not available in all regions yet. Currently, the following regions are supported: US East (N. Virginia), US East (Ohio), US West (Oregon), EU (Ireland), and EU (Frankfurt).

| Ready to build? | |
|---|---|
| Generally Available? | ✅ |
| Available in all regions? | ❌ |
| Manage with CloudFormation? | ❌ |

AWS Fargate Spot Pricing

AWS Fargate received two new features to save money:

- 20-52% saving with Compute Savings Plans

- 69% saving with Spot Pricing

Use Spot pricing for interruption-tolerant workloads (2-minute termination notice) and Savings Plans to cover the baseline usage of your workloads. Unfortunately, we are still waiting for CloudFormation support.
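Being interruption tolerant mostly means reacting to the termination notice. As far as we know, the container receives a SIGTERM signal when the notice starts, so a Node.js service can use the remaining time to finish in-flight work. The following is a minimal sketch: the function name, the 100-second safety timeout, and the injectable `exit` parameter are assumptions made for this example.

```javascript
// Returns a SIGTERM handler that stops accepting new work and exits once in-flight work is done.
// `server` only needs a close(callback) method, like the one of Node's http.Server.
function createShutdownHandler(server, { timeoutMs = 100000, exit = process.exit } = {}) {
  let shuttingDown = false;
  return function onSigterm() {
    if (shuttingDown) return false; // ignore repeated signals
    shuttingDown = true;
    // Safety net: exit with an error code before the termination notice runs out.
    const timer = setTimeout(() => exit(1), timeoutMs);
    timer.unref();
    server.close(() => {
      clearTimeout(timer);
      exit(0);
    });
    return true;
  };
}

// Usage inside the container (app is a hypothetical request handler):
// const server = require('http').createServer(app).listen(8080);
// process.on('SIGTERM', createShutdownHandler(server));
```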

| Ready to build? | |
|---|---|
| Generally Available? | ✅ |
| Available in all regions? | ✅ |
| Manage with CloudFormation? | ❌ |

If you are interested in the details of Savings Plans, read our [Reduce your AWS bill with Savings Plans](/reduce-your-aws-bill-with-savings-plans/) post.

ECS Cluster Auto Scaling

If you run your ECS cluster on EC2 instances instead of Fargate, this is big news for you: Scaling EC2 container instances was very hard, if not impossible. ECS Cluster Auto Scaling solves the issue (as soon as CloudFormation support is added).

| Ready to build? | |
|---|---|
| Generally Available? | ✅ |
| Available in all regions? | ✅ |
| Manage with CloudFormation? | ❌ |

Step Functions Express Workflows

You get cheaper Step Functions that can scale up to over 100,000 executions per second. The following limitations apply:

- Maximum execution duration is 5 minutes.

- Executions appear in CloudWatch Logs, not in the Step Functions API.

- At-least-once execution guarantee (instead of exactly-once).

- Does not support Job-run (.sync) or Callback (.waitForTaskToken) patterns.

- Does not support Step Functions activities.
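Some of these limitations can be checked mechanically before switching an existing state machine to the Express type. The helper below is a sketch of such a check. It inspects the `Resource` of each state in an Amazon States Language definition and also looks into Parallel branches and Map iterators. The function name and the sample definition are made up, and the check does not cover the duration limit.

```javascript
// Returns a list of problems that prevent a definition from running as an Express Workflow.
function findExpressIncompatibilities(definition, path = '') {
  const problems = [];
  for (const [name, state] of Object.entries(definition.States || {})) {
    const location = path + name;
    const resource = typeof state.Resource === 'string' ? state.Resource : '';
    if (resource.endsWith('.sync')) problems.push(`${location}: Job-run (.sync) pattern`);
    if (resource.endsWith('.waitForTaskToken')) problems.push(`${location}: Callback (.waitForTaskToken) pattern`);
    if (resource.includes(':activity:')) problems.push(`${location}: Step Functions activity`);
    // Parallel states nest state machines in Branches, Map states in Iterator.
    const nested = [...(state.Branches || []), ...(state.Iterator ? [state.Iterator] : [])];
    nested.forEach((child, index) => {
      problems.push(...findExpressIncompatibilities(child, `${location}[${index}].`));
    });
  }
  return problems;
}

// Hypothetical definition with one state that waits for an ECS task to finish.
const definition = {
  StartAt: 'Resize',
  States: {
    Resize: { Type: 'Task', Resource: 'arn:aws:lambda:us-east-1:111111111111:function:resize', Next: 'Transcode' },
    Transcode: { Type: 'Task', Resource: 'arn:aws:states:::ecs:runTask.sync', End: true },
  },
};
console.log(findExpressIncompatibilities(definition));
```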

| Ready to build? | |
|---|---|
| Generally Available? | ✅ |
| Available in all regions? | ✅ |
| Manage with CloudFormation? | ✅ |

Amplify DataStore

Amplify DataStore is a new Amplify capability to support offline-first applications. You can use the DataStore to write, read, and subscribe to changes no matter if the client is online or offline. The DataStore can optionally sync to the cloud as well as across devices. To sync with the cloud, the Amplify CLI will use the GraphQL schema to deploy an AWS AppSync backend with DynamoDB tables for each type and an additional table used for Delta Sync.

| Ready to build? | |
|---|---|
| Generally Available? | ⚠️ |
| Available in all regions? | ✅ |

AWS AppConfig

AWS AppConfig is a way to deploy configuration data to environments in a controlled and safe manner. Configuration data can be validated by a JSON schema or Lambda function. Changes are rolled out step-by-step and are rolled back if a CloudWatch Alarm fires within a “bake time”. Configuration data itself is fetched from SSM Parameter Store or an SSM Document (no, S3 is not supported).

The biggest surprise might be that your application has to poll AppConfig at regular intervals to get configuration updates. The following Node.js snippet shows how it works.

const AWS = require('aws-sdk');
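The polling logic itself can be sketched roughly as follows. This is a minimal sketch, not a definitive implementation: it assumes a client that behaves like `new AWS.AppConfig()` from the AWS SDK for JavaScript v2 with its `getConfiguration` operation, it assumes the configuration content is JSON, and `createConfigPoller` as well as the field names in `settings` are made up for this example.

```javascript
// Sketch of an AppConfig poller. `client` is expected to behave like `new AWS.AppConfig()`;
// the values passed in `settings` are placeholders chosen by the caller.
function createConfigPoller(client, settings) {
  let version;        // last configuration version received from AppConfig
  let current = null; // last configuration content, parsed as JSON

  function buildRequest() {
    const request = {
      Application: settings.application,
      Environment: settings.environment,
      Configuration: settings.configuration,
      ClientId: settings.clientId,
    };
    // Sending the known version lets AppConfig skip content that has not changed.
    if (version) request.ClientConfigurationVersion = version;
    return request;
  }

  function applyResponse(response) {
    version = response.ConfigurationVersion;
    // Content is expected to be empty when the client already has the latest version.
    if (response.Content && response.Content.length > 0) {
      current = JSON.parse(response.Content.toString());
    }
    return current;
  }

  async function poll() {
    return applyResponse(await client.getConfiguration(buildRequest()).promise());
  }

  return { buildRequest, applyResponse, poll, get: () => current };
}

// Usage (all identifiers are placeholders):
// const poller = createConfigPoller(new AWS.AppConfig(), {
//   application: 'demo', environment: 'prod', configuration: 'main', clientId: 'i-0123456789abcdef0',
// });
// setInterval(() => poller.poll().catch(console.error), 30000);
```

Keeping the request and response handling separate from the network call makes the logic easy to test without AWS credentials.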

Some ideas for the clientId which can be up to 64 characters in length:

- EC2: Instance ID provided by the Metadata Service

- ECS/Fargate: hashed Task ARN provided by the Metadata Endpoint

- Lambda: hashed environment variable AWS_LAMBDA_LOG_STREAM_NAME to identify the function instance
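Hashing is a convenient way to stay within the length limit: a SHA-256 digest written as a hex string is always exactly 64 characters long. A minimal sketch using Node's built-in crypto module; the function name and the fallback value for local runs are assumptions made for this example.

```javascript
const crypto = require('crypto');

// SHA-256 as a hex string is exactly 64 characters long, which fits the clientId limit.
function clientIdFrom(value) {
  return crypto.createHash('sha256').update(value).digest('hex');
}

// Inside Lambda, AWS_LAMBDA_LOG_STREAM_NAME is set by the runtime; the fallback is for local runs only.
const clientId = clientIdFrom(process.env.AWS_LAMBDA_LOG_STREAM_NAME || 'local-development');
console.log(clientId.length);
```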

| Ready to build? | |
|---|---|
| Generally Available? | ✅ |
| Available in all regions? | ✅ |
| Manage with CloudFormation? | ❌ |

For reasons only AWS understands, AWS AppConfig is part of AWS Systems Manager but still has its own service name in SDKs & CLI.

That’s it! These were the 10 most important announcements from re:Invent. See you again in Las Vegas next year.