How we run our blog cloudonaut.io

Now and then you ask us: How do you run cloudonaut.io? Today, I want to share some insights with you about the work and technology behind the scenes of our blog.

- How do we come up with new topics?

- How do we host this website?

- How do we survive being listed on the front page of Hacker News?

Do you prefer listening to a podcast episode over reading a blog post? Here you go!

Finding new topics and planning

We work with AWS daily in customer projects. Because of that, we receive a lot of questions, see a lot of architectures, and we can identify common issues. All of this is the input for new blog posts. Andreas and I aim to publish one post per week. Depending on our workload, we have a queue of posts ready for publication, or we write them the day before you can read them.

Writing: hexo

Andreas and I write all our posts with Sublime Text in Markdown. The last sentence in Markdown looks like this:

`## Writing: hexo`

We use the blog framework hexo to convert all the Markdown files into static HTML pages. hexo also supports themes and plugins that we use to customize the generated HTML.

We use plugins to:

- Extract the preview image from the content of an article to support list views.

- Make use of the HTML `<picture>` element to optimize the page size.

- Support footnotes.

- Offer an RSS feed.

- Display shiny tables.
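
To illustrate why the `<picture>` element helps with page size: it lets the browser pick the first image source it supports, so a smaller modern format can be offered with a fallback. The following is a minimal sketch of the markup such a plugin might emit, not the plugin we actually use. The function name `picture_tag`, the WebP format, and the file naming scheme are all assumptions made for illustration.

```python
from html import escape


def picture_tag(base: str, alt: str, fallback_ext: str = "png") -> str:
    """Build a <picture> element that offers WebP first, then a fallback image.

    `base` is the image path without extension (hypothetical naming scheme).
    """
    return (
        "<picture>"
        # Browsers that support WebP download this (usually smaller) file.
        f'<source srcset="{escape(base)}.webp" type="image/webp">'
        # Every other browser falls back to the plain <img>.
        f'<img src="{escape(base)}.{escape(fallback_ext)}" alt="{escape(alt)}">'
        "</picture>"
    )
```

Calling `picture_tag("image-1", "Pull request review")` returns a single `<picture>` element with one `<source>` and one `<img>` child.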

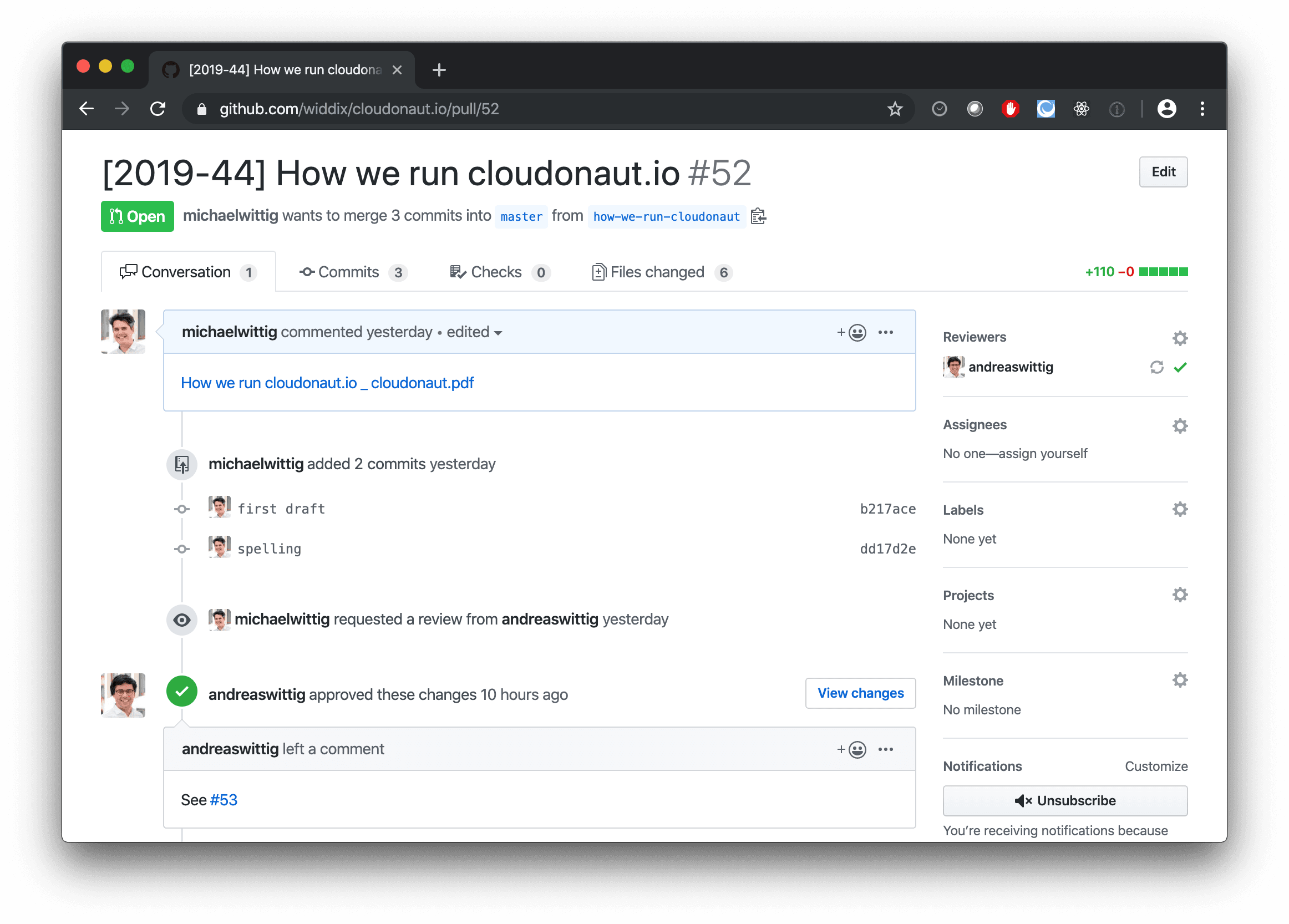

A git branch is created for every new post and pushed to a private repository on GitHub. GitHub supports excellent review capabilities via pull requests, as the following figure shows.

Depending on its length, we invest 2-8 hours in a blog post.

Deployment: Jenkins

Once we merge a pull request, the continuous deployment pipeline starts. The most important steps are:

- Install Node.js dependencies: `npm ci --production`

- Generate website files: `node ./node_modules/.bin/hexo generate`

- Copy the generated files to S3: `aws s3 sync [...]`

- Invalidate the CloudFront cache: `INVALIDATION_ID=$(aws cloudfront create-invalidation --distribution-id [...] --paths '/*' --query 'Invalidation.Id' --output text)`

- Wait for the invalidation to finish: `aws cloudfront wait invalidation-completed --distribution-id [...] --id $INVALIDATION_ID`

- Probe the most important pages to check that they return expected content (e.g., check if the RSS feed works).
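
The final probing step could look roughly like the sketch below. It is not our actual pipeline code. The function names, the probe list, and the feed path `/atom.xml` are assumptions for illustration. The idea is simply: fetch a page, require HTTP 200, and require that a known piece of text appears in the body.

```python
import urllib.request
from typing import Callable, List, Tuple

Fetcher = Callable[[str], Tuple[int, str]]


def fetch(url: str) -> Tuple[int, str]:
    """Fetch a URL and return (status code, body text)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        body = response.read().decode("utf-8", errors="replace")
        return response.status, body


def probe(url: str, expected: str, fetcher: Fetcher = fetch) -> bool:
    """Return True if the page answers with 200 and contains `expected`."""
    try:
        status, body = fetcher(url)
    except OSError:
        # urllib's URLError and HTTPError are subclasses of OSError.
        return False
    return status == 200 and expected in body


# Hypothetical probe list: (URL, text that must appear in the response).
PROBES = [
    ("https://cloudonaut.io/", "cloudonaut"),
    ("https://cloudonaut.io/atom.xml", "<feed"),
]


def run_probes(probes=PROBES, fetcher: Fetcher = fetch) -> List[str]:
    """Return the URLs whose probe failed."""
    return [url for url, expected in probes if not probe(url, expected, fetcher)]
```

In a pipeline, a non-empty result from `run_probes()` would fail the build. Passing the fetcher as a parameter keeps the check itself testable without network access.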

The cloudonaut.io deployment pipeline is hosted on our Jenkins infrastructure. The task to migrate to CodePipeline has been waiting in our backlog for quite some time. 🤷‍♂️

Static Hosting: S3 & CloudFront

We use CloudFront as our CDN and S3 as the origin, which is a proven serverless architecture. We use our open-source CloudFormation template to deploy the setup. CloudFront and S3 ensure that our website does not crash even under heavy load.

Edge intelligence: Lambda@Edge

Lambda@Edge is a way to execute your code in CloudFront. You can execute your code at four points:

- When the request comes in from the client (viewer request).

- Before the request is forwarded to the origin on a cache miss (origin request).

- After the response from the origin is received (origin response).

- Before the response is sent to the client (viewer response).

We use Lambda@Edge for redirects and on-the-fly image optimization.
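
A redirect at the edge can be sketched as a small request-triggered function like the one below. This is an illustration, not our production function. The `REDIRECTS` table and its paths are hypothetical, and Python is used here only as one of the runtimes Lambda@Edge offers. The function reads the request from the CloudFront event and either passes it through unchanged or answers with a 301 response.

```python
# Hypothetical redirect table: old path -> new path.
REDIRECTS = {
    "/old-post/": "/new-post/",
}


def handler(event, context):
    """Request-triggered handler: redirect known old paths, pass the rest through."""
    request = event["Records"][0]["cf"]["request"]
    target = REDIRECTS.get(request["uri"])
    if target is None:
        # Returning the request lets CloudFront continue processing it.
        return request
    # Returning a response object short-circuits the request.
    return {
        "status": "301",
        "statusDescription": "Moved Permanently",
        "headers": {
            # Header names are lowercase keys; each holds a list of key/value pairs.
            "location": [{"key": "Location", "value": target}],
        },
    }
```

Keeping the redirect table in the function code avoids any network call at request time, at the price of a redeployment whenever a redirect changes.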

Alerting + Incident Management: CloudWatch & marbot

We use Uptime Robot to check from outside of AWS whether our website is reachable. If it is not, we send an alert to marbot, our Slack-based incident management solution, plus an email (hosted by Google).

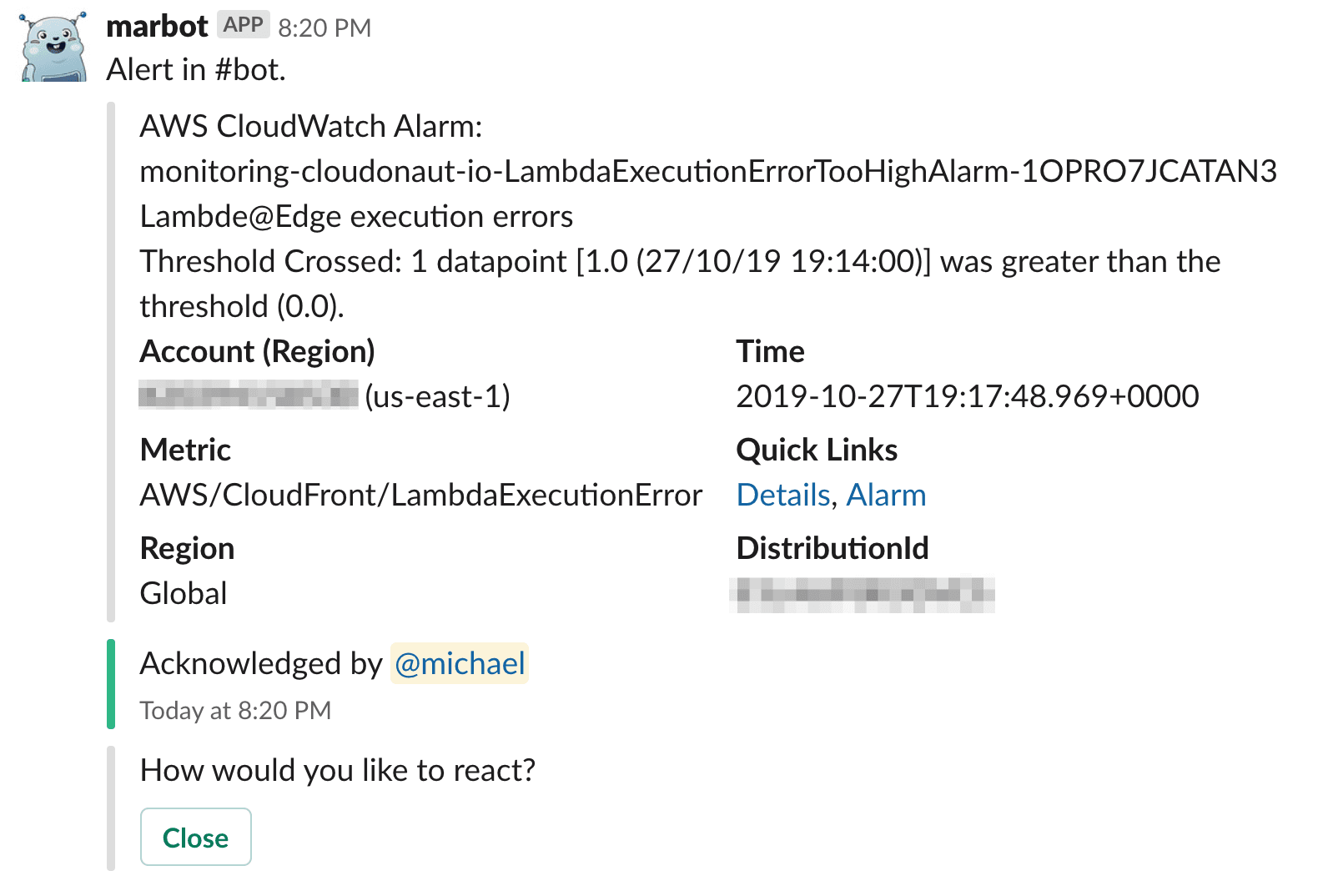

We also observe a bunch of CloudWatch Metrics from CloudFront to detect if our Lambda@Edge functions are having issues or we respond with 5xx codes. Whatever goes wrong, marbot will alert us as the following figure shows.

We will not be able to respond 24/7, but we do our best to keep the site up and running.

The CloudWatch monitoring is part of the next version of our open-source CloudFormation templates. Watch this GitHub pull request.
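
To make the 5xx monitoring more concrete, here is a sketch of the parameters for a CloudWatch alarm on CloudFront's `5xxErrorRate` metric. Our real setup lives in CloudFormation, so treat this as an illustration only. The function name, alarm name, threshold, and evaluation settings are assumptions, and so is the idea that alerts are delivered through an SNS topic ARN.

```python
from typing import Any, Dict


def cloudfront_5xx_alarm(
    distribution_id: str,
    topic_arn: str,
    threshold_percent: float = 1.0,  # assumed threshold, tune to your traffic
) -> Dict[str, Any]:
    """Build keyword arguments for CloudWatch's PutMetricAlarm API."""
    return {
        "AlarmName": f"cloudfront-5xx-{distribution_id}",  # hypothetical name
        "Namespace": "AWS/CloudFront",
        "MetricName": "5xxErrorRate",
        "Dimensions": [
            {"Name": "DistributionId", "Value": distribution_id},
            # CloudFront metrics always carry the dimension Region=Global.
            {"Name": "Region", "Value": "Global"},
        ],
        "Statistic": "Average",
        "Period": 60,            # seconds per data point
        "EvaluationPeriods": 5,  # assumed: alarm after 5 breaching minutes
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # no traffic is not an incident
        "AlarmActions": [topic_arn],
    }
```

With boto3 installed, the result could be passed on as `boto3.client("cloudwatch", region_name="us-east-1").put_metric_alarm(**params)`. The alarm has to live in us-east-1 because that is where CloudFront publishes its metrics.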

Analytics: Athena and QuickSight

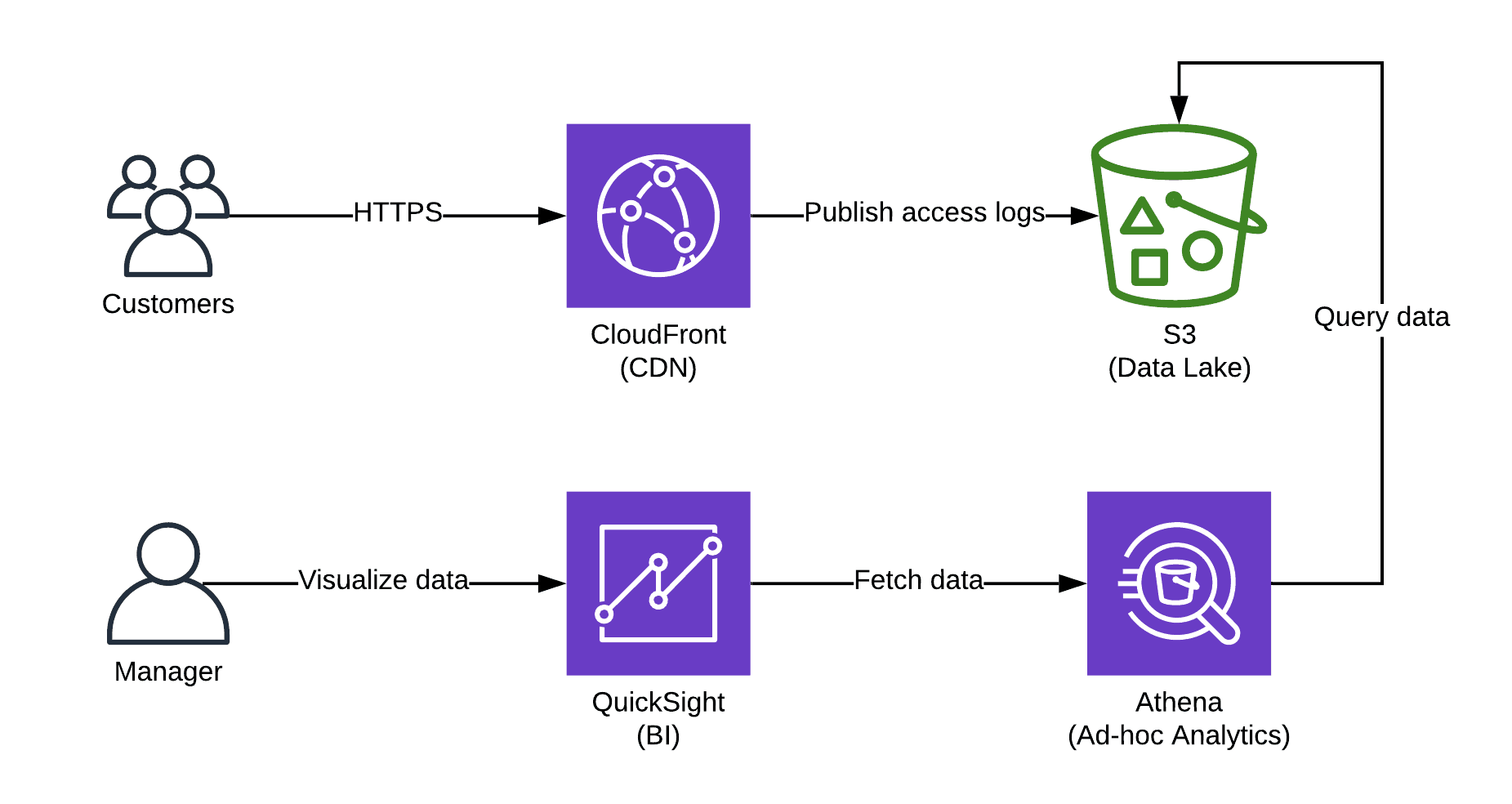

Last but not least, we want to know how many people visit our blog. Earlier this year, we replaced Google Analytics with a solution that we own and control. We also removed all cookies. Andreas wrote a detailed article about the solution. In a nutshell, we use:

- Amazon CloudFront for collecting access logs.

- Amazon Simple Storage Service (S3) as the data lake to store access logs.

- The ad-hoc analytics service Amazon Athena to query data.

- The business intelligence (BI) solution Amazon QuickSight to visualize the tracking data.

The following figure demonstrates how the solution works.
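
To give a feeling for the kind of aggregation we run on the access logs, the sketch below counts page views per path from CloudFront standard log lines. It is a local, simplified stand-in for an Athena query, not our actual setup. The function name and the rule "successful GET requests count as views" are assumptions. The column positions are read from the `#Fields:` header line that CloudFront writes at the top of each log file.

```python
from collections import Counter
from typing import Iterable, List


def count_page_views(lines: Iterable[str]) -> Counter:
    """Count successful GET requests per URI path in CloudFront standard logs."""
    fields: List[str] = []
    views: Counter = Counter()
    for raw in lines:
        line = raw.rstrip("\n")
        if line.startswith("#Fields:"):
            # The header names the tab-separated columns of the data lines.
            fields = line[len("#Fields:"):].split()
            continue
        if not line or line.startswith("#") or not fields:
            continue
        row = dict(zip(fields, line.split("\t")))
        uri = row.get("cs-uri-stem")
        # Assumed definition of a page view: a GET answered with status 200.
        if uri and row.get("cs-method") == "GET" and row.get("sc-status") == "200":
            views[uri] += 1
    return views
```

Calling `count_page_views(open("example.log"))` on a decompressed log file (the file name is hypothetical) returns a `Counter`, so `.most_common(10)` gives the ten most requested paths.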

Summary

We love simplicity! Our blog runs on CloudFront and S3, a maintenance-free setup that handles traffic spikes easily. We use the static website generator hexo to publish our content. Lambda@Edge handles redirects and generates optimized images on the fly. Instead of Google Analytics, we use Athena and QuickSight to get statistics about our blog and posts.
